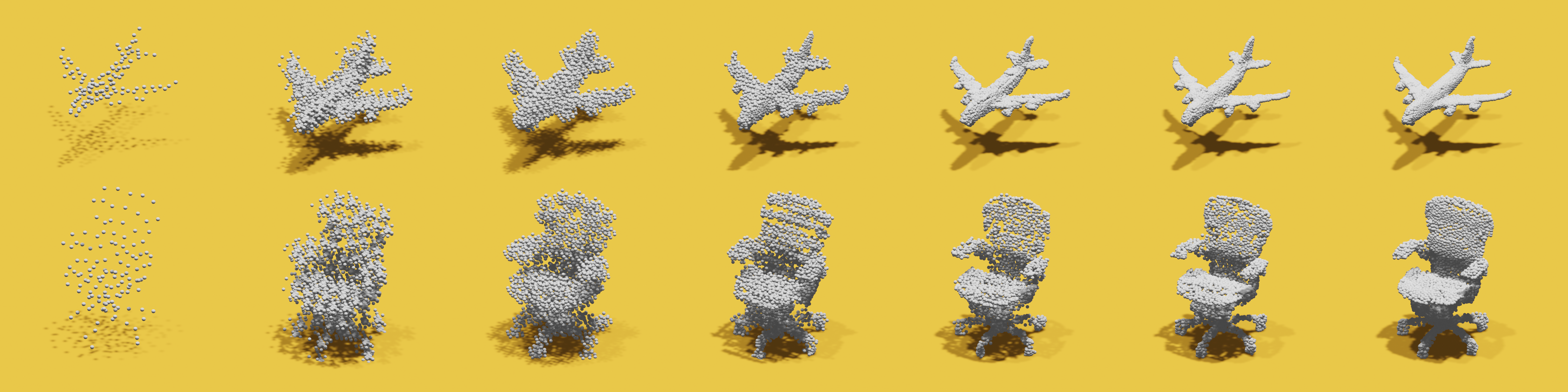

Point clouds are a crucial representation of 3D content and are widely used in areas such as virtual reality, mixed reality, and autonomous driving. As the number of points in the data grows, compressing point clouds efficiently becomes a challenging problem. In this paper, we propose a set of significant improvements to patch-based point cloud compression: a learnable context model for entropy coding, octree coding for sampling centroid points, and an integrated compression and training process. In addition, we propose an adversarial network to improve the uniformity of points during reconstruction. Our experiments show that the improved patch-based autoencoder outperforms the state of the art in rate-distortion performance on both sparse and large-scale point clouds. More importantly, our method maintains a short compression time while preserving reconstruction quality.

Python 3.9.6 and PyTorch 1.9.0

Other dependencies:

pytorch3d 0.5.0 for KNN and chamfer loss: https://github.com/facebookresearch/pytorch3d

geo_dist for PSNR calculation: https://github.com/mauriceqch/geo_dist
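To confirm that an environment matches the versions listed above, a small check along these lines may help. It is a sketch and not part of the repository; the distribution names `torch` and `pytorch3d` are assumptions about how the packages are registered with pip, and the helper names are hypothetical.

```python
import sys
from importlib import metadata

# Versions stated in this README; the distribution names are assumptions.
EXPECTED = {"torch": "1.9.0", "pytorch3d": "0.5.0"}


def installed_version(dist_name):
    """Return the installed version string, or None if not installed."""
    try:
        return metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        return None


def check_environment(expected=EXPECTED):
    """Map each package name to (expected, found, matches)."""
    report = {}
    for name, want in expected.items():
        found = installed_version(name)
        # Local build tags such as "1.9.0+cu111" still count as a match.
        ok = found is not None and found.split("+")[0] == want
        report[name] = (want, found, ok)
    return report


if __name__ == "__main__":
    print("Python", sys.version.split()[0], "(README lists 3.9.6)")
    for name, (want, found, ok) in check_environment().items():
        status = "OK" if ok else "MISMATCH"
        print(f"{name}: expected {want}, found {found}, {status}")
```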

We have uploaded .ply files already converted from the raw ModelNet40, ShapeNet, and S3DIS formats. You can access the data through the following links:

- 8192-point ModelNet40 training and test set
- 2048-point ShapeNet test set
- S3DIS-Area1 point clouds

The following steps show a general way to prepare the point clouds used in our experiments.

ModelNet40

- Download the ModelNet40 data: http://modelnet.cs.princeton.edu
- Convert the CAD models (.off) to point clouds (.ply) using sample_modelnet.py:

python ./sample_modelnet.py ./data/ModelNet40 ./data/ModelNet40_pc_8192 --n_point 8192
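For readers unfamiliar with turning a mesh into a point cloud, the sketch below shows one common approach: pick triangles with probability proportional to their area, then draw a uniformly distributed point inside each chosen triangle. It illustrates the general technique only. Whether sample_modelnet.py works this way is an assumption, and the function names are hypothetical.

```python
import math
import random


def triangle_area(a, b, c):
    """Area of a 3D triangle, from the cross product of two edges."""
    u = [b[i] - a[i] for i in range(3)]
    v = [c[i] - a[i] for i in range(3)]
    cx = u[1] * v[2] - u[2] * v[1]
    cy = u[2] * v[0] - u[0] * v[2]
    cz = u[0] * v[1] - u[1] * v[0]
    return 0.5 * math.sqrt(cx * cx + cy * cy + cz * cz)


def sample_surface(vertices, faces, n_point, seed=0):
    """Draw n_point points from a triangle mesh, weighted by face area."""
    rng = random.Random(seed)
    areas = [triangle_area(*(vertices[i] for i in f)) for f in faces]
    # Larger triangles are chosen more often, so density is even over the surface.
    chosen = rng.choices(faces, weights=areas, k=n_point)
    points = []
    for f in chosen:
        a, b, c = (vertices[i] for i in f)
        r1, r2 = rng.random(), rng.random()
        s = math.sqrt(r1)
        # Barycentric weights that give a uniform distribution in the triangle.
        wa, wb, wc = 1 - s, s * (1 - r2), s * r2
        points.append(tuple(wa * a[i] + wb * b[i] + wc * c[i] for i in range(3)))
    return points
```

For example, `sample_surface(vertices, faces, 8192)` would return 8192 `(x, y, z)` tuples for a mesh given as a vertex list and a list of index triples.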

ShapeNet

- Download the ShapeNet data here
- Sample point clouds using sample_shapenet.py:

python ./sample_shapenet.py ./data/shapenetcore_partanno_segmentation_benchmark_v0_normal ./data/ShapeNet_pc_2048 --n_point 2048

S3DIS

- Download the S3DIS data: http://buildingparser.stanford.edu/dataset.html
- Sample point clouds using sample_stanford3d.py:

python ./sample_stanford3d.py ./data/Stanford3dDataset_v1.2_Aligned_Version/Area_1/*/*.txt ./data/Stanford3d_pc/Area_1
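The S3DIS text files store one point per line as `x y z r g b`. As a rough illustration of the conversion, the sketch below reads the coordinates from such a file and writes them as an ASCII .ply file. It is not the repository's script: whether sample_stanford3d.py also subsamples or normalizes the points is not stated here, and the function names are hypothetical.

```python
def read_xyz(txt_path):
    """Read the first three columns (x, y, z) of a whitespace-separated file."""
    points = []
    with open(txt_path) as fh:
        for line in fh:
            fields = line.split()
            if len(fields) < 3:
                continue  # skip blank or malformed lines
            points.append(tuple(float(v) for v in fields[:3]))
    return points


def write_ply(ply_path, points):
    """Write points as an ASCII PLY file with x, y, z vertex properties."""
    header = [
        "ply",
        "format ascii 1.0",
        f"element vertex {len(points)}",
        "property float x",
        "property float y",
        "property float z",
        "end_header",
    ]
    with open(ply_path, "w") as fh:
        fh.write("\n".join(header) + "\n")
        for x, y, z in points:
            fh.write(f"{x} {y} {z}\n")
```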

We provide our trained models at: Link

Alternatively, you can use train.py to train the model on the ModelNet40 training set:

python ./train.py './data/ModelNet40_pc_01_8192p/**/train/*.ply' './model/K256' --K 256
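The input pattern in this command is quoted, so the shell passes it through unexpanded and the script has to resolve it. A minimal sketch of how such a pattern can be expanded in Python follows. That train.py uses `glob` with `recursive=True` is an assumption, and `expand_pattern` is a hypothetical helper.

```python
import glob


def expand_pattern(pattern):
    """Expand a glob pattern; '**' matches any number of nested directories."""
    return sorted(glob.glob(pattern, recursive=True))
```

With a layout like `<class>/train/*.ply`, the pattern `'./data/ModelNet40_pc_01_8192p/**/train/*.ply'` would pick up the training files of every class while skipping the `test` folders.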

Use compress.py and decompress.py to compress and decompress point clouds:

python ./compress.py './data/ModelNet40_pc_01_8192p/**/test/*.ply' './data/ModelNet40_K256_compressed' './model/K256' --K 256

python ./decompress.py './data/ModelNet40_K256_compressed' './data/ModelNet40_K256_decompressed' './model/K256' --K 256

The evaluation process uses the same geo_dist software as Quach's code. We use eval.py to calculate bitrate, PSNR, and UC.

python ./eval.py './data/ModelNet40_pc_01_8192p/**/test/*.ply' './data/ModelNet40_K256_compressed' './data/ModelNet40_K256_decompressed' './eval/ModelNet40_K256.csv' '../geo_dist/build/pc_error'
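Bitrate for point cloud compression is commonly reported in bits per point (bpp): the compressed size in bits divided by the number of points in the original cloud. The sketch below computes it for a directory of compressed files. It illustrates the metric only; how eval.py measures bitrate and how the compressed directory is laid out are assumptions, and the function names are hypothetical.

```python
import os


def total_bits(compressed_dir):
    """Sum the sizes of all files under compressed_dir, in bits."""
    bits = 0
    for root, _dirs, files in os.walk(compressed_dir):
        for name in files:
            bits += 8 * os.path.getsize(os.path.join(root, name))
    return bits


def bits_per_point(compressed_dir, n_points):
    """Bits per point: total compressed bits divided by the original point count."""
    if n_points <= 0:
        raise ValueError("n_points must be positive")
    return total_bits(compressed_dir) / n_points
```

For instance, a cloud of 8192 points whose compressed files total 1024 bytes has a bitrate of 8 * 1024 / 8192 = 1.0 bpp.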