Magnet: We Never Know How Text-to-Image Diffusion Models Work, Until We Learn How Vision-Language Models Function

Chenyi Zhuang, Ying Hu, Pan Gao

I2ML, Nanjing University of Aeronautics and Astronautics

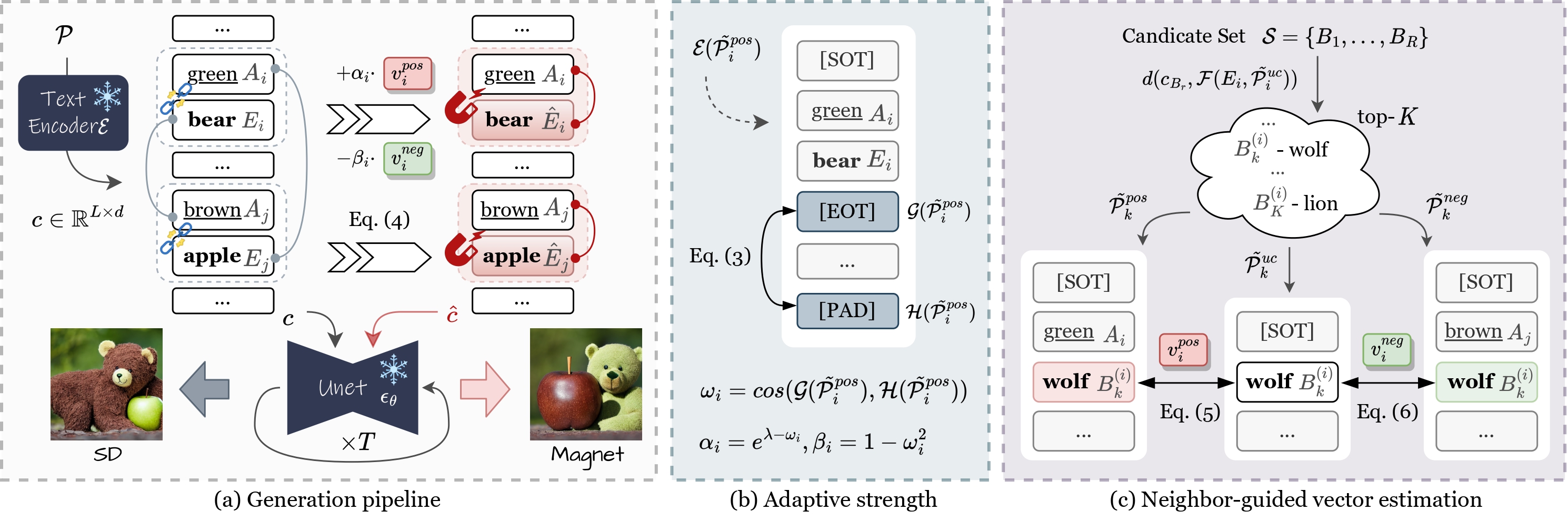

We propose Magnet, a training-free approach that improves attribute binding by manipulating object embeddings, enhancing disentanglement within the textual space.

- In-depth analysis and exploration of the CLIP text encoder, highlighting the context issue of padding embeddings.

- Improved text alignment by applying positive and negative binding vectors to object embeddings, at negligible cost.

- Plug-and-play with various T2I models and controlling methods, e.g., ControlNet.
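To make the second point more concrete, here is a minimal sketch of what shifting one object token embedding with a positive and a negative binding vector could look like. The function names, the `alpha`/`beta` strengths, and the plain additive update are assumptions made for illustration only; how Magnet actually estimates and weights the binding vectors is defined by the code in this repo, not by this snippet.

```python
import numpy as np


def apply_binding(object_emb, positive_vec, negative_vec, alpha=1.0, beta=1.0):
    """Shift one object embedding toward its own attribute and away from others.

    Hypothetical helper: the linear rule e + alpha * v_pos - beta * v_neg
    is an assumed form, not taken from the Magnet source code.
    """
    object_emb = np.asarray(object_emb, dtype=np.float32)
    positive_vec = np.asarray(positive_vec, dtype=np.float32)
    negative_vec = np.asarray(negative_vec, dtype=np.float32)
    return object_emb + alpha * positive_vec - beta * negative_vec


def edit_prompt_embeddings(prompt_embeds, object_index, positive_vec,
                           negative_vec, alpha=1.0, beta=1.0):
    """Return a copy of (seq_len, dim) embeddings with one object token edited."""
    edited = np.array(prompt_embeds, dtype=np.float32, copy=True)
    edited[object_index] = apply_binding(
        edited[object_index], positive_vec, negative_vec, alpha, beta
    )
    return edited


# Toy usage with 4-dimensional embeddings (real CLIP embeddings are much larger).
toy_embeds = np.zeros((3, 4), dtype=np.float32)
toy_embeds[1] = [1.0, 0.0, 0.0, 0.0]          # pretend token 1 is the object
toy_edited = edit_prompt_embeddings(
    toy_embeds, object_index=1,
    positive_vec=[0.0, 1.0, 0.0, 0.0],
    negative_vec=[0.0, 0.0, 1.0, 0.0],
    alpha=0.5, beta=0.25,
)
```

Only the object token is touched, and the input array is left unmodified, so the edited embeddings can be compared against the originals.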

conda create --name magnet python=3.11

conda activate magnet

# Install requirements

pip install -r requirements.txt

If you are curious about how different types of text embeddings influence generation, we recommend running (1) visualize_attribute_bias.ipynb to explore the attribute bias on different objects and (2) emb_swap_cases.py to reproduce the swapping experiment.

Download the pre-trained SD V1.4, SD V1.5 (unfortunately, the link now returns 404), SD V2, SD V2.1, or SDXL.

# Run magnet on SD V1.4

python run.py --sd_path path-to-stable-diffusion-v1-4 --magnet_path bank/candidates_1_4.pt --N 2 --run_sd

# Run magnet on SDXL

python run.py --sd_path path-to-stable-diffusion-xl --magnet_path bank/candidates_sdxl.pt --N 2 --run_sd

# Remove the "run_sd" argument if you don't want the standard model run

You can also try ControlNet conditioned on depth estimation from DPT-Large.

# Run magnet with ControlNet

python run_with_controlnet.py --sd_path path-to-stable-diffusion-v1-5 --magnet_path bank/candidates_1_5.pt --N 2 --controlnet_path path-to-sd-controlnet-depth --dpt_path path-to-dpt-large --run_sd

We also provide run_vanilla_pipeline.py to use Magnet via the prompt_embeds argument in the standard StableDiffusionPipeline.
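For readers unfamiliar with the prompt_embeds route, the sketch below shows the general pattern with the diffusers `StableDiffusionPipeline`: encode the prompt yourself, optionally edit the embeddings, and pass them in place of the prompt string. This is not the content of run_vanilla_pipeline.py; `load_pipeline`, `generate_with_edited_embeds`, and `edit_fn` are hypothetical names, and the pattern as written assumes an SD 1.x/2.x pipeline (SDXL additionally expects pooled embeddings).

```python
def load_pipeline(sd_path):
    """Load a standard Stable Diffusion pipeline from a local path (assumed SD 1.x/2.x)."""
    # Imported lazily so the helper below can be read without diffusers installed.
    import torch
    from diffusers import StableDiffusionPipeline

    pipe = StableDiffusionPipeline.from_pretrained(sd_path)
    return pipe.to("cuda" if torch.cuda.is_available() else "cpu")


def generate_with_edited_embeds(pipe, prompt, edit_fn=None, **pipe_kwargs):
    """Encode `prompt`, apply an optional embedding edit, and run the pipeline.

    `edit_fn` is a hypothetical hook: any callable that maps the
    (1, seq_len, dim) text embeddings to edited embeddings of the same shape.
    """
    # Tokenize to the fixed length the text encoder expects (padding included).
    tokens = pipe.tokenizer(
        prompt,
        padding="max_length",
        max_length=pipe.tokenizer.model_max_length,
        truncation=True,
        return_tensors="pt",
    )
    # The first output of the CLIP text encoder is the per-token hidden state.
    embeds = pipe.text_encoder(tokens.input_ids.to(pipe.device))[0].detach()
    if edit_fn is not None:
        embeds = edit_fn(embeds)
    # Pass embeddings instead of a prompt string; the two are mutually exclusive.
    return pipe(prompt_embeds=embeds, **pipe_kwargs).images[0]


# Example call (requires diffusers, torch, and downloaded weights):
# pipe = load_pipeline("path-to-stable-diffusion-v1-4")
# image = generate_with_edited_embeds(pipe, "a blue bench and a red car",
#                                     num_inference_steps=50)
# image.save("out.png")
```

Keeping the edit as a separate callable makes it easy to compare the standard output (`edit_fn=None`) with an edited one under the same seed and settings.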

Demos of cross-attention visualization are in visualize_attention.ipynb.

Feel free to explore Magnet and leave any questions in this repo!

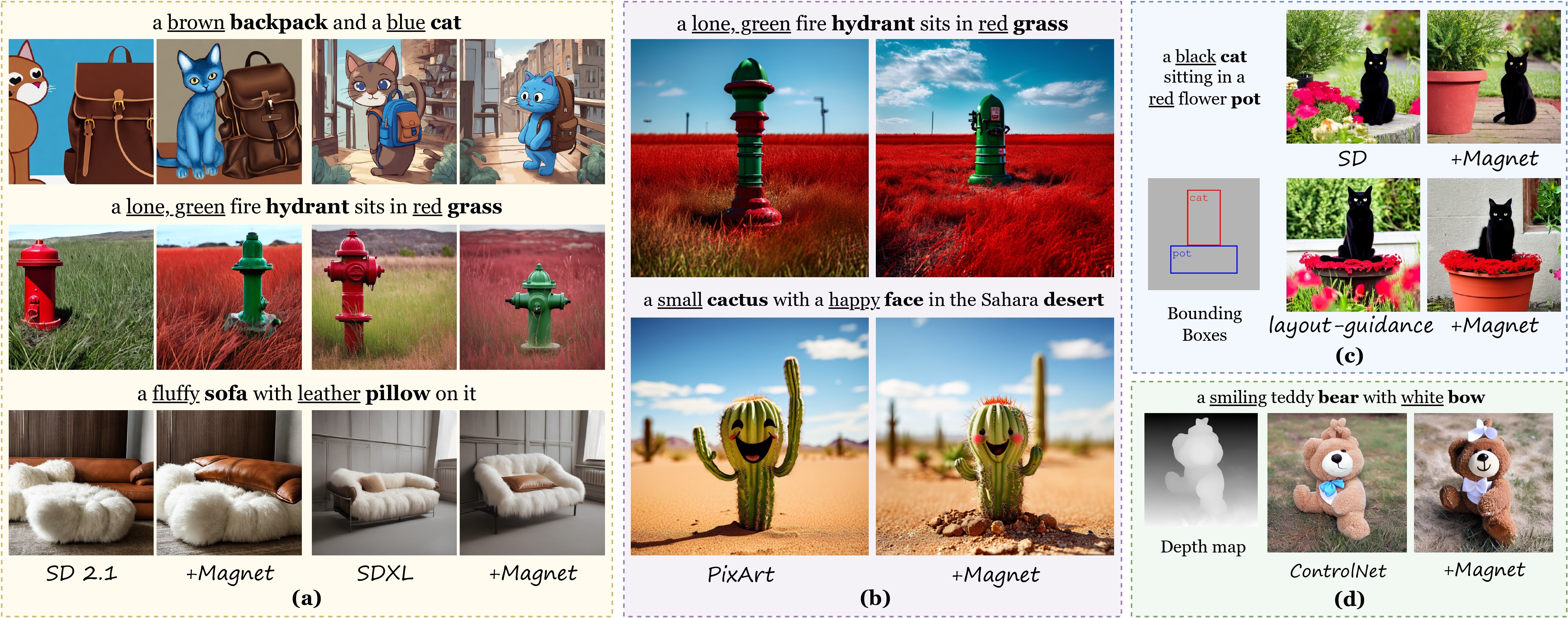

Comparison with state-of-the-art approaches:

Integration of Magnet into other T2I pipelines and T2I controlling modules:

Magnet's performance depends largely on the pre-trained T2I model. Because text-based manipulation alone has limited power, it may not always produce meaningful modifications. If you are not satisfied with the output, you can manually adjust the prompt, seed, or hyperparameters, or combine Magnet with other techniques to get a better result.

Most prompts are based on datasets obtained from Structure Diffusion. We also refer to Prompt-to-Prompt and PixArt.

- Release the source code and model.

- Extend to more T2I models.

- Extend to controlling approaches.