ComfyUI implementation of Motion-I2V. This is currently a diffusers wrapper with code adapted from https://github.com/G-U-N/Motion-I2V

- [2024/9/24] 🔥 First Release

- [2024/9/23] 🔥 Interactive Motion Painter UI for ComfyUI

- [2024/9/20] 🔥 Added basic IP Adapter integration

- [2024/9/16] 🔥 Updated model code to be compatible with Comfy's diffusers version

- Convert the code to be Comfy Native

- Reduce VRAM usage

- More motion controls

- Train longer context models

- MI2V Flow Predictor takes a first frame and an optional motion prompt, mask and vectors as input. It outputs a predicted optical flow for a 16-frame animation with the input image as the first frame. You can view a preview of the motion, where the colors correspond to movement in two dimensions.

- MI2V Flow Animator takes the predicted flow and a starting image and generates a 16-frame animation based on these.

- MI2V Motion Painter allows you to draw motion vectors onto an image to be used by MI2V Flow Predictor.

- MI2V Pause allows you to pause the execution of the workflow. This is useful for loading a resized image into MI2V Flow Predictor, or for checking that you like the predicted motion before committing to further animation.
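The flow preview mentioned for MI2V Flow Predictor maps 2D movement to color. The sketch below shows one common way such a preview can be built: the direction of each flow vector picks the hue and its length picks the saturation, so still pixels come out white. This is an illustration only and is not taken from the node's code; the function names `flow_to_rgb` and `flow_field_to_image`, and the exact color convention, are assumptions.

```python
import colorsys
import math


def flow_to_rgb(dx, dy, max_magnitude):
    """Map one 2D flow vector to an RGB triple with channels in 0..255.

    Hypothetical helper: direction selects the hue, and magnitude
    (relative to max_magnitude) selects the saturation, so a zero
    vector is rendered as white.
    """
    magnitude = math.hypot(dx, dy)
    # atan2 returns an angle in [-pi, pi]; scale and wrap it into [0, 1].
    hue = (math.atan2(dy, dx) / (2 * math.pi)) % 1.0
    saturation = min(magnitude / max_magnitude, 1.0) if max_magnitude > 0 else 0.0
    r, g, b = colorsys.hsv_to_rgb(hue, saturation, 1.0)
    return round(r * 255), round(g * 255), round(b * 255)


def flow_field_to_image(flow):
    """Convert a grid (list of rows) of (dx, dy) vectors into RGB triples.

    Colors are normalized by the largest vector in the grid, so the
    fastest-moving pixel is always fully saturated.
    """
    max_magnitude = max(
        (math.hypot(dx, dy) for row in flow for dx, dy in row), default=0.0
    )
    return [[flow_to_rgb(dx, dy, max_magnitude) for dx, dy in row] for row in flow]
```

With this convention, a pixel moving right is red, a pixel moving left is cyan, and a static pixel is white. Normalizing per frame keeps small motions visible, at the cost of making colors incomparable between frames.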

Here are some example workflows. They can be found along with their input images here:

-

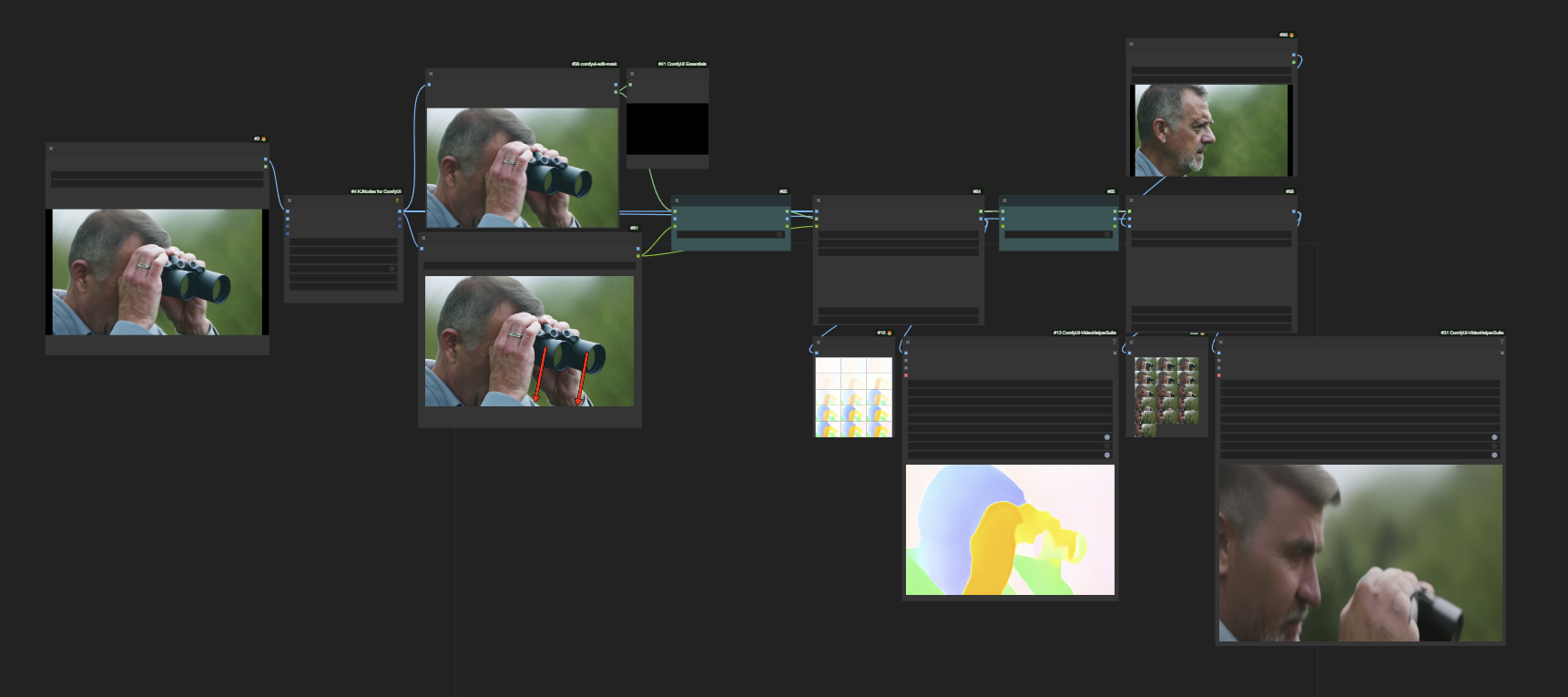

Motion Painter Here we use motion painting to instruct the binoculars to be lowered. This causes the man's face to be shown, however the model doesn't know what this face should look like as it wasn't in the initial image. To help, we give it a second image of the man's face via a simple IP Adapter (Once the nodes are ComfyUI native this will work even better if we use Tiled IP Adapter)

AnimateDiff_06130.mp4

-

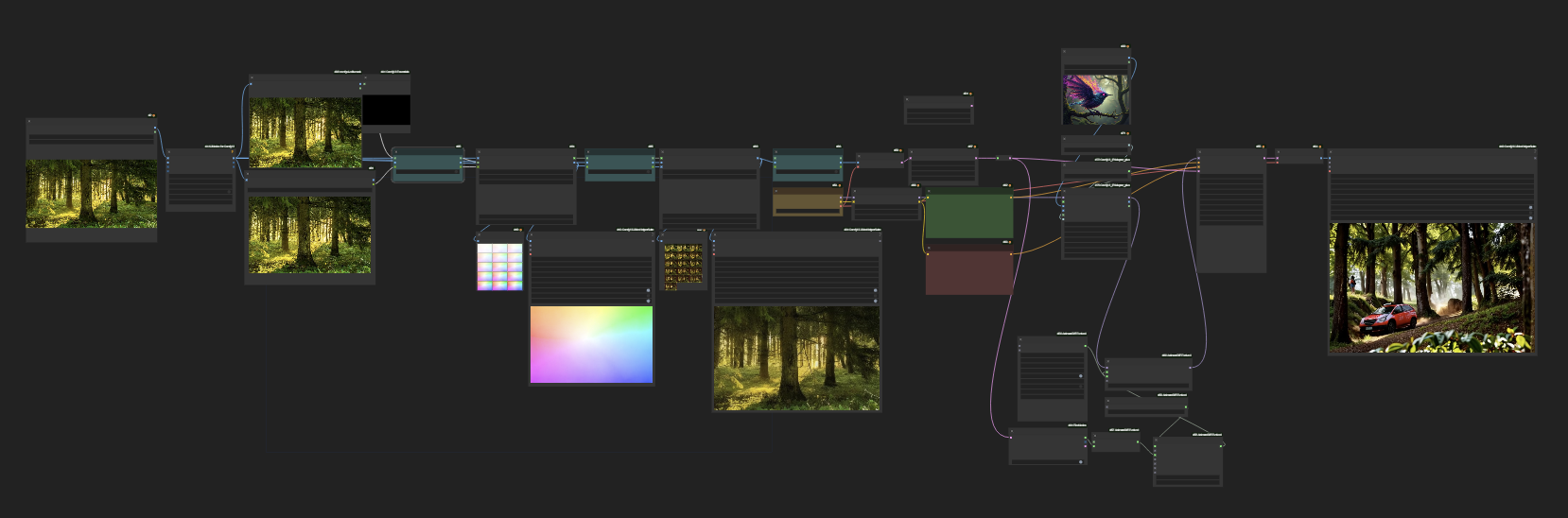

Using motion as an input into another animation Here we use an image of a forest and a prompt of "zoom out" to get a simple video of zooming out motion. This is then input into a second Animate Diff animation to give us complex and controlled motion that would have been difficult to acheive otherwise

AnimateDiff_06115.mp4

AnimateDiff_05800.mp4

AnimateDiff_05907.mp4

- Motion-I2V: Consistent and Controllable Image-to-Video Generation with Explicit Motion Modeling, by Xiaoyu Shi¹*, Zhaoyang Huang¹*, Fu-Yun Wang¹*, Weikang Bian¹*, Dasong Li¹, Yi Zhang¹, Manyuan Zhang¹, Ka Chun Cheung², Simon See², Hongwei Qin³, Jifeng Dai⁴, Hongsheng Li¹ (¹CUHK-MMLab, ²NVIDIA, ³SenseTime, ⁴Tsinghua University)

- The Motion Painter node was adapted from code in https://github.com/AlekPet/ComfyUI_Custom_Nodes_AlekPet