The Artl@s project focuses on the global circulation of images from the 1890s to the advent of the Internet, using digital methodologies. Among its projects, BasArt is an online database of exhibition catalogs from the 19th and 20th centuries. To enlarge this database, Caroline Corbières, an intern on the project in 2020, worked on automating its workflow: a scanned exhibition catalog is taken as input, then encoded in XML-TEI and structured as CSV.

In this context, this repository aims to improve the OCR and segmentation step of this Catalogs Workflow. This step occurs at the beginning of the workflow and converts the data from images to text. The goal was not only to refine the OCR for Artl@s but also to provide a useful tool for researchers who need to OCR their own catalogs. This dataset therefore holds exhibition catalogs, prepared by Caroline Corbières; manuscript sale catalogs from the 19th century to the present day, from the Katabase project, prepared by Simon Gabay; and owners' directories (Annuaires) from the Adresses et Annuaires group of the Paris Time Machine of the EHESS, produced by Gabriela Elgarrista.

The dataset is composed of 277 original pages (50 pages of Annuaires, 97 pages of manuscript sale catalogs and 130 pages of exhibition catalogs), which were used to create the first models, plus 3 complete exhibition catalogs produced using these models. For more information, see Dataset.csv.

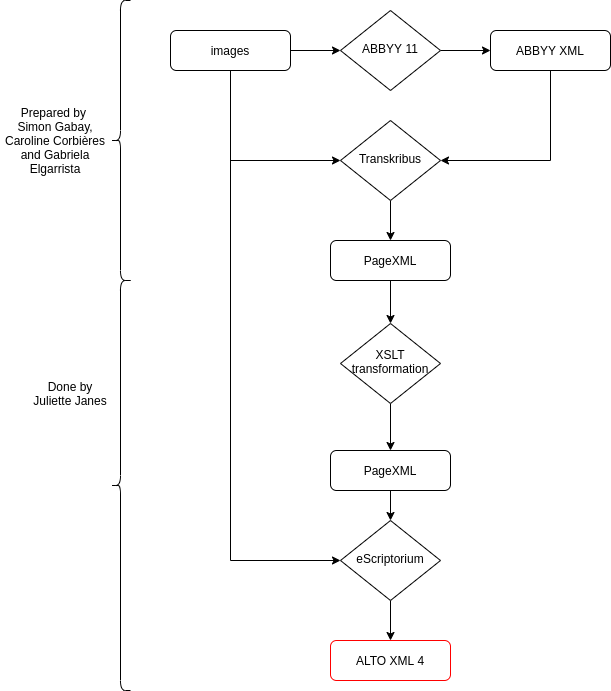

The schema below explains how the dataset was created. Since the PAGE XML exported from Transkribus did not include the manual corrections made to the transcription, an XSLT transformation was applied to it. It can be found here. Then, the pages were prepared and segmented in eScriptorium using the SegmOnto ontology, which provides names for the different zones and lines. This work is developed here. Finally, the results were exported in ALTO 4 format, available in this repository along with the images used.
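Since the ALTO 4 exports carry the SegmOnto labels of each zone and line, a quick way to check a page is to count those labels. The sketch below uses only Python's standard library and assumes the eScriptorium convention of declaring labels as `OtherTag` elements in the `Tags` section and referencing them through the `TAGREFS` attribute of `TextBlock` and `TextLine`. The function names `segmonto_counts` and `_label` are hypothetical and not part of this repository.

```python
import xml.etree.ElementTree as ET
from collections import Counter

# Namespace of ALTO version 4 documents.
ALTO_NS = {"a": "http://www.loc.gov/standards/alto/ns-v4#"}


def _label(element, labels):
    """Return the first known label referenced by an element's TAGREFS."""
    # TAGREFS may hold several space-separated tag IDs.
    for ref in (element.get("TAGREFS") or "").split():
        if ref in labels:
            return labels[ref]
    return "untagged"


def segmonto_counts(alto_xml):
    """Count zone (TextBlock) and line (TextLine) labels in an ALTO 4 document."""
    root = ET.fromstring(alto_xml)
    # Map tag IDs such as "BT1" to labels such as "MainZone" (assumed convention).
    labels = {
        tag.get("ID"): tag.get("LABEL")
        for tag in root.iterfind(".//a:Tags/a:OtherTag", ALTO_NS)
    }
    zones = Counter(
        _label(block, labels) for block in root.iterfind(".//a:TextBlock", ALTO_NS)
    )
    lines = Counter(
        _label(line, labels) for line in root.iterfind(".//a:TextLine", ALTO_NS)
    )
    return zones, lines
```

To inspect an exported page, pass its raw bytes, e.g. `segmonto_counts(Path(path).read_bytes())`; `ET.fromstring` accepts both `str` and `bytes`. Elements without a known tag are counted as `"untagged"`, which makes unlabelled regions easy to spot.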

In your terminal:

- Go to the `3_Scripts_training_construction` directory.
- Choose the dataset you want:
  - the whole dataset;
  - only one of the catalog types (Annuaires, exhibition catalogs or manuscript sale catalogs).
- Run the corresponding script with the command `bash [SCRIPT]`.
- You will get a `TrainingData` directory containing all the data.
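For readers who prefer Python, the collection step above can be sketched as follows. This is not the repository's `build_train_alto.sh`; it is a hypothetical equivalent that assumes the training material is every file stored in an `alto_eScriptorium`, `image` or `images` folder of `1_Data`. The function `build_training_data` and the keys of `CATALOG_DIRS` are illustrative names only.

```python
import shutil
from pathlib import Path

# Folders of 1_Data assumed to hold the training material.
KEEP_FOLDERS = {"alto_eScriptorium", "image", "images"}

# Hypothetical short names for the three catalog types.
CATALOG_DIRS = {
    "annuaires": "annuaires",
    "exhibitions": "Cat_expositions",
    "manuscripts": "Cat_manuscrits",
}


def build_training_data(data_dir, out_dir="TrainingData", catalog_type=None):
    """Copy ALTO files and page images from data_dir into out_dir.

    With catalog_type=None the whole dataset is collected; otherwise only the
    folder of that catalog type. Relative paths are preserved so that pages
    with the same file name in different catalogs do not overwrite each other.
    Returns the copied paths, relative to data_dir, as POSIX strings.
    """
    data_dir, out_dir = Path(data_dir), Path(out_dir)
    source = data_dir / CATALOG_DIRS[catalog_type] if catalog_type else data_dir
    copied = []
    for path in sorted(source.rglob("*")):
        # Skip directories and anything outside the ALTO/image folders.
        if not path.is_file() or path.parent.name not in KEEP_FOLDERS:
            continue
        rel = path.relative_to(data_dir)
        dest = out_dir / rel
        dest.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(path, dest)
        copied.append(rel.as_posix())
    return copied
```

Keeping the relative folder structure, rather than flattening everything into one directory, is a deliberate choice in this sketch: page file names are not guaranteed to be unique across catalogs. PAGE XML folders (`page_Transkribus`, `page_transforme`) are left out because training relies on the ALTO exports.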

The test dataset used for our training can be found in the `3_Scripts_training_construction` directory, along with two Python scripts: one splits the data into train, test and eval sets, and the other removes the entry zones.
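The train/test/eval split can be illustrated with a short, reproducible sketch. The 80/10/10 ratios and the seed are assumptions chosen for the example; they are not necessarily the values used by `random_data.py`, and `split_dataset` is a hypothetical name.

```python
import random


def split_dataset(files, ratios=(0.8, 0.1, 0.1), seed=42):
    """Randomly split a list of files into train, test and eval subsets.

    ratios gives the share of each subset; the eval subset receives whatever
    remains after rounding down, so that no file is lost.
    """
    if abs(sum(ratios) - 1.0) > 1e-9:
        raise ValueError("ratios must sum to 1")
    # Sort first so that the result depends only on the seed, not the input order.
    shuffled = sorted(files)
    random.Random(seed).shuffle(shuffled)
    n_train = int(len(shuffled) * ratios[0])
    n_test = int(len(shuffled) * ratios[1])
    train = shuffled[:n_train]
    test = shuffled[n_train:n_train + n_test]
    eval_ = shuffled[n_train + n_test:]
    return train, test, eval_
```

Using a dedicated `random.Random(seed)` instance rather than the global generator keeps the split reproducible even if other code also draws random numbers.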

    ├── 1_Data
    │   ├── annuaires
    │   │   ├── alto_eScriptorium
    │   │   ├── page_Transkribus
    │   │   └── images
    │   ├── Cat_expositions
    │   │   ├── complete_catalogs
    │   │   │   ├── alto_eScriptorium
    │   │   │   └── image
    │   │   ├── first_data
    │   │   │   ├── alto_eScriptorium
    │   │   │   ├── page_Transkribus
    │   │   │   ├── page_transforme
    │   │   │   └── image
    │   │   └── README.md
    │   └── Cat_manuscrits
    │       ├── alto_eScriptorium
    │       ├── page_Transkribus
    │       ├── page_transforme
    │       └── image
    ├── 2_ToolBox
    │   └── Joint Toolbox for dataset's preparation
    ├── 3_Scripts_training_construction
    │   ├── build_train_alto.sh
    │   ├── tests files
    │   ├── random_data.py
    │   └── remove_entries
    ├── 4_Models
    │   ├── HTR
    │   │   ├── model_htr_abondance.mlmodel
    │   │   ├── model_htr_beaufort.mlmodel
    │   │   ├── model_htr_chaource.mlmodel
    │   │   ├── model_htr_danablu.mlmodel
    │   │   ├── model_htr_epoisse.mlmodel
    │   │   ├── model_htr_fourme.mlmodel
    │   │   ├── model_htr_gruyere.mlmodel
    │   │   └── README.md
    │   └── Segment
    │       ├── model_segmentation_abondance.mlmodel
    │       ├── model_segmentation_beaufort.mlmodel
    │       ├── model_segmentation_chaource.mlmodel
    │       ├── model_segmentation_coulommiers.mlmodel
    │       └── README.md
    ├── images
    └── Dataset.csv

Thanks to Simon Gabay, Claire Jahan, Caroline Corbières, Gabriela Elgarrista and Carmen Brando for their help and work.

This repository was developed by Juliette Janes, an intern on the Artl@s project, with the help of Simon Gabay, under the supervision of Béatrice Joyeux-Prunel.

- The manuscript catalogs were prepared by Simon Gabay.

- The exhibition catalogs were prepared by Caroline Corbières.

- The Annuaires were prepared by Gabriela Elgarrista, under the supervision of Carmen Brando.

Images from catalogs published before 1920, as well as the transcriptions, are licensed under CC-BY.

The other images are extracts from catalogs published after 1920 and remain the intellectual property of their producers.

Juliette Janes, Simon Gabay, Béatrice Joyeux-Prunel, HTRCatalogs: Dataset for Historical Catalogs Segmentation and HTR, 2021, Paris: ENS Paris https://github.com/Juliettejns/cataloguesSegmentationOCR/

If you have any questions or remarks, please contact juliette.janes@chartes.psl.eu and simon.gabay@unige.ch.