Partial Multi-view Clustering via Consistent GAN

This repo contains the source code and dataset for our ICDM paper:

Qianqian Wang, Zhengming Ding, Zhiqiang Tao, Quanxue Gao and Yun Fu, Partial Multi-view Clustering via Consistent GAN, IEEE ICDM, 2018: 1290-1295.

@inproceedings{wang2018partial,

title={Partial multi-view clustering via consistent GAN},

author={Wang, Qianqian and Ding, Zhengming and Tao, Zhiqiang and Gao, Quanxue and Fu, Yun},

booktitle={IEEE ICDM},

pages={1290--1295},

year={2018},

}

An extended version of this model for partial multi-view clustering appears in IEEE TIP:

PVC-GAN Model:

Introduction

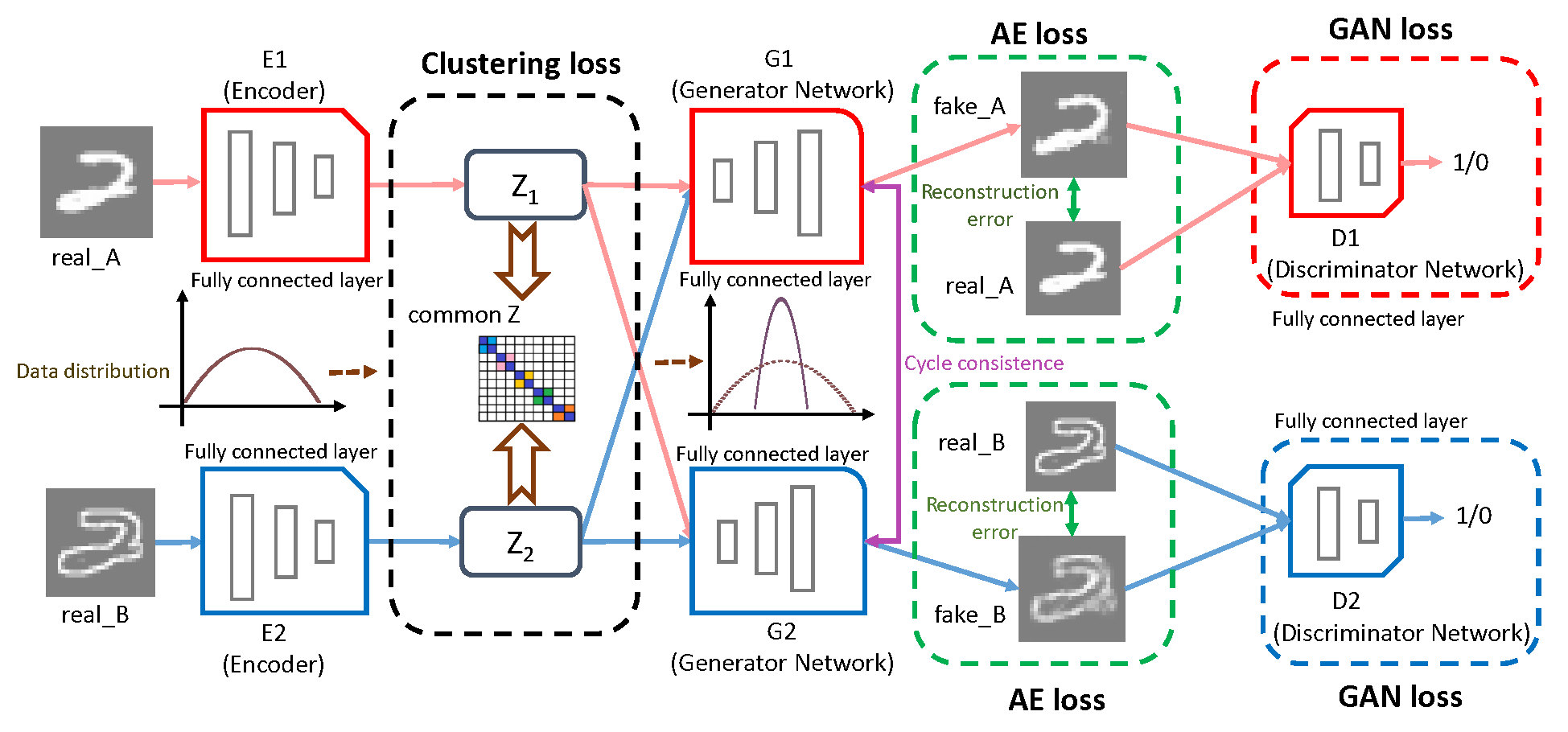

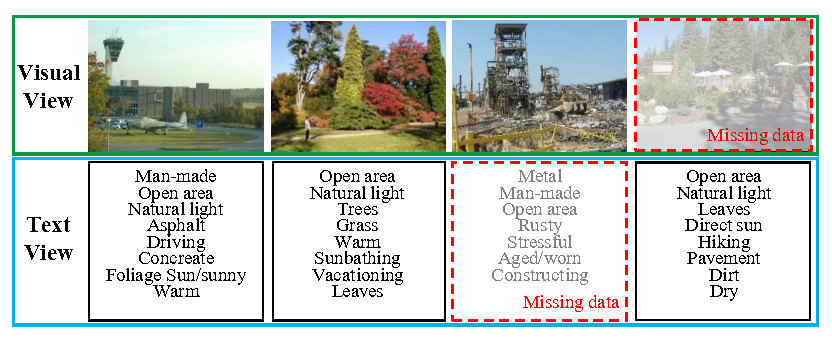

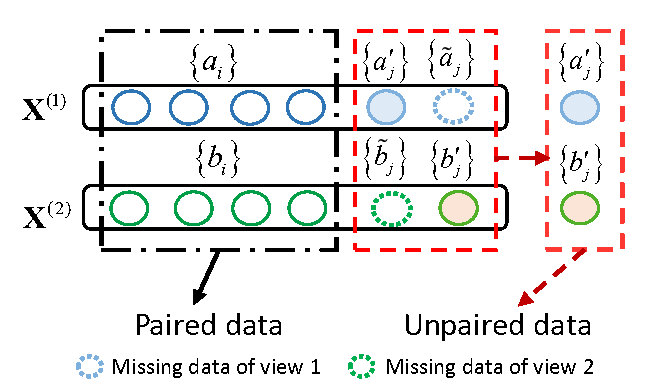

With the development of data collection and feature extraction methods, multi-view data are now easy to obtain. Multi-view clustering, one of the most important methods for analyzing such data, has been widely used in many real-world applications. Most existing multi-view clustering methods perform well under the assumption that every sample appears in all views. In real-world applications, however, a view may be missing for some samples because of noise or equipment malfunction. In this paper, we propose a new consistent generative adversarial network for partial multi-view clustering. We learn a common low-dimensional representation that can both generate the missing view data and capture a better common structure from partial multi-view data for clustering. Unlike most existing methods, we use the common representation encoded from one view to generate the data of the corresponding missing view with generative adversarial networks, and then use the encoder and clustering networks for clustering. This design is intuitive because encoding the common representation and generating the missing data reinforce each other: the common representation learned by the encoder networks helps impute the missing view data, and the generated view data in turn help learn a more consistent low-dimensional representation. Experimental results on three multi-view databases demonstrate the superiority of the proposed method.
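The encode-then-generate step described above can be sketched as follows. This is a minimal NumPy illustration under stated assumptions, not this repository's implementation: `linear_layer` and `impute_missing_view` are hypothetical names, the encoder and generator are single untrained `tanh` layers rather than trained networks, and the adversarial (discriminator) training and the clustering network are omitted. It only shows the data flow: encode the observed view into a common representation, then generate the missing view from it.

```python
import numpy as np


def linear_layer(rng, in_dim, out_dim):
    """Hypothetical one-layer network x -> tanh(x @ W + b) with random, untrained weights."""
    W = rng.randn(in_dim, out_dim) * 0.1
    b = np.zeros(out_dim)
    return lambda x: np.tanh(x @ W + b)


def impute_missing_view(x_src, x_tgt, tgt_missing, encoder_src, generator_tgt):
    """Replace the rows of x_tgt flagged in tgt_missing with generated data.

    x_src:       (n, d_src) view that is observed for the rows being imputed
    x_tgt:       (n, d_tgt) view whose flagged rows are missing (their values are ignored)
    tgt_missing: (n,) boolean mask, True where the target view is missing
    """
    z = encoder_src(x_src[tgt_missing])        # common representation from the observed view
    completed = x_tgt.copy()
    completed[tgt_missing] = generator_tgt(z)  # generated stand-in for the missing view
    return completed


# Toy usage: 8 samples, view 1 has 20 features, view 2 has 6, common dimension 4.
rng = np.random.RandomState(0)
x_view1 = rng.rand(8, 20)
x_view2 = rng.rand(8, 6)
view2_missing = np.array([False, True, False, False, True, False, False, False])

encoder_view1 = linear_layer(rng, 20, 4)
generator_view2 = linear_layer(rng, 4, 6)
x_view2_completed = impute_missing_view(x_view1, x_view2, view2_missing,
                                        encoder_view1, generator_view2)
print(x_view2_completed.shape)  # (8, 6)
```

In the actual model the generator is trained adversarially against a discriminator so that generated views resemble real ones; that training loop is beyond this sketch.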

Dataset:

BDGP is a two-view database with a visual view and a textual view. It contains 2,500 images of Drosophila embryos belonging to 5 categories. Each image is represented by a 1,750-D visual feature vector and a 79-D textual feature vector. In our experiments, we use all the data in the BDGP database and evaluate the performance on both the visual and the textual features.
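To experiment with the partial setting on data shaped like BDGP, missing views can be simulated. The sketch below is an assumption, not the protocol used in `train.py` (which this README does not describe): `make_partial_mask` is a hypothetical helper that marks a chosen fraction of samples as incomplete by dropping exactly one randomly selected view, so every sample keeps at least one view. The arrays are random placeholders with BDGP's shapes (2,500 samples, 1,750-D visual and 79-D textual features), not the real dataset.

```python
import numpy as np


def make_partial_mask(n_samples, n_views, missing_rate, rng):
    """Return an (n_samples, n_views) boolean mask, True where a view is observed.

    A fraction `missing_rate` of samples is made incomplete by dropping exactly
    one randomly chosen view, so every sample keeps at least one view.
    """
    mask = np.ones((n_samples, n_views), dtype=bool)
    n_incomplete = int(round(missing_rate * n_samples))
    incomplete = rng.choice(n_samples, size=n_incomplete, replace=False)  # distinct samples
    dropped_view = rng.randint(0, n_views, size=n_incomplete)             # one view per sample
    mask[incomplete, dropped_view] = False
    return mask


rng = np.random.RandomState(0)
n, d_visual, d_text = 2500, 1750, 79
x_visual = rng.rand(n, d_visual).astype(np.float32)  # placeholder, not real BDGP features
x_text = rng.rand(n, d_text).astype(np.float32)      # placeholder, not real BDGP features

mask = make_partial_mask(n, n_views=2, missing_rate=0.3, rng=rng)
x_visual[~mask[:, 0]] = 0.0  # zero-fill samples whose visual view is missing
x_text[~mask[:, 1]] = 0.0    # zero-fill samples whose textual view is missing
print(mask.sum(axis=0))      # number of observed samples per view
```

Zero-filling is only a placeholder here; in the consistent-GAN approach described above, the missing view is instead generated from the common representation.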

Requirements

Python: 3.6.2

PyTorch: 0.1.12

NumPy: 1.13.1

TorchVision: 0.1.8

CUDA: 11.2

Train the model

python train.py
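The README does not state which metrics `train.py` reports. Clustering accuracy (ACC), which scores predicted cluster ids against ground-truth labels under the best one-to-one relabeling, is one common choice for evaluating clustering results; the sketch below is offered as an assumption, and `clustering_accuracy` is a hypothetical helper, not part of this repository.

```python
from itertools import permutations

import numpy as np


def clustering_accuracy(y_true, y_pred):
    """Fraction of samples correctly labeled under the best one-to-one cluster relabeling.

    Brute-force search over all permutations of the label set; practical only
    for a small number of clusters.
    """
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    labels = np.unique(np.concatenate([y_true, y_pred]))
    best = 0.0
    for perm in permutations(labels):
        mapping = dict(zip(labels, perm))  # predicted id -> candidate true label
        relabeled = np.array([mapping[c] for c in y_pred])
        best = max(best, float(np.mean(relabeled == y_true)))
    return best


y_true = [0, 0, 1, 1, 2, 2]
print(clustering_accuracy(y_true, [1, 1, 0, 0, 2, 2]))  # 1.0
print(clustering_accuracy(y_true, [1, 1, 0, 2, 2, 2]))  # 5 of 6 samples matched
```

Brute force is fine for BDGP's 5 classes (120 relabelings) but grows factorially with the number of clusters; for many clusters, the Hungarian algorithm (for example `scipy.optimize.linear_sum_assignment`) finds the best matching in polynomial time.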

Acknowledgments