This is the official PyTorch implementation of our NeurIPS 2021 paper

SalKG: Learning From Knowledge Graph Explanations for Commonsense Reasoning

Aaron Chan, Jiashu Xu*, Boyuan Long*, Soumya Sanyal, Tanish Gupta, Xiang Ren

NeurIPS 2021

*=equal contribution

Please note that this is still under construction

TODO

- fine occl

- hybrid models

- release dataset files

- clean up configs

Requirements

- python >= 3.6

- pytorch >= 1.7.0

After installing PyTorch (preferably with CUDA support), install the remaining requirements by running

pip install -r requirements.txt
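Before training, it can help to confirm that the interpreter and PyTorch meet the minimum versions listed above. The following is a minimal sketch, not part of the repository: the helper names `parse_version`, `meets_minimum`, and `check_environment` are hypothetical, and only the version floors (3.6 and 1.7.0) come from this README.

```python
import re
import sys

# Minimum versions taken from the requirements list in this README.
MIN_PYTHON = (3, 6)
MIN_TORCH = (1, 7, 0)


def parse_version(text):
    """Turn a string such as '1.7.0+cu110' into (1, 7, 0), ignoring any suffix."""
    match = re.match(r"(\d+)\.(\d+)(?:\.(\d+))?", text)
    if match is None:
        raise ValueError("unrecognized version string: {!r}".format(text))
    return tuple(int(part) if part is not None else 0 for part in match.groups())


def meets_minimum(version_text, minimum):
    """Return True if version_text is at least the given minimum tuple."""
    padded = tuple(minimum) + (0,) * (3 - len(minimum))
    return parse_version(version_text) >= padded


def check_environment():
    """Collect human-readable problems; an empty list means the checks passed."""
    problems = []
    if sys.version_info[:2] < MIN_PYTHON:
        problems.append("Python 3.6 or newer is required")
    try:
        import torch  # imported lazily so the script still runs without PyTorch
    except ImportError:
        problems.append("PyTorch is not installed")
    else:
        if not meets_minimum(str(torch.__version__), MIN_TORCH):
            problems.append("PyTorch 1.7.0 or newer is required")
    return problems


if __name__ == "__main__":
    for problem in check_environment():
        print("WARNING:", problem)
```

The check only looks at version numbers; it does not verify CUDA support or the packages in requirements.txt.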

First, download the csqa data and unzip it. The default folder is data.

Then download the embeddings, unzip them, and put tzw.ent.np in data/mhgrn_data/cpnet/ and glove.transe.sgd.rel.npy in data/mhgrn_data/transe.

The final dataset folder should look like this

    data/  # root dir
      csqa/
        path_embedding.pickle
      mhgrn_data/
        csqa/
          graph/  # extracted subgraphs
          paths/  # unpruned/pruned paths
          statement/  # csqa statement
        cpnet/
        transe/
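A quick way to catch a misplaced download is to check the folder layout programmatically. The sketch below is illustrative and not part of the repository: `missing_entries` is a hypothetical helper, the nesting of folders is an assumption based on the listing above, and the file names are copied verbatim from the download instructions.

```python
from pathlib import Path

# Directories from the folder listing, relative to the data root.
# The nesting is an assumption inferred from the listing and the embedding paths.
EXPECTED_DIRS = [
    "csqa",
    "mhgrn_data/csqa/graph",
    "mhgrn_data/csqa/paths",
    "mhgrn_data/csqa/statement",
    "mhgrn_data/cpnet",
    "mhgrn_data/transe",
]

# Files named in the download instructions, copied as written there.
EXPECTED_FILES = [
    "csqa/path_embedding.pickle",
    "mhgrn_data/cpnet/tzw.ent.np",
    "mhgrn_data/transe/glove.transe.sgd.rel.npy",
]


def missing_entries(root="data"):
    """Return the expected directories and files that are absent under root."""
    root = Path(root)
    missing = [d + "/" for d in EXPECTED_DIRS if not (root / d).is_dir()]
    missing += [f for f in EXPECTED_FILES if not (root / f).is_file()]
    return missing


if __name__ == "__main__":
    for entry in missing_entries("data"):
        print("missing:", entry)
```

An empty result only means the paths exist; it says nothing about whether the files themselves are complete.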

We use Neptune to track our experiments. Please set the API token and project name with

export NEPTUNE_API_TOKEN='<YOUR API KEY>'

export NEPTUNE_PROJ_NAME='<YOUR PROJECT NAME>'
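Since a missing token usually surfaces only once training starts, it may be worth failing early. The sketch below reads the two variables named above and raises if either is unset; `read_neptune_settings` is a hypothetical helper and makes no calls to the Neptune client.

```python
import os

# Environment variable names as given in this README.
REQUIRED_VARS = ("NEPTUNE_API_TOKEN", "NEPTUNE_PROJ_NAME")


def read_neptune_settings(environ=None):
    """Return the Neptune settings, or raise if any variable is unset or empty."""
    environ = os.environ if environ is None else environ
    missing = [name for name in REQUIRED_VARS if not environ.get(name)]
    if missing:
        raise RuntimeError(
            "Set these environment variables before training: " + ", ".join(missing)
        )
    return {name: environ[name] for name in REQUIRED_VARS}


if __name__ == "__main__":
    settings = read_neptune_settings()
    # Print only the project name; the token should stay out of logs.
    print("Neptune project:", settings["NEPTUNE_PROJ_NAME"])
```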

The model weights are saved to the save folder, in a subfolder named after the Neptune run ID.
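To find the files written for a given run, the save location can be derived from the run ID. This is a sketch under stated assumptions: `run_directory` and `list_saved_files` are hypothetical helpers, the ID "SAL-12" is a made-up example, and no particular checkpoint file name or extension is assumed.

```python
from pathlib import Path


def run_directory(neptune_id, save_root="save"):
    """Build the path save/<neptune id> described above."""
    return Path(save_root) / neptune_id


def list_saved_files(neptune_id, save_root="save"):
    """List every file stored under a run's subfolder (empty if it does not exist)."""
    run_dir = run_directory(neptune_id, save_root)
    if not run_dir.is_dir():
        return []
    return sorted(path for path in run_dir.rglob("*") if path.is_file())


if __name__ == "__main__":
    # "SAL-12" is a hypothetical run ID used only for illustration.
    for path in list_saved_files("SAL-12"):
        print(path)
```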

The pipeline to train SalKG models is as follows (for the detailed parameters that we suggest tuning, please see the bash scripts):

- run runs/build_qa.sh to generate the indexed dataset required by the nokg and kg models. In the script, the flag --fine-occl generates the indexed dataset required by the fine occl model.

- run runs/qa.sh to run the nokg and kg models

- run runs/save_target_saliency.sh with nokg / kg checkpoints to generate the model's saliency. Currently we support coarse occlusion and fine {occlusion, gradient} saliency.
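The steps above can be sketched as a small driver that invokes the three scripts in order. This is an illustration rather than the authors' tooling: `run_pipeline` is a hypothetical helper, it assumes the scripts are run with bash from the repository root, and it passes no arguments, so settings such as checkpoint paths are assumed to be edited inside the scripts themselves.

```python
import subprocess

# Script paths as listed in this README, in the order they should run.
PIPELINE = [
    ("build indexed dataset", "runs/build_qa.sh"),
    ("run nokg / kg models", "runs/qa.sh"),
    ("save target saliency", "runs/save_target_saliency.sh"),
]


def run_pipeline(dry_run=True):
    """Print each step and, unless dry_run is set, execute it and stop on failure."""
    commands = []
    for description, script in PIPELINE:
        command = ["bash", script]
        commands.append(command)
        print("[{}] {}".format(description, " ".join(command)))
        if not dry_run:
            # check=True raises CalledProcessError if a script exits non-zero.
            subprocess.run(command, check=True)
    return commands


if __name__ == "__main__":
    run_pipeline(dry_run=True)
```

The default is a dry run because the saliency step depends on checkpoints produced by the previous step, so in practice each script is likely to be configured and launched separately.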

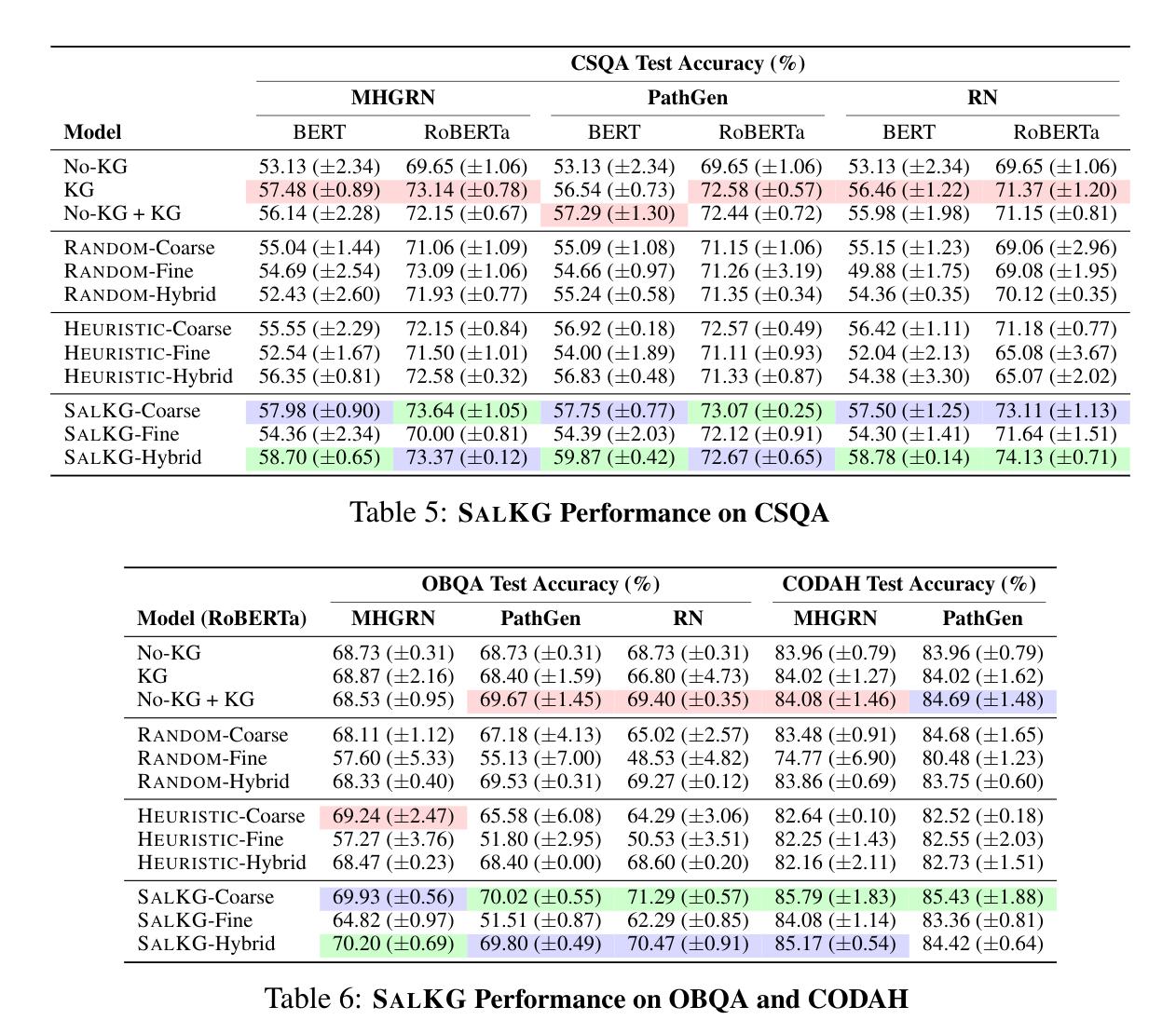

The table below shows our results on three commonly used QA benchmarks: CommonsenseQA (CSQA), OpenbookQA (OBQA), and CODAH.

For each column,

- Green cells are the best performance

- Blue cells are the second-best performance

- Red cells are the non-SalKG best performance

Across the 3 datasets, we find that SalKG-Hybrid and SalKG-Coarse consistently outperform other models.