This repository introduces GIRT-Model, an open-source assistant language model that automatically generates Issue Report Templates (IRTs), also known as issue templates. It creates IRTs from the developer's instructions about the structure and required fields.

- Model: https://huggingface.co/nafisehNik/girt-t5-base

- Dataset: https://huggingface.co/datasets/nafisehNik/girt-instruct

- Space: https://huggingface.co/spaces/nafisehNik/girt-space

```python
import torch
from transformers import AutoTokenizer, AutoModelForSeq2SeqLM

# Load the model and tokenizer
model = AutoModelForSeq2SeqLM.from_pretrained('nafisehNik/girt-t5-base')
tokenizer = AutoTokenizer.from_pretrained('nafisehNik/girt-t5-base')

# Run on the GPU when one is available; otherwise fall back to the CPU
device = 'cuda' if torch.cuda.is_available() else 'cpu'
model = model.to(device)

# Generate an issue report template from an instruction
def compute(sample, top_p, top_k, do_sample, max_length, min_length):
    inputs = tokenizer(sample, return_tensors="pt").to(device)
    outputs = model.generate(
        **inputs,
        min_length=min_length,
        max_length=max_length,
        do_sample=do_sample,
        top_p=top_p,
        top_k=top_k)
    generated_texts = tokenizer.batch_decode(outputs, skip_special_tokens=False)
    generated_text = generated_texts[0]

    # Remove special tokens and repair HTML comment openers decoded as '<unk>!--'
    replace_dict = {
        '\n ': '\n',
        '</s>': '',
        '<pad> ': '',
        '<pad>': '',
        '<unk>!--': '<!--',
        '<unk>': '',
    }
    postprocess_text = generated_text
    for key, value in replace_dict.items():
        postprocess_text = postprocess_text.replace(key, value)
    return postprocess_text

prompt = "YOUR INPUT INSTRUCTION"
result = compute(prompt, top_p=0.92, top_k=0, do_sample=True, max_length=300, min_length=30)
```

GIRT-Instruct is a dataset of instruction and output pairs. It is built on GIRT-Data, a dataset of IRTs. We use both the GIRT-Data metadata and the Zephyr-7B-Beta language model to generate the instructions. This dataset is used to train GIRT-Model.

GIRT-Instruct contains four types of instructions:

- default: Instructions built from the GIRT-Data metadata.

- default+mask: Instructions built from the GIRT-Data metadata, in which two fields of information in each instruction are randomly masked.

- default+summary: Instructions built from the GIRT-Data metadata plus a summary field.

- default+summary+mask: Instructions built from the GIRT-Data metadata plus a summary field, in which two fields of information in each instruction are randomly masked.
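To make the difference between the four types concrete, here is a minimal sketch of how such instructions could be assembled. It is not the code used to build GIRT-Instruct: the `build_instruction` helper, the `MASK_TOKEN` value, the `key: value` layout, and the metadata field names are all hypothetical, and the sketch assumes that only metadata fields (not the summary) are eligible for masking.

```python
import random

# Hypothetical placeholder; the mask token used in the real dataset may differ.
MASK_TOKEN = "<|MASK|>"


def build_instruction(metadata, summary=None, mask_count=0, rng=None):
    """Render metadata (and an optional summary) as an instruction string.

    `mask_count` metadata fields are chosen at random and their values are
    replaced by MASK_TOKEN, mirroring the "+mask" types described above.
    """
    if rng is None:
        rng = random.Random()
    # Assumption: only metadata fields can be masked, never the summary.
    masked = set(rng.sample(sorted(metadata), k=min(mask_count, len(metadata))))
    lines = [
        f"{key}: {MASK_TOKEN if key in masked else value}"
        for key, value in metadata.items()
    ]
    if summary is not None:
        lines.append(f"summary: {summary}")
    return "\n".join(lines)


# Hypothetical metadata for a bug-report template.
metadata = {
    "name": "Bug report",
    "about": "Report a reproducible problem",
    "title": "[BUG] ",
    "labels": "bug",
}
summary = "Asks for steps to reproduce, expected behavior, and environment."

variants = {
    "default": build_instruction(metadata),
    "default+mask": build_instruction(metadata, mask_count=2, rng=random.Random(0)),
    "default+summary": build_instruction(metadata, summary=summary),
    "default+summary+mask": build_instruction(
        metadata, summary=summary, mask_count=2, rng=random.Random(0)
    ),
}

for name, text in variants.items():
    print(f"--- {name} ---\n{text}\n")
```

Passing a seeded `random.Random` keeps the masked fields reproducible between runs, which is convenient when comparing the variants side by side.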

```python
from datasets import load_dataset

# Load only the training split; the result is a single Dataset, not a DatasetDict
dataset = load_dataset('nafisehNik/girt-instruct', split='train')

print(dataset[0])  # First row of the training split
```

The code for fine-tuning GIRT-Model and evaluating it in a zero-shot setting is available in this repository. It downloads GIRT-Instruct and fine-tunes the t5-base model.

We also provide the code and prompts used for the Zephyr model to generate summaries of instructions.

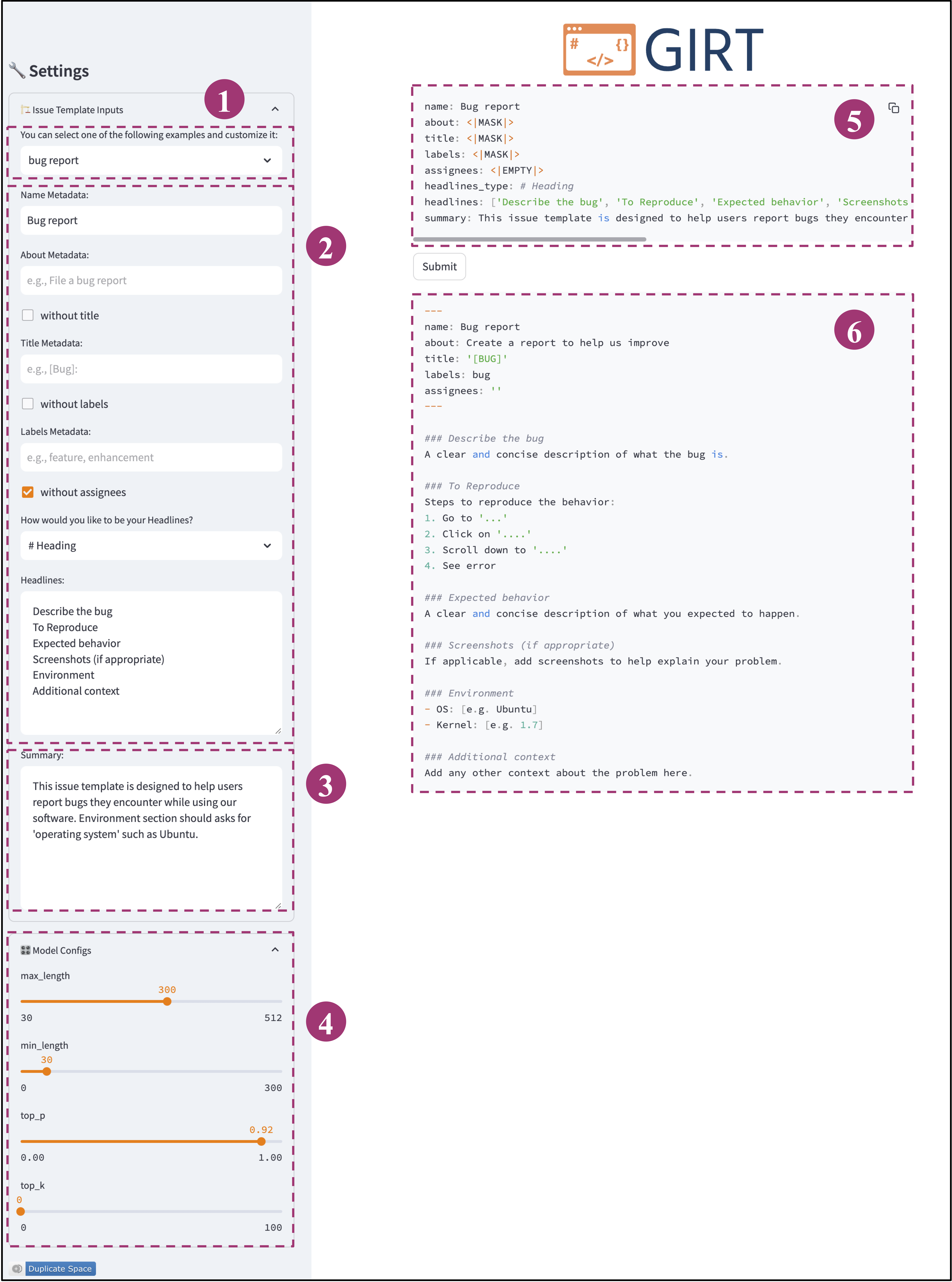

This UI is designed for interacting with GIRT-Model. It is also available on Hugging Face: https://huggingface.co/spaces/nafisehNik/girt-space

- IRT input examples

- metadata fields of IRT inputs

- summary field of IRT inputs

- model config

- generated instruction based on the IRT inputs

- generated IRT

This work has been accepted for publication at the MSR 2024 conference under the title "GIRT-Model: Automated Generation of Issue Report Templates".

```bibtex
@inproceedings{nikeghbal2024girt-model,
  title={GIRT-Model: Automated Generation of Issue Report Templates},
  booktitle={21st IEEE/ACM International Conference on Mining Software Repositories (MSR)},
  author={Nikeghbal, Nafiseh and Kargaran, Amir Hossein and Heydarnoori, Abbas},
  month={April},
  year={2024},
  publisher={IEEE/ACM},
  address={Lisbon, Portugal},
  url={https://doi.org/10.1145/3643991.3644906},
}
```
