An implementation of the Relativistic average GAN (RaGAN) with Keras

[paper] [blog] [original code(pytorch)]

mkdir result

python RaGAN_CustomLoss.py --dataset [dataset] --loss [loss]

python RaGAN_CustomLayers.py --dataset [dataset] --loss [loss]

[dataset]: mnist, fashion_mnist, cifar10

[loss]: BXE for Binary Crossentropy, LS for Least Squares

Arguments shown in italics are the defaults.

Custom Loss [Colab][NBViewer]

Custom Layer [Colab][NBViewer]

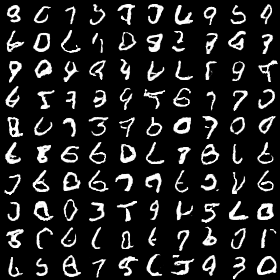

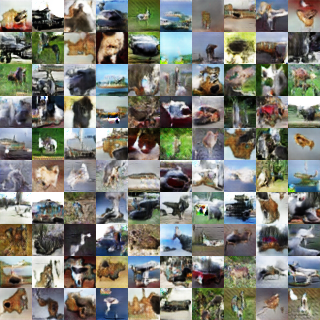

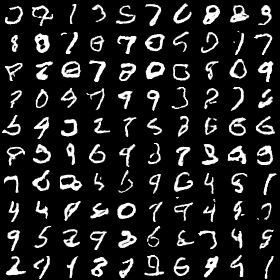

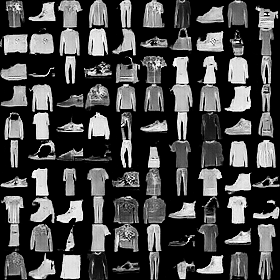

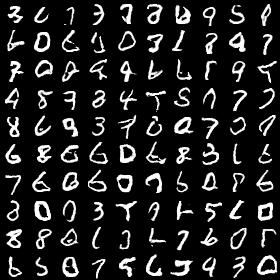

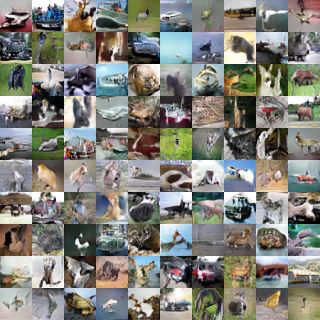

| 1 epoch | MNIST | Fashion MNIST | CIFAR10 |

|---|---|---|---|

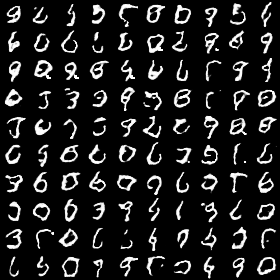

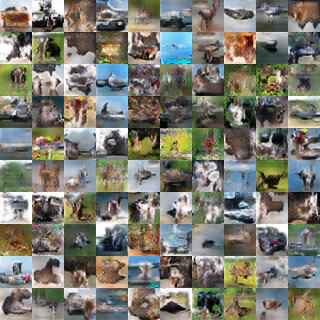

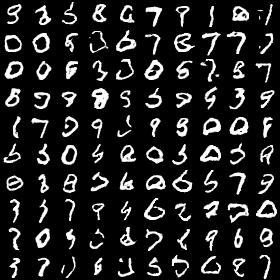

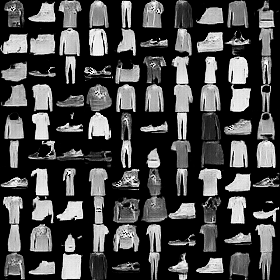

| Binary Cross Entropy |  |

|

|

| Least Square |  |

|

|

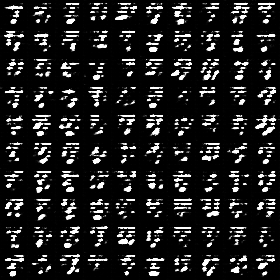

| 10 epoch | MNIST | Fashion MNIST | CIFAR10 |
|---|---|---|---|
| Binary Cross Entropy | | | |
| Least Square | | | |

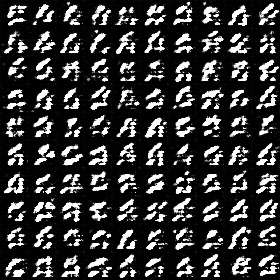

| 50 epoch | MNIST | Fashion MNIST | CIFAR10 |
|---|---|---|---|
| Binary Cross Entropy | | | |
| Least Square | | | |

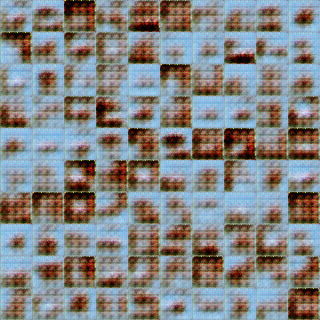

| 100 epoch | MNIST | Fashion MNIST | CIFAR10 |
|---|---|---|---|
| Binary Cross Entropy | | | |
| Least Square | | | |

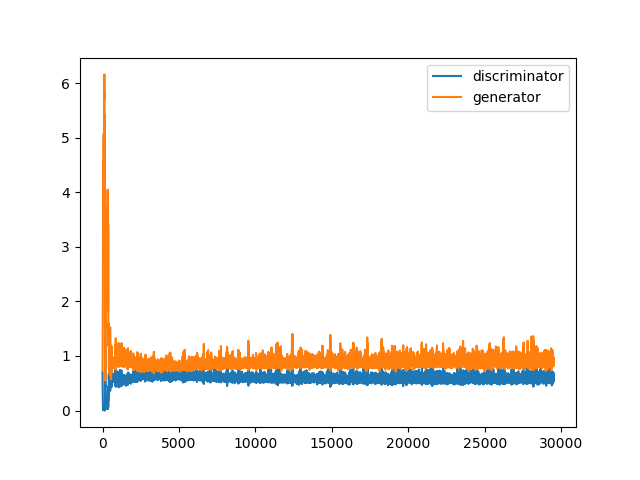

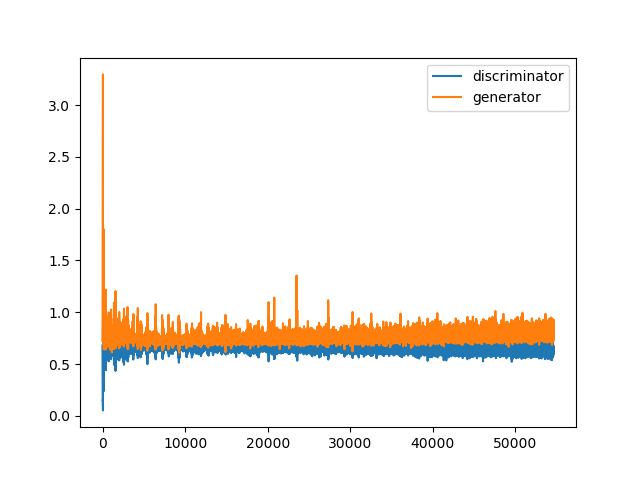

| Loss | MNIST | Fashion MNIST | CIFAR10 |

|---|---|---|---|

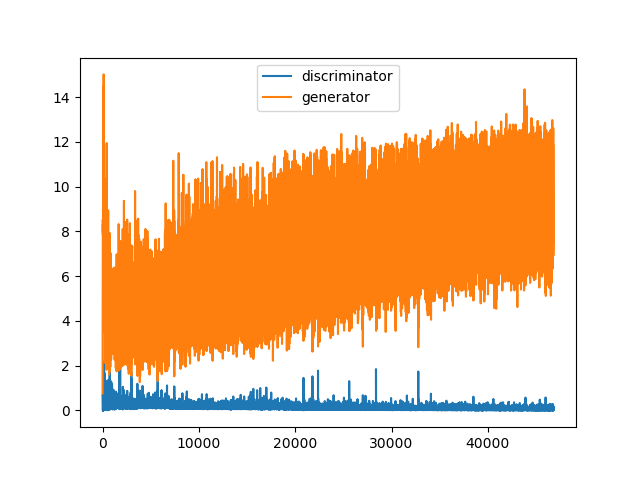

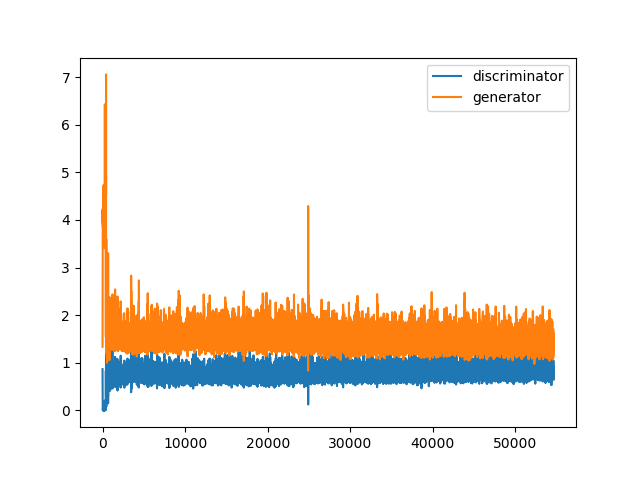

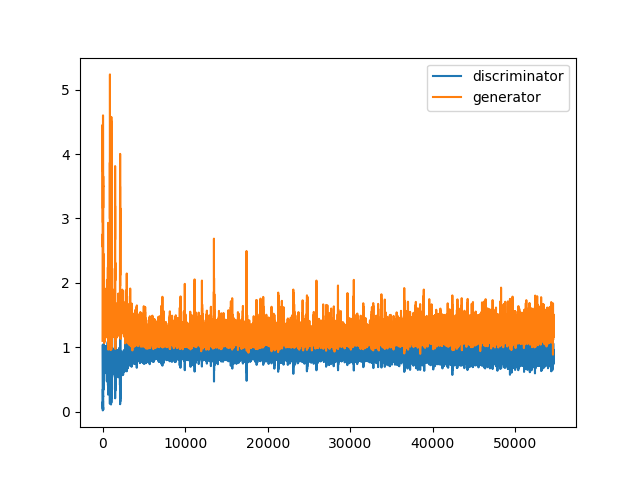

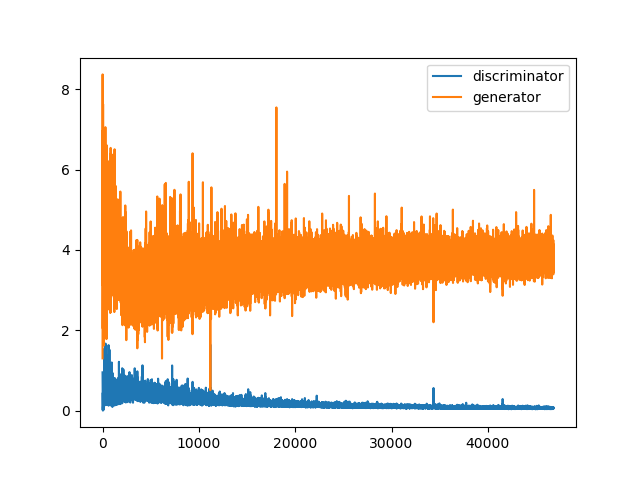

| Binary Cross Entropy |  |

|

|

| Least Square |  |

|

|

For better rendering of the math equations, see the HackMD version.

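A GAN is a two-player game with the following objective:

$$\min_G \max_D V(D,G)=\mathbb{E}_{x_{real}\sim\mathbb{P}_{real}}[\log D(x_{real})]+\mathbb{E}_{z\sim\mathbb{P}_{z}}[\log(1-D(G(z)))]$$

- $V(D,G)$ is the value function (also known as the loss or cost function).
- $G$ is the generator.
- $z$ is noise sampled from a known distribution (usually a multidimensional Gaussian distribution).
- $G(z)$ is the fake data produced by the generator. We want $G(z)$ to lie in the real data distribution.
- $D$ is the discriminator, which decides whether its input $x$ is real data (output 1) or fake data (output 0).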

In each training iteration, we train one network first (usually the discriminator) and then train the other. After many iterations, we expect the final generator to map the multidimensional Gaussian distribution to the real data distribution.
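The alternating schedule can be sketched on a toy 1-D problem. This is a minimal NumPy illustration, not the repository's Keras code: the linear generator `G(z) = a*z + b`, the logistic discriminator `D(x) = sigmoid(w*x + c)`, the learning rate, and the "real" distribution N(3, 1) are all assumptions, chosen so the gradients can be written by hand.

```python
import numpy as np

def sigmoid(t):
    return 1.0 / (1.0 + np.exp(-t))

def train_toy_gan(steps=200, batch=64, lr=0.05, seed=0):
    """Alternate discriminator and generator updates on a toy 1-D GAN."""
    rng = np.random.default_rng(seed)
    a, b = 1.0, 0.0   # generator parameters (hypothetical toy model)
    w, c = 0.1, 0.0   # discriminator parameters (hypothetical toy model)
    for _ in range(steps):
        x_real = rng.normal(3.0, 1.0, size=batch)  # assumed "real" data
        z = rng.normal(size=batch)                 # Gaussian noise
        x_fake = a * z + b

        # 1) Discriminator step: minimize
        #    -E[log D(x_real)] - E[log(1 - D(x_fake))] with G fixed.
        s_real = sigmoid(w * x_real + c)
        s_fake = sigmoid(w * x_fake + c)
        grad_w = np.mean((s_real - 1.0) * x_real) + np.mean(s_fake * x_fake)
        grad_c = np.mean(s_real - 1.0) + np.mean(s_fake)
        w -= lr * grad_w
        c -= lr * grad_c

        # 2) Generator step: minimize E[log(1 - D(G(z)))] with D fixed.
        s_fake = sigmoid(w * (a * z + b) + c)
        grad_a = np.mean(-s_fake * w * z)
        grad_b = np.mean(-s_fake * w)
        a -= lr * grad_a
        b -= lr * grad_b
    return a, b, w, c

a, b, w, c = train_toy_gan()
print(f"generator: a={a:.3f}, b={b:.3f}; discriminator: w={w:.3f}, c={c:.3f}")
```

The point of the sketch is the ordering inside the loop: the discriminator is updated against the current generator, and only then is the generator updated against the refreshed discriminator. Convergence on this toy problem is not guaranteed and is not the claim here.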

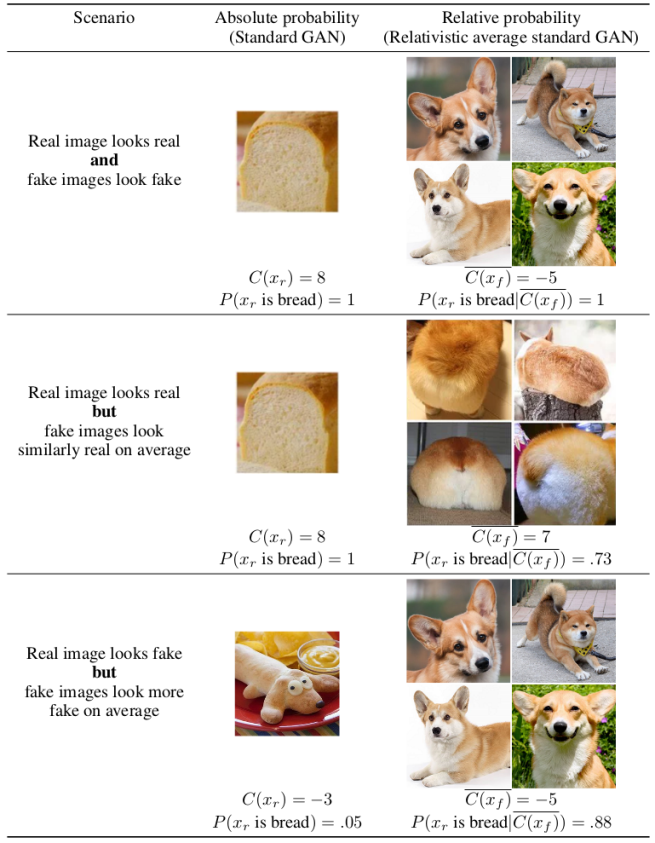

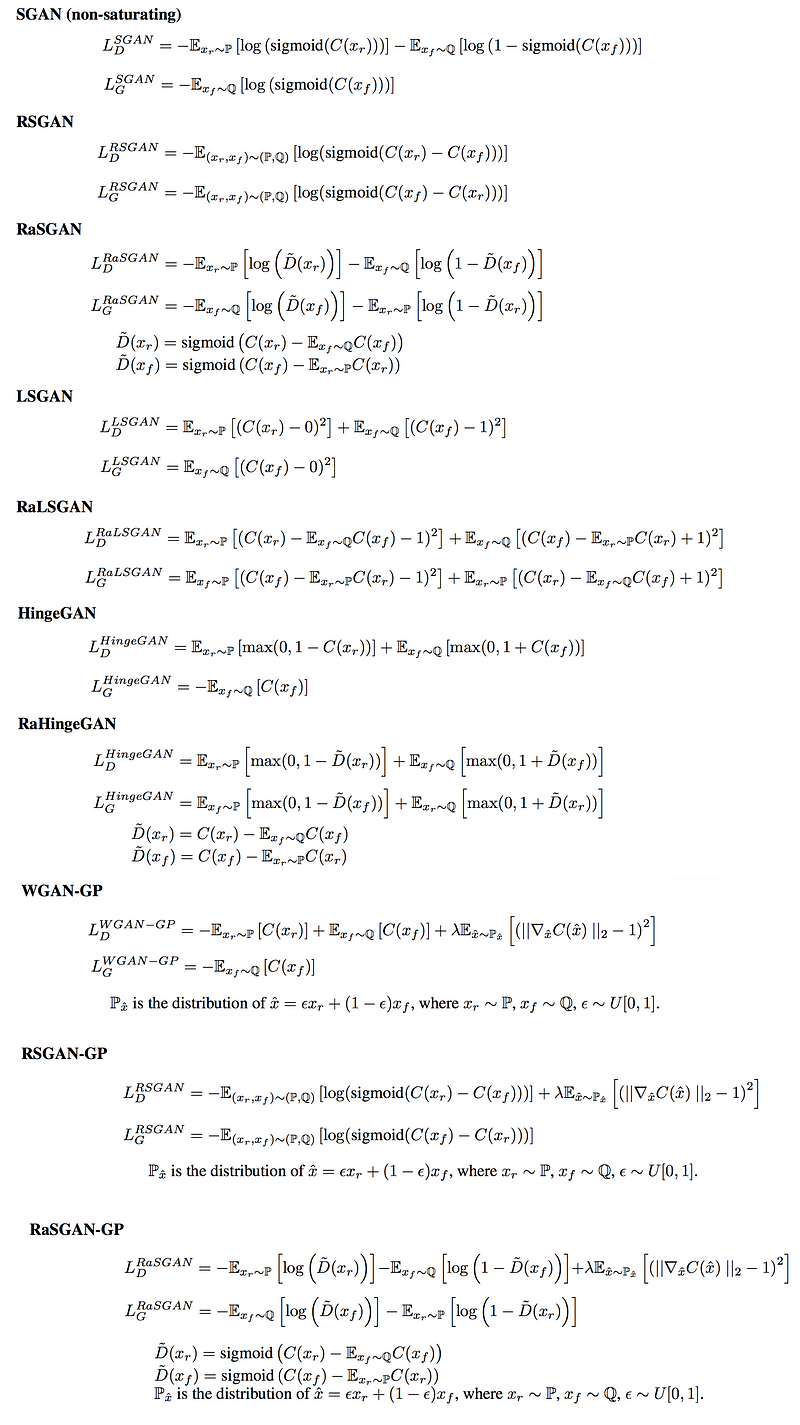

RaGAN's loss function does not train the discriminator to classify data as real or fake. Instead, RaGAN's discriminator judges whether "real data isn’t like average fake data" or "fake data isn’t like average real data".

"the discriminator estimates the probability that the given real data is more realistic than a randomly sampled fake data" (paper, subsection 4.1)

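Given the discriminator output $D(x)=\text{sigmoid}(C(x))$, where $C(x)$ is the discriminator's output before the sigmoid,

the original GAN discriminator loss is as below:

$$L_D=-\mathbb{E}_{x_{real}\sim\mathbb{P}_{real}}[\log(D(x_{real}))]-\mathbb{E}_{x_{fake}\sim\mathbb{P}_{fake}}[\log(1-D(x_{fake}))]$$

The relativistic average outputs are $\tilde{D}(x_{real})=\text{sigmoid}(C(x_{real})-\mathbb{E}_{x_{fake}\sim\mathbb{P}_{fake}}C(x_{fake}))$ and $\tilde{D}(x_{fake})=\text{sigmoid}(C(x_{fake})-\mathbb{E}_{x_{real}\sim\mathbb{P}_{real}}C(x_{real}))$. Replacing $D$ with $\tilde{D}$ in the loss gives the RaGAN discriminator loss.

We can also apply the relativistic average to Least Squares GAN or any other GAN.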
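The relativistic average idea translates almost directly into array code. Below is a minimal NumPy sketch, assuming `c_real` and `c_fake` hold the discriminator's pre-sigmoid outputs $C(x)$ for a batch of real and fake samples, and that the batch mean stands in for the expectation. The function names are hypothetical, and the least-squares variant follows the RaLSGAN formulation from the paper (targets of +1 and -1), which may differ from the targets used by the scripts in this repository.

```python
import numpy as np

def sigmoid(t):
    return 1.0 / (1.0 + np.exp(-t))

def relativistic_logits(c_real, c_fake):
    """Subtract the batch-average output of the opposite class."""
    return c_real - np.mean(c_fake), c_fake - np.mean(c_real)

def ragan_bxe_losses(c_real, c_fake, eps=1e-7):
    """Binary cross-entropy RaGAN losses: (discriminator, generator)."""
    r_real, r_fake = relativistic_logits(c_real, c_fake)
    d_real = np.clip(sigmoid(r_real), eps, 1 - eps)  # clip to keep log finite
    d_fake = np.clip(sigmoid(r_fake), eps, 1 - eps)
    loss_d = -np.mean(np.log(d_real)) - np.mean(np.log(1 - d_fake))
    loss_g = -np.mean(np.log(d_fake)) - np.mean(np.log(1 - d_real))
    return loss_d, loss_g

def ragan_ls_losses(c_real, c_fake):
    """Least-squares RaGAN losses: (discriminator, generator)."""
    r_real, r_fake = relativistic_logits(c_real, c_fake)
    loss_d = np.mean((r_real - 1) ** 2) + np.mean((r_fake + 1) ** 2)
    loss_g = np.mean((r_fake - 1) ** 2) + np.mean((r_real + 1) ** 2)
    return loss_d, loss_g

# Example batch where the discriminator already separates real from fake.
c_real = np.array([2.0, 1.0, 3.0])
c_fake = np.array([-1.0, 0.0, -2.0])
print(ragan_bxe_losses(c_real, c_fake))
print(ragan_ls_losses(c_real, c_fake))
```

Note that the generator loss is the discriminator loss with the roles of real and fake swapped, so unlike in the original GAN the generator's gradient also depends on the real batch.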

We have the loss, so let's just code it. 😄

Just kidding: there are two approaches to implementing RaGAN.

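The important part of the implementation is the discriminator output: $\tilde{D}(x_{real})$ and $\tilde{D}(x_{fake})$. Writing $C(x)$ for the discriminator's output before the sigmoid, we need to average $C(x_{real})$ and $C(x_{fake})$ over the batch. We also need a subtraction ("minus") to get $C(x_{real})-\mathbb{E}_{x_{fake}\sim\mathbb{P}_{fake}}C(x_{fake})$ and $C(x_{fake})-\mathbb{E}_{x_{real}\sim\mathbb{P}_{real}}C(x_{real})$.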

We can do this with keras.backend, but that means writing a custom loss. Alternatively, we can write custom layers that perform these computations inside the model, and then train the model with Keras's default losses.

- Custom layer
  - Pros:
    - Train our RaGAN easily with Keras default losses.
  - Cons:
    - Requires writing custom layers.
  - [Colab][NBViewer]
- Custom loss
  - Pros:
    - No custom layers are needed; instead, the loss is written with keras.backend.
    - The loss is easy to change.
  - Cons:
    - Requires writing a custom loss with keras.backend.
  - [Colab][NBViewer]
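The two approaches compute the same quantity, which a framework-free sketch can make concrete. In the NumPy illustration below (not the repository's Keras code; all function names are hypothetical), the "custom layer" route first turns raw discriminator outputs into relativistic probabilities and then applies an ordinary binary cross-entropy with label 1 for real and 0 for fake. The "custom loss" route does the averaging, subtraction, and cross-entropy inside a single loss function that works on the raw outputs.

```python
import numpy as np

def sigmoid(t):
    return 1.0 / (1.0 + np.exp(-t))

def binary_crossentropy(y_true, y_pred, eps=1e-7):
    """Plain BCE, standing in for a framework's default loss."""
    y_pred = np.clip(y_pred, eps, 1 - eps)
    return -np.mean(y_true * np.log(y_pred)
                    + (1 - y_true) * np.log(1 - y_pred))

def relativistic_average_layer(c_real, c_fake):
    """'Custom layer' route: output relativistic probabilities."""
    d_real = sigmoid(c_real - np.mean(c_fake))
    d_fake = sigmoid(c_fake - np.mean(c_real))
    return d_real, d_fake

def discriminator_loss_via_layer(c_real, c_fake):
    """Layer outputs fed to the default loss with 1/0 labels."""
    d_real, d_fake = relativistic_average_layer(c_real, c_fake)
    return (binary_crossentropy(np.ones_like(d_real), d_real)
            + binary_crossentropy(np.zeros_like(d_fake), d_fake))

def discriminator_loss_custom(c_real, c_fake, eps=1e-7):
    """'Custom loss' route: everything happens inside the loss."""
    d_real = np.clip(sigmoid(c_real - np.mean(c_fake)), eps, 1 - eps)
    d_fake = np.clip(sigmoid(c_fake - np.mean(c_real)), eps, 1 - eps)
    return -np.mean(np.log(d_real)) - np.mean(np.log(1 - d_fake))

rng = np.random.default_rng(0)
c_real = rng.normal(1.0, 1.0, size=8)   # assumed raw outputs on real data
c_fake = rng.normal(-1.0, 1.0, size=8)  # assumed raw outputs on fake data
print(discriminator_loss_via_layer(c_real, c_fake))
print(discriminator_loss_custom(c_real, c_fake))
```

Both functions return the same value, so the choice between the two routes is about where the relativistic computation lives (in the model or in the loss), not about what is optimized.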