[arxiv][WACV2020][poster][project page]

This repository contains the source code for our WACV 2020 paper "Self-Contained Stylization via Steganography for Reverse and Serial Style Transfer".

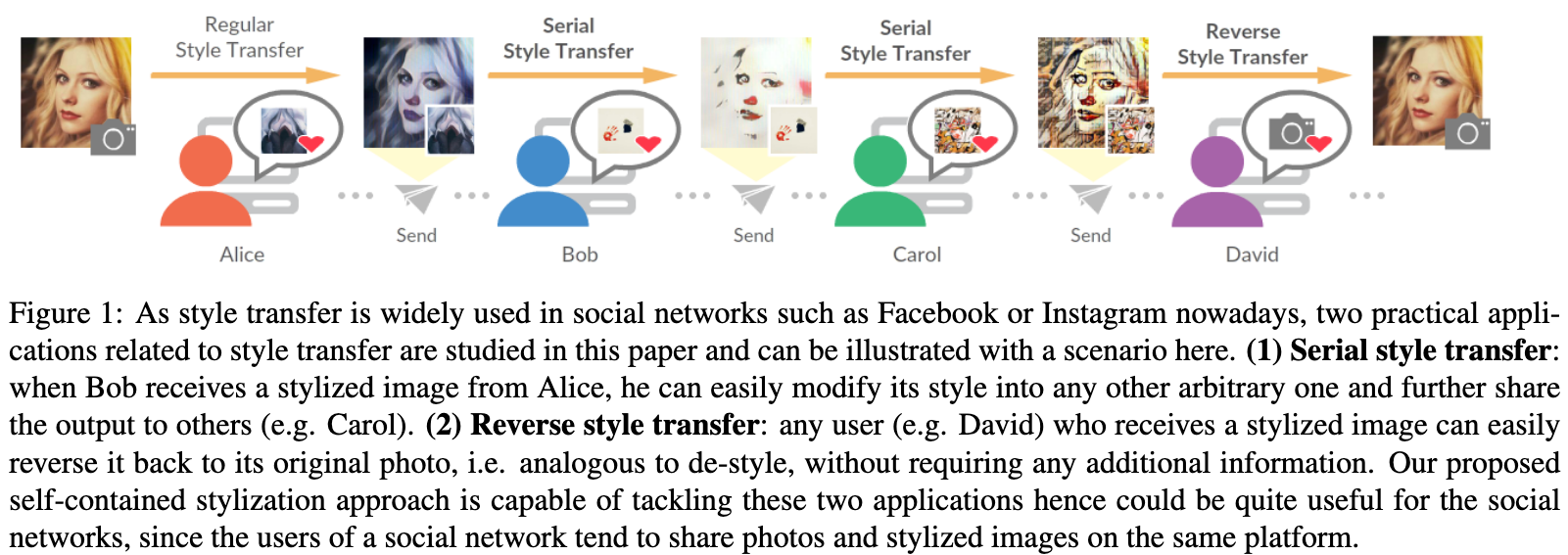

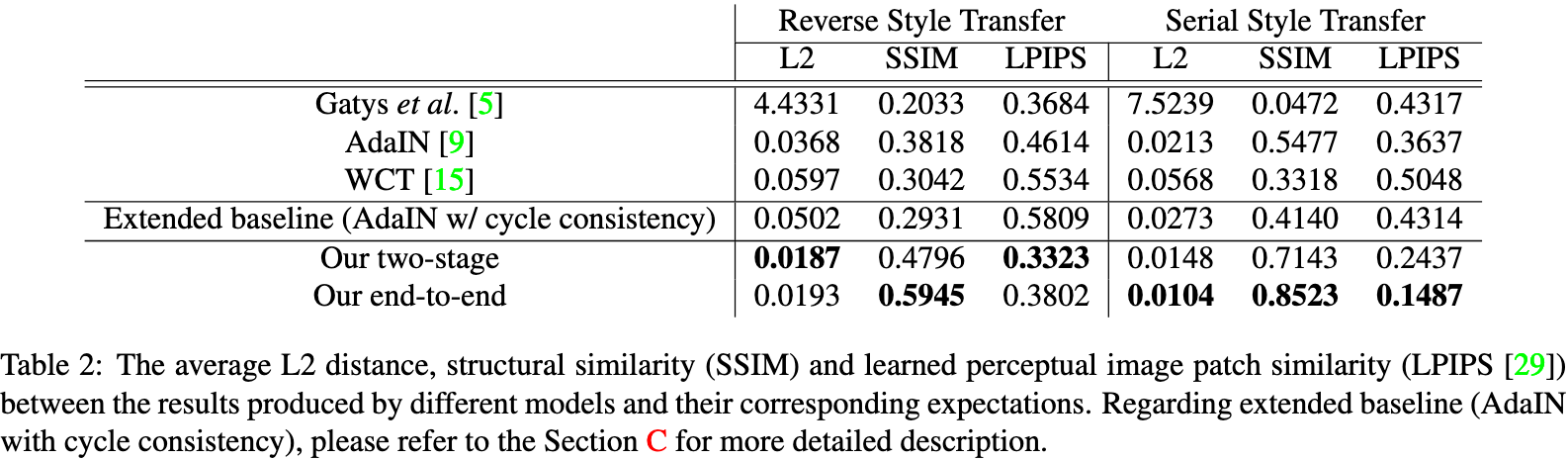

Style transfer has been widely applied to give real-world images a new artistic look. However, when typical style transfer methods are applied to an already stylized image, whether to de-stylize it or to transfer it into another style, they usually produce artifacts or undesired results. We find that these issues originate from the content inconsistency between the original image and its stylized output. Therefore, in this paper we propose to preserve the content information of the input image during style transfer by means of steganography, and present two approaches: a two-stage model and an end-to-end model. Extensive experiments verify the capacity of both models: they not only generate stylized images of quality comparable to those produced by typical style transfer methods, but also effectively eliminate the artifacts that arise when reconstructing the original input from a stylized image and when performing style transfer multiple times in series.
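The paper hides content information inside the stylized image with learned networks. To illustrate only the underlying idea, the sketch below swaps in classic least-significant-bit (LSB) steganography, a hand-crafted technique that is *not* the paper's method: the top bits of each content pixel are stored in the low bits of the matching stylized pixel, so the stylized image looks nearly unchanged while a coarse copy of the content can be revealed later. Images are assumed to be flat lists of 8-bit grayscale values, and `hide`/`reveal` are hypothetical helpers.

```python
from typing import List


def hide(cover: List[int], secret: List[int], bits: int = 4) -> List[int]:
    """Store the top `bits` of each secret pixel in the low `bits` of each cover pixel."""
    if len(cover) != len(secret):
        raise ValueError("cover and secret must have the same number of pixels")
    mask = 0xFF ^ ((1 << bits) - 1)  # keeps the high bits of the cover pixel
    return [(c & mask) | (s >> (8 - bits)) for c, s in zip(cover, secret)]


def reveal(stego: List[int], bits: int = 4) -> List[int]:
    """Recover a quantized copy of the secret from the low bits of the stego pixels."""
    return [(p & ((1 << bits) - 1)) << (8 - bits) for p in stego]


stylized = [200, 13, 97, 255]  # toy "stylized" pixels (cover)
content = [18, 240, 129, 64]   # toy "content" pixels (secret)
stego = hide(stylized, content)
print(stego)          # [193, 15, 104, 244]
print(reveal(stego))  # [16, 240, 128, 64]
```

With 4 bits, each stylized pixel changes by at most 15 levels and the revealed content is quantized to multiples of 16. Hiding more bits improves the recovered content at the cost of more visible changes to the stylized image.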

- Python 3.5+

- PyTorch 0.4+

- TorchVision

- Pillow

- tqdm
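One way to confirm that the packages listed above are importable is sketched below. `check_environment` is a hypothetical helper, not part of the repository; it only checks the Python version and whether each package can be found, and does not verify that PyTorch is version 0.4 or newer.

```python
import importlib.util
import sys

# Import names for the requirements above; Pillow is imported as "PIL".
REQUIRED_MODULES = {"PyTorch": "torch", "TorchVision": "torchvision", "Pillow": "PIL", "tqdm": "tqdm"}


def check_environment():
    """Return (python_ok, missing), where missing lists requirements that cannot be found."""
    python_ok = sys.version_info >= (3, 5)
    missing = [name for name, module in REQUIRED_MODULES.items()
               if importlib.util.find_spec(module) is None]
    return python_ok, missing


if __name__ == "__main__":
    ok, missing = check_environment()
    print("Python 3.5+:", ok)
    print("Missing packages:", ", ".join(missing) if missing else "none")
```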

In each model folder, `model.py` defines the model. The `SelfContained_Style_Transfer` module in `model.py` implements the operations for regular, serial, and reverse style transfer.
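Because the stylized output carries the content information needed to undo or redo the stylization, the three operations relate in a simple way. The toy sketch below models that relationship with strings in place of images; `regular`, `reverse`, and `serial` are hypothetical stand-ins and do not reproduce the actual method names or signatures of `SelfContained_Style_Transfer`.

```python
from typing import List, Tuple

# Toy types: an "image" is a string; a stylized result is (visible image, hidden content).
Image = str
Stylized = Tuple[Image, Image]


def regular(content: Image, style: str) -> Stylized:
    """Stylize while keeping the content hidden inside the output (toy stand-in)."""
    return ("{} in {}".format(content, style), content)


def reverse(stylized: Stylized) -> Image:
    """De-stylize by revealing the hidden content rather than inverting the style."""
    return stylized[1]


def serial(stylized: Stylized, styles: List[str]) -> Stylized:
    """Re-stylize repeatedly, each time starting from the recovered content."""
    for style in styles:
        stylized = regular(reverse(stylized), style)
    return stylized


s = serial(regular("photo", "Monet"), ["Van Gogh", "Picasso"])
print(s)           # ('photo in Picasso', 'photo')
print(reverse(s))  # photo
```

In this model, serial transfer never stylizes an already stylized image; every pass restarts from the revealed content, which is the content consistency the abstract identifies as the key to avoiding artifacts.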

- Download the [pretrained_weights] into `./<method_dir>/model_weights/`.

- Run `test.py` to perform regular, serial, or reverse style transfer.

- Download the MSCOCO and WikiArt images.

- Download [pretrained_weights]

- Run `train.py` as described in the README.md of each model folder.
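Style transfer training of this kind typically pairs content images (here MSCOCO) with style images (here WikiArt). The sketch below shows one way to draw random (content, style) pairs; it is an assumption for illustration, not the sampling logic of `train.py`, and `list_images` and `sample_pairs` are hypothetical helpers.

```python
import random
from pathlib import Path
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")
U = TypeVar("U")
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def list_images(folder: str) -> List[Path]:
    """Recursively collect image files under `folder`, sorted for reproducibility."""
    return sorted(p for p in Path(folder).rglob("*")
                  if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES)


def sample_pairs(contents: Sequence[T], styles: Sequence[U], n: int, seed: int = 0) -> List[Tuple[T, U]]:
    """Draw n (content, style) pairs uniformly at random, with replacement."""
    if not contents or not styles:
        raise ValueError("need at least one content image and one style image")
    rng = random.Random(seed)  # local RNG so each seed gives a reproducible sequence
    return [(rng.choice(contents), rng.choice(styles)) for _ in range(n)]
```

For example, `sample_pairs(list_images("data/coco"), list_images("data/wikiart"), n=8)` would return eight pairs, where both folder paths are placeholders for wherever the datasets were downloaded.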

@inproceedings{chen20wacv,
  title = {Self-Contained Stylization via Steganography for Reverse and Serial Style Transfer},
  author = {Hung-Yu Chen and I-Sheng Fang and Chia-Ming Cheng and Wei-Chen Chiu},
  booktitle = {IEEE Winter Conference on Applications of Computer Vision (WACV)},
  year = {2020}
}

Part of the code is based on pytorch-AdaIN.
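pytorch-AdaIN implements adaptive instance normalization (AdaIN), which aligns the per-channel mean and standard deviation of content features x with those of style features y: AdaIN(x, y) = σ(y) · (x - μ(x)) / σ(x) + μ(y). A dependency-free sketch on plain Python lists follows. The real code operates on PyTorch feature tensors, and the variance and `eps` conventions used here are simplifications that may differ from pytorch-AdaIN.

```python
import math
from typing import List, Tuple

Channel = List[float]  # one flattened feature-map channel


def _mean_std(x: Channel, eps: float) -> Tuple[float, float]:
    """Mean and sqrt(population variance + eps) of one channel."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return mean, math.sqrt(var + eps)


def adain(content: List[Channel], style: List[Channel], eps: float = 1e-5) -> List[Channel]:
    """Per channel: normalize the content features, then apply the style's mean and std."""
    if len(content) != len(style):
        raise ValueError("content and style must have the same number of channels")
    out = []
    for c, s in zip(content, style):
        c_mean, c_std = _mean_std(c, eps)
        s_mean, s_std = _mean_std(s, eps)
        out.append([s_std * (v - c_mean) / c_std + s_mean for v in c])
    return out
```

For a single channel, `adain([[1.0, 2.0, 3.0, 4.0]], [[10.0, 20.0, 30.0, 40.0]])` returns values very close to `[10.0, 20.0, 30.0, 40.0]`: the content keeps its relative structure but takes on the style's statistics.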