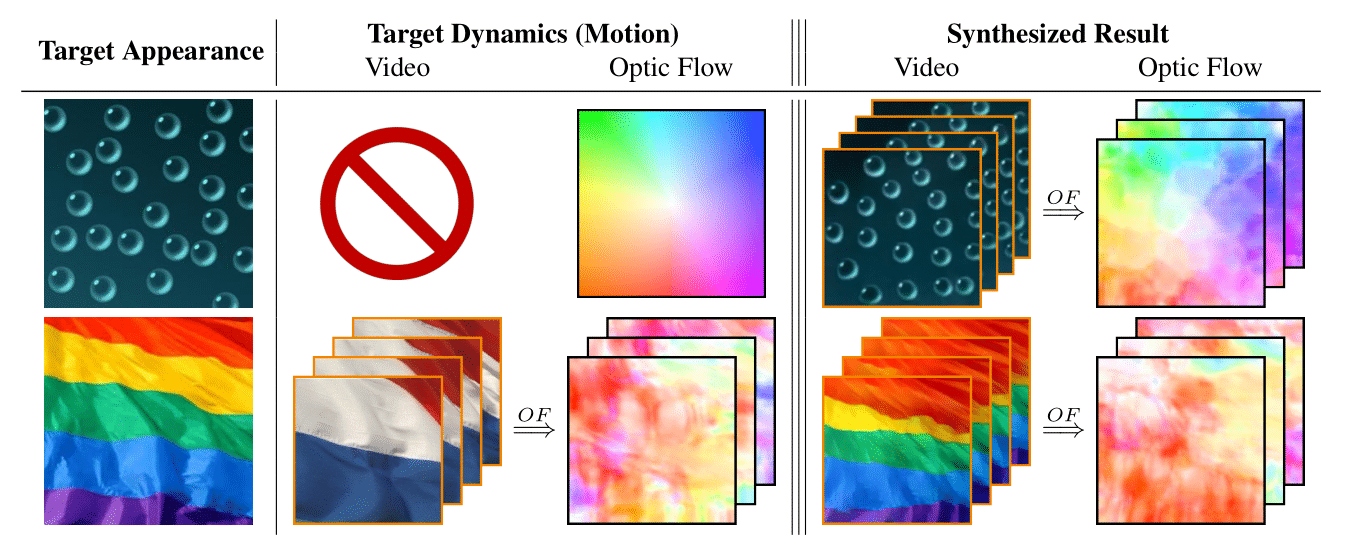

This is the official implementation of DyNCA, a framework for real-time and controllable dynamic texture synthesis. Our model learns to synthesize dynamic texture videos such that:

- The frames of the video resemble a given target appearance

- The succession of the frames induces the motion of the target dynamics, which can be either a video or a motion vector field

DyNCA can learn the target motion either from a video or from a motion vector field. The table below lists the corresponding notebooks for each training mode.

| Target Motion | Colab Notebook | Jupyter Notebook |
|---|---|---|
| VectorField | vector_field_motion.ipynb | |
| Video | video_motion.ipynb | |
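DyNCA is based on neural cellular automata (NCA), as the title of the paper cited below indicates. To show how a learned local rule can produce video frames, here is a minimal NumPy sketch of a *generic* NCA update step in the style of earlier NCA texture work. Each pixel is a cell with a state vector. Cells perceive their 3x3 neighbourhood through fixed filters, and a shared MLP then proposes a residual update. The filter set, the names `perceive` and `nca_step`, and all sizes are illustrative assumptions. DyNCA's actual architecture and losses are described in the paper and the repository code, and they may differ.

```python
import numpy as np

# Fixed 3x3 perception filters common in the NCA literature (an assumption here,
# not necessarily the filters DyNCA uses).
IDENTITY = np.array([[0, 0, 0], [0, 1, 0], [0, 0, 0]], dtype=np.float32)
SOBEL_X = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=np.float32) / 8.0
SOBEL_Y = SOBEL_X.T.copy()
LAPLACIAN = np.array([[1, 2, 1], [2, -12, 2], [1, 2, 1]], dtype=np.float32) / 16.0


def depthwise_conv3x3(state, kernel):
    """Apply one 3x3 kernel to every channel of an (H, W, C) grid, wrapping at the borders."""
    out = np.zeros_like(state)
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            weight = kernel[dy + 1, dx + 1]
            if weight != 0.0:
                # Rolling by (-dy, -dx) moves the neighbour at (y+dy, x+dx) onto (y, x).
                out += weight * np.roll(state, shift=(-dy, -dx), axis=(0, 1))
    return out


def perceive(state):
    """Stack identity, gradient and Laplacian responses: (H, W, C) -> (H, W, 4C)."""
    filters = (IDENTITY, SOBEL_X, SOBEL_Y, LAPLACIAN)
    return np.concatenate([depthwise_conv3x3(state, k) for k in filters], axis=-1)


def nca_step(state, w1, b1, w2, fire_rate=0.5, rng=None):
    """One stochastic NCA update: every cell runs the same small MLP on its perception vector."""
    rng = np.random.default_rng() if rng is None else rng
    hidden = np.maximum(perceive(state) @ w1 + b1, 0.0)  # (H, W, hidden), ReLU
    delta = hidden @ w2                                   # (H, W, C) residual update
    # Each cell is updated independently with probability fire_rate.
    mask = rng.random(state.shape[:2] + (1,)) < fire_rate
    return state + delta * mask


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    height, width, channels, hidden_dim = 64, 64, 12, 96
    state = rng.random((height, width, channels)).astype(np.float32)
    w1 = rng.normal(0.0, 0.1, (4 * channels, hidden_dim)).astype(np.float32)
    b1 = np.zeros(hidden_dim, dtype=np.float32)
    w2 = rng.normal(0.0, 0.01, (hidden_dim, channels)).astype(np.float32)
    frames = []
    for _ in range(8):
        state = nca_step(state, w1, b1, w2, rng=rng)
        frames.append(state[..., :3])  # read the first 3 channels as RGB
    print(len(frames), frames[0].shape)
```

In this sketch, each call to `nca_step` advances every cell by one step, and the first three channels of successive states are read out as successive frames. Training would adjust `w1`, `b1` and `w2` so that those frames match the target appearance and motion.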

If you would like to train DyNCA in a local environment, please follow the steps outlined below.

- System Requirements
  - Python 3.8
  - CUDA 11
  - GPU with at least 12 GB of memory

- Dependencies
  - Install PyTorch using the command from the official installation instructions. We have tested on PyTorch 1.10.0+cu113.
  - Install the other dependencies by running the following command:

    ```bash
    pip install -r requirements.txt
    ```
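Before training, it can help to confirm that the environment matches the requirements listed above. The sketch below is a hypothetical helper (`check_environment` is not part of the repository). It checks the Python version, whether PyTorch is installed and can see a CUDA device, which CUDA version PyTorch was built with, and how much memory GPU 0 has. Treating the listed versions as minimums and reading "12 GB" as decimal gigabytes are assumptions.

```python
import sys

# Values taken from the requirements above; treating them as minimums is an assumption.
MIN_PYTHON = (3, 8)
EXPECTED_CUDA_MAJOR = "11"
MIN_GPU_MEM_GB = 12


def check_environment():
    """Return a list of warning strings; an empty list means every check passed."""
    warnings = []
    if sys.version_info[:2] < MIN_PYTHON:
        warnings.append(
            f"Python {sys.version_info.major}.{sys.version_info.minor} is older than 3.8"
        )
    try:
        import torch
    except ImportError:
        warnings.append("PyTorch is not installed")
        return warnings
    if not torch.cuda.is_available():
        warnings.append("PyTorch cannot see a CUDA device")
        return warnings
    # torch.version.cuda is the CUDA version PyTorch was built against, e.g. "11.3".
    cuda = torch.version.cuda or ""
    if cuda.split(".")[0] != EXPECTED_CUDA_MAJOR:
        warnings.append(f"PyTorch was built with CUDA {cuda or 'unknown'}, not CUDA 11")
    # total_memory is reported in bytes; dividing by 1e9 gives decimal gigabytes.
    mem_gb = torch.cuda.get_device_properties(0).total_memory / 1e9
    if mem_gb < MIN_GPU_MEM_GB:
        warnings.append(f"GPU 0 has {mem_gb:.1f} GB of memory, less than {MIN_GPU_MEM_GB} GB")
    return warnings


if __name__ == "__main__":
    for message in check_environment() or ["All checks passed"]:
        print(message)
```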

- Vector Field Motion

  ```bash
  python fit_vector_field_motion.py --target_appearance_path /path/to/appearance-image --motion_vector_field_name "circular"
  ```

  See `utils/loss/vector_field_loss.py` and our supplementary materials for the other available vector field names.

- Video Motion

  ```bash
  python fit_video_motion.py --target_dynamics_path /path/to/target-dynamic-video --target_appearance_path /path/to/appearance-image-or-video
  ```

  We provide some sample videos under `data/VideoMotion/Motion`.
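The vector field motion mode uses a named target field such as `"circular"`. As a rough illustration of what such a field contains, the sketch below defines a hypothetical `circular_vector_field` helper. It assigns each pixel a unit direction tangent to a circle around the image centre. This is an assumption for illustration only. The repository's actual definitions, including the coordinate convention, orientation and scaling, are in `utils/loss/vector_field_loss.py` and may differ.

```python
import numpy as np


def circular_vector_field(height, width, normalize=True):
    """Hypothetical sketch: per-pixel (vx, vy) directions circulating around the image centre.

    Returns an array of shape (height, width, 2). The centre pixel of an odd-sized grid
    gets a zero vector because the rotation direction is undefined there.
    """
    # Pixel coordinates mapped to [-1, 1], with rows indexing y and columns indexing x.
    ys, xs = np.meshgrid(
        np.linspace(-1.0, 1.0, height), np.linspace(-1.0, 1.0, width), indexing="ij"
    )
    # Rotating the position vector (x, y) by 90 degrees gives the tangent (-y, x).
    # Whether this looks clockwise on screen depends on the y-axis convention.
    field = np.stack([-ys, xs], axis=-1)
    if normalize:
        norm = np.linalg.norm(field, axis=-1, keepdims=True)
        field = field / np.maximum(norm, 1e-8)  # avoid division by zero at the centre
    return field


if __name__ == "__main__":
    field = circular_vector_field(5, 5)
    print(field.shape)
    print(field[0, 2])  # direction at the middle of the top row
```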

After training is completed, the results in the result folder can be visualized with the Streamlit app in the `apps` folder:

```bash
cd apps
streamlit run visualize_trained_models.py
```

If you make use of our work, please cite our paper:

```bibtex
@InProceedings{pajouheshgar2022dynca,
  title     = {DyNCA: Real-Time Dynamic Texture Synthesis Using Neural Cellular Automata},
  author    = {Pajouheshgar, Ehsan and Xu, Yitao and Zhang, Tong and S{\"u}sstrunk, Sabine},
  booktitle = {Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
  year      = {2023},
}
```

This work is licensed under a Creative Commons Attribution-NonCommercial-ShareAlike 4.0 International License.