Short introduction to project assignment.

This work was done by Daahir Ali, Louis Tschall, and Konstantin Krause during the IWI276 Autonome Systeme Labor at the Karlsruhe University of Applied Sciences (Hochschule Karlsruhe - Technik und Wirtschaft) in SS 2020.

- Python 3.6 (or above)

- OpenCV 4.0 (or above)

- Jetson Nano

- Jetpack 4.2

- Install requirements:

Installation in virtual environment:

- Pytorch

wget https://nvidia.box.com/shared/static/3ibazbiwtkl181n95n9em3wtrca7tdzp.whl -O torch-1.5.0-cp36-cp36m-linux_aarch64.whl

sudo apt-get install python3-pip libopenblas-base

pip install Cython

pip install numpy torch-1.5.0-cp36-cp36m-linux_aarch64.whl

- Torchvision

sudo apt-get install libjpeg-dev zlib1g-dev

git clone --branch v0.6.0 https://github.com/pytorch/vision torchvision

cd torchvision

sudo python setup.py install

cd ../

- torch2trt

Follow the manual at https://github.com/NVIDIA-AI-IOT/torch2trt#setup

- Face_Recognition (https://github.com/ageitgey/face_recognition)

pip install face_recognition
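After the installation steps, it can help to confirm that the packages are visible to the active virtual environment. The following is a minimal sketch, not part of the repository: the module list is an assumption based on the requirements named above (`cv2` is the import name of OpenCV), and the helper `find_missing` is hypothetical.

```python
import importlib.util

# Import names assumed for the packages listed above; cv2 is OpenCV's Python module.
REQUIRED_MODULES = ["cv2", "torch", "torchvision", "torch2trt", "face_recognition"]


def find_missing(modules):
    """Return the names in `modules` that cannot be located for import."""
    return [name for name in modules if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    missing = find_missing(REQUIRED_MODULES)
    if missing:
        print("Missing packages:", ", ".join(missing))
    else:
        print("All required packages were found.")
```

The check only looks the modules up and does not import them, so it runs quickly even on the Jetson Nano. It does not verify versions or CUDA support.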


- Convert pretrained model

- Conversion

python convert2trt.py <mode> <source> <target>

- Info: the pipeline expects the converted model to be located under './models/resnet50.224.trt.pth'. If needed, this has to be changed in face_expression_recognition.py.
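Since the pipeline relies on the converted model being at the expected location, a small check before starting can give a clearer error than a failed load. This is only a sketch under assumptions: the helper `resolve_model_path` is hypothetical and does not exist in the repository, and only the default path is taken from the note above.

```python
from pathlib import Path

# Default location taken from the note above.
DEFAULT_MODEL_PATH = Path("./models/resnet50.224.trt.pth")


def resolve_model_path(path=DEFAULT_MODEL_PATH):
    """Return the model path if the file exists, otherwise raise with a hint."""
    path = Path(path)
    if not path.is_file():
        raise FileNotFoundError(
            f"Converted model not found at '{path}'. "
            "Run convert2trt.py first or adjust the path in "
            "face_expression_recognition.py."
        )
    return path
```

Calling `resolve_model_path()` without arguments checks the default location relative to the current working directory, so it should be run from the repository root.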

The pre-trained model is available at pretrained-models.

The converted pretrained model is stored as a 7-Zip archive because of GitHub's 100 MB upload limit and has to be extracted first.

This can be done with

7z x archive.7z.001

The converted pretrained model resnet50.224.trt.pth may or may not work on your machine, but in any case a self-converted model is significantly faster than this one.

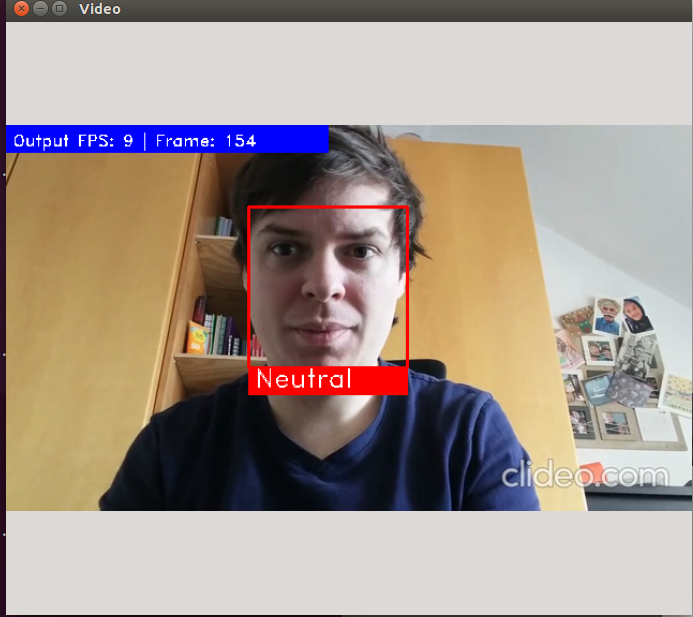

The pipeline can be run with

python pipeline_main.py <source>

Available sources are, for example:

- Camera-Index (e.g. 0)

- IP-Camera (as URL)

- Video (path, e.g. mp4)
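The three kinds of sources listed above can be told apart from the command-line string alone. The sketch below shows one possible way to do this; the function `parse_source` is hypothetical, and how pipeline_main.py actually handles its argument is not shown here.

```python
def parse_source(arg):
    """Map a command-line source argument to a capture source.

    Digit-only strings become integer camera indices; anything else
    (an IP camera URL or a video file path) is passed through as a string.
    """
    arg = arg.strip()
    return int(arg) if arg.isdecimal() else arg
```

The result can be handed to OpenCV's `cv2.VideoCapture`, which accepts either a device index or a file name or stream URL. For example, `parse_source("0")` selects the first camera, while `parse_source("video.mp4")` keeps the path unchanged.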

The file config.yml contains settings that can be adjusted to improve performance or accuracy.

This repo is based on

Thanks to the original authors for their work!

Please email mickael.cormier AT iosb.fraunhofer.de or tschalllouis AT-Sign gmail.com for further questions.