A face recognition solution for mobile devices.

- Anaconda (optional but recommended)

- MXNet and GluonCV (the easiest way to install)

- DLib (may be deprecated in the future)

The easiest way to install DLib is through pip.

pip install dlib| Model | Framework | Size | CPU | LFW | Target |

|---|---|---|---|---|---|

| MobileFace_Identification_V1 | MXNet | 3.40M | 8.5ms | - | Actual Scene |

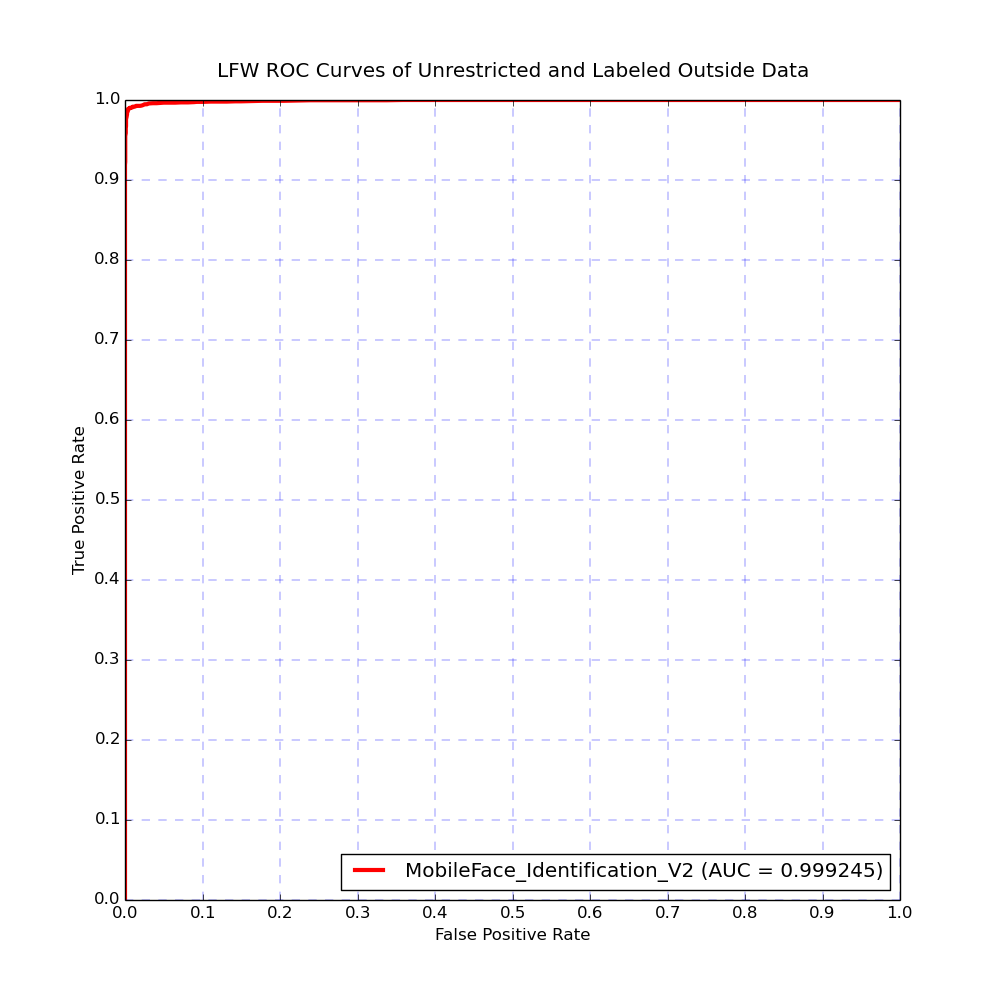

| MobileFace_Identification_V2 | MXNet | 3.41M | 9ms | 99.653% | Benchmark |

| 🌟MobileFace_Identification_V3 | MXNet | 2.10M | 💥3ms(sota) | 95.466%(baseline) | Benchmark |

| Model | Framework | Size | CPU |
|---|---|---|---|
| MobileFace_Detection_V1 | MXNet/GluonCV | 30M | 20ms/50fps |

| Model | Framework | Size | CPU |
|---|---|---|---|
| MobileFace_Landmark_V1 | DLib | 5.7M | <1ms |

| Model | Framework | Size | CPU |
|---|---|---|---|
| MobileFace_Pose_V1 | free | <1K | <0.1ms |

To get a fast face feature embedding with MXNet, run:

cd example

python get_face_feature_mxnet.py

To get a fast face detection result with MXNet/GluonCV, run:

cd example

python get_face_boxes_gluoncv.py

To get a fast face landmark result with dlib, run:

cd example

python get_face_landmark_dlib.pyTo get fast face pose result as follow:

cd example

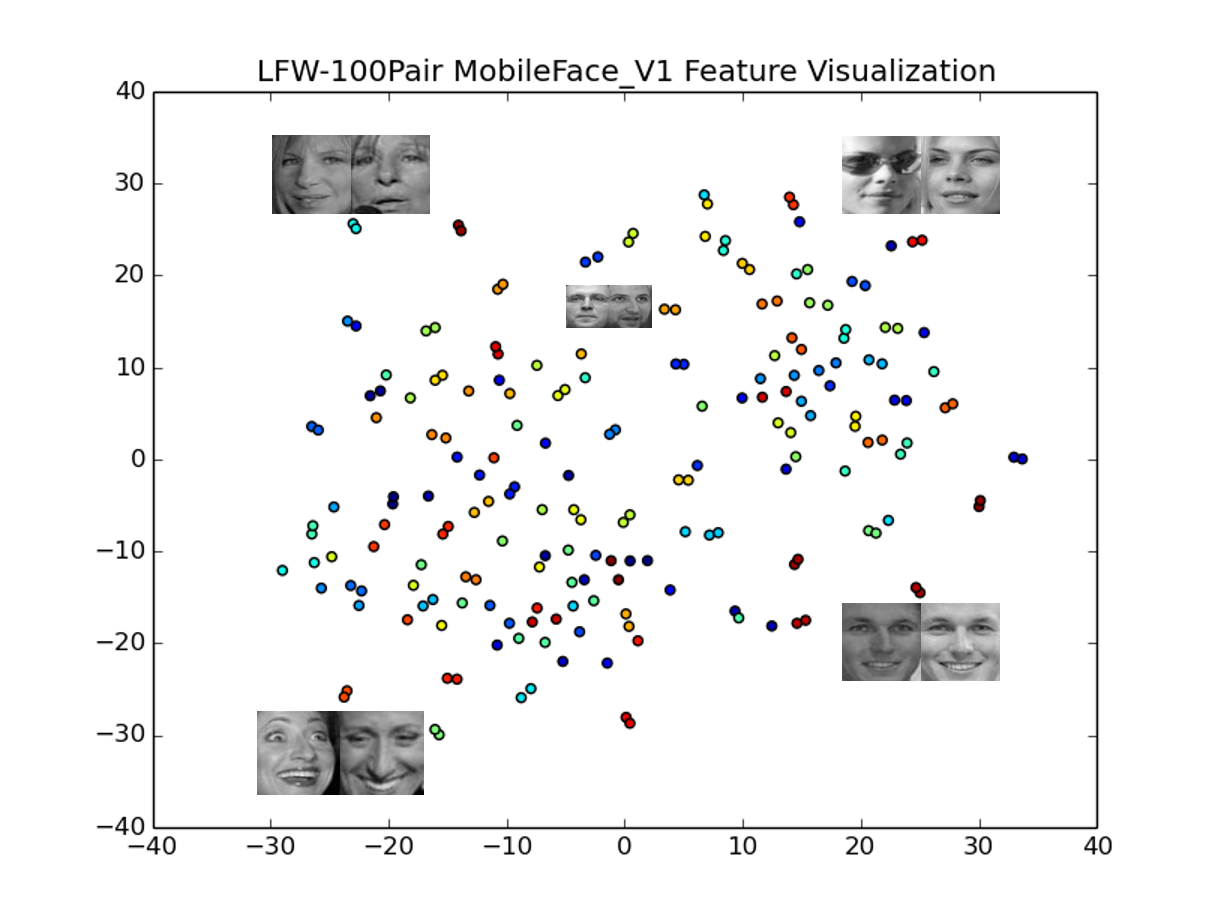

python get_face_pose.pyI used the t-SNE algorithm to visualize in two dimensions the 256-dimensional embedding space. Every color corresponds to a different person(but colors are reused): as you can see, the MobileFace has learned to group those pictures quite tightly. (the distances between clusters are meaningless when using the t-SNE algorithm)
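As a rough illustration of the projection step, here is a minimal sketch that runs t-SNE on synthetic 256-dimensional vectors. It is not the repository's script: the use of scikit-learn, the helper names `make_fake_embeddings` and `project_tsne`, and the random data are all assumptions made for the example.

```python
import numpy as np
from sklearn.manifold import TSNE


def make_fake_embeddings(n_people=5, per_person=12, dim=256, seed=0):
    """Synthetic stand-in for face features: one noisy cluster per person."""
    rng = np.random.default_rng(seed)
    centers = rng.normal(size=(n_people, dim))
    labels = np.repeat(np.arange(n_people), per_person)
    features = centers[labels] + 0.1 * rng.normal(size=(labels.size, dim))
    return features.astype(np.float32), labels


def project_tsne(features, perplexity=10.0, seed=0):
    """Project (n, dim) features to (n, 2). Perplexity must be below n."""
    tsne = TSNE(n_components=2, perplexity=perplexity, init="pca",
                random_state=seed)
    return tsne.fit_transform(features)


# Hypothetical data: 5 people x 12 photos, 256 dimensions each.
features, labels = make_fake_embeddings()
points_2d = project_tsne(features)
print(points_2d.shape)  # (60, 2)
```

The resulting 2D points can be passed to any scatter plot, colored by `labels`. As noted above, only the tightness of each cluster is informative, not the spacing between clusters.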

To reproduce the t-SNE feature visualization above, run:

cd tool/tSNE

python face2feature.py # get features and lables and save them to txt

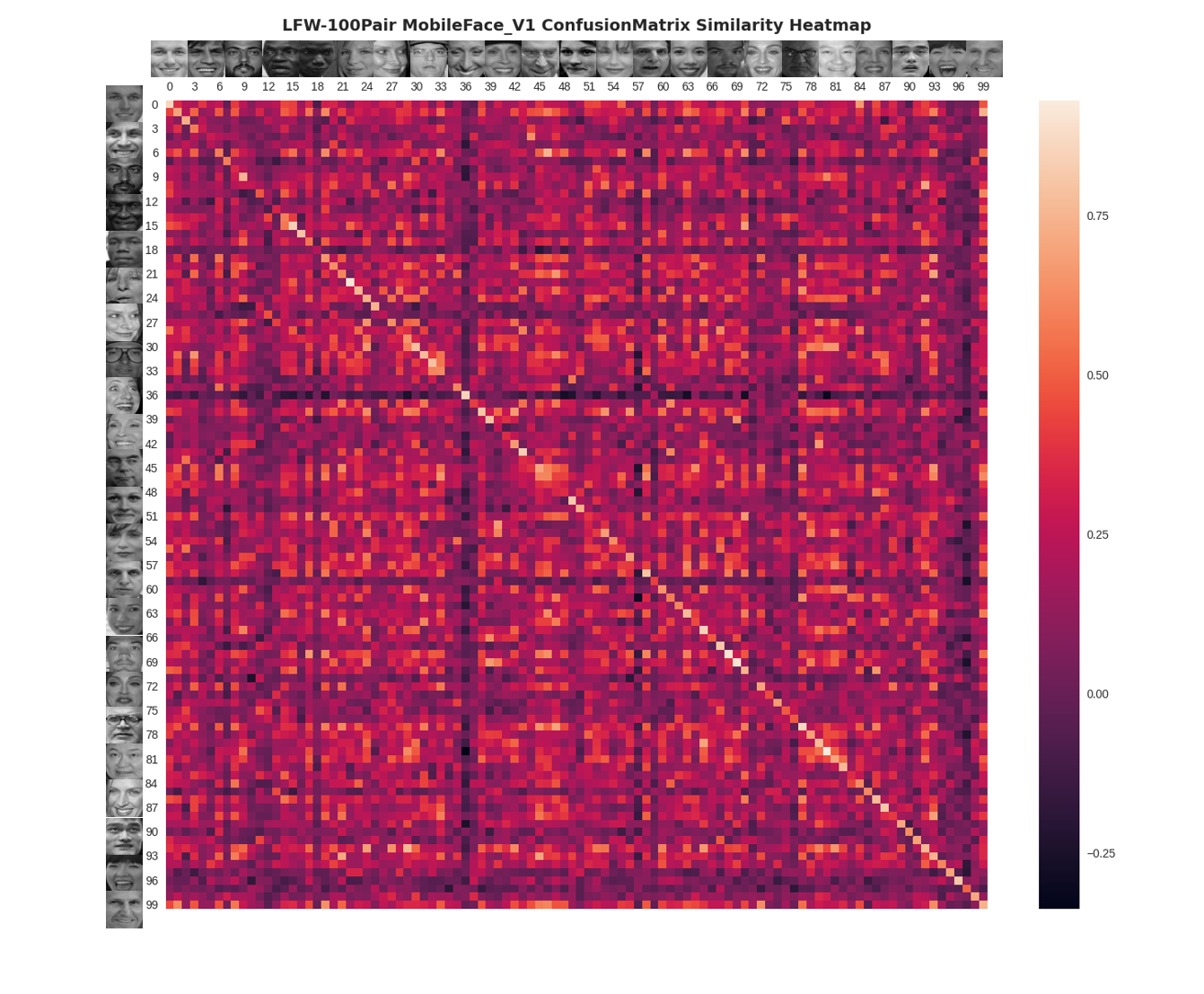

python tSNE_feature_visualization.py # load the txt to visualize face feature in 2D with tSNEI used the ConfusionMatrix to visualize the 256-dimensional feature similarity heatmap of the LFW-Aligned-100Pair: as you can see, the MobileFace has learned to get higher similarity when calculating the same person's different two face photos. Although the performance of the V1 version is not particularly stunning on LFW Dataset, it does not mean that it does not apply to the actual scene.
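The idea behind such a heatmap can be sketched with NumPy alone. The example below assumes cosine similarity as the measure (the text above does not say which one is used) and replaces real face features with random 256-dimensional vectors for 100 hypothetical pairs; `cosine_similarity_matrix` is an illustrative helper, not a function from this repository.

```python
import numpy as np


def cosine_similarity_matrix(a, b):
    """Return the (len(a), len(b)) matrix of cosine similarities."""
    a = a / np.linalg.norm(a, axis=1, keepdims=True)
    b = b / np.linalg.norm(b, axis=1, keepdims=True)
    return a @ b.T


# Hypothetical data: 100 identities, two noisy "photos" of each.
rng = np.random.default_rng(0)
identities = rng.normal(size=(100, 256))
photo_a = identities + 0.3 * rng.normal(size=identities.shape)
photo_b = identities + 0.3 * rng.normal(size=identities.shape)

sim = cosine_similarity_matrix(photo_a, photo_b)

# Diagonal entries compare the same person; off-diagonal entries do not.
same = np.diag(sim)
different = sim[~np.eye(len(sim), dtype=bool)]
print(same.mean() > different.mean())  # True
```

Rendering `sim` as an image (for example with matplotlib's `imshow`) gives a heatmap in which a bright diagonal indicates that same-person pairs score higher than different-person pairs.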

To reproduce the ConfusionMatrix feature similarity heatmap above, run:

cd tool/ConfusionMatrix

python ConfusionMatrix_similarity_visualization.py

To measure the inference time of the different MXNet model versions, run:

cd tool/time

python inference_time_evaluation_mxnet.py --symbol_version=V3 # default = V1

To reduce the model size for inference, prune the MXNet model by deleting unneeded layers (such as the classification and loss layers) and keeping only the feature layers:

cd tool/prune

python model_prune_mxnet.py

The LFW test dataset (aligned by MTCNN and cropped to 112x112) can be downloaded from Dropbox or BaiduDrive. Put the file (named lfw.bin) in the data/LFW-bin directory.
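Face verification on LFW is scored on pairs of images labeled as same person or different person, typically by thresholding a similarity score between the two embeddings. The sketch below shows how ROC points and a best-accuracy threshold can be derived from such scores. It uses synthetic scores, skips the cross-validation of the standard LFW protocol, and the helper `roc_curve_points` is hypothetical rather than taken from the benchmark script.

```python
import numpy as np


def roc_curve_points(scores, is_same, thresholds):
    """Return (fpr, tpr) arrays, one point per threshold."""
    scores = np.asarray(scores, dtype=float)
    is_same = np.asarray(is_same, dtype=bool)
    fpr, tpr = [], []
    for t in thresholds:
        accepted = scores >= t  # pairs predicted as "same person"
        tpr.append(np.mean(accepted[is_same]))
        fpr.append(np.mean(accepted[~is_same]))
    return np.array(fpr), np.array(tpr)


# Hypothetical similarity scores for 300 same and 300 different pairs.
rng = np.random.default_rng(0)
same_scores = rng.normal(0.7, 0.1, size=300)
diff_scores = rng.normal(0.1, 0.1, size=300)
scores = np.concatenate([same_scores, diff_scores])
is_same = np.concatenate([np.ones(300, dtype=bool), np.zeros(300, dtype=bool)])

thresholds = np.linspace(-1.0, 1.0, 201)
fpr, tpr = roc_curve_points(scores, is_same, thresholds)

# Verification accuracy at each threshold, and the best threshold found.
accuracy = np.array([np.mean((scores >= t) == is_same) for t in thresholds])
best_threshold = thresholds[int(np.argmax(accuracy))]
print(f"best threshold {best_threshold:.2f}, accuracy {accuracy.max():.3f}")
```

Plotting `tpr` against `fpr` gives the ROC curve. With real data, `scores` would come from comparing the embeddings of each LFW pair.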

To get the LFW comparison result and plot the ROC curves, run:

cd benchmark/LFW

python lfw_comparison_and_plot_roc.py

- MobileFace_Identification

- MobileFace_Detection

- MobileFace_Landmark

- MobileFace_Align

- MobileFace_Attribute

- MobileFace_Pose

- MobileFace_NCNN

- MobileFace_FeatherCNN

- Benchmark_LFW

- Benchmark_MegaFace

Coming Soon!