This repository contains the code for our ICCV 2021 paper, "Planar Surface Reconstruction from Sparse Views". The model is implemented in Detectron2.

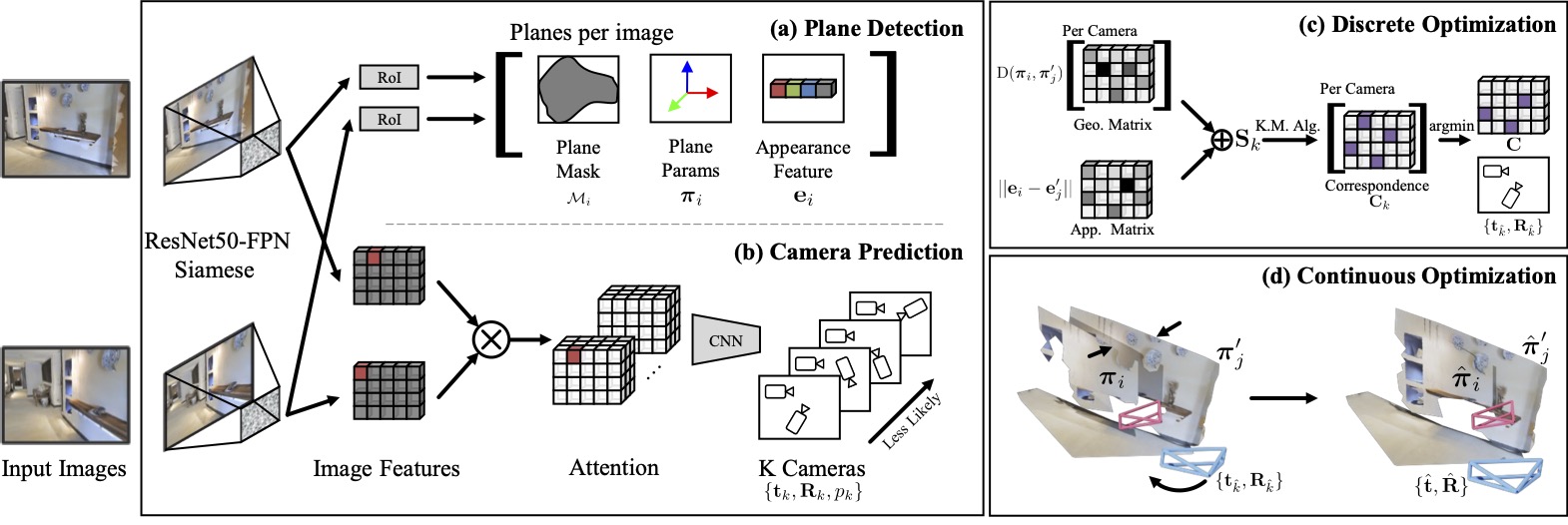

Given two RGB images with an unknown relationship, our system produces a single, coherent planar surface reconstruction of the scene in terms of 3D planes and relative camera poses.

We use a ResNet50-FPN to detect planes and predict probabilities of relative camera poses, then apply a two-step optimization to generate a coherent planar reconstruction. (a) For each plane, we predict a segmentation mask, plane parameters, and an appearance feature. (b) Concurrently, we pass image features from the detection backbone through an attention layer and predict the camera transformation between views. (c) Our discrete optimization fuses the predictions of the separate heads to select the best camera pose and plane correspondence. (d) Finally, we use continuous optimization to update the camera and plane parameters.
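To make step (c) more concrete, here is a minimal toy sketch of how a discrete selection over camera candidates and plane matches could look. It is not the code in this repo: the function names, the score (camera log-probability minus matching cost), and the `unmatched_cost` parameter are all assumptions made for illustration, and the matching is brute-forced over permutations, which is only practical for a handful of planes.

```python
import math
from itertools import permutations


def match_planes(cost, unmatched_cost):
    """Brute-force the cheapest one-to-one plane matching (toy sizes only).

    cost[i][j] is a hypothetical cost of matching plane i in view 1 to
    plane j in view 2 (e.g. appearance distance plus geometric disagreement).
    A view-1 plane may stay unmatched at a price of `unmatched_cost`.
    """
    n = len(cost)
    m = len(cost[0]) if n else 0
    best_total, best_pairs = math.inf, []
    # Column indices >= m are dummies meaning "leave this plane unmatched".
    for cols in permutations(range(m + n), n):
        total = sum(
            cost[i][j] if j < m else unmatched_cost for i, j in enumerate(cols)
        )
        if total < best_total:
            best_total = total
            best_pairs = [(i, j) for i, j in enumerate(cols) if j < m]
    return best_total, best_pairs


def select_camera_and_matches(camera_log_probs, plane_costs, unmatched_cost=1.0):
    """Pick the camera candidate whose log-probability minus matching cost is highest.

    plane_costs[k] is the plane cost matrix computed under camera candidate k.
    Returns (index of the chosen candidate, list of matched (i, j) plane pairs).
    """
    best = None
    for k, (log_p, cost) in enumerate(zip(camera_log_probs, plane_costs)):
        match_cost, pairs = match_planes(cost, unmatched_cost)
        score = log_p - match_cost
        if best is None or score > best[0]:
            best = (score, k, pairs)
    return best[1], best[2]


# Two camera candidates, two planes per view. Candidate 0 is more probable,
# but the planes only line up well under candidate 1.
camera_log_probs = [math.log(0.6), math.log(0.4)]
plane_costs = [
    [[0.9, 0.8], [0.7, 0.9]],
    [[0.1, 0.9], [0.8, 0.2]],
]
chosen_camera, matches = select_camera_and_matches(camera_log_probs, plane_costs)
print(chosen_camera, matches)
```

In this toy example the less probable camera candidate wins because the planes agree far better under it, which is the kind of trade-off that fusing the camera and plane predictions is meant to capture.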


- How to set up your environment?

- How to run inference on a pair of images?

- How to process the dataset?

- How to train your model?

- How to evaluate your model? (TODO)

If you find this code useful, please consider citing:

    @inproceedings{jin2021planar,
      title={Planar Surface Reconstruction from Sparse Views},
      author={Linyi Jin and Shengyi Qian and Andrew Owens and David F. Fouhey},
      booktitle={ICCV},
      year={2021}
    }

We thank Dandan Shan, Mohamed El Banani, Nilesh Kulkarni, and Richard Higgins for helpful discussions. Toyota Research Institute ("TRI") provided funds to assist the authors with their research, but this article solely reflects the opinions and conclusions of its authors and not TRI or any other Toyota entity.