Contains a collection of software packages for passive acoustic monitoring (PAM) of forest elephant rumbles.

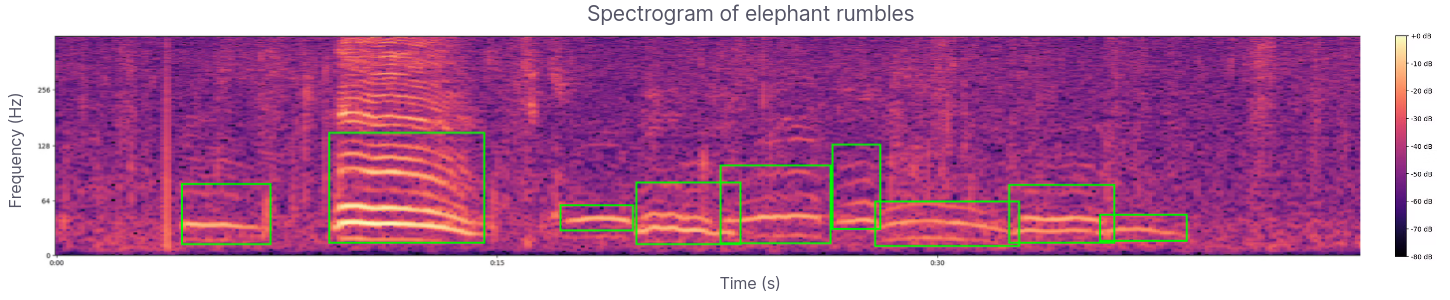

The low fundamental frequencies of elephant rumbles are located at the bottom of the spectrogram and typically range from 14-35 Hz. These are the primary frequencies of the rumbles. Harmonics, which are integer multiples of the fundamental frequencies, appear as several horizontal lines above the fundamental frequency. For example, if the fundamental frequency is 20 Hz, the harmonics will be at 40 Hz, 60 Hz, 80 Hz, and so on. These harmonic lines are spaced at regular intervals and are usually less intense (lighter) than the fundamental frequency.
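As a quick illustration of the harmonic spacing described above, the sketch below lists a fundamental frequency and its integer multiples up to an upper cutoff. The 250 Hz default is borrowed from the spectrogram range used by the pipeline further down; the function name `harmonics` is illustrative and not part of this repository.

```python
def harmonics(f0_hz: float, max_freq_hz: float = 250.0) -> list[float]:
    """Return f0 and its integer multiples up to max_freq_hz (inclusive).

    max_freq_hz defaults to 250 Hz, an assumption matching the
    0-250 Hz spectrogram band used by the detector pipeline.
    """
    if f0_hz <= 0:
        raise ValueError("f0_hz must be positive")
    result = []
    k = 1
    while k * f0_hz <= max_freq_hz:
        result.append(k * f0_hz)  # k-th harmonic (k = 1 is the fundamental)
        k += 1
    return result


# A 20 Hz fundamental: harmonics at 40, 60, 80 Hz, ... up to 240 Hz.
print(harmonics(20.0))
```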

Elephant rumbles are low-frequency vocalizations produced by elephants, primarily for communication. These rumbles are a fundamental part of elephant social interactions and serve various purposes within their groups. Here’s a detailed explanation:

- Low Frequency: The fundamental frequency of elephant rumbles often lies in or near the infrasound range (below 20 Hz), which is below the threshold of human hearing. However, some rumbles can also be heard by humans as a low, throaty sound.

- Long Distance Communication: Because of their low frequency, rumbles can travel long distances, sometimes several kilometers, allowing elephants to communicate with each other across vast areas, even when they are out of sight. They also propagate well through dense forest, because their wavelengths are long compared with the trunks and foliage in their path.

- Vocal Production: Rumbles are produced by the larynx and can vary in frequency, duration, and modulation. Elephants use different types of rumbles to convey different messages.

- Coordination and Social Bonding: Elephants use rumbles to maintain contact with members of their herd, coordinate movements, and reinforce social bonds. For example, a matriarch might use a rumble to lead her group to a new location.

- Reproductive Communication: Male elephants, or bulls, use rumbles to communicate their reproductive status and readiness to mate. Females also use rumbles to signal their estrus status to potential mates.

- Alarm and Distress Calls: Rumbles can signal alarm or distress, warning other elephants of potential danger. These rumbles can mobilize the herd and prompt protective behavior.

- Mother-Calf Communication: Mothers and calves use rumbles to stay in contact, especially when they are separated. Calves may rumble to signal hunger or distress, prompting a response from their mothers.

- Conservation Efforts: Understanding elephant communication helps conservation efforts by providing insights into their social structure, habitat needs, and responses to environmental changes.

- Human-Elephant Conflict Mitigation: By recognizing alarm rumbles, conservationists can better manage and mitigate conflicts between humans and elephants, especially in regions where their habitats overlap.

- Enhancing Animal Welfare: For elephants in captivity, understanding their rumbles can help caretakers improve their welfare by addressing their social and environmental needs more effectively.

Overall, elephant rumbles are a vital aspect of their complex communication system, reflecting the sophistication of their social interactions and the importance of acoustic signals in their daily lives.
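To put the long-wavelength argument in numbers: the wavelength of a sound is λ = c / f. Assuming a speed of sound in air of roughly 343 m/s (dry air at about 20 °C; this value is an assumption and varies with temperature and humidity), rumble fundamentals between 14 and 35 Hz have wavelengths of roughly 10 to 25 m. The helper below is a minimal sketch of that calculation.

```python
# Assumed speed of sound in dry air at ~20 °C, in m/s.
SPEED_OF_SOUND_M_S = 343.0


def wavelength_m(freq_hz: float, c: float = SPEED_OF_SOUND_M_S) -> float:
    """Wavelength in metres for a tone of freq_hz, using lambda = c / f."""
    if freq_hz <= 0:
        raise ValueError("freq_hz must be positive")
    return c / freq_hz


# Lower bound, a mid value, and upper bound of the typical rumble fundamental range.
for f in (14.0, 20.0, 35.0):
    print(f"{f:>5.1f} Hz -> {wavelength_m(f):5.1f} m")
```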

This work is a collaboration with The Elephant Listening Project and The Cornell Lab.

> To conserve the tropical forests of Africa through acoustic monitoring, sound science, and education, focusing on forest elephants.
>
> *The Elephant Listening Project*

Fifty microphones arranged in a grid in the tropical forest of Gabon record forest sounds around the clock. The provided software is a sound analyzer that processes these extensive audio recordings at high speed, making it possible to analyze terabytes of audio data in just a few days.

Once one has followed the setup section below, it is possible to test the rumble detector using the following command:

```sh
python ./scripts/model/yolov8/predict_raven.py \
  --input-dir-audio-filepaths ./data/08_artifacts/audio/rumbles/ \
  --output-dir ./data/05_model_output/yolov8/predict/ \
  --model-weights-filepath ./data/08_artifacts/model/rumbles/yolov8/weights/best.pt \
  --verbose \
  --loglevel "info"
```

The Rumble Detector is also available as a Docker image, ensuring portability across different operating systems and setups as long as Docker is installed.

To pull the Docker image, use the following command:

```sh
docker pull earthtoolsmaker/elephantrumbles-detector:latest
```

To run the Docker container, execute:

```sh
docker run --rm earthtoolsmaker/elephantrumbles-detector:latest
```

Note: By default, audio files and predictions are contained within the container, which limits its practical use. However, this command demonstrates the detector's functionality.

To analyze your own audio files and save the results to your machine, you need to mount two directories into the Docker container:

- input-dir-audio-filepaths: The directory containing the audio files to be analyzed.
- output-dir: The directory where the artifacts and predictions will be saved.

In the example below, the input-dir-audio-filepaths is `./data/03_model_input/sounds/rumbles/` and the output-dir is `./runs/predict/`. The program will analyze the audio files in the input directory and save the results in the output directory.

```sh
docker run --rm \
  -v ./data/03_model_input/sounds/rumbles/:/app/data/08_artifacts/audio/rumbles/ \
  -v ./runs/predict/:/app/data/05_model_output/yolov8/predict \
  earthtoolsmaker/elephantrumbles-detector:latest
```

The pipeline will do the following:

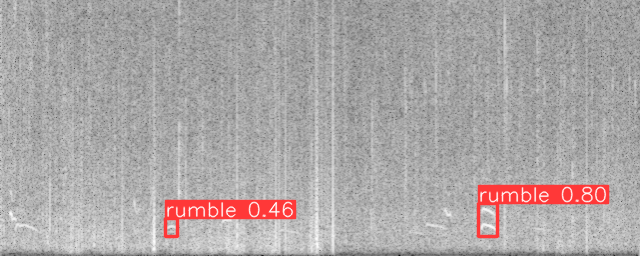

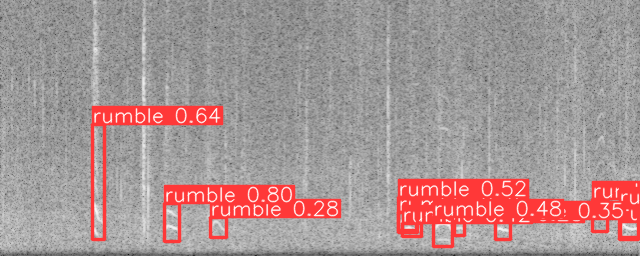

- Generate spectrograms in the frequency range 0-250 Hz, where all the elephant rumbles are located

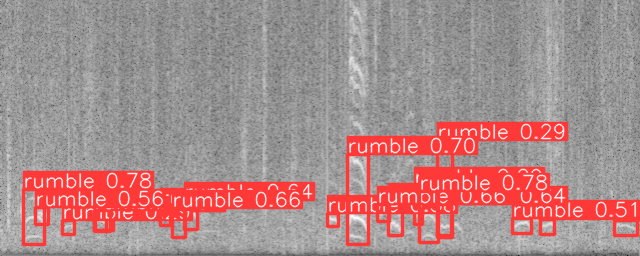

- Run the rumble object detector on batches of spectrograms

- Save the predictions as a CSV
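A minimal sketch of the spectrogram step, assuming a mono signal held in a NumPy array: a short-time Fourier transform with a Hann window, after which only the frequency bins between 0 and 250 Hz are kept. The window length, hop size, decibel scaling and the 4 kHz sample rate in the example are illustrative assumptions, not the parameters used by `predict_raven.py`; the repository's own implementation may differ.

```python
import numpy as np


def low_freq_spectrogram(
    signal: np.ndarray,
    sample_rate: int,
    n_fft: int = 4096,         # assumed window length (samples)
    hop: int = 1024,           # assumed hop size (samples)
    max_freq_hz: float = 250.0,
) -> tuple[np.ndarray, np.ndarray]:
    """Return (freqs, power_db) restricted to 0..max_freq_hz.

    power_db has shape (n_freq_bins, n_frames): low frequencies first.
    """
    n_frames = 1 + (len(signal) - n_fft) // hop
    if n_frames < 1:
        raise ValueError("signal is shorter than one FFT window")
    window = np.hanning(n_fft)
    # Stack overlapping, windowed frames: shape (n_frames, n_fft).
    frames = np.stack(
        [signal[i * hop : i * hop + n_fft] * window for i in range(n_frames)]
    )
    power = np.abs(np.fft.rfft(frames, axis=1)) ** 2
    freqs = np.fft.rfftfreq(n_fft, d=1.0 / sample_rate)
    keep = freqs <= max_freq_hz  # drop every bin above the rumble band
    power_db = 10.0 * np.log10(power[:, keep].T + 1e-12)  # epsilon avoids log(0)
    return freqs[keep], power_db


# Synthetic test signal: 60 s of a 20 Hz tone plus a 40 Hz harmonic,
# sampled at an assumed 4 kHz.
sr = 4000
t = np.arange(60 * sr) / sr
x = np.sin(2 * np.pi * 20 * t) + 0.5 * np.sin(2 * np.pi * 40 * t)
freqs, spec = low_freq_spectrogram(x, sr)
print(freqs.max(), spec.shape)
```

Cropping to the rumble band before running the detector keeps each spectrogram image small, which is one plausible reason the pipeline restricts itself to 0-250 Hz.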

Note: The `--verbose` flag tells the command to also persist the generated spectrograms and predictions; they will be located in the output-dir.

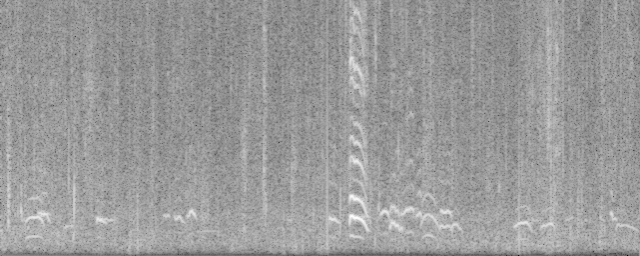

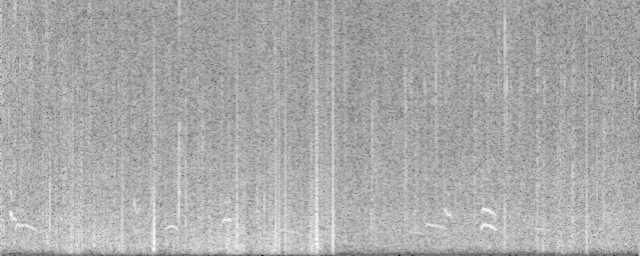

| Spectrogram | Prediction |

|---|---|

|

|

|

|

|

|

Below is a sample of a generated CSV file:

| probability | freq_start | freq_end | t_start | t_end | audio_filepath | instance_class |
|---|---|---|---|---|---|---|
| 0.7848126888275146 | 185.34618616104126 | 238.925039768219 | 6.117525324225426 | 11.526521265506744 | data/08_artifacts/audio/rumbles/sample_0.wav | rumble |
| 0.7789380550384521 | 187.46885657310486 | 237.14002966880798 | 107.4117157459259 | 112.39507365226746 | data/08_artifacts/audio/rumbles/sample_0.wav | rumble |
| 0.6963282823562622 | 150.82329511642456 | 238.47350478172302 | 89.08285737037659 | 94.3071436882019 | data/08_artifacts/audio/rumbles/sample_0.wav | rumble |
| 0.6579649448394775 | 203.18885147571564 | 231.6151112318039 | 44.13426876068115 | 47.50721764564514 | data/08_artifacts/audio/rumbles/sample_0.wav | rumble |
| ... | ... | ... | ... | ... | ... | ... |
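The predictions CSV can be post-processed with standard tooling. The sketch below uses only Python's `csv` module to keep detections above a probability threshold and to compute each box's duration and frequency span from the column names in the sample above (in whatever units the detector writes for those columns). The 0.7 threshold and the helper name `load_detections` are assumptions for illustration; the two sample rows are rounded from the table above. For a real file, pass `open(path, newline="")` instead of the in-memory sample.

```python
import csv
import io
from typing import Iterable


def load_detections(lines: Iterable[str], min_probability: float = 0.7) -> list[dict]:
    """Parse the predictions CSV, keeping rows with probability >= min_probability.

    min_probability is an assumed cut-off, not a value used by the project.
    """
    detections = []
    for row in csv.DictReader(lines):
        probability = float(row["probability"])
        if probability < min_probability:
            continue  # discard low-confidence boxes
        t_start, t_end = float(row["t_start"]), float(row["t_end"])
        f_start, f_end = float(row["freq_start"]), float(row["freq_end"])
        detections.append(
            {
                "audio_filepath": row["audio_filepath"],
                "probability": probability,
                "t_start": t_start,
                "duration": t_end - t_start,    # same unit as t_start/t_end
                "freq_span": f_end - f_start,   # same unit as freq_start/freq_end
            }
        )
    return detections


# Two rows rounded from the sample CSV above.
sample = io.StringIO(
    "probability,freq_start,freq_end,t_start,t_end,audio_filepath,instance_class\n"
    "0.78,185.3,238.9,6.1,11.5,data/08_artifacts/audio/rumbles/sample_0.wav,rumble\n"
    "0.66,203.2,231.6,44.1,47.5,data/08_artifacts/audio/rumbles/sample_0.wav,rumble\n"
)
for det in load_detections(sample):
    print(det["audio_filepath"], round(det["duration"], 2))
```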

The aim of this project is to enable the rapid analysis of large-scale audio datasets, potentially reaching terabyte scales. By leveraging multiprocessing and utilizing the maximum number of CPU and GPU cores, we strive to optimize processing speed and efficiency. Benchmark analyses have been conducted on both CPU and GPU to ensure optimal performance.

Processing a 24-hour audio file on an 8-core CPU takes approximately 35 seconds in total:

- Loading the audio file: ~4 seconds

- Generating spectrograms: ~11 seconds

- Running model inference: ~19 seconds

- Miscellaneous tasks: ~1 second

Processing a 24-hour audio file using a GPU (T4) and an 8-core CPU takes approximately 20 seconds in total:

- Loading the audio file: ~4 seconds

- Generating spectrograms: ~11 seconds

- Running model inference: ~4 seconds

- Miscellaneous tasks: ~1 second

- Number of sound recorders: $N_{sr} = 50$
- Number of days to analyze: $N_{d} = 30$ (1 month)
- Size of a 24-hour audio recording: $W = 657$ MB
- Amount of data to analyze: $N_{sr} \times N_{d} \times W \approx 986$ GB (1 month)
- Time to process a 24-hour audio file with a CPU: $T_{CPU} = 35$ s
- Time to process a 24-hour audio file with a GPU: $T_{GPU} = 20$ s

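On a CPU setup with 8 cores, analyzing 1 month of sound data (~1 TB) would require:

$N_{sr} \times N_{d} \times T_{CPU} = 50 \times 30 \times 35 \text{ s} = 52\,500 \text{ s} \approx 14.6 \text{ h}$

To analyze 6 months of sound data (~6 TB), it would require:

$6 \times N_{sr} \times N_{d} \times T_{CPU} = 315\,000 \text{ s} \approx 87.5 \text{ h} \approx 3.6 \text{ days}$

On a GPU setup (T4) with 8 CPU cores, analyzing 1 month of sound data would require:

$N_{sr} \times N_{d} \times T_{GPU} = 50 \times 30 \times 20 \text{ s} = 30\,000 \text{ s} \approx 8.3 \text{ h}$

To analyze 6 months of sound data (~6 TB), it would require:

$6 \times N_{sr} \times N_{d} \times T_{GPU} = 180\,000 \text{ s} = 50 \text{ h} \approx 2.1 \text{ days}$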
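The same back-of-the-envelope estimate can be scripted to try other deployments (more recorders, longer campaigns, different hardware). This sketch simply multiplies recorders by days by the per-file figure; like the estimate above, it assumes the 24-hour files are processed one after another on a single machine and ignores I/O and download time. The `Deployment` class and `estimate` function are hypothetical names, not part of the repository.

```python
from dataclasses import dataclass


@dataclass
class Deployment:
    n_recorders: int          # N_sr
    n_days: int               # N_d
    file_size_mb: float       # W, size of one 24-hour recording
    seconds_per_file: float   # T_CPU or T_GPU, time to process one 24-hour file


def estimate(d: Deployment) -> tuple[float, float]:
    """Return (data_volume_gb, processing_hours).

    Assumes one 24-hour file per recorder per day, processed sequentially.
    """
    n_files = d.n_recorders * d.n_days
    data_gb = n_files * d.file_size_mb / 1000.0
    hours = n_files * d.seconds_per_file / 3600.0
    return data_gb, hours


# Reproduce the CPU/GPU scenarios above: 50 recorders, 1 and 6 months of 30 days.
for label, seconds in (("CPU", 35.0), ("GPU", 20.0)):
    for months in (1, 6):
        gb, h = estimate(Deployment(50, 30 * months, 657.0, seconds))
        print(f"{label}, {months} month(s): {gb:,.0f} GB, {h:.1f} h ({h / 24:.1f} days)")
```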

- Poetry: Python packaging and dependency management. Install it with something like pipx.
- Git LFS: Git Large File Storage replaces large files such as Jupyter notebooks with text pointers inside Git while storing the file contents on a remote server like github.com.
- DVC: Data Version Control. This will get installed automatically.
- MLflow: ML experiment tracking. This will get installed automatically.

Follow the official documentation to install Poetry.

Make sure git-lfs is installed on your system. Run the following command to check:

```sh
git lfs install
```

If it is not installed, you can install it as follows.

On Linux (Debian/Ubuntu):

```sh
sudo apt install git-lfs
git-lfs install
```

On macOS (Homebrew):

```sh
brew install git-lfs
git-lfs install
```

On Windows: download and run the latest Windows installer.

Create a virtual environment and install the Python version with conda (or use a combination of pyenv and venv):

```sh
conda create -n pyronear-mlops python=3.12
```

Activate the virtual environment:

```sh
conda activate pyronear-mlops
```

Install the Python dependencies:

```sh
poetry install
```

The project is organized mostly following the cookie-cutter-datascience guideline.

All the data lives in the `data` folder and follows some data engineering conventions.

The library code is available under the `src/forest_elephants_rumble_detection` folder.

The notebooks live in the `notebooks` folder. They are automatically synced to the Git LFS storage.

Please follow this convention to name your notebooks: `<step>-<ghuser>-<description>.ipynb`, e.g., `0.3-mateo-visualize-distributions.ipynb`.
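If you want to check notebook names automatically (for instance in a pre-commit hook), a regular expression is enough. This is a sketch under assumptions that the convention itself does not state: the step is a dotted number such as `0.3`, the GitHub user name contains no hyphens, and the description is lowercase words separated by hyphens. `parse_notebook_name` is a hypothetical helper.

```python
import re
from typing import Optional

# Assumed pattern for <step>-<ghuser>-<description>.ipynb
NOTEBOOK_NAME = re.compile(
    r"^(?P<step>\d+(?:\.\d+)?)"                      # step, e.g. 0.3
    r"-(?P<ghuser>[A-Za-z0-9]+)"                      # GitHub user, assumed hyphen-free
    r"-(?P<description>[a-z0-9]+(?:-[a-z0-9]+)*)"     # lowercase, hyphen-separated words
    r"\.ipynb$"
)


def parse_notebook_name(filename: str) -> Optional[dict[str, str]]:
    """Return the name's components, or None if it does not follow the convention."""
    match = NOTEBOOK_NAME.match(filename)
    return match.groupdict() if match else None


print(parse_notebook_name("0.3-mateo-visualize-distributions.ipynb"))
print(parse_notebook_name("visualize.ipynb"))
```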

The scripts live in the `scripts` folder; they are commonly CLI interfaces to the library code.

DVC is used to track and define data pipelines and make them reproducible. See `dvc.yaml`.

To get an overview of the pipeline DAG:

```sh
dvc dag
```

To run the full pipeline:

```sh
dvc repro
```

An MLflow server is used when running ML experiments to track hyperparameters and performance metrics and to streamline model selection.

To start the MLflow UI server, run the following command:

```sh
make mlflow_start
```

To stop the MLflow UI server, run the following command:

```sh
make mlflow_stop
```

To browse the different runs, open your browser and navigate to the URL: http://localhost:5000