- Python >= 3.10

- pip >= 22.2.2

- docker

- docker-compose >= 2.10.2
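The requirements above can be checked before cloning. The following is a minimal sketch, not part of the repository: the helper names (`parse_version`, `meets_minimum`, `check_tool`) are hypothetical, and it assumes each tool prints its version in response to `--version`.

```python
import re
import shutil
import subprocess
import sys

# Minimum versions copied from the list above; "docker" has no stated minimum.
MINIMUMS = {"pip": (22, 2, 2), "docker-compose": (2, 10, 2)}


def parse_version(text):
    """Return the first dotted version number in `text` as a tuple of ints."""
    match = re.search(r"\d+(?:\.\d+)+", text)
    if match is None:
        raise ValueError(f"no version number found in {text!r}")
    return tuple(int(part) for part in match.group(0).split("."))


def meets_minimum(version_output, minimum):
    """Compare the version printed by `<tool> --version` with a minimum."""
    return parse_version(version_output) >= minimum


def check_tool(name, minimum=None):
    """Return "missing", "too old", or "ok" for a command-line tool."""
    if shutil.which(name) is None:
        return "missing"
    if minimum is None:
        return "ok"
    result = subprocess.run([name, "--version"], capture_output=True, text=True)
    return "ok" if meets_minimum(result.stdout, minimum) else "too old"


if __name__ == "__main__":
    print("python:", "ok" if sys.version_info >= (3, 10) else "too old")
    print("docker:", check_tool("docker"))
    for tool, minimum in MINIMUMS.items():
        print(f"{tool}:", check_tool(tool, minimum))
```

Versions are compared as integer tuples rather than strings, so `2.10.2` correctly ranks above `2.9.0`.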

`git clone https://github.com/Illumaria/airflow-etl.git`

`cd airflow-etl`

- Get `API_KEY` and `API_SECRET` for https://live-score-api.com/.

- Either set them as environment variables:

  `export API_KEY=<your_api_key>`

  `export API_SECRET=<your_api_secret>`

  or put them in a `.env` file.

- Depending on the method selected in the previous step, run one of the following commands.

  `docker-compose up --build -d`

  or

  `docker-compose --env-file <path_to_.env_file> up --build -d`

  If Docker only runs with `sudo` on your machine, remember that `sudo` does not pass your environment variables through by default, so add the `-E` flag to the commands above:

  `sudo -E docker-compose ...`

- Once all the services are up and running, go to https://localhost:8080 (use `airflow` for both username and password), then unpause all DAGs and run them for the first time.
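If you took the `.env` route in the steps above, it can help to confirm that the file defines both keys before starting the services. This is a rough sketch with hypothetical helper names (`parse_env`, `missing_keys`). It handles only plain `KEY=VALUE` lines and `#` comments, which is a simplification of the syntax `docker-compose` accepts.

```python
import sys
from pathlib import Path

# Keys named in the setup steps above.
REQUIRED_KEYS = ("API_KEY", "API_SECRET")


def parse_env(text):
    """Parse simple KEY=VALUE lines, skipping blanks and # comments."""
    values = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        # Drop surrounding whitespace and optional quotes around the value.
        values[key.strip()] = value.strip().strip("'\"")
    return values


def missing_keys(text, required=REQUIRED_KEYS):
    """Return the required keys that are absent or empty in the .env text."""
    values = parse_env(text)
    return [key for key in required if not values.get(key)]


if __name__ == "__main__":
    # Usage: python check_env.py <path_to_.env_file>  (script name is illustrative)
    env_path = Path(sys.argv[1] if len(sys.argv) > 1 else ".env")
    missing = missing_keys(env_path.read_text())
    print("missing keys:", ", ".join(missing) if missing else "none")
```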

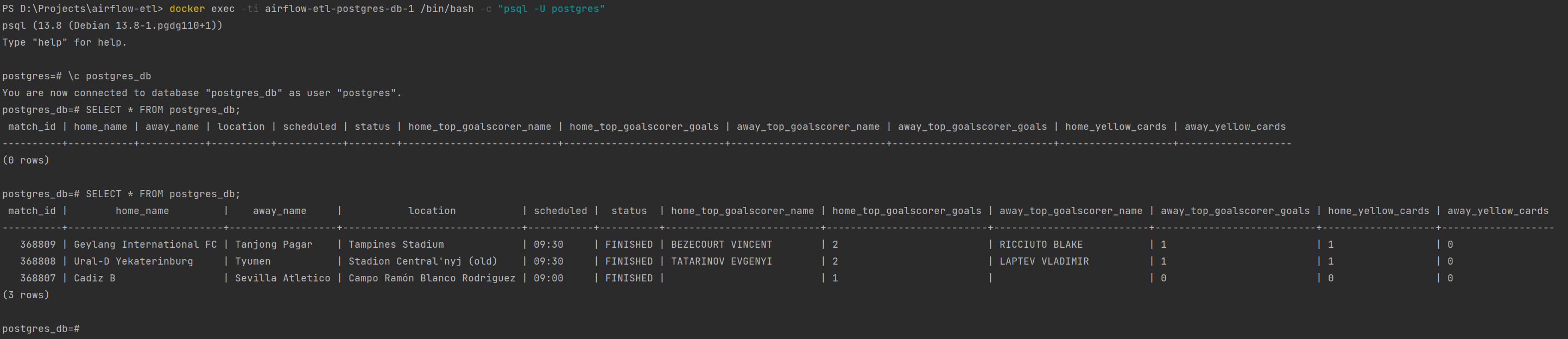

We can check that the DAG wrote to the DB by executing the following commands:

docker exec -ti airflow-etl-postgres-db-1 /bin/bash -c "psql -U postgres"

\c postgres_db

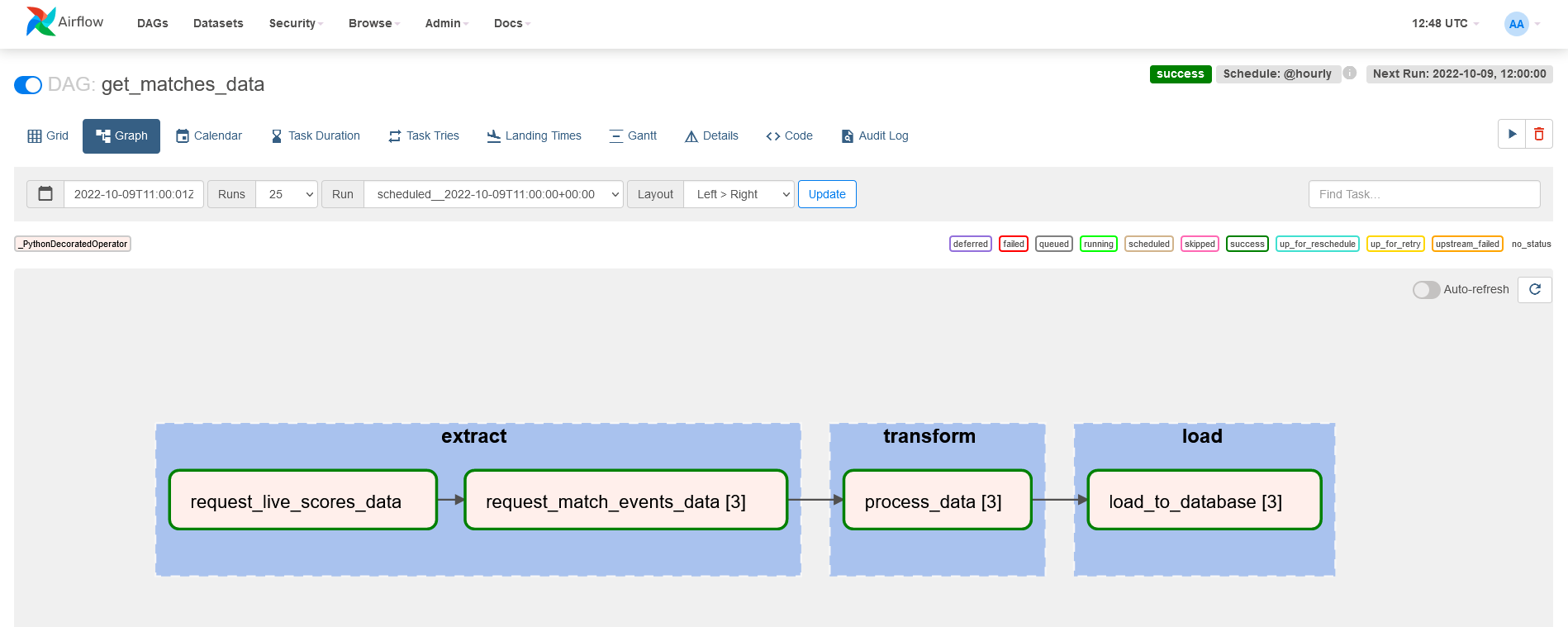

SELECT * FROM postgres_db;This is the expected result for Airflow:

And this is the expected result (before and after DAG trigger) for external Postgres DB:
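The interactive database check above can also be run as a single non-interactive call, which is handy in scripts. The sketch below reuses the container, user, database, and table names from the commands above. The function names are hypothetical, and it assumes `psql` is on the container's `PATH`.

```python
import subprocess

# Names taken from the commands above.
CONTAINER = "airflow-etl-postgres-db-1"
DB_USER = "postgres"
DB_NAME = "postgres_db"
QUERY = "SELECT * FROM postgres_db;"


def build_check_command(container=CONTAINER, user=DB_USER, db=DB_NAME, query=QUERY):
    """Build a `docker exec ... psql` argument list that runs one query and exits."""
    return ["docker", "exec", container, "psql", "-U", user, "-d", db, "-c", query]


def run_check():
    """Run the query inside the container and return psql's text output."""
    result = subprocess.run(
        build_check_command(), capture_output=True, text=True, check=True
    )
    return result.stdout


if __name__ == "__main__":
    print(run_check())
```

Passing the command as a list avoids shell quoting issues around the SQL string.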