QCMP is a reinforcement-learning-based load balancing solution implemented within the data plane, providing dynamic policy adjustment with quick response to changes in traffic. This repository is the artifact for the paper "QCMP: Load Balancing via In-Network Reinforcement Learning", published at the 2nd ACM SIGCOMM Workshop on Future of Internet Routing & Addressing (FIRA '23).

QCMP requires BMv2 as its simulation environment. To install BMv2, follow its installation guide. Of the setup options described there, QCMP recommends VirtualBox with the VM image `P4 Tutorial Release 2023-04-24.ova`.

To run QCMP, clone the repository with `git clone https://github.com/In-Network-Machine-Learning/QCMP.git` into a local directory, enter the QCMP folder, and run the following 8 steps:

- In terminal:

make run

mininet> xterm h1 h2 h4 h5 s1 s1 s1- In s1 terminal:

./set_switches.sh- In another s1 terminal:

wireshark, monitoring port s6-eth1, open I/O Graph under Statistics bar.

- In h1 terminal:

python3 send.py- In h2 terminal:

python3 get_queues_layer1.py- In h4 terminal:

python3 get_queues_layer2.py- In h5 terminal:

python3 get_queues_layer2.py- In another s1 terminal:

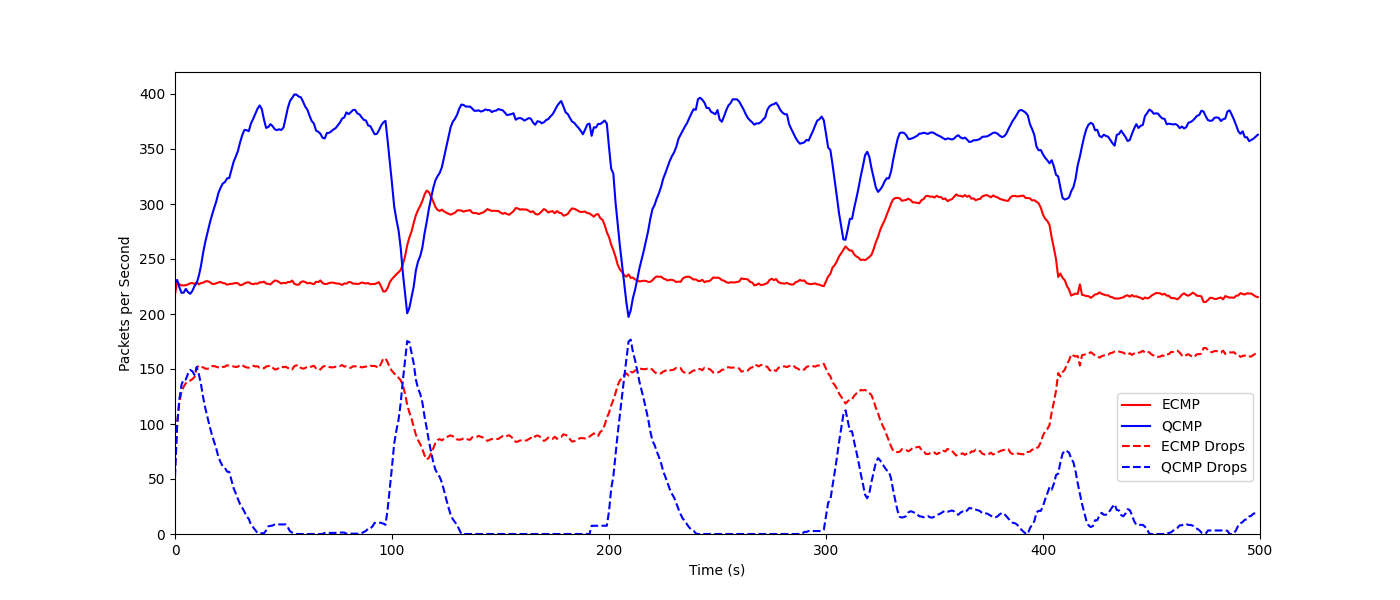

./change_switches.shAfter these 8 steps, wait several minutes and look at the opened I/O Graph window in step 3. The output shows the QCMP throughput, which will be similar to the graph as follows:
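Before starting the steps above, it can help to confirm that the scripts they reference are present in the cloned folder. The following is a minimal sketch of such a check, not part of the repository: the helper name `missing_files` is hypothetical, the file list is taken from the commands in the steps, `Makefile` is assumed only because the first step uses `make run`, and all files are assumed to sit in the top-level QCMP folder.

```python
# Hypothetical helper; not part of the QCMP repository.
from pathlib import Path
from typing import List

# Names taken from the commands in the steps. "Makefile" is an assumption
# (the first step runs `make run`), as is the top-level location of each file.
REQUIRED_FILES = [
    "Makefile",
    "set_switches.sh",
    "change_switches.sh",
    "send.py",
    "get_queues_layer1.py",
    "get_queues_layer2.py",
]


def missing_files(repo_dir: str) -> List[str]:
    """Return the required file names that do not exist under repo_dir."""
    root = Path(repo_dir)
    return [name for name in REQUIRED_FILES if not (root / name).is_file()]


if __name__ == "__main__":
    missing = missing_files(".")
    if missing:
        print("Missing files:", ", ".join(missing))
    else:
        print("All files referenced by the steps are present.")
```

Run it from inside the QCMP folder; an empty result from `missing_files(".")` suggests the checkout is complete, although it says nothing about whether BMv2 and Mininet are installed correctly.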

To change the weights, edit the numbers in `set_switches.sh`, `change_switches.sh`, and `q_table.py` (the functions `init_q_table` and `update_q_table` in the class `q_table`).
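As a rough illustration of what an initialisation and an update function for a Q-table typically do, here is a minimal tabular Q-learning sketch. It is not the repository's `q_table.py`: the class name `QTableSketch`, the states (discretised queue levels), the actions (candidate weight splits between two paths), the reward, and the parameters `alpha` and `gamma` are all assumptions made for illustration. Only the method names `init_q_table` and `update_q_table` are borrowed from the text above.

```python
# Hypothetical sketch of tabular Q-learning; NOT the repository's q_table.py.
from typing import Dict, List, Tuple

State = int                # assumed: a discretised queue-depth level
Action = Tuple[int, int]   # assumed: a (path A, path B) weight split


class QTableSketch:
    def __init__(self, states: List[State], actions: List[Action],
                 alpha: float = 0.5, gamma: float = 0.9) -> None:
        self.states = states
        self.actions = actions
        self.alpha = alpha  # learning rate (assumed value)
        self.gamma = gamma  # discount factor (assumed value)
        self.q: Dict[Tuple[State, Action], float] = {}
        self.init_q_table()

    def init_q_table(self) -> None:
        """Set every (state, action) entry to 0.0."""
        self.q = {(s, a): 0.0 for s in self.states for a in self.actions}

    def best_action(self, state: State) -> Action:
        """Return the highest-valued action; ties go to the first listed."""
        return max(self.actions, key=lambda a: self.q[(state, a)])

    def update_q_table(self, state: State, action: Action,
                       reward: float, next_state: State) -> float:
        """Apply Q(s,a) += alpha * (r + gamma * max_a' Q(s',a') - Q(s,a))."""
        best_next = max(self.q[(next_state, a)] for a in self.actions)
        target = reward + self.gamma * best_next
        self.q[(state, action)] += self.alpha * (target - self.q[(state, action)])
        return self.q[(state, action)]
```

For example, with `table = QTableSketch([0, 1, 2], [(1, 1), (3, 1), (1, 3)])`, calling `table.update_q_table(2, (1, 3), reward=1.0, next_state=0)` returns `0.5`, after which `table.best_action(2)` is `(1, 3)`. In this sketch the initial values in `init_q_table` and the constants `alpha` and `gamma` are the numbers one would tune.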

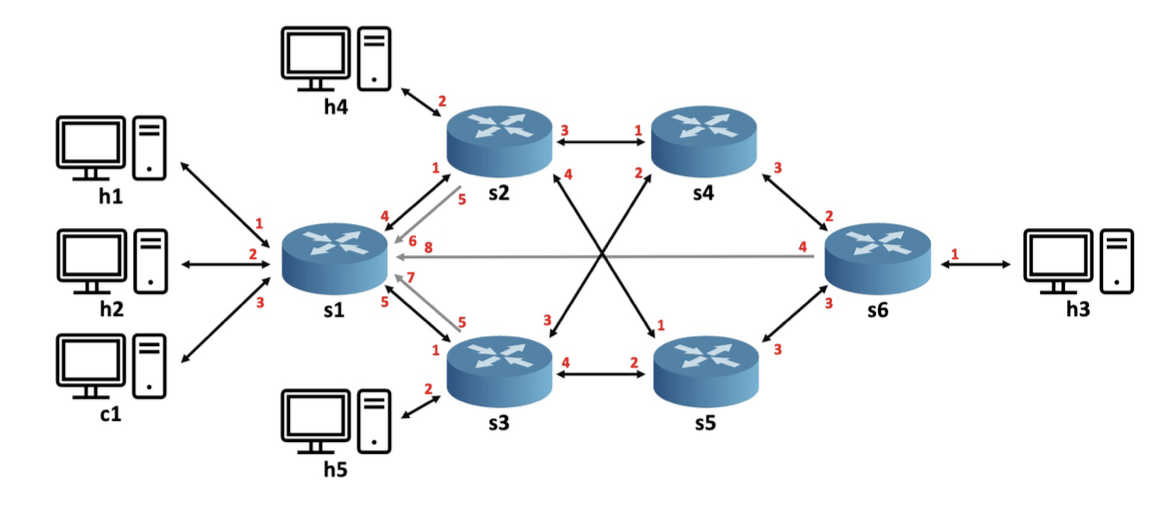

The topology used for this evaluation is shown as follows:

Please submit an issue with the appropriate label on GitHub.

The files are licensed under the Apache License. The text of the license can be found in the LICENSE file.

If you use this code, please cite our QCMP paper:

    @inproceedings{zheng2023qcmp,
      title={{QCMP: Load Balancing via In-Network Reinforcement Learning}},
      author={Zheng, Changgang and Rienecker, Benjamin and Zilberman, Noa},
      booktitle={Proceedings of the 2nd ACM SIGCOMM Workshop on Future of Internet Routing \& Addressing},
      pages={35--40},
      year={2023}
    }

We are also excited to introduce several QCMP-related papers (Planter, IIsy, and DINC):

    @article{zheng2022automating,
      title={{Automating In-Network Machine Learning}},
      author={Zheng, Changgang and Zang, Mingyuan and Hong, Xinpeng and Bensoussane, Riyad and Vargaftik, Shay and Ben-Itzhak, Yaniv and Zilberman, Noa},
      journal={arXiv preprint arXiv:2205.08824},
      year={2022}
    }

    @article{zheng2022iisy,
      title={{IIsy: Practical In-Network Classification}},
      author={Zheng, Changgang and Xiong, Zhaoqi and Bui, Thanh T and Kaupmees, Siim and Bensoussane, Riyad and Bernabeu, Antoine and Vargaftik, Shay and Ben-Itzhak, Yaniv and Zilberman, Noa},
      journal={arXiv preprint arXiv:2205.08243},
      year={2022}
    }

    @inproceedings{zheng2023dinc,
      title={{DINC: Toward Distributed In-Network Computing}},
      author={Zheng, Changgang and Tang, Haoyue and Zang, Mingyuan and Hong, Xinpeng and Feng, Aosong and Tassiulas, Leandros and Zilberman, Noa},
      booktitle={Proceedings of ACM CoNEXT'23},
      year={2023}
    }

    @incollection{zheng2021planter,
      title={{Planter: seeding trees within switches}},
      author={Zheng, Changgang and Zilberman, Noa},
      booktitle={Proceedings of the SIGCOMM'21 Poster and Demo Sessions},
      pages={12--14},
      year={2021}
    }

QCMP is inspired by Planter and DINC.

Acknowledgements: This work was partly funded by VMware. We acknowledge support from Intel. This paper complies with all applicable ethical standards of the authors’ home institution.