Nitro Raycast extension. Use LLMs right from Raycast.

This is a shameless wrapper around Nitro that makes it easy to use from Raycast.

Currently, this is just a placeholder until I can make it work. Behold, the wave of Llamas is coming to you soon!

Reference for logical flow: raycast/extensions#302 (Add speedtest)

- Using

@janhq/nitro-nodewhen it is published on NPM instead of hacking around the installation hooks. See: janhq/jan#1635 - Auto check and update latest release of

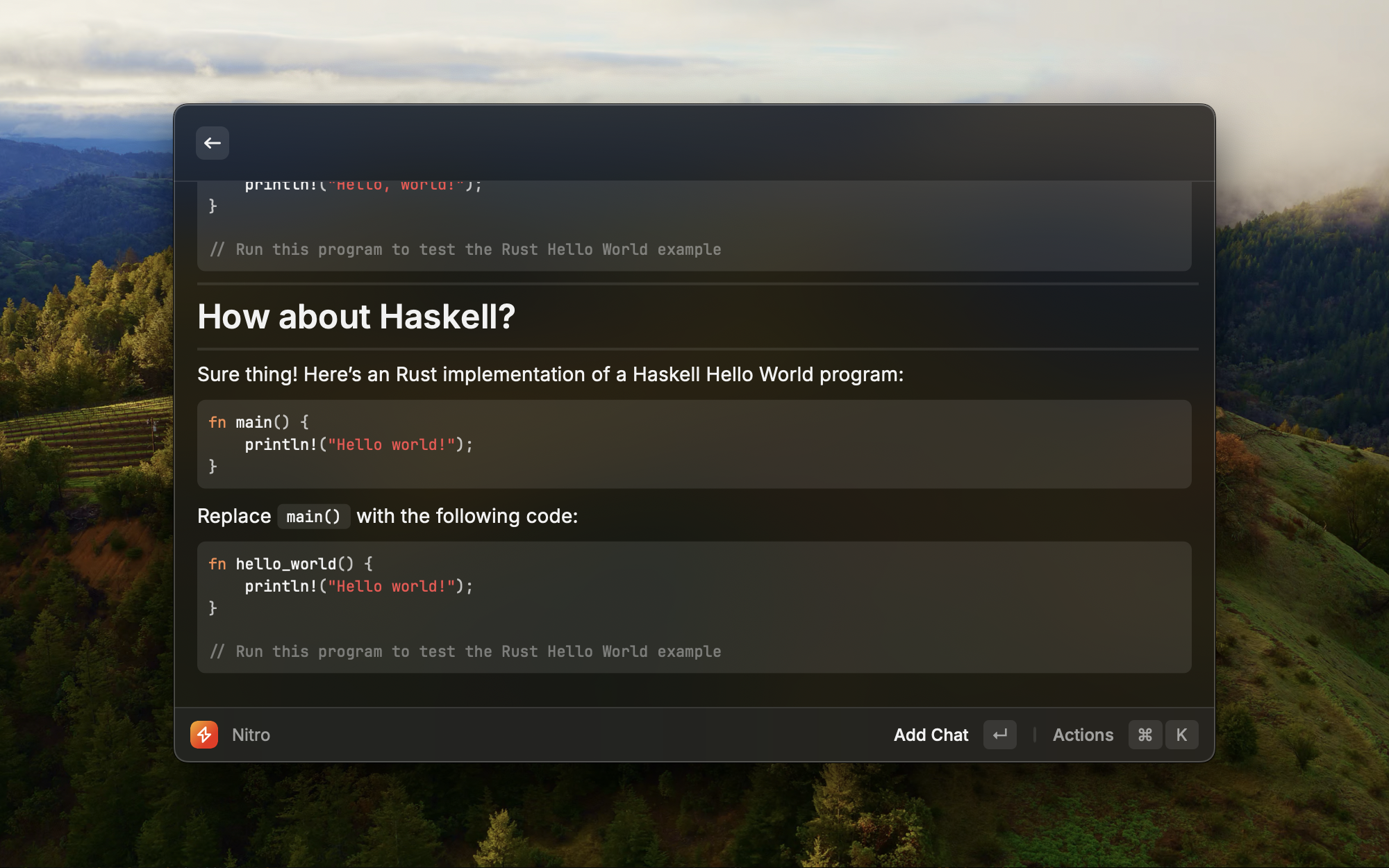

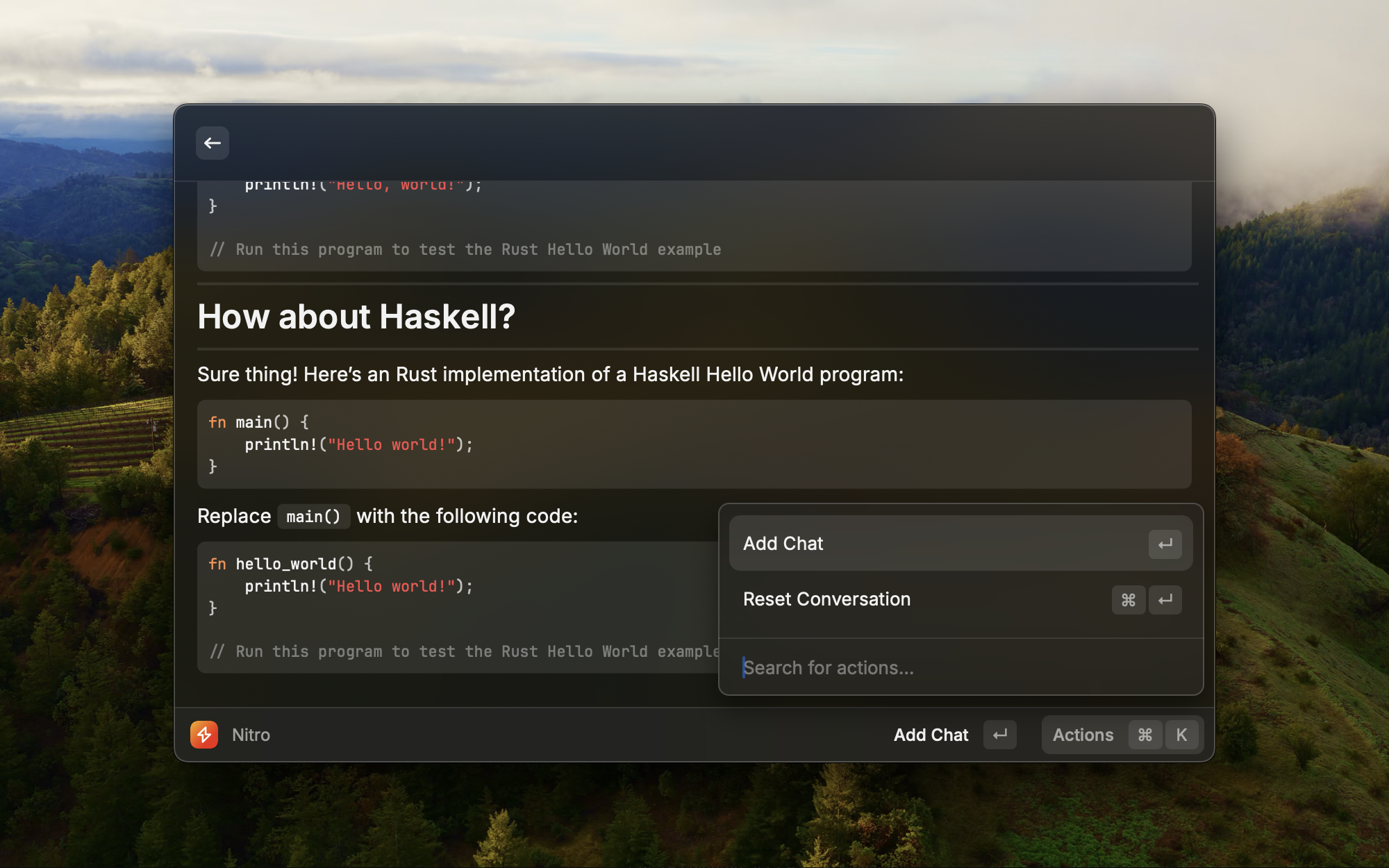

nitroor let user choose the version they want to use. - Shamelessly copy the user interface of OpenAI chat and Hugging Face chat. Sorry I'm not a designer so don't sue me for copying the UI from the other chat models.

- Let users choose models they want to use and download it automatically.

- Let users pick the

prompt_templateon the known list to try on the new model. If they accept theprompt_templateit will be used. - Let LLM (tiny-llama) helps guessing the best prompt template to use and highlight it to user.

- Choice of installation directory and model directory.

- Option to use hugging-chat-api instead of running models locally? Am I going to abuse their servers?!?

- Be really lazy and summon Llamas to work for me by utilizing Raycast.

- Don't abandon this project like other pet projects in the past (most never even left my local machine).

- Summon as many Llamas as possible.

- Make my robot waifu become real!
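
A rough sketch of how the `prompt_template` item above could work: keep a small list of known templates and fill in the chosen one before sending it to the model. Everything here is an assumption for illustration, not Nitro's API or the final design: the template names and strings, the `{system_message}` / `{prompt}` placeholder syntax, and the helper names `findTemplate` and `renderPrompt` are all hypothetical.

```typescript
// Hypothetical sketch: template names, placeholder syntax, and helper names
// are assumptions for illustration, not part of Nitro or this extension.

type PromptTemplate = {
  name: string;
  // Uses {system_message} and {prompt} as placeholders (assumed convention).
  template: string;
};

// A small "known list" that a user could pick from.
const KNOWN_TEMPLATES: PromptTemplate[] = [
  {
    name: "chatml",
    template:
      "<|im_start|>system\n{system_message}<|im_end|>\n<|im_start|>user\n{prompt}<|im_end|>\n<|im_start|>assistant\n",
  },
  {
    name: "zephyr",
    template: "<|system|>\n{system_message}</s>\n<|user|>\n{prompt}</s>\n<|assistant|>\n",
  },
  {
    name: "alpaca",
    template: "{system_message}\n\n### Instruction:\n{prompt}\n\n### Response:\n",
  },
];

// Look up a template by name; returns undefined when the name is unknown.
function findTemplate(name: string): PromptTemplate | undefined {
  return KNOWN_TEMPLATES.find((t) => t.name === name);
}

// Fill both placeholders in a single pass, so user text that happens to
// contain "{prompt}" or "{system_message}" is not substituted a second time.
function renderPrompt(tpl: PromptTemplate, systemMessage: string, prompt: string): string {
  return tpl.template.replace(/\{(system_message|prompt)\}/g, (_match: string, key: string) =>
    key === "system_message" ? systemMessage : prompt
  );
}

// Example: the user picked "alpaca" from the list and accepted it.
const picked = findTemplate("alpaca");
if (picked) {
  console.log(renderPrompt(picked, "You are a helpful llama.", "Say hi."));
}
```

The list could later feed a dropdown in the extension, with the tiny-llama guess simply pre-selecting one entry.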