This repo guides you through installing Cassandra and integrating it with Spark2 on Hortonworks HDP 3.0.1. Skip the HDP 3.0.1 installation if you already have it.

Hortonworks Data Platform (HDP) is provided here as a sandbox virtual machine (VM) that can be used to experiment with Apache Hadoop. Other popular technologies such as Hive, Pig, HBase, etc. are also installed. Follow the steps below to install HDP 3.0.1, which is the latest version provided.

- Head to Cloudera and choose the installation type `VirtualBox`. Submit the required information and download HDP 3.0.1. Be aware that the file is about 20 GB, so the download will take a while.
- Download the latest VirtualBox for a Windows host.
- Follow the installer steps to install VirtualBox.
- Open VirtualBox, (a) click `File`, (b) select `Import Appliance`, (c) select the HDP file downloaded earlier, (d) click `Next` and (e) click `Import`. Do not change any configuration for this VM: keep 4 VCPU and 10 GB of Base Memory. The import process will take a few minutes to complete.
- Start the newly created VM from VirtualBox. Open your browser and go to `127.0.0.1:8080`. If everything is working, you will see the Ambari homepage. Log in using `user: raj_ops` and `password: raj_ops`. Check whether the Spark2 service is running. If it is not, click on `services` and select `restart essential services`.
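Before opening the browser, it can help to confirm that the VM is actually forwarding its ports. The following is a minimal sketch and not part of the original setup: the helper name `is_port_open` is hypothetical, and it only assumes the port numbers used in this guide (`8080` for Ambari, `2222` for ssh).

```python
import socket


def is_port_open(host, port, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        # create_connection raises OSError (refused, unreachable, timed out) on failure
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    # 8080 is the Ambari port and 2222 the forwarded ssh port used in this guide
    for name, port in [("Ambari", 8080), ("ssh", 2222)]:
        state = "reachable" if is_port_open("127.0.0.1", port) else "not reachable"
        print(f"{name} on 127.0.0.1:{port} is {state}")
```

An open port only shows that something is listening; the Ambari login page can still take a few minutes to appear while the sandbox services start.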

We will install Cassandra version 3.0.20. Running Cassandra takes extra resources, so either add more Base Memory or stop some services. If extra RAM is available, increase the memory in the VirtualBox settings. The other option is to stop services that are not necessary for this application. Follow these steps to stop the extra services and install Cassandra:

- Start the VM and log in to Ambari using `user: admin` and `password: admin`. Select `Hive`, `Actions` and `Stop`. Repeat these steps for `Ranger` and `Data Analytics Studio`.
- `ssh` into the VM using `ssh root@127.0.0.1 -p 2222` and password `hadoop`. If this is the first login, it will prompt you to change the password. Create the Cassandra rpm repo by creating a new file named `cassandra.repo` in `/etc/yum.repos.d` with the following text:

      [cassandra]
      name=Apache Cassandra
      baseurl=https://downloads.apache.org/cassandra/redhat/30x/
      gpgcheck=1
      repo_gpgcheck=1
      gpgkey=https://downloads.apache.org/cassandra/KEYS

  Cassandra requires Python 2, so check the Python version by running `python --version`. Run `sudo yum install cassandra` and make sure that the package being downloaded is `Cassandra 3.0.20`. Start Cassandra with `sudo service cassandra start`.
- `cqlsh` is a SQL-like shell for working with Cassandra. Run the `cqlsh` command to enter the shell and execute the following code:

      CREATE KEYSPACE test WITH replication = {'class': 'SimpleStrategy', 'replication_factor': 1 };
      CREATE TABLE test.kv(key text PRIMARY KEY, value int);
      INSERT INTO test.kv(key, value) VALUES ('key1', 1);
      INSERT INTO test.kv(key, value) VALUES ('key2', 2);

This creates a table `kv` in keyspace `test` and inserts two entries into it. We will retrieve this table from Spark2.

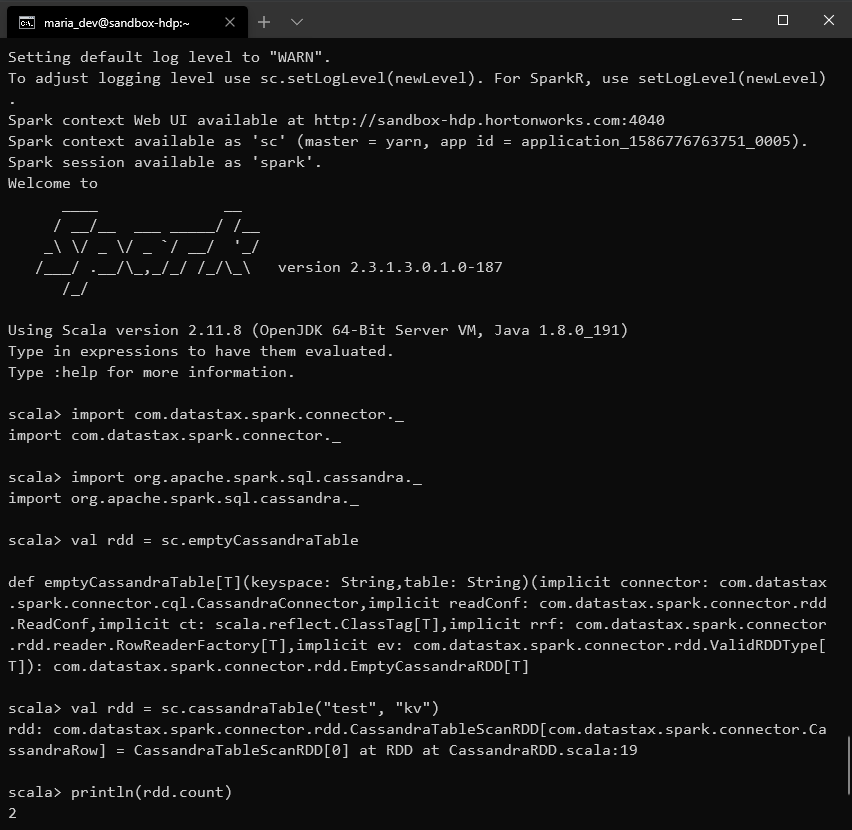
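The `cassandra.repo` file from the installation steps above is a plain INI file, so it can also be generated instead of typed by hand. This is a minimal sketch using Python's standard `configparser`; the function name `render_cassandra_repo` is hypothetical, and the values are copied from the repo definition in this guide. It only builds the text; writing it to `/etc/yum.repos.d/cassandra.repo` still requires root.

```python
import configparser
import io


def render_cassandra_repo():
    """Build the text of cassandra.repo with the values used in this guide."""
    # interpolation=None keeps any '%' characters in values literal
    parser = configparser.ConfigParser(interpolation=None)
    parser["cassandra"] = {
        "name": "Apache Cassandra",
        "baseurl": "https://downloads.apache.org/cassandra/redhat/30x/",
        "gpgcheck": "1",
        "repo_gpgcheck": "1",
        "gpgkey": "https://downloads.apache.org/cassandra/KEYS",
    }
    buffer = io.StringIO()
    # write key=value without spaces around '=' to match the file shown in this guide
    parser.write(buffer, space_around_delimiters=False)
    return buffer.getvalue()


if __name__ == "__main__":
    print(render_cassandra_repo())
```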

Log in to HDP over ssh with `ssh maria_dev@127.0.0.1 -p 2222` and password `maria_dev`. Enter the following command to run `spark-shell` with `spark-cassandra-connector`:

    spark-shell --conf spark.cassandra.connection.host=127.0.0.1 \
    --packages com.datastax.spark:spark-cassandra-connector_2.11:2.3.1,commons-configuration:commons-configuration:1.6

Now we will retrieve the dummy values we inserted earlier using `cqlsh`:

    import com.datastax.spark.connector._
    import org.apache.spark.sql.cassandra._

    val rdd = sc.cassandraTable("test", "kv")
    println(rdd.count)

Create a new Python script, `CassandraSpark2.py`.

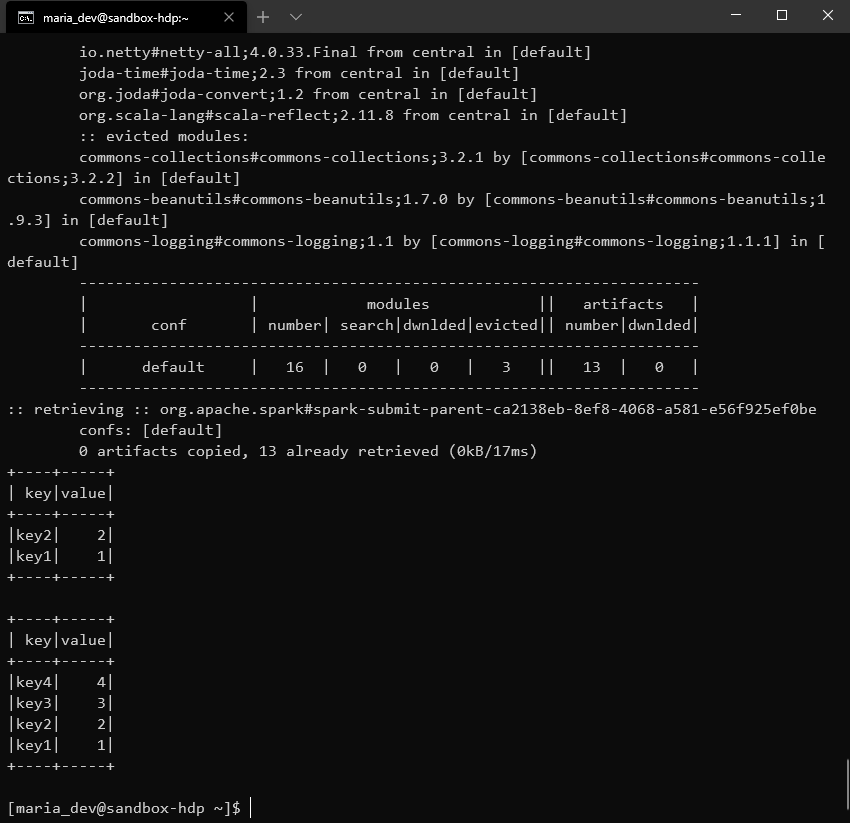

    from pyspark.sql import SparkSession
    from pyspark.sql import Row

    if __name__ == "__main__":
        spark = SparkSession.builder.appName("CassandraApp").getOrCreate()

        # read data from cassandra
        readData = spark.read.format("org.apache.spark.sql.cassandra").options(table="kv", keyspace="test").load()

        # print table
        readData.show()

        # insert data
        tmp_df = spark.createDataFrame([("key3", 3), ("key4", 4)], ["key", "value"])
        tmp_df.write.format("org.apache.spark.sql.cassandra")\
            .mode("append").options(table="kv", keyspace="test").save()

        # read new data
        readData = spark.read.format("org.apache.spark.sql.cassandra").options(table="kv", keyspace="test").load()
        readData.show()

        # end spark session
        spark.stop()

Run the above script using the following command:

    spark-submit --conf spark.cassandra.connection.host=127.0.0.1 \
    --packages com.datastax.spark:spark-cassandra-connector_2.11:2.3.1,commons-configuration:commons-configuration:1.6 \
    CassandraSpark2.py
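The `spark-shell` and `spark-submit` commands repeat the same connector coordinates, so it may be convenient to assemble the command in one place. The sketch below is an illustration only: `build_spark_submit` and `CONNECTOR_PACKAGES` are hypothetical names, and the helper merely builds the argument list shown above without running it.

```python
import shlex

# Maven coordinates used throughout this guide
CONNECTOR_PACKAGES = (
    "com.datastax.spark:spark-cassandra-connector_2.11:2.3.1",
    "commons-configuration:commons-configuration:1.6",
)


def build_spark_submit(script, cassandra_host="127.0.0.1", packages=CONNECTOR_PACKAGES):
    """Return the spark-submit argument list for a script that talks to Cassandra."""
    return [
        "spark-submit",
        "--conf", f"spark.cassandra.connection.host={cassandra_host}",
        # --packages takes a single comma-separated list of Maven coordinates
        "--packages", ",".join(packages),
        script,
    ]


if __name__ == "__main__":
    argv = build_spark_submit("CassandraSpark2.py")
    # shlex.join (Python 3.8+) quotes each argument so the line can be pasted into a shell
    print(shlex.join(argv))
```

On the sandbox, the resulting list could be passed to `subprocess.run(argv, check=True)` to launch the job.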