[Paper] [Project Page] [Demo] [Dataset]

We are currently organizing the code for MP5. If you are interested in our work, please star ⭐ our project.

- (2024/03/29) Code is released!
- (2024/02/28) MP5 is accepted to CVPR 2024!
- (2023/12/12) MP5 is released on arXiv.

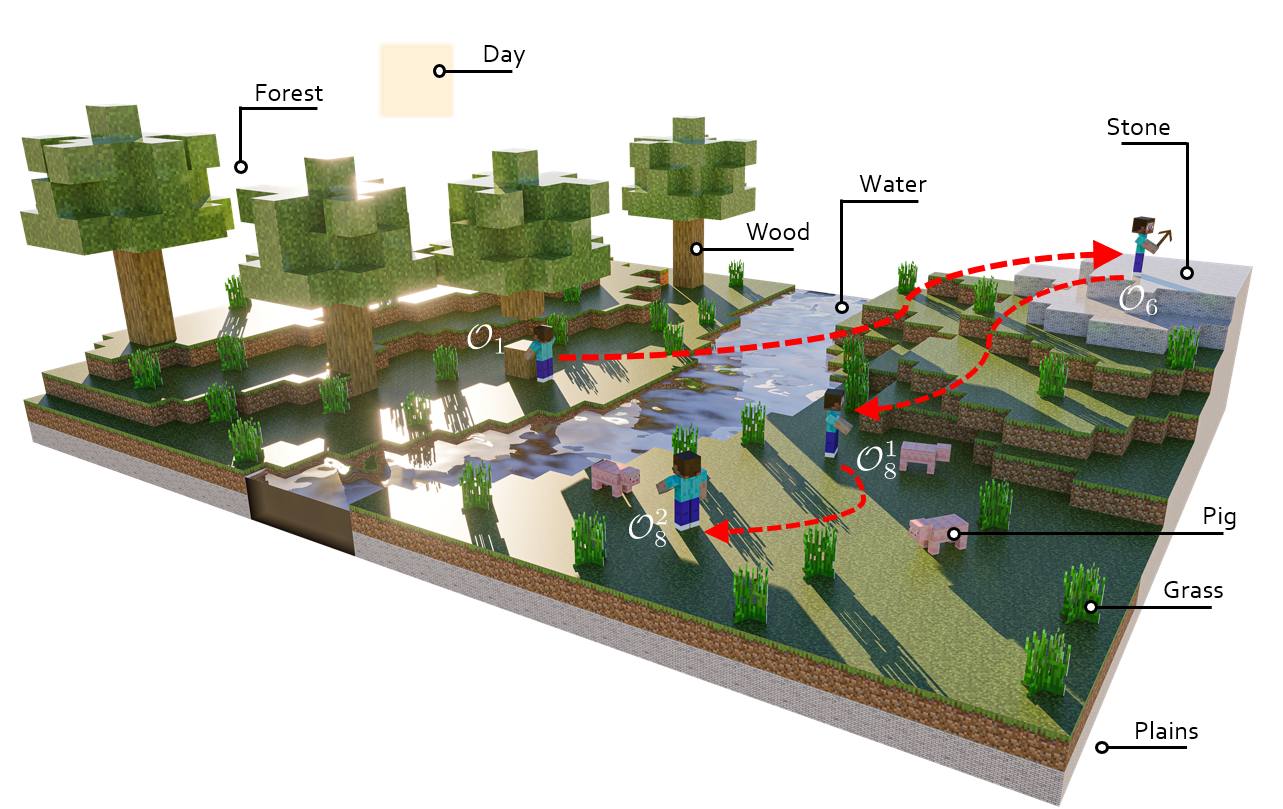

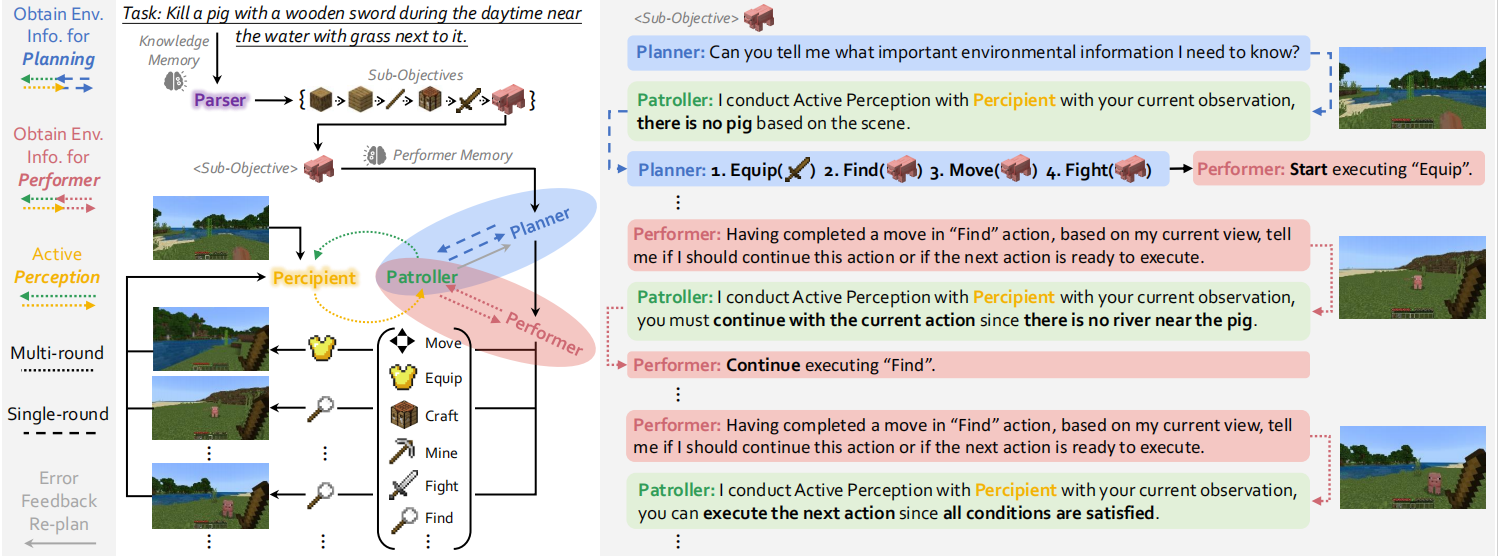

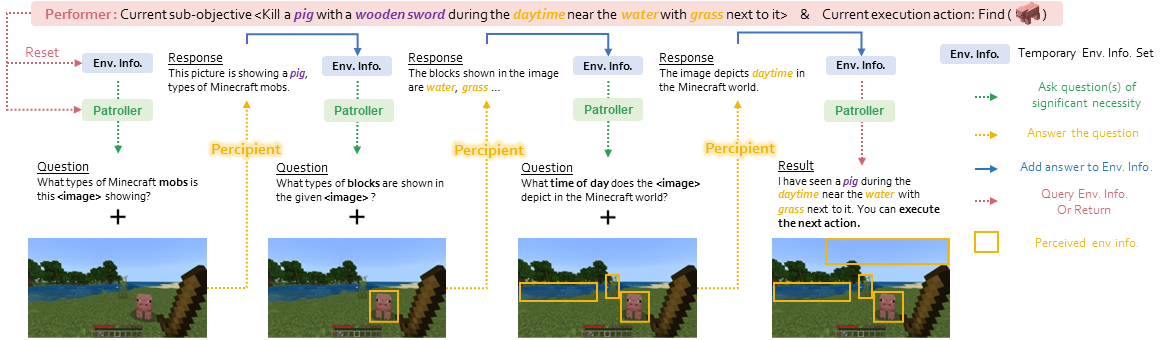

The process of completing the task "kill a pig with a stone sword during the daytime near the water with grass next to it."

```
.
├── README.md
├── MP5_agent
│   └── All agents of MP5.
└── LAMM
    └── Scripts and models for training and testing MineLLM.
```

Note: We provide all the code except the human-designed interface code. You can implement this interface yourself or use MineDreamer as the low-level control module.

Because MineLLM is deployed as a server-side service, two separate virtual environments are needed: one for MineLLM and one for MP5_agent.

We recommend running on Linux in a conda environment with Python 3.10. The environments can be installed as follows.

To set up the MineLLM environment:

```shell
# activate conda env for MineLLM
conda activate minellm
pip install -r requirement.txt
```

The MP5_agent environment is built on MineDojo, which requires Python ≥ 3.9 and has been tested on Ubuntu 20.04 and macOS. Please follow the MineDojo installation guide to install the prerequisites first, such as JDK 8 for running the Minecraft backend. We highly recommend creating a new conda virtual environment to isolate dependencies. Alternatively, MineDojo provides a pre-built Docker image for easier installation.
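Both environments have Python version requirements: 3.10 is recommended for MineLLM, and MineDojo needs at least 3.9. Below is a minimal sketch of a hypothetical helper, `version_at_least`, which is not part of the MP5 repository, for checking a `major.minor[.patch]` version string inside an activated environment. It assumes purely numeric version components.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not shipped with MP5): succeed if a version string such as
# "3.10.13" is at least the required major.minor. Assumes numeric components.
version_at_least() {
  local version="$1" req_major="$2" req_minor="$3"
  local major minor
  major="${version%%.*}"   # text before the first dot, e.g. "3"
  minor="${version#*.}"    # text after the first dot, e.g. "10.13"
  minor="${minor%%.*}"     # keep only the minor part, e.g. "10"
  if [ "$major" -gt "$req_major" ]; then
    return 0
  fi
  [ "$major" -eq "$req_major" ] && [ "$minor" -ge "$req_minor" ]
}
```

For example, after `conda activate minellm`, `version_at_least "$(python -c 'import platform; print(platform.python_version())')" 3 10` succeeds on Python 3.10 or newer; in the `MP5_agent` environment, pass `3 9` to check MineDojo's minimum instead.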

First, install the stable version of MineDojo:

```shell
# activate conda env for MP5_agent
conda activate MP5_agent
pip install minedojo
```

Alternatively, see the MineDojo documentation for more installation details.

Then install the other requirements:

```shell
pip install -r requirement.txt
```

To train MineLLM from scratch, please execute the following steps:

- Download the datasets from Hugging Face.
- Clone the `LAMM` codebase: `cd MP5` and `git clone https://github.com/IranQin/LAMM`.
- Put the datasets into `LAMM/datasets/2D_Instruct` and `cd LAMM`.
- Download the MineCLIP weight `mineclip_image_encoder_vit-B_196tokens.pth` from here and put it into the `model_zoo/mineclip_ckpt` folder.
- Train MineLLM by running `. src/scripts/train_lamm2d_mc_v1.5_slurm.sh`. The script needs to be changed if you do not use Slurm.
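Before launching training, it can help to confirm that the files from the steps above are where they are expected. This is a minimal sketch of a hypothetical pre-flight helper, `check_minellm_layout`, which is not part of the repository. It only checks the two paths named in the steps (`datasets/2D_Instruct` and `model_zoo/mineclip_ckpt/mineclip_image_encoder_vit-B_196tokens.pth`, relative to the `LAMM` directory); it does not validate file contents or any other paths the training script may need.

```shell
#!/usr/bin/env bash
# Hypothetical pre-flight check (not part of MP5): report which of the paths
# named in the training steps are missing under a LAMM checkout.
# Returns the number of missing paths (0 means everything is in place).
check_minellm_layout() {
  local root="${1:-.}"   # defaults to the current directory, i.e. after `cd LAMM`
  local missing=0
  local path
  for path in \
    "$root/datasets/2D_Instruct" \
    "$root/model_zoo/mineclip_ckpt/mineclip_image_encoder_vit-B_196tokens.pth"; do
    if [ ! -e "$path" ]; then
      echo "missing: $path" >&2
      missing=$((missing + 1))
    fi
  done
  return "$missing"
}
```

Run `check_minellm_layout` from inside `LAMM` (or `check_minellm_layout LAMM` from the `MP5` directory) and start training only if it returns 0.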


To start the MineLLM service, please execute the following steps:

- Download the checkpoints from the web.
- Put the checkpoints into `LAMM/ckpt` and `cd LAMM`.
- Start the MineLLM service by running `. src/scripts/mllm_api_slurm.sh`. The script needs to be changed if you do not use Slurm.
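In the same spirit, here is a minimal sketch of a hypothetical check (not part of the repository) that the checkpoint directory exists and is not empty before the service is started. It cannot tell whether the right checkpoint files are present; it only catches a missing or empty directory.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of MP5): succeed only if the checkpoint
# directory exists and contains at least one entry.
ckpt_dir_ready() {
  local dir="${1:-ckpt}"   # defaults to ./ckpt, i.e. after `cd LAMM`
  if [ ! -d "$dir" ]; then
    echo "checkpoint directory not found: $dir" >&2
    return 1
  fi
  if [ -z "$(ls -A "$dir")" ]; then
    echo "checkpoint directory is empty: $dir" >&2
    return 1
  fi
  return 0
}
```

For example, from inside `LAMM`: `ckpt_dir_ready && . src/scripts/mllm_api_slurm.sh`.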

To run MP5_agent, execute the following:

```shell
cd MP5_agent/
bash scripts/run_agent.sh
```

If you find this repository useful for your work, please consider citing it as follows:

```bibtex
@inproceedings{qin2024mp5,
  title={MP5: A Multi-modal Open-ended Embodied System in Minecraft via Active Perception},
  author={Qin, Yiran and Zhou, Enshen and Liu, Qichang and Yin, Zhenfei and Sheng, Lu and Zhang, Ruimao and Qiao, Yu and Shao, Jing},
  booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
  pages={16307--16316},
  year={2024}
}

@article{zhou2024minedreamer,
  title={MineDreamer: Learning to Follow Instructions via Chain-of-Imagination for Simulated-World Control},
  author={Zhou, Enshen and Qin, Yiran and Yin, Zhenfei and Huang, Yuzhou and Zhang, Ruimao and Sheng, Lu and Qiao, Yu and Shao, Jing},
  journal={arXiv preprint arXiv:2403.12037},
  year={2024}
}
```