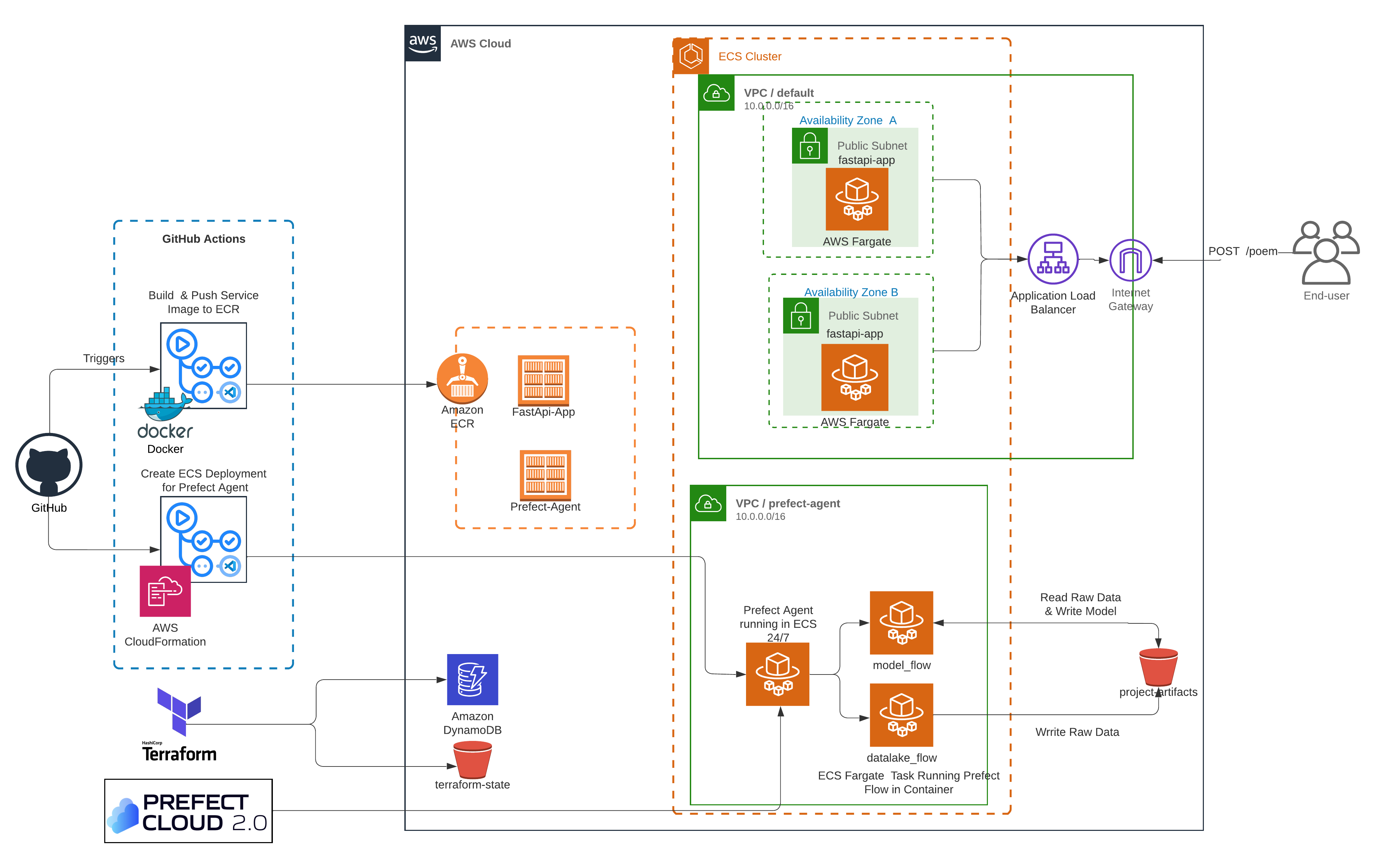

Capstone project for the Data Talks MLOps Zoomcamp, aimed at practicing automated MLOps workflows that work with minimal effort both locally and in the cloud. The project includes two main capabilities:

- Fully automated workflow orchestration (with Prefect) for data retrieval and model training tasks.

- A web service layer for serving the machine learning model with FastAPI.

The final outcome of the project is a simple poem generator API, which returns an AI-generated poem based on the initial prompt given by the end user.

In addition to these two main capabilities, the project automates cloud deployments with several infrastructure-as-code tools (Terraform, CloudFormation, AWS CDK) and CI/CD (GitHub Actions).

The required accounts and their respective keys are listed below.

- AWS Console account and required secrets: `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY`. Instructions for retrieving the access key ID and secret access key.

- Kaggle account and required secrets: `KAGGLE_USERNAME` and `KAGGLE_KEY`. Instructions for finding the Kaggle username and key.

- Prefect Cloud account and required secrets: `PREFECT_API_URL` and `PREFECT_API_KEY`. Instructions for creating a Prefect Cloud account and finding the Prefect api_url and api_key.

Caution: As a preparation step, it is highly recommended to collect these parameters in a `.env` file and to create a GitHub Secret for each of them.
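Before running anything, it can help to confirm that the `.env` file actually contains all six keys listed above. The following is a minimal sketch of such a check; the helper names (`parse_env_file`, `missing_secrets`) are hypothetical and not part of the repository, and the deliberately simple `KEY=VALUE` parser is an assumption standing in for a library such as python-dotenv.

```python
from pathlib import Path
from typing import Dict, List

# The six secrets listed above (AWS, Kaggle and Prefect Cloud).
REQUIRED_KEYS = (
    "AWS_ACCESS_KEY_ID",
    "AWS_SECRET_ACCESS_KEY",
    "KAGGLE_USERNAME",
    "KAGGLE_KEY",
    "PREFECT_API_URL",
    "PREFECT_API_KEY",
)


def parse_env_file(path: Path) -> Dict[str, str]:
    """Parse simple KEY=VALUE lines (hypothetical minimal parser, not python-dotenv)."""
    values: Dict[str, str] = {}
    for raw_line in path.read_text(encoding="utf-8").splitlines():
        line = raw_line.strip()
        # Skip blank lines, comments and lines without an assignment.
        if not line or line.startswith("#") or "=" not in line:
            continue
        # Accept shell-style "export KEY=value" lines as well.
        if line.startswith("export "):
            line = line[len("export "):]
        # Split on the first "=" only, so URLs or keys containing "=" survive.
        key, _, value = line.partition("=")
        # Drop surrounding quotes, e.g. KEY="value".
        values[key.strip()] = value.strip().strip("'\"")
    return values


def missing_secrets(path: Path) -> List[str]:
    """Return the required keys that are absent or empty in the given .env file."""
    values = parse_env_file(path)
    return [key for key in REQUIRED_KEYS if not values.get(key)]


if __name__ == "__main__":
    env_path = Path(".env")
    missing = missing_secrets(env_path) if env_path.exists() else list(REQUIRED_KEYS)
    print("Missing secrets:", ", ".join(missing) if missing else "none")
```

Running it from the repository root prints the keys that still need a value; the same list is a convenient checklist for the GitHub Secrets.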

Infrastructure Creation (infrastructure)

- Create the AWS resources (ECS cluster and `fastapi-app` service) by following the instructions in the `infrastructure` folder.

- Create the AWS resources for the `prefect-agent` via CloudFormation.

Running Data Ingestion and Model Creation flows (workflow_orchestration)

- Run the `datalake_flow` either as a local flow or as a deployment in AWS.

- The flow stores the raw data in the `artifacts/raw_data` folder.

- Run the `model_flow` either as a local flow or as a deployment in AWS.

- The flow stores the model file in the `artifacts/model` folder.
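Since each flow leaves its result in a known folder, a quick local check can tell which flow still needs to run. The sketch below assumes the repository root is the working directory and that `model_flow` consumes the raw data produced by `datalake_flow`; the helper names are hypothetical, and no particular file format is assumed for the raw data or the model.

```python
from pathlib import Path
from typing import Optional

# Folder names taken from the steps above, relative to the repository root.
RAW_DATA_DIR = Path("artifacts") / "raw_data"
MODEL_DIR = Path("artifacts") / "model"


def has_files(folder: Path) -> bool:
    """True if the folder exists and contains at least one regular file (searched recursively)."""
    return folder.is_dir() and any(p.is_file() for p in folder.rglob("*"))


def latest_file(folder: Path) -> Optional[Path]:
    """Return the most recently modified file in the folder, or None if there is none."""
    files = [p for p in folder.rglob("*") if p.is_file()] if folder.is_dir() else []
    return max(files, key=lambda p: p.stat().st_mtime, default=None)


def check_pipeline(root: Path = Path(".")) -> str:
    """Report which flow still needs to run, based on the artifacts each one leaves behind."""
    if not has_files(root / RAW_DATA_DIR):
        return "run datalake_flow first: artifacts/raw_data is empty"
    model = latest_file(root / MODEL_DIR)
    if model is None:
        return "run model_flow: artifacts/model is empty"
    return f"latest model: {model.relative_to(root)}"


if __name__ == "__main__":
    print(check_pipeline())
```

This only inspects local folders; for flows run as AWS deployments the artifacts may live elsewhere, depending on how the deployment's storage is configured.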

Serving the Model as a FastAPI Endpoint (model_serving)

- Run the `build-and-ecr-push-model-serving.yml` GitHub workflow to publish the `fastapi-app` image to ECR.

- At this point, all building blocks of the project have been created, so you can check the `fastapi-app` service and make a POST request to the `/poem` endpoint.
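A POST request to `/poem` can be sent with nothing but the Python standard library. This is a sketch under assumptions: `BASE_URL` is a placeholder for the address of your deployed `fastapi-app` service, and the JSON field name `prompt` is a guess, so check the request model in the `model_serving` code for the actual schema.

```python
import json
import urllib.request

# Placeholder: replace with the public address of the deployed fastapi-app service.
BASE_URL = "http://localhost:8000"


def build_poem_request(prompt: str, base_url: str = BASE_URL) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request for the /poem endpoint."""
    # Assumption: the endpoint expects a body like {"prompt": "..."}.
    body = json.dumps({"prompt": prompt}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/poem",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def request_poem(prompt: str, base_url: str = BASE_URL, timeout: float = 30.0) -> str:
    """Send the request and return the raw response body as text."""
    with urllib.request.urlopen(build_poem_request(prompt, base_url), timeout=timeout) as resp:
        return resp.read().decode("utf-8")


if __name__ == "__main__":
    print(request_poem("The sea at night"))
```

Building the request separately from sending it makes the payload easy to inspect before the service is reachable.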

Poetry is a Python package management tool that helps manage package dependencies.

1. Install Poetry as described in the Poetry installation section.

2. Install the packages at the versions defined in either `pyproject.toml` or `poetry.lock`. If you are interested in the details, please check the Installing Dependencies section.

   `poetry install`

3. Activate the Poetry environment.

   `source {path_to_venv}/bin/activate`

4. Add new packages to the Poetry environment with the command below, for example `poetry add "fastapi>=0.100"`. Check the official documentation if you are unsure of the usage.

   `poetry add <package-name><condition><version>`

Pre-Commit runs hooks automatically on every commit to point out issues such as missing semicolons, trailing whitespace, etc.

1. Install pre-commit as described in the installation section.

2. The Pre-Commit configuration is already defined in `.pre-commit-config.yaml`.

3. Pre-Commit can be run on the repository in two different ways:

   3.1. Run on each commit. In this case, the hooks run on every `git commit` and block the commit if any check fails, reporting the result each time, so failing code does not reach GitHub. To enable this, install the hooks once at the repository level:

   `pre-commit install`

   3.2. Run against the files on demand. This gives you the freedom to run the hooks whenever you want, but there is no guarantee that each commit meets the coding standards defined in the pre-commit configuration. You can run the hooks against all files at any time:

   `pre-commit run --all-files`