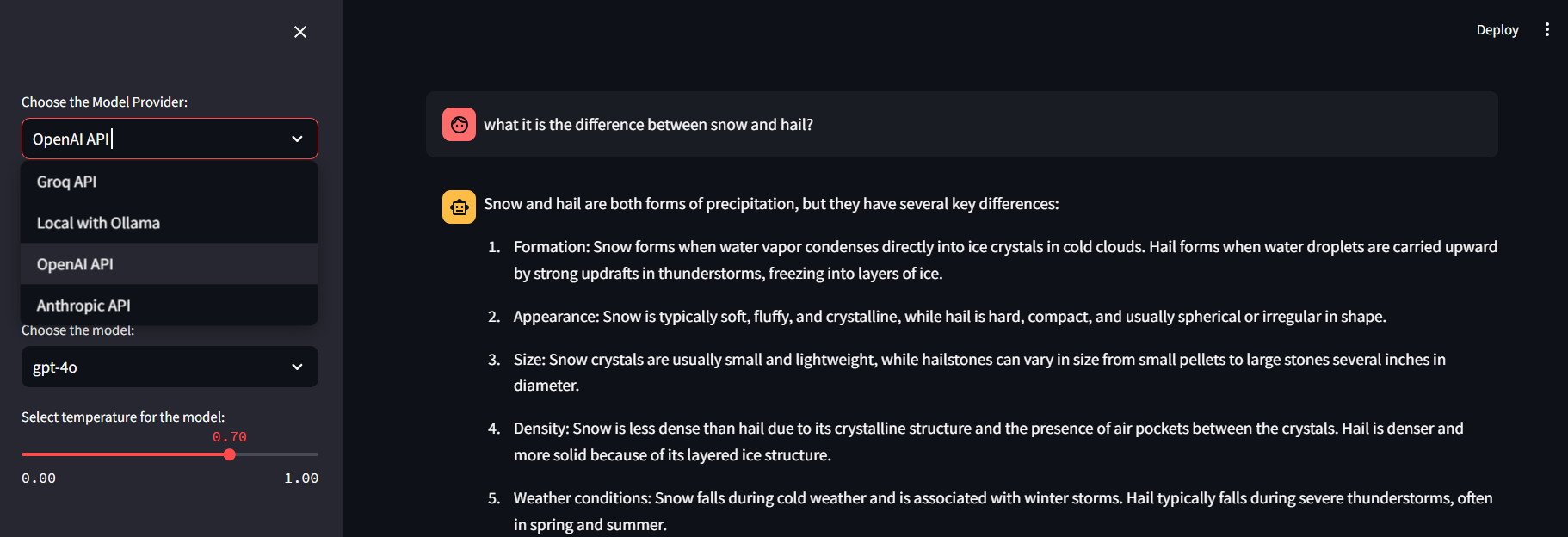

Use many large language models (OpenAI / Anthropic / open / local LLMs) from one Streamlit web app.

- LLM Support
  - Ollama
  - OpenAI - GPT-3.5 / GPT-4 / GPT-4o / GPT-4o-mini
  - Anthropic - Claude 3 (Opus / Sonnet) / Claude 3.5
  - Groq API

Clone and Run 👇

Try the project quickly with a Python venv:

```sh
git clone https://github.com/JAlcocerT/Streamlit-MultiChat
cd Streamlit-MultiChat

python -m venv multichat_venv # create the venv

# activate the venv (pick the line for your OS)
multichat_venv\Scripts\activate # Windows
source multichat_venv/bin/activate # Linux

pip install -r requirements.txt # install all dependencies at once

streamlit run Z_multichat.py
```

- Make sure to have Ollama ready and running your desired model!

- Prepare the API keys in any of:
  - `.streamlit/secrets.toml`
  - As environment variables:
    - Linux: `export OPENAI_API_KEY="YOUR_API_KEY"`
    - CMD: `set OPENAI_API_KEY=YOUR_API_KEY`
    - PowerShell: `$env:OPENAI_API_KEY="YOUR_API_KEY"`
  - In the Docker Compose file
  - Through the Streamlit UI

Alternatively, use the Docker image:

```sh
docker pull ghcr.io/jalcocert/streamlit-multichat:latest # x86 / ARM64
```

The projects I got inspiration from, and consolidated into this app, were tested here: `./Z_Tests`