Run ONNX models on your Android phone using the new NNAPI!

Android 8.1 introduces the Neural Networks API (NNAPI). It's very exciting to run a model in the "native" way supported by the Android system. :)

DNNLibrary is a wrapper of NNAPI ("DNNLibrary" stands for "daquexian's NNAPI library"). It lets you easily make use of the new NNAPI introduced in Android 8.1. You can convert your ONNX model into a daq model and run it directly.

For the Android app example, please check out dnnlibrary-example.

Telegram Group: link, QQ Group (Chinese): 948989771, answer: 哈哈哈哈

The screenshot shows MobileNet v2 running in both its float version and its 8-bit quantized version.

Please make sure your phone runs Android 8.1 or later; otherwise, use an emulator running Android 8.1+.
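An app can also guard against older devices at runtime. The following is a minimal sketch, not part of DNNLibrary: the class `NnapiSupport` and its method names are hypothetical, and it relies only on the facts that Android 8.1 corresponds to API level 27 and Android P to API level 28.

```java
/** Minimal sketch: decide which NNAPI features a given Android API level offers. */
final class NnapiSupport {
    /** Android 8.1 (API level 27), the first release that ships NNAPI. */
    static final int API_LEVEL_ANDROID_8_1 = 27;
    /** Android P (API level 28), needed for the fp16 option mentioned in this README. */
    static final int API_LEVEL_ANDROID_P = 28;

    private NnapiSupport() {}

    /** Returns true if a device with this API level provides NNAPI. */
    static boolean isNnapiAvailable(int sdkInt) {
        return sdkInt >= API_LEVEL_ANDROID_8_1;
    }

    /** Returns true if a device with this API level can be asked to allow fp16. */
    static boolean isFp16Available(int sdkInt) {
        return sdkInt >= API_LEVEL_ANDROID_P;
    }
}
```

In an Android app you would pass `Build.VERSION.SDK_INT` as `sdkInt` and fall back to another inference path when `isNnapiAvailable` returns false.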

Android 8.1 introduces NNAPI. However, NNAPI is not friendly to typical Android developers: it is not designed to be used by app developers directly. So I wrapped it in a library.

With DNNLibrary it's extremely easy to deploy your ONNX model on an Android 8.1+ phone. For example, the following is the Java code to deploy MobileNet v2 in your app (please check out dnnlibrary-example for details):

```java
ModelBuilder modelBuilder = new ModelBuilder();
Model model = modelBuilder.readFile(getAssets(), "mobilenetv2.daq")
        // The following line allows fp16 on supported devices, which brings a speed boost.
        // It is only available on Android P; see
        // https://www.anandtech.com/show/13503/the-mate-20-mate-20-pro-review/4 for a detailed benchmark.
        // .allowFp16(true)
        .setOutput("mobilenetv20_output_pred_fwd") // The output name comes from the ONNX model
        .compile(ModelBuilder.PREFERENCE_FAST_SINGLE_ANSWER);
float[] result = model.predict(inputData);
```

Only five lines! The daq model file is generated from the pretrained ONNX model using onnx2daq.
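To turn the `float[]` returned by `model.predict` into a predicted class, a common step is to take the index of the largest score. The sketch below assumes the array holds one score per class, as is typical for a classifier such as MobileNet v2; the helper class `Top1` is hypothetical and not part of DNNLibrary.

```java
/** Minimal sketch: pick the top-1 class from a score array. */
final class Top1 {
    private Top1() {}

    /**
     * Returns the index of the largest value in scores.
     * If several entries share the maximum, the first one wins.
     */
    static int argmax(float[] scores) {
        if (scores == null || scores.length == 0) {
            throw new IllegalArgumentException("scores must be non-empty");
        }
        int best = 0;
        for (int i = 1; i < scores.length; i++) {
            if (scores[i] > scores[best]) {
                best = i;
            }
        }
        return best;
    }
}
```

With the snippet above, `Top1.argmax(result)` would give the class index, which you can then map to a label using the label list that matches your model.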

We provide a precompiled AppImage of onnx2daq, our model conversion tool. AppImage is a program format that runs on almost all Linux systems. Just download onnx2daq.AppImage from the releases and make it executable with

```bash
chmod +x onnx2daq.AppImage
```

then use it directly. The usage is described in "Usage of onnx2daq" below.

On other platforms, where the AppImage is not available, you need to build onnx2daq from source.

Clone this repo and submodules:

```bash
git clone --recursive https://github.com/JDAI-CV/DNNLibrary
```

After the cloning step listed in the Preparation section, run:

```bash
mkdir build
cd build
cmake ..
cmake --build .
```

Now onnx2daq is in the tools/onnx2daq directory.

```bash
path_of_onnx2daq onnx_model output_filename
```

For example, if you are a Linux user and have a model named "mobilenetv2.onnx" in your current directory, run:

```bash
./onnx2daq.AppImage mobilenetv2.onnx mobilenetv2.daq
```

For 8-bit quantization, please check out our wiki.

Welcome! DNNLibrary has been published on jcenter.

Just add

```
implementation 'me.daquexian:dnnlibrary:replace_me_with_the_latest_version'
```

to the dependencies section of your app's build.gradle.

The latest version can be found in the following badge:

We use CMake as the build system, so you can build DNNLibrary like most C++ projects. The only difference is that you need the Android NDK, version r17b or higher:

```bash
mkdir build && cd build
cmake -DCMAKE_SYSTEM_NAME=Android -DCMAKE_TOOLCHAIN_FILE=path_of_android_ndk/build/cmake/android.toolchain.cmake -DANDROID_CPP_FEATURES=exceptions -DANDROID_PLATFORM=replace_me_with_android-28_or_android-27 -DANDROID_ABI=arm64-v8a
cmake --build .
```

You will then get the binary files.

Yes, TensorFlow Lite also supports NNAPI, but that support is far from perfect. For example, dilated convolution (which is widely used in segmentation) is not supported, and neither is PReLU.

What's more, only TensorFlow models can easily be converted to TensorFlow Lite models. Since NNAPI is independent of any framework, we support ONNX, a framework-independent model format.

| _ | TF Lite | DNNLibrary |
|---|---|---|
| Supported Model Format | TensorFlow | ONNX |
| Dilated Convolution | ❌ | ✔️ |
| Ease of Use | ❌ (Bazel build system, not friendly to Android developers) | ✔️ |
| Quantization | ✔️ | ✔️ (since 0.6.10) |

However, we are also far from mature compared to TF Lite. At least we are another choice if you want to enjoy the power of NNAPI :)

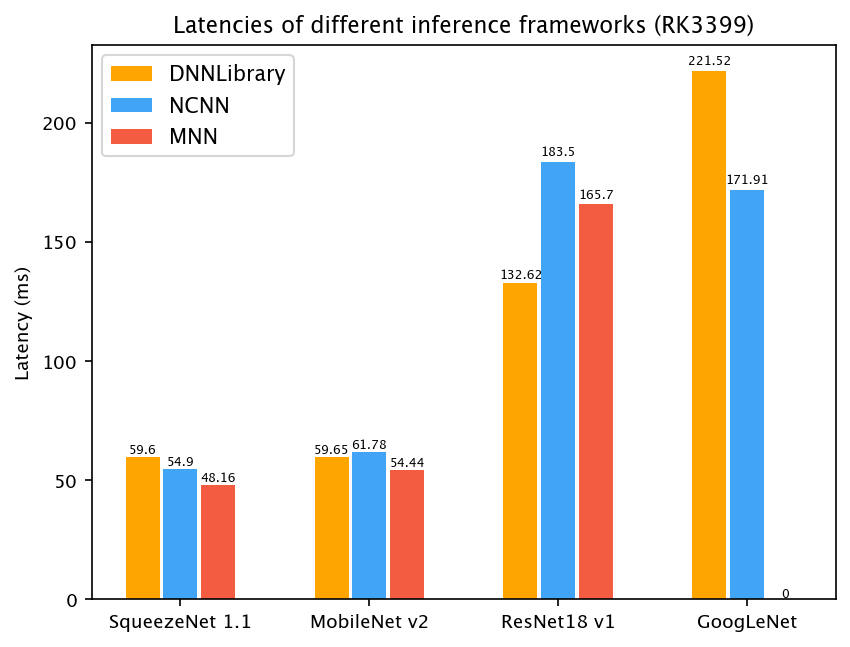

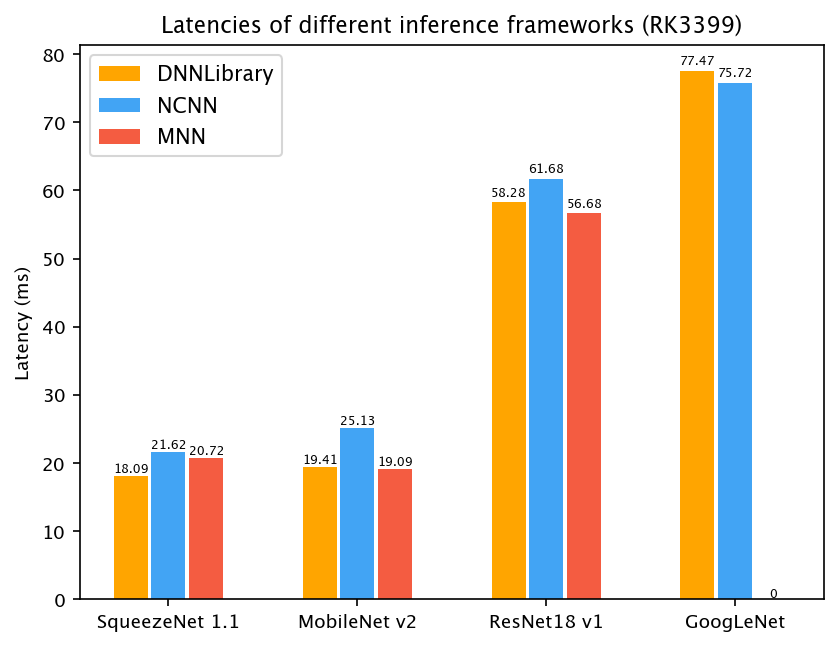

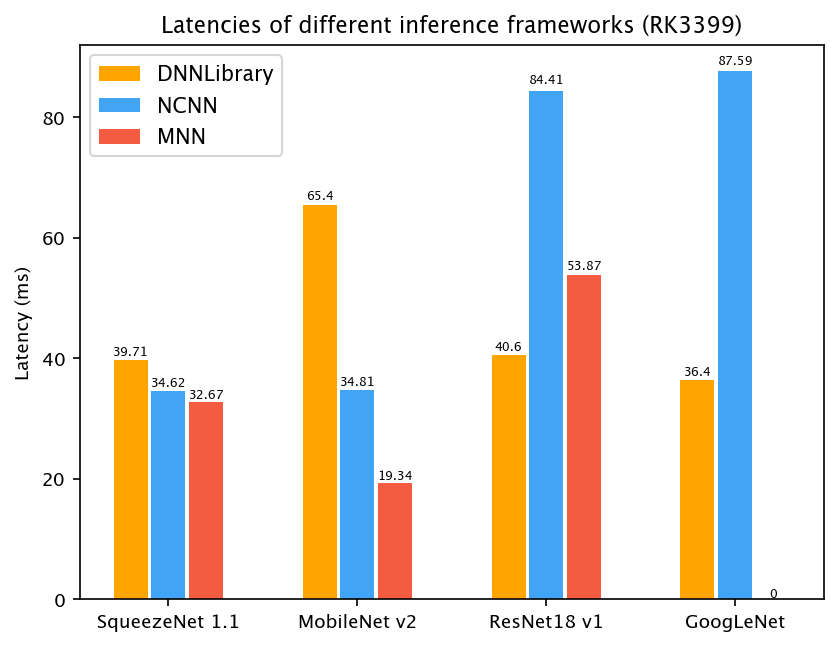

We benchmarked DNNLibrary against two popular frameworks, NCNN and MNN. DNNLibrary shows promising results on three devices. (Note: GoogleNet fails to convert on MNN so the corresponding latency is blank.)

More benchmarks are welcome!

The old DNNLibrary supported Caffe models through dnntools. Caffe models are no longer supported directly, and the models generated by dnntools are no longer usable either. Please use a conversion tool such as MMdnn to convert the Caffe model to an ONNX model, then convert it to daq using onnx2daq.