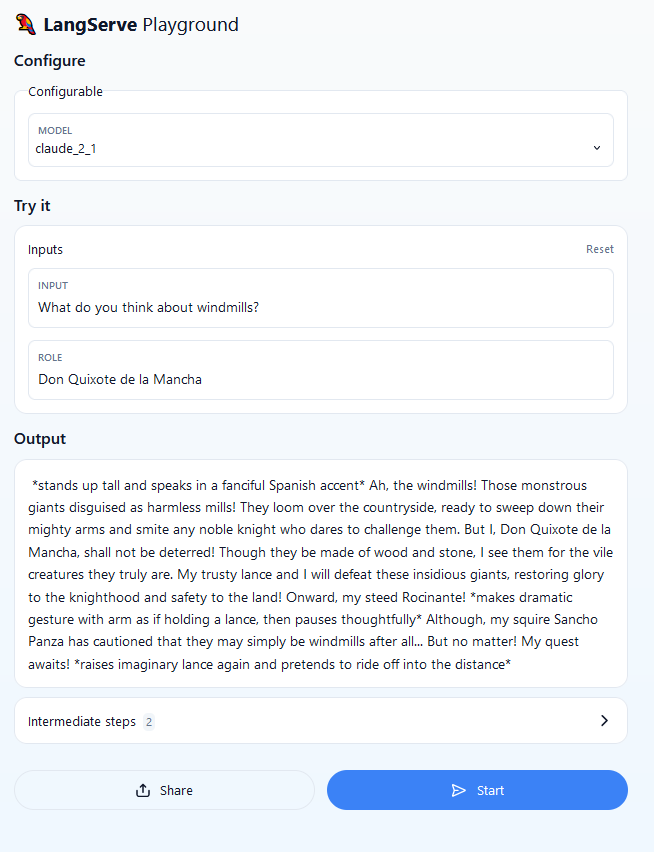

A simple LangChain application powered by Anthropic's Claude on Amazon Bedrock ⛰️ that assumes a role before replying to a user request.

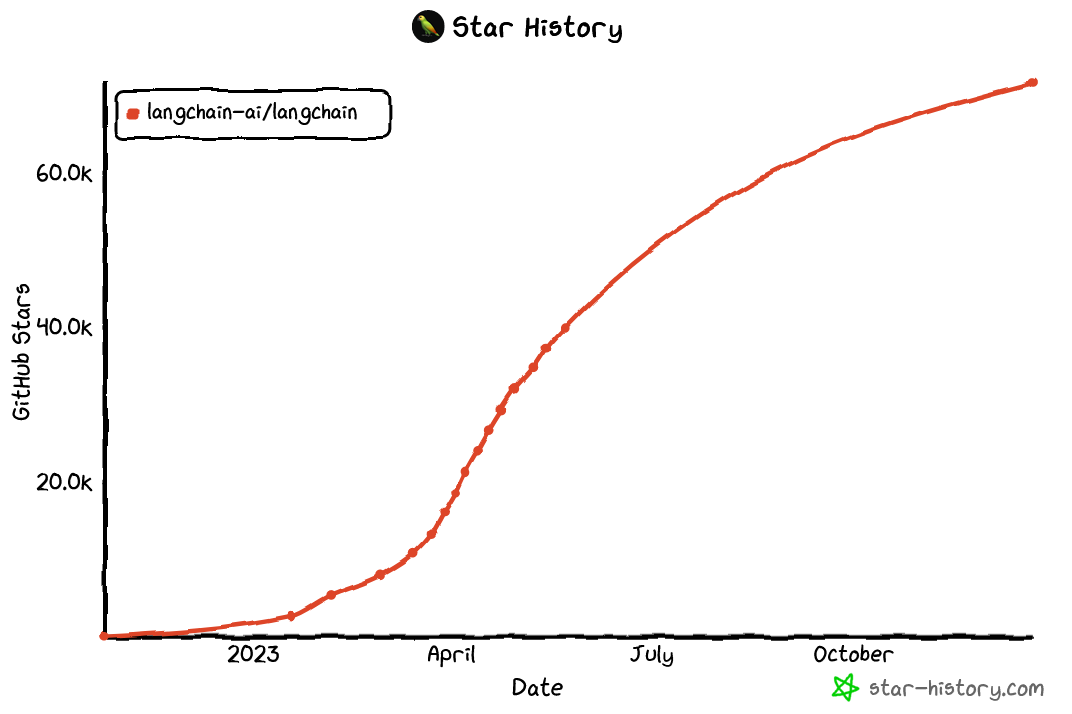

When building generative AI applications, organizations must move quickly. The rapid pace of innovation and heavy competitive pressure mean that accelerating the journey from prototype to production is no longer an afterthought but an imperative. A key part of this is choosing tools and frameworks that enable faster iteration cycles and easy experimentation by both developers and external users.

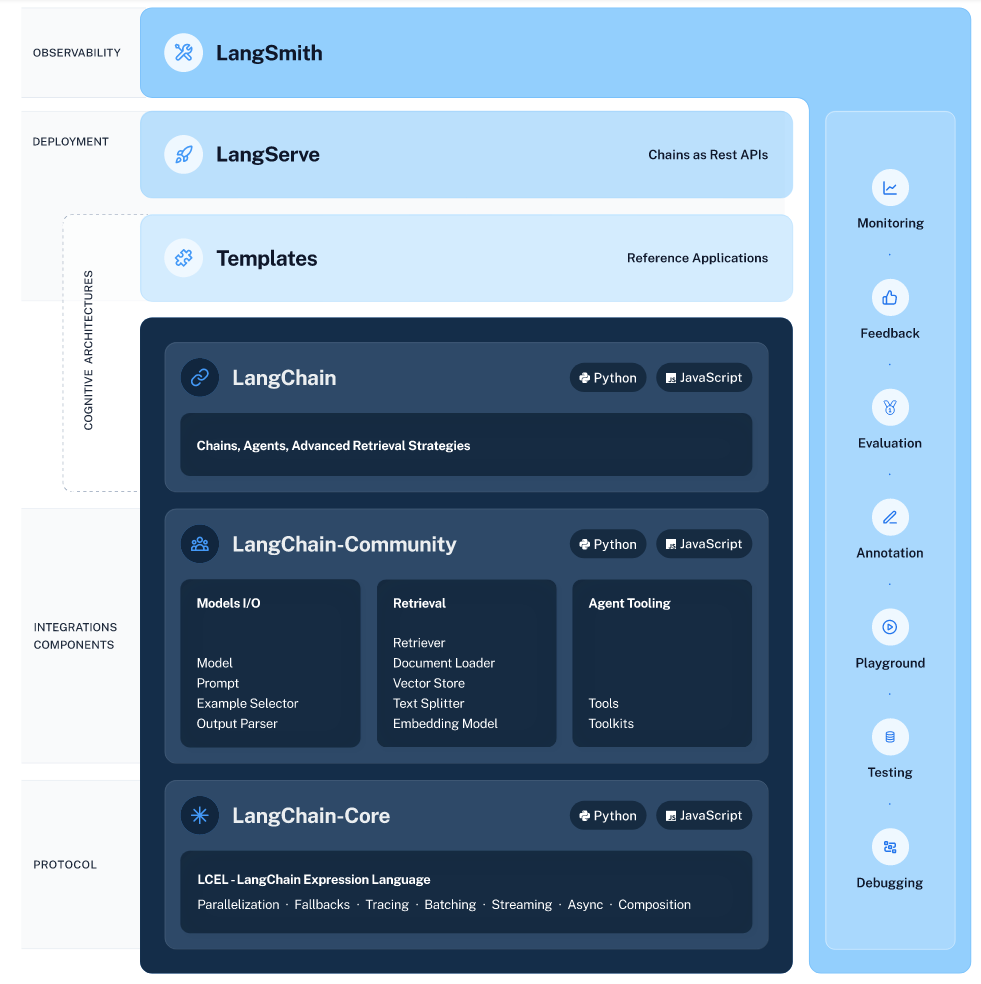

LangChain is an open source framework that simplifies the entire development lifecycle of generative AI applications. By making it easy to connect large language models (LLMs) to different data sources and tools, LangChain has emerged as the de facto standard for developing everything from quick prototypes to full generative AI products and features. LangChain has entered the deployment space with the release of LangServe, a library that turns LangChain chains and runnables into production-ready REST APIs with just a few lines of code.
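To make the "few lines of code" claim concrete, here is a minimal sketch of a LangServe app, not the code shipped in this repository. It assumes the `fastapi`, `langserve`, `langchain-core` and `langchain-aws` packages are installed; the model ID, the prompt wording and the `create_app` factory are illustrative assumptions.

```python
# Route prefix; matches the /claude-chat path used for the playground in this README.
CHAIN_PATH = "/claude-chat"
# Assumption: any Claude model ID you have access to on Amazon Bedrock.
MODEL_ID = "anthropic.claude-3-sonnet-20240229-v1:0"


def create_app():
    """Build a FastAPI app that serves a role-playing Claude chain."""
    # Third-party imports are kept inside the factory so the module can be
    # imported even where these packages are not installed.
    from fastapi import FastAPI
    from langchain_aws import ChatBedrock
    from langchain_core.prompts import ChatPromptTemplate
    from langserve import add_routes

    # The chain first assumes a role (system message), then answers the user.
    prompt = ChatPromptTemplate.from_messages([
        ("system", "You are {role}."),
        ("human", "{input}"),
    ])
    chain = prompt | ChatBedrock(model_id=MODEL_ID)

    app = FastAPI(title="Bedrock Chat")
    # add_routes exposes endpoints such as /invoke, /stream and /playground
    # under CHAIN_PATH.
    add_routes(app, chain, path=CHAIN_PATH)
    return app
```

If this were saved as a hypothetical `main.py`, it could be served locally with `uvicorn main:create_app --factory`, provided AWS credentials with Bedrock access are configured.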

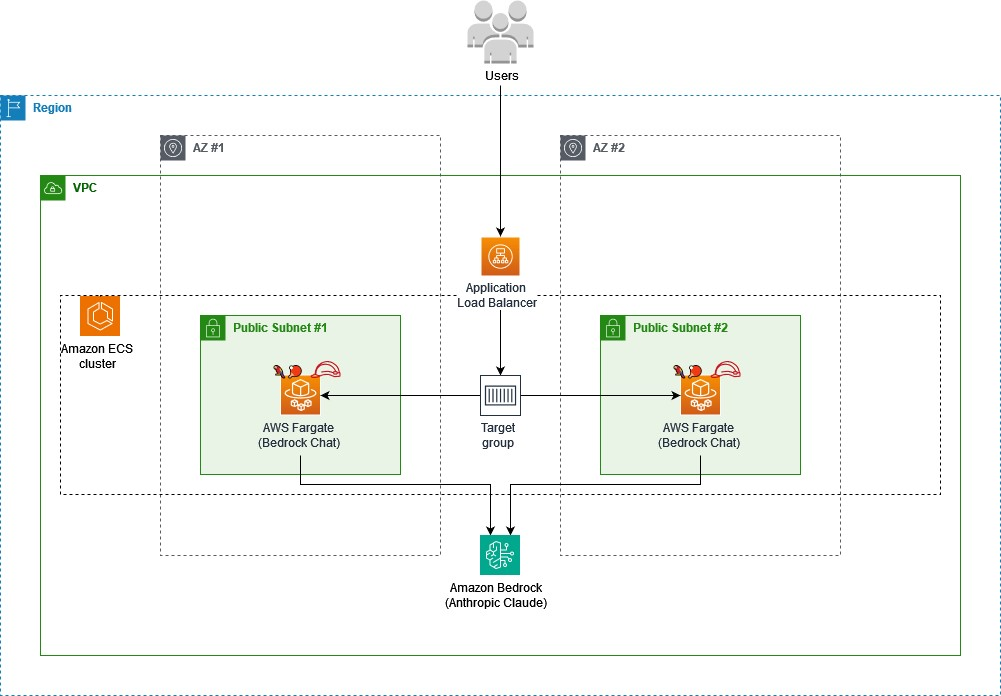

For this demo, the goal is to show how easy it is to build, ship and deploy LangChain-based applications with LangServe on Amazon Elastic Container Service (ECS) and AWS Fargate in a quick, secure and reliable way using AWS Copilot.

Once you finish all steps, AWS Copilot will have created a full architecture for an internet-facing, load-balanced REST API.

The application runs as a containerized service inside an ECS cluster. Every time the user sends a message, it invokes a specific Claude model version via Amazon Bedrock and returns the response to the user.
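The request flow described above can be sketched without LangChain, as a rough illustration of what a single Bedrock call looks like. This is not how the application itself is written (it goes through LangChain); it uses `boto3` directly, and the model ID, the system prompt wording and both function names are assumptions.

```python
import json

# Assumption: any Claude model ID you have access to on Amazon Bedrock.
MODEL_ID = "anthropic.claude-3-sonnet-20240229-v1:0"


def build_claude_request(role, message, max_tokens=512):
    """Build an Anthropic Messages API body for Bedrock's InvokeModel."""
    return json.dumps({
        "anthropic_version": "bedrock-2023-05-31",
        "max_tokens": max_tokens,
        "system": f"You are {role}.",  # the role the model assumes
        "messages": [{"role": "user", "content": message}],
    })


def ask_claude(role, message, model_id=MODEL_ID):
    """Send one message to Claude on Bedrock and return the reply text."""
    # Imported lazily so the request builder works without AWS dependencies.
    import boto3

    client = boto3.client("bedrock-runtime")
    response = client.invoke_model(
        modelId=model_id,
        body=build_claude_request(role, message),
    )
    payload = json.loads(response["body"].read())
    return payload["content"][0]["text"]
```

Calling `ask_claude("a helpful travel guide", "Where should I go in Lisbon?")` requires AWS credentials, a configured region, and model access enabled in Amazon Bedrock.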

The diagram below illustrates a small portion of this architecture:

Before you start, make sure you perform the following prerequisite actions:

-

If you’re using your own workstation, make sure the following tools are installed and properly configured

- Docker

- Conda (preferred) or Python (version

>=3.9) - AWS Copilot CLI

-

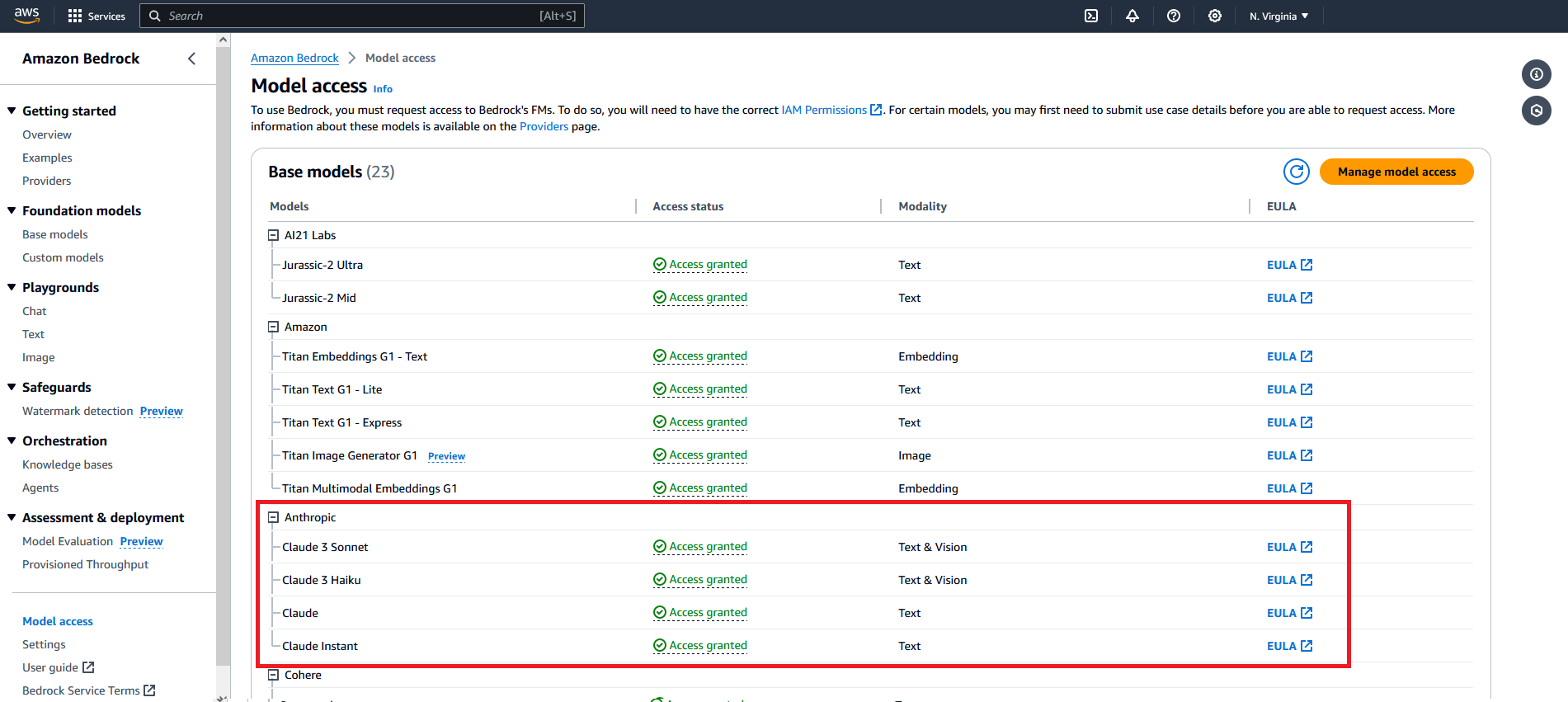

Enable access to Anthropic's Claude models via Amazon Bedrock

For more information on how to request model access, please refer to the Amazon Bedrock User Guide (Set up > Model access)
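As a convenience, the workstation prerequisites can be checked with a short script. This is only a sketch: the executable names `docker` and `copilot` are assumed to be the names on your `PATH`, and the script checks neither Conda nor Bedrock model access.

```python
import shutil
import sys

# Assumption: the usual binary names for Docker and the AWS Copilot CLI.
REQUIRED_TOOLS = ("docker", "copilot")
MIN_PYTHON = (3, 9)


def missing_tools(tools=REQUIRED_TOOLS, which=shutil.which):
    """Return the tools from `tools` that cannot be found on PATH."""
    return [tool for tool in tools if which(tool) is None]


def python_is_supported(version=sys.version_info, minimum=MIN_PYTHON):
    """Return True when the interpreter meets the minimum version."""
    return tuple(version[:2]) >= minimum


if __name__ == "__main__":
    missing = missing_tools()
    if missing:
        print("Missing tools:", ", ".join(missing))
    if not python_is_supported():
        print("Python", ".".join(map(str, MIN_PYTHON)), "or newer is required")
    if not missing and python_is_supported():
        print("All prerequisites found")
```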

Here’s a highlight of what you need to do:

- Switch to the application directory

      cd bedrock-chat-app

- Initialize the application

      copilot app init

- (Optional) Add custom user credentials and the LangChain API key as secrets

      # Bedrock Chat app
      copilot secret init --app bedrock-chat-app --name BEDROCK_CHAT_USERNAME
      copilot secret init --app bedrock-chat-app --name BEDROCK_CHAT_PASSWORD

      # LangSmith
      copilot secret init --app bedrock-chat-app --name LANGCHAIN_API_KEY

- Deploy the application

  The deployment should take approximately 10 minutes. AWS Copilot will return the service URL (`COPILOT_LB_DNS`) once the deployment finishes.

      copilot deploy --all --init-wkld --deploy-env --env dev

- Point your browser to the service playground (`<COPILOT_LB_DNS>/claude-chat/playground`) to test the service.

  Use the credentials from the optional secrets step to log in (the default username/password is `bedrock/bedrock`).

- Don't forget to clean up all resources when you're done!

      copilot app delete
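While the service is running, it can also be called programmatically instead of through the playground. The sketch below relies on the `/invoke` endpoint that LangServe exposes next to `/playground`. It assumes the service is protected with HTTP Basic authentication and that the chain accepts a JSON object as input; both are assumptions to check against the application code, and the function names are illustrative.

```python
import base64
import json
import urllib.request


def build_invoke_request(base_url, payload, username="bedrock", password="bedrock"):
    """Build a POST request for the chain's LangServe invoke endpoint."""
    # Assumption: the service uses HTTP Basic authentication.
    token = base64.b64encode(f"{username}:{password}".encode()).decode()
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/claude-chat/invoke",
        # LangServe expects the chain input under the "input" key.
        data=json.dumps({"input": payload}).encode(),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",
        },
        method="POST",
    )


def invoke(base_url, payload, **credentials):
    """Call the deployed chain and return the "output" field of the reply."""
    request = build_invoke_request(base_url, payload, **credentials)
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())["output"]
```

For example, `invoke("http://<COPILOT_LB_DNS>", {"input": "Hello!"})` would send one message using the default credentials; the shape of the payload depends on the input schema of the deployed chain.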

- (LangChain) Introducing LangServe, the best way to deploy your LangChains

- (LangChain) LangChain Expression Language

- (Anthropic) Claude Prompt Engineering Techniques - Bedrock Edition

- (AWS) Introducing AWS Copilot

- (AWS) Developing an application based on multiple microservices using AWS Copilot and AWS Fargate

- (AWS) AWS Copilot CLI