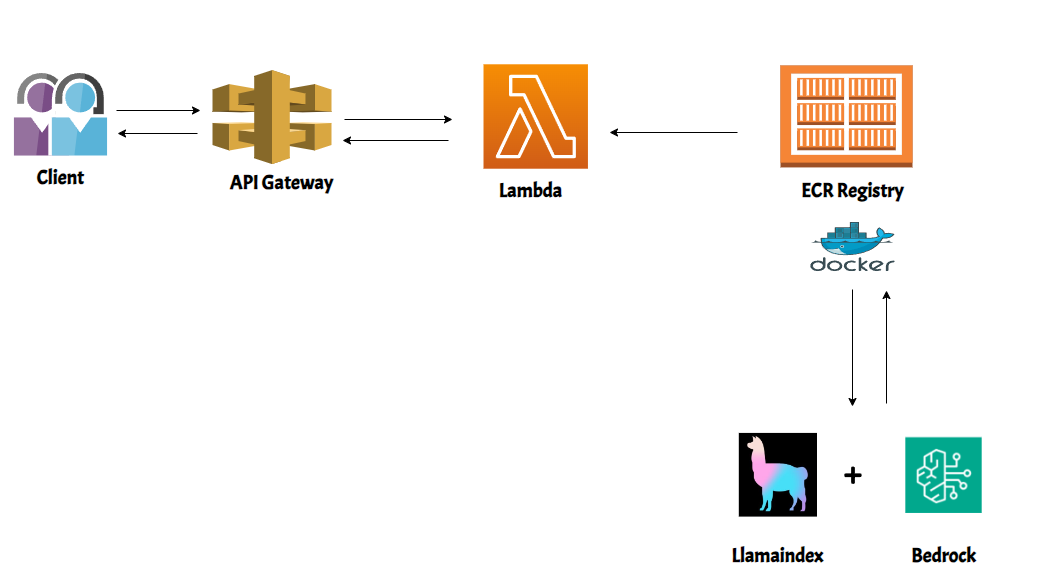

This project shows how to deploy a LlamaIndex chat assistant on AWS.

- Create a repository on AWS ECR

aws ecr create-repository --repository-name llamaindex_chatassistant
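Running `create-repository` a second time fails because the repository already exists. A minimal sketch of a guard follows; the function name `ensure_repo` is hypothetical, and it assumes the AWS CLI is installed and configured. Note that any failure of `describe-repositories` (including a permissions error) falls through to the create call.

```shell
# Hypothetical helper: create the ECR repository only if it is not already there.
ensure_repo() {
  repo_name="$1"
  # describe-repositories exits non-zero when the repository cannot be found
  aws ecr describe-repositories --repository-names "$repo_name" >/dev/null 2>&1 \
    || aws ecr create-repository --repository-name "$repo_name"
}
```

To use it, call `ensure_repo llamaindex_chatassistant` in place of the plain `create-repository` command.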

- Build the Docker Image

docker build -t my-llamaindex-chat-assistant-image .

- Authenticate Docker with AWS ECR

Authenticate your Docker CLI to the Amazon ECR registry where your repository is located. Replace [region_name] and [account_id] with your actual AWS region and account ID.

aws ecr get-login-password --region [region_name] | docker login --username AWS --password-stdin [account_id].dkr.ecr.[region_name].amazonaws.com

- Tag the Docker Image

Tag your Docker image so that it can be pushed to the ECR repository. Replace [region_name] and [account_id] with your AWS region and account ID.

docker tag my-llamaindex-chat-assistant-image:latest [account_id].dkr.ecr.[region_name].amazonaws.com/llamaindex_chatassistant:latest
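The same registry address appears in the login, tag, and push commands, so it can help to build it once from shell variables. The sketch below is illustrative only: the region `us-east-1`, the account ID `123456789012`, and the variable names are placeholders, not values from this project.

```shell
# Hypothetical placeholder values; substitute your own region and account ID.
AWS_REGION="us-east-1"
AWS_ACCOUNT_ID="123456789012"
REPO_NAME="llamaindex_chatassistant"

# Registry host and full image URI, assembled from the values above
ECR_REGISTRY="${AWS_ACCOUNT_ID}.dkr.ecr.${AWS_REGION}.amazonaws.com"
IMAGE_URI="${ECR_REGISTRY}/${REPO_NAME}:latest"

echo "Registry:  ${ECR_REGISTRY}"
echo "Image URI: ${IMAGE_URI}"
```

With these set, the tag command becomes `docker tag my-llamaindex-chat-assistant-image:latest "$IMAGE_URI"`, and `"$IMAGE_URI"` can be passed to `docker push` in the same way.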

- Push the Docker Image to ECR

Push the tagged image to your ECR repository:

docker push [account_id].dkr.ecr.[region_name].amazonaws.com/llamaindex_chatassistant:latest

- Create the Lambda function in the AWS console from the image you pushed, define your own test event, and test it.
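If you prefer the command line to the console, the same step can be sketched with the AWS CLI. Everything named here is an assumption for illustration: the function name, the execution role ARN, the account ID and region in the image URI, and the `{"query": ...}` event, whose real shape depends on your handler. The `--cli-binary-format` option applies to AWS CLI v2.

```shell
# All values below are hypothetical; replace them with your own.
FUNCTION_NAME="llamaindex-chat-assistant"
IMAGE_URI="123456789012.dkr.ecr.us-east-1.amazonaws.com/llamaindex_chatassistant:latest"
ROLE_ARN="arn:aws:iam::123456789012:role/my-lambda-execution-role"

# Create a container-image Lambda function from the image pushed to ECR.
create_function() {
  aws lambda create-function \
    --function-name "$FUNCTION_NAME" \
    --package-type Image \
    --code "ImageUri=${IMAGE_URI}" \
    --role "$ROLE_ARN"
}

# Invoke the function with a made-up test event and save the reply to response.json.
invoke_function() {
  aws lambda invoke \
    --function-name "$FUNCTION_NAME" \
    --cli-binary-format raw-in-base64-out \
    --payload '{"query": "Hello"}' \
    response.json
}
```

Run `create_function` once, wait until the function is ready, then run `invoke_function` and inspect `response.json`.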