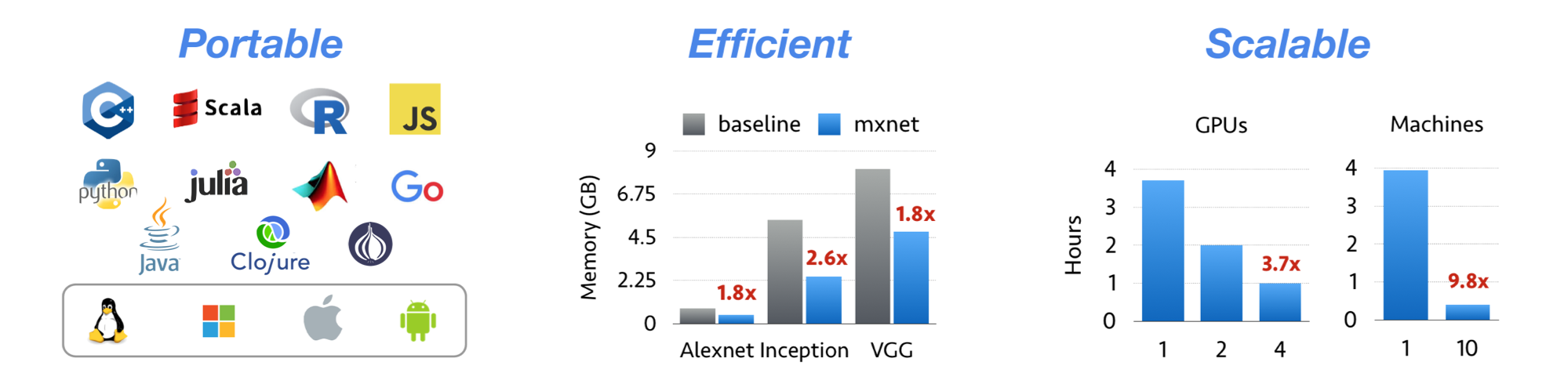

- Flexible and Efficient Library for Deep Learning

- Symbolic programming or Imperative programming

- Mixed programming available (symbolic + imperative)

- This tutorial is intended for those who are new to the MXNet deep learning framework

- The following link is a tutorial on the official MXNet homepage

Link: mxnet homepage tutorials

- Required library and very simple code

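      import mxnet as mx
      import numpy as np

      # Create a 3x3 array of ones on the first GPU.
      out = mx.nd.ones((3, 3), mx.gpu(0))

      # NDArray.asnumpy() copies the data into a NumPy array for printing.
      print(out.asnumpy())

- The output below is the result of executing the code above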

      <NDArray 3x3 @gpu(0)>
      [[ 1. 1. 1.]
       [ 1. 1. 1.]
       [ 1. 1. 1.]]

- The following code shows the most basic MXNet 'symbolic programming' technique, using only the Symbol API, the 'Symbol.bind' function, and the Optimizer classes. It is very flexible: once you understand the code below, you can implement whatever you want.

- K-means Algorithm Using Random Data

  I implemented the k-means algorithm for a speed comparison.

  1. 'kmeans.py' is implemented partially with the Symbol API. (Why partially? Only the assignment step of the k-means algorithm was implemented with the Symbol API.)

  Comparison between symbol and ndarray (see 'NDArray.API' below for more information): as the amount of data increases, symbol is a little faster than ndarray.

  Comparison between cpu and gpu: as the amount of data increases, gpu-symbol is overwhelmingly faster than cpu-symbol.

- Modified Recurrent Neural Networks Cell by JG

  Refer to this code when you want to freely change the shape or structure of an RNN cell, LSTM cell, or GRU cell. For example, it can be used to implement a modified structure such as sequence-to-sequence.

- Train flexible Fully Connected Neural Network with Sparse Symbols

- The following code is a high-level interface using the Symbol API and the Module API. It makes implementing a conventional neural network fairly easy and quick. However, if you are designing a flexible neural network, do not use it.

- Fully Connected Neural Network with LogisticRegressionOutput : Classifying MNIST data

- Fully Connected Neural Network with SoftmaxOutput : Classifying MNIST data

- Fully Connected Neural Network with SoftmaxOutput (flexible) : Classifying MNIST data

- Convolutional Neural Networks with SoftmaxOutput : Classifying MNIST data

- Convolutional Neural Networks with SoftmaxOutput (flexible) : Classifying MNIST data

- Recurrent Neural Networks with SoftmaxOutput : Classifying MNIST data

- Recurrent Neural Networks + LSTM with SoftmaxOutput : Classifying MNIST data

- Recurrent Neural Networks + LSTM with SoftmaxOutput (flexible) : Classifying MNIST data

- Recurrent Neural Networks + GRU with SoftmaxOutput : Classifying MNIST data

- Recurrent Neural Networks + GRU with SoftmaxOutput (flexible) : Classifying MNIST data

- Autoencoder Neural Networks with LogisticRegressionOutput : Using MNIST data

- Autoencoder Neural Networks with LogisticRegressionOutput (flexible) : Using MNIST data

- Fully Connected 'Sparse' Neural Network with SoftmaxOutput (flexible) : Classifying MNIST data

  Train flexible Fully Connected Neural Network with Sparse Symbols

- Linux: pip install graphviz (in the Anaconda command prompt)

- Windows:

  1. Download 'graphviz-2.38.msi' from 'http://www.graphviz.org/Download_windows.php'
  2. Install to 'C:\Program Files (x86)\'
  3. Add 'C:\Program Files (x86)\Graphviz2.38\bin' to the PATH environment variable

- Sample code:

      import mxnet as mx

      # Neural network
      data = mx.sym.Variable('data')
      label = mx.sym.Variable('label')

      # First hidden layer
      affine1 = mx.sym.FullyConnected(data=data, name='fc1', num_hidden=50)
      hidden1 = mx.sym.Activation(data=affine1, name='sigmoid1', act_type="sigmoid")

      # Second hidden layer
      affine2 = mx.sym.FullyConnected(data=hidden1, name='fc2', num_hidden=50)
      hidden2 = mx.sym.Activation(data=affine2, name='sigmoid2', act_type="sigmoid")

      # Output layer
      output_affine = mx.sym.FullyConnected(data=hidden2, name='fc3', num_hidden=10)
      output = mx.sym.SoftmaxOutput(data=output_affine, label=label)

      # Create the network graph
      graph = mx.viz.plot_network(symbol=output)
      graph.view()  # Show the graph and save it in PDF format.

- MXNET with Tensorboard (only available on Linux; currently only works in Python 2.7)

      pip install tensorboard

  Issue: line 80 of the 'tensorboard' file in '/home/user/anaconda3/bin' should be modified as shown below.

      for mod in package_path:
          module_space = mod + '/tensorboard/tensorboard' + '.runfiles'
          if os.path.isdir(module_space):
              return module_space

- If you want to see the results immediately, run the following command in a terminal window, in the directory where the event file exists.

      tensorboard --logdir=tensorboard --logdir=./ --port=6006

- Generative Adversarial Networks with fullyConnected Neural Network : Using MNIST data

- Deep Convolution Generative Adversarial Network : Using ImageNet, CIFAR10, MNIST data

  Code execution example:

      python main.py --state --epoch 100 --noise_size 100 --batch_size 200 --save_period 100 --dataset CIFAR10 --load_weights 100
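The flags in the execution example above could be parsed with argparse, which the dependency list names for command line input. The following is only a sketch: the meaning of each flag, the types, the defaults, and the helper name `build_parser` are assumptions made for illustration, and the repository's actual 'main.py' may define them differently.

```python
import argparse


def build_parser():
    """Hypothetical parser mirroring the flags of the example command."""
    parser = argparse.ArgumentParser(description="DCGAN options (illustrative sketch)")
    # --state is given without a value in the example, so it is modeled as a
    # boolean switch here (assumption).
    parser.add_argument("--state", action="store_true")
    parser.add_argument("--epoch", type=int, default=100)
    parser.add_argument("--noise_size", type=int, default=100)
    parser.add_argument("--batch_size", type=int, default=200)
    parser.add_argument("--save_period", type=int, default=100)
    # The dataset names are taken from the item title above.
    parser.add_argument("--dataset", type=str, default="CIFAR10",
                        choices=["ImageNet", "CIFAR10", "MNIST"])
    parser.add_argument("--load_weights", type=int, default=100)
    return parser


# Parse the same arguments as the example command instead of reading sys.argv.
example_command = (
    "--state --epoch 100 --noise_size 100 --batch_size 200 "
    "--save_period 100 --dataset CIFAR10 --load_weights 100"
)
args = build_parser().parse_args(example_command.split())
print(args)
```

Passing an explicit argument list to `parse_args` keeps the sketch runnable from a notebook or a test; a real script would call `parse_args()` with no arguments.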

- To configure a flexibly structured network, use the bind function of the Symbol API and an optimizer class. See the code for more information.

- Dynamic Routing Between Capsules : Capsule Network only using Symbol.API

- Dynamic Routing Between Capsules : Capsule Network using Module.API

- The NDArray API is different from MXNet symbolic coding. It is imperative coding and focuses on the NDArray of MXNet.

- The following link is a tutorial on the official Gluon homepage

- K-means Algorithm Using Random Data

  I implemented the k-means algorithm in two ways for a speed comparison.

  1. 'kmeans_numpy.py' is implemented using mxnet-ndarray
  2. 'kmeans.py' is implemented using numpy

  Comparison between ndarray and numpy: as the amount of data increases, ndarray is overwhelmingly faster than numpy.

  Comparison between cpu and gpu (using ndarray only): as the amount of data increases, gpu-ndarray is overwhelmingly faster than cpu-ndarray.
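For readers unfamiliar with the algorithm being timed, the k-means loop can be sketched in plain NumPy as follows. This is a minimal illustration, not the repository's code: the function names `assign` and `kmeans`, the synthetic two-blob data, and the fixed iteration count are all assumptions made for the example.

```python
import numpy as np


def assign(data, centroids):
    """Assignment step: index of the nearest centroid for every point."""
    # Broadcasting builds an (n_points, k, n_features) array of differences.
    diff = data[:, None, :] - centroids[None, :, :]
    return (diff ** 2).sum(axis=2).argmin(axis=1)


def kmeans(data, k, iterations=10, seed=0):
    """Plain Lloyd's k-means; returns (centroids, labels)."""
    rng = np.random.default_rng(seed)
    # Start from k distinct data points chosen at random.
    centroids = data[rng.choice(len(data), size=k, replace=False)].copy()
    for _ in range(iterations):
        labels = assign(data, centroids)
        # Update step: move each centroid to the mean of its assigned points.
        for j in range(k):
            members = data[labels == j]
            if len(members) > 0:  # keep the old centroid if a cluster is empty
                centroids[j] = members.mean(axis=0)
    return centroids, assign(data, centroids)


# Synthetic data (assumption): two well-separated 2-D blobs of 50 points each.
data_rng = np.random.default_rng(1)
blob_a = data_rng.normal(loc=0.0, scale=0.1, size=(50, 2))
blob_b = data_rng.normal(loc=5.0, scale=0.1, size=(50, 2))
data = np.concatenate([blob_a, blob_b])

centroids, labels = kmeans(data, k=2)
print(centroids)
```

The assignment step is a single broadcasted array expression, which is the kind of operation that maps directly onto NDArray or Symbol operators and benefits most from a GPU as the data grows.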

- Multiclass logistic regression : Classifying MNIST, CIFAR10, Fashion_MNIST data

- Fully Connected Neural Network : Classifying MNIST, CIFAR10, Fashion_MNIST data

- Convolution Neural Network : Classifying MNIST, CIFAR10, Fashion_MNIST data

- Autoencoder Neural Networks : Using MNIST and Fashion_MNIST data

- Convolution Autoencoder Neural Networks : Using MNIST and Fashion_MNIST data

- Recurrent Neural Network (RNN, LSTM, GRU) : Classifying Fashion_MNIST data

- The Gluon package is different from MXNet symbolic coding. It is imperative coding and focuses on MXNet with NDArray and Gluon. You can think of it as a deep learning framework that wraps the NDArray API to make it easier to use.

- The following link is a tutorial on the official Gluon homepage

- K-means Algorithm Using Random Data

  I implemented the k-means algorithm for a speed comparison.

  1. 'kmeans.py' is implemented using the Gluon package.

  Comparison between gluon and ndarray (see 'NDArray.API' above for more information): as the amount of data increases, Gluon is a little faster than ndarray.

  Comparison between cpu and gpu: as the amount of data increases, gpu-gluon is overwhelmingly faster than cpu-gluon.

- Using nn.Sequential when writing your high-level code

  - Multiclass logistic regression : Classifying MNIST, CIFAR10, Fashion_MNIST data

  - Fully Connected Neural Network : Classifying MNIST, CIFAR10, Fashion_MNIST data

  - Convolution Neural Network : Classifying MNIST, CIFAR10, Fashion_MNIST data

  - Autoencoder Neural Networks : Using MNIST and Fashion_MNIST data

  - Convolution Autoencoder Neural Networks : Using MNIST and Fashion_MNIST data

  - Recurrent Neural Network (RNN, LSTM, GRU) : Classifying Fashion_MNIST data

    'Recurrent Layers' in Gluon are the same as FusedRNNCell in the Symbol API. Because they are not flexible, Recurrent Layers are not used here.

- Using Block when designing a custom layer (flexible use of Gluon)

  - Convolution Neural Network with Block or HybridBlock : Classifying MNIST, CIFAR10, Fashion_MNIST data

    HybridBlock is less flexible than Block in network configuration.

- Neural Style with Gluon Package

  To configure a flexibly structured network, you need to be able to set the object you want to differentiate. See the code for more information.

- Deep Convolution Generative Adversarial Networks Using CIFAR10, FashionMNIST data

- Deep Convolution Generative Adversarial Networks Targeting Using CIFAR10, MNIST data

- Predicting the yen exchange rate with LSTM or GRU

  Goal: find 'xxxx' in "JPY '100' is 'xxxx' KRW". I used data from '2010.01.04' to '2017.11.25'.
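One common way to frame an exchange-rate series for an LSTM or GRU is a sliding window: the previous few values form the input sequence and the next value is the target. The source does not say which framing the yen example uses, so the sketch below is an assumption; the helper name `make_windows`, the window length, and the synthetic series are all hypothetical.

```python
import numpy as np


def make_windows(series, window):
    """Split a 1-D series into (inputs, targets) for next-step prediction.

    inputs has shape (n_samples, window, 1): a sequence of `window` past values.
    targets has shape (n_samples,): the value that follows each sequence.
    """
    series = np.asarray(series, dtype=np.float32)
    n_samples = len(series) - window
    inputs = np.stack([series[i:i + window] for i in range(n_samples)])
    targets = series[window:]
    # Add a trailing feature axis, since recurrent layers expect one.
    return inputs[..., None], targets


# Synthetic stand-in for daily KRW-per-100-JPY rates (not real data).
rates = np.linspace(1000.0, 1100.0, num=30)
x, y = make_windows(rates, window=5)
print(x.shape, y.shape)
```

Each row of `x` can then be fed to a recurrent network one time step at a time, with the matching entry of `y` as the regression target.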

- OS : Windows 10.1 64bit, and Ubuntu Linux 16.04.2 LTS (only for tensorboard)

- Python version (3.6.1) : anaconda3 4.4.0

- IDE : PyCharm Community Edition 2017.2.2 or Visual Studio Code

- mxnet-1.0.0

- numpy-1.12.1, matplotlib-2.0.2, tensorboard-1.0.0a7 (linux), graphviz -> (Visualization)

- tqdm -> (Extensible Progress Meter)

- opencv-3.3.0.10, struct, gzip, os, glob, threading -> (Data preprocessing)

- Pickle -> (Data save and restore)

- logging -> (Observation during learning)

- argparse -> (Command line input from user)

- urllib, requests -> (Web crawling)