This package implements a novel robotic manipulation technique for scooping objects in various scenarios. It enables autonomous picking of thin-profile objects, such as plastic cards, domino blocks, and Go stones, from a flat surface or from dense clutter, using a two-finger parallel-jaw gripper with one length-controllable digit. The technique can carry out complete bin picking, from the first object to the last. The package includes the gripper design, pre-scoop planning, object detection and instance segmentation with Mask R-CNN, the scoop-grasp manipulation code, and collision checking.

The video of the complete bin picking experiment via dig-grasping and scooping is available at https://youtu.be/A1oetxHKOyY.

- Universal Robot UR10

- Robotiq 140mm Adaptive parallel-jaw gripper

- RealSense Camera SR300

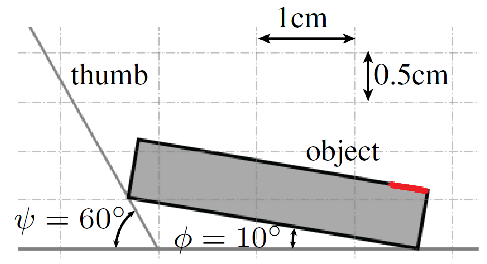

- Customized gripper design comprising a variable-length thumb and a dual-material finger. The variable-length thumb provides the finger length difference needed during scooping, and the dual-material fingertip allows dig-grasping and scooping to be combined.

This implementation requires the following dependencies (tested on Ubuntu 16.04 LTS):

- ROS Kinetic

- Urx for UR10 robot control

- robotiq_2finger_grippers: ROS driver for Robotiq Adaptive Grippers

- Mask R-CNN for instance segmentation (also see the dependencies therein).

- OpenCV 3.4.1 and Open3D 0.7.0.0

- PyBullet for collision check

Note: Jupyter Notebook is needed to run our programs.

Note: We use two separate Python environments in Anaconda: Python 2.7 for ROS Kinetic, Urx, and robotiq_2finger_grippers, and Python 3.5 for Mask R-CNN.
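Because the dependencies are split across two conda environments, it can help to confirm that each environment can import what it needs before starting an experiment. The following is a minimal sketch, not part of this package. The import names used in the example invocation (`rospy`, `urx`, `cv2`, `open3d`, `pybullet`) are the usual import names for these libraries but should be treated as assumptions for your installation. The script only uses calls available in both Python 2.7 and Python 3.5.

```python
from __future__ import print_function

import importlib
import sys


def missing_modules(names):
    """Return the names from `names` that cannot be imported in this interpreter."""
    missing = []
    for name in names:
        try:
            importlib.import_module(name)
        except ImportError:
            missing.append(name)
    return missing


if __name__ == "__main__":
    # Example (assumed module names): python check_deps.py rospy urx
    not_found = missing_modules(sys.argv[1:])
    if not_found:
        print("Missing in this environment:", ", ".join(not_found))
    else:
        print("All requested modules can be imported.")
```

Run it once in each activated environment, passing the module names that environment is expected to provide.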

We have developed a pre-scoop planner in Python that finds appropriate fingertip locations on the object face for a successful scoop.

To get the plan for a Go stone, a domino, or a triangular prism, run the corresponding program:

Scooping/Pre-Scoop Planning/Go_stone.py

Scooping/Pre-Scoop Planning/domino.py

Scooping/Pre-Scoop Planning/triangular.py
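Note that the planner directory name contains a space, so the path must be quoted when typed in a shell. As an alternative, the sketch below launches a planner from Python and passes the path as a single list element, which avoids shell quoting altogether. The helper name `planner_command` and the `repo_parent` parameter are hypothetical, and the sketch assumes the planner should run with the currently active interpreter.

```python
import os
import subprocess
import sys

# Directory and script names as listed above.
PLANNER_DIR = os.path.join("Scooping", "Pre-Scoop Planning")
PLANNERS = {
    "go_stone": "Go_stone.py",
    "domino": "domino.py",
    "triangular": "triangular.py",
}


def planner_command(obj, repo_parent="."):
    """Build the argument list that runs the planner for `obj`.

    `repo_parent` is the directory that contains the Scooping checkout.
    """
    script = os.path.join(repo_parent, PLANNER_DIR, PLANNERS[obj])
    return [sys.executable, script]


if __name__ == "__main__":
    # Example: python run_planner.py domino
    target = sys.argv[1] if len(sys.argv) > 1 else "go_stone"
    sys.exit(subprocess.call(planner_command(target)))
```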

In your catkin workspace:

cd ~/catkin_ws/src

git clone https://github.com/HKUST-RML/Scooping.git

cd ..

catkin_make

source devel/setup.bash

Activate the force/torque sensor, the Robotiq two-finger gripper, and the RealSense camera in three separate terminals:

roslaunch robotiq_ft_sensor gripper_sensor.launch

roslaunch robotiq_2f_gripper_control robotiq_action_server.launch comport:=/dev/ttyUSB0 stroke:=0.140

roslaunch realsense2_camera rs_camera.launch align_depth:=true
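If you prefer to script these launches rather than type them, the following sketch builds each `roslaunch` command as an argument list using the `name:=value` argument syntax shown above. The helper `roslaunch_argv` is hypothetical and not part of this package. Starting all three processes from one script is an assumption about your workflow; the commands themselves are exactly the three listed above.

```python
import subprocess


def roslaunch_argv(package, launch_file, **launch_args):
    """Build a roslaunch argument list; launch args are sorted by name for a stable order."""
    argv = ["roslaunch", package, launch_file]
    for name in sorted(launch_args):
        argv.append("{}:={}".format(name, launch_args[name]))
    return argv


LAUNCHES = [
    roslaunch_argv("robotiq_ft_sensor", "gripper_sensor.launch"),
    roslaunch_argv("robotiq_2f_gripper_control", "robotiq_action_server.launch",
                   comport="/dev/ttyUSB0", stroke="0.140"),
    roslaunch_argv("realsense2_camera", "rs_camera.launch", align_depth="true"),
]

if __name__ == "__main__":
    # Requires a sourced ROS workspace so that `roslaunch` is on PATH.
    processes = [subprocess.Popen(argv) for argv in LAUNCHES]
    for proc in processes:
        proc.wait()
```

Values are passed as strings so that `0.140` is forwarded unchanged.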

Each of the experiments below requires two separate terminals: one for the instance segmentation/object detection program (activate the conda environment with Python 3.5) and one for the scoop manipulation program (activate the conda environment with Python 2.7). Run them in the sequence given below.

- Start Jupyter Notebook via `jupyter notebook` in the first terminal and run `Scooping/instance_segmentation/samples/stones/stone_detection_ros_both_hori_and_ver.ipynb` for instance segmentation and object pose detection.

- Start Jupyter Notebook via `jupyter notebook` in the second terminal and run `Scooping/scoop/src/Go_stone/Go_stone_variable_thumb_round_bowl_only_skim.ipynb`.

- Start Jupyter Notebook via `jupyter notebook` in the first terminal and run `Scooping/instance_segmentation/samples/plastic_cards/plastic_cards_detection_ros.ipynb`.

- Start Jupyter Notebook via `jupyter notebook` in the second terminal and run `Scooping/scoop/src/plastic_card/plastic_card_variable_thumb.ipynb`.

- Start Jupyter Notebook via `jupyter notebook` in the first terminal and run `Scooping/instance_segmentation/samples/domino/domino_detection_ros_both_hori_and_ver.ipynb` for instance segmentation and object pose detection.

- Start Jupyter Notebook via `jupyter notebook` in the second terminal and run `Scooping/scoop/src/domino/domino_variable_thumb_round_bowl.ipynb`.

- Start Jupyter Notebook via `jupyter notebook` in the first terminal and run `Scooping/instance_segmentation/samples/triangle/triangle_detection_ros.ipynb` for instance segmentation and object pose detection.

- Start Jupyter Notebook via `jupyter notebook` in the second terminal and run `Scooping/scoop/src/triangle/triangle_variable_thumb_round_bowl.ipynb`.

- First check the requirements for dig-grasping.

- Start Jupyter Notebook via `jupyter notebook` in the first terminal and run `Scooping/instance_segmentation/samples/stones/stone_detection_ros_both_hori_and_ver.ipynb` for instance segmentation and object pose detection.

- Start Jupyter Notebook via `jupyter notebook` in the second terminal and run `Scooping/scoop/src/Go_stone/Go_stone_variable_thumb_round_bowl_diggrasp_and_scoop.ipynb`.

For any technical issues, please contact Tierui He (theae@connect.ust.hk) or Shoaib Aslam (saslamaa@connect.ust.hk).