📄Paper | 🏠️Homepage | 📥Download | 💎Demo | 🤖Benchmarks

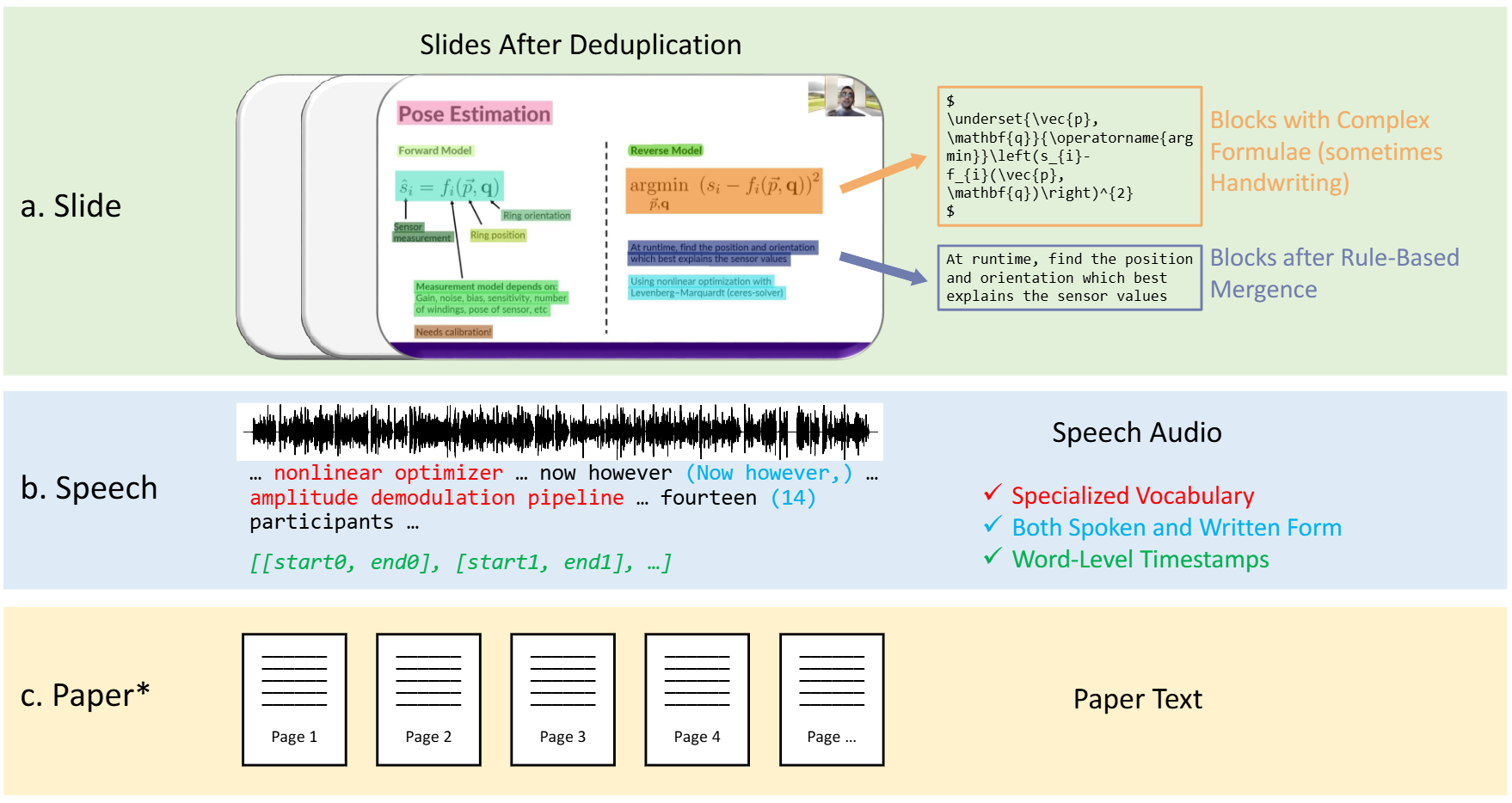

The overview of our 🎓M3AV dataset:

- The first component is slides annotated with simple and complex blocks. The blocks are then merged according to a set of rules.

- The second component is speech containing special vocabulary, spoken and written forms, and word-level timestamps.

- The third component is the paper corresponding to the video. The asterisk (*) denotes that only computer science videos have corresponding papers.

| Video Field | Video Name | Total Hours | Total Counts |
|---|---|---|---|
| Human-computer Interaction | CHI 2021 Paper Presentations (CHI) | 55.00 | 660 |
| Human-computer Interaction | UbiComp 2020 Presentations (Ubi) | 10.65 | 107 |
| Biomedical Sciences | NIH Director's Wednesday Afternoon Lectures (NIH) | 237.71 | 228 |
| Biomedical Sciences | Introduction to the Principles and Practice of Clinical Research (IPP) | 42.27 | 67 |
| Mathematics | Oxford Mathematics (MLS) | 27.23 | 51 |

We download academic lectures from three fields, Human-computer Interaction, Biomedical Sciences, and Mathematics, as shown in the table above.
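As a quick sanity check on the table above, the per-field and overall totals can be recomputed with a few lines of Python. This is only an illustrative sketch: the figures are copied by hand from the table, and the names `SOURCES` and `summarize` are hypothetical helpers, not part of the released dataset tooling.

```python
from collections import defaultdict

# (field, short name, total hours, video count), copied by hand from the table above.
SOURCES = [
    ("Human-computer Interaction", "CHI", 55.00, 660),
    ("Human-computer Interaction", "Ubi", 10.65, 107),
    ("Biomedical Sciences", "NIH", 237.71, 228),
    ("Biomedical Sciences", "IPP", 42.27, 67),
    ("Mathematics", "MLS", 27.23, 51),
]


def summarize(sources):
    """Return {field: (total_hours, total_count)} aggregated over all sources."""
    per_field = defaultdict(lambda: [0.0, 0])
    for field, _name, hours, count in sources:
        per_field[field][0] += hours
        per_field[field][1] += count
    return {field: (round(h, 2), c) for field, (h, c) in per_field.items()}


# Overall totals across every source in the table.
total_hours = round(sum(hours for _, _, hours, _ in SOURCES), 2)
total_count = sum(count for _, _, _, count in SOURCES)

if __name__ == "__main__":
    for field, (hours, count) in summarize(SOURCES).items():
        print(f"{field}: {hours:.2f} h over {count} videos")
    print(f"All fields: {total_hours:.2f} h over {total_count} videos")
```

Summed this way, the five sources in the table come to 372.86 hours across 1,113 videos.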

- [2024-08] 🤖All Benchmarks have been released!

- [2024-06] 🤖Benchmarks of LLaMA-2 and GPT-4 have been released!

- [2024-05] 🎉Our work has been accepted to the ACL 2024 main conference!

- [2024-04] 🔥v1.0 has been released! We have further refined all speech data. Specifically, the training set uses text-normalised Whisper results, while the development and test sets use a manual combination of Whisper and Microsoft STT results.

The `demo` folder contains a sample for demonstration.