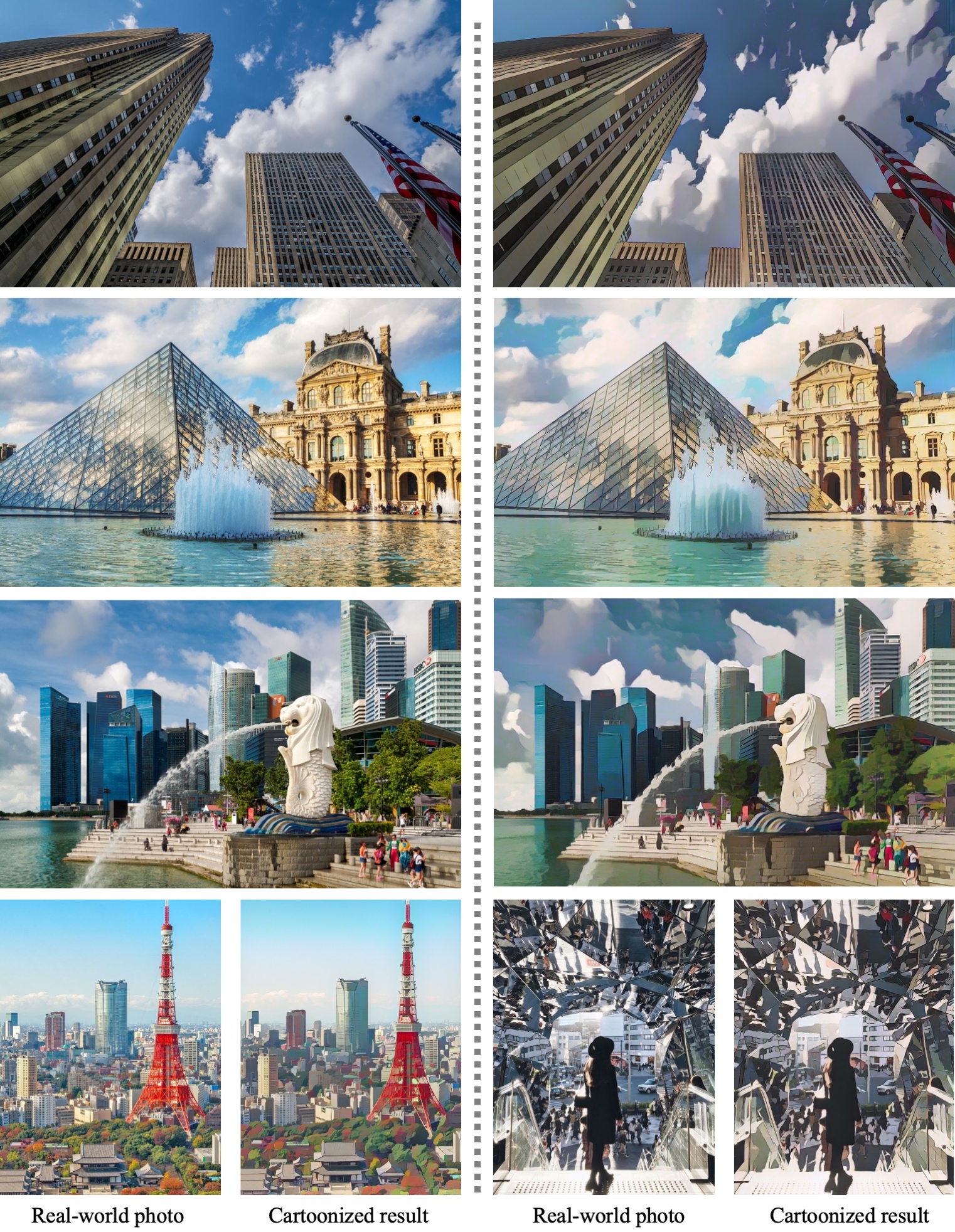

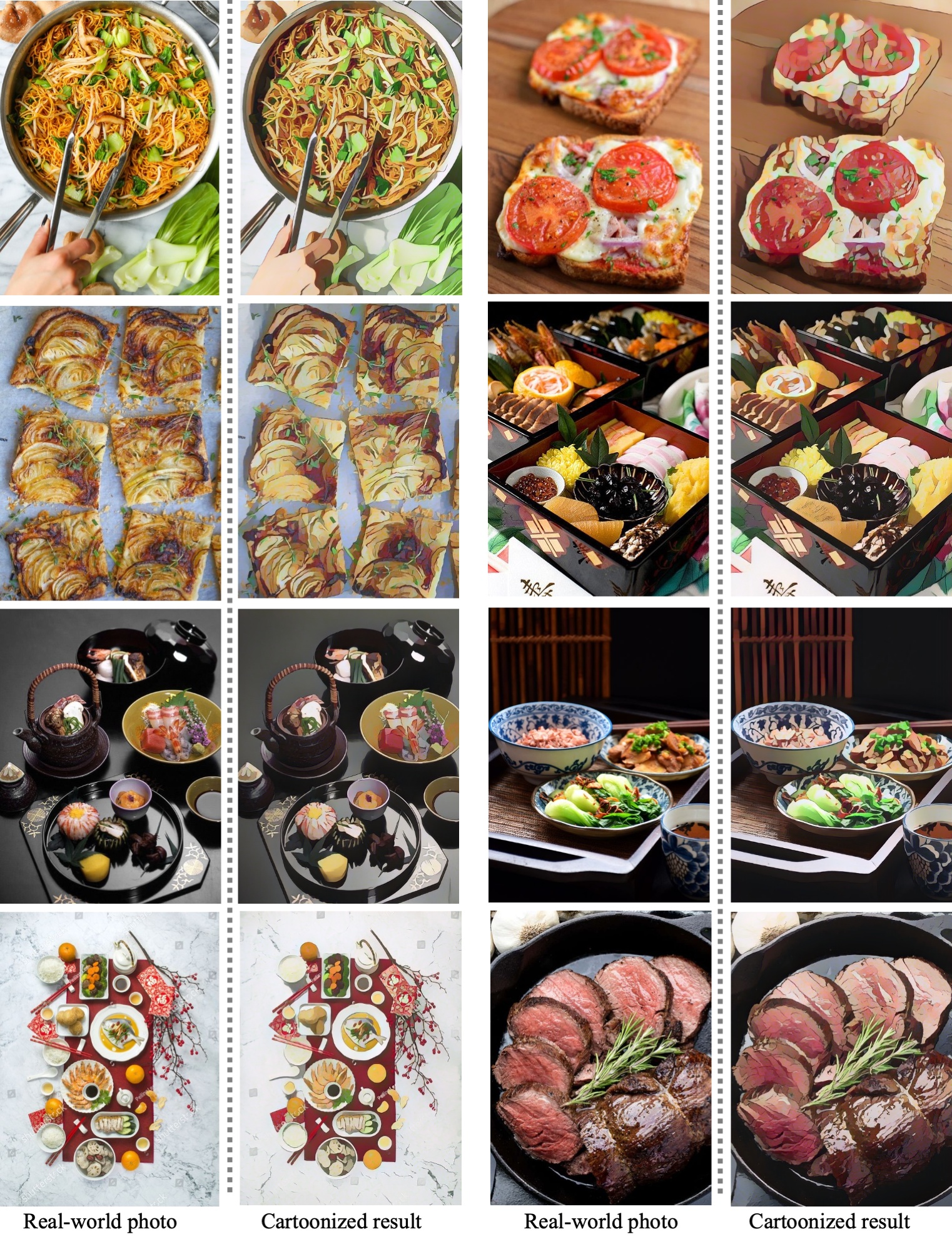

TensorFlow implementation of the CVPR 2020 paper “Learning to Cartoonize Using White-box Cartoon Representations”.

This repo is under construction. Inference code is available now; training code will be updated soon.

- Training code: Linux or Windows

- NVIDIA GPU with CUDA and cuDNN for performance

- Inference code: Linux, Windows and macOS

- The steps below assume you already have an NVIDIA GPU with CUDA and cuDNN installed

- Install tensorflow-gpu; we tested versions 1.12.0 and 1.13.0rc0

- Install scikit-image==0.14.5; other versions may cause problems
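To confirm that an environment matches the versions listed above, a quick check along these lines may help. This is a minimal sketch and not part of the repo: the names `TESTED_TF`, `PINNED_SKIMAGE`, `version_warnings` and `check_environment` are hypothetical, and the code relies only on the `__version__` attribute that both packages expose.

```python
# Versions named in this README; the constant names are illustrative.
TESTED_TF = ("1.12.0", "1.13.0rc0")
PINNED_SKIMAGE = "0.14.5"


def version_warnings(tf_version, skimage_version):
    """Return a list of warnings for versions that differ from the README."""
    # TensorFlow may report release candidates as e.g. "1.13.0-rc0",
    # so drop the hyphen before comparing with the pip-style version.
    tf_version = tf_version.replace("-", "")
    warnings = []
    if tf_version not in TESTED_TF:
        warnings.append(
            "tensorflow %s is untested (tested: %s)"
            % (tf_version, ", ".join(TESTED_TF))
        )
    if skimage_version != PINNED_SKIMAGE:
        warnings.append(
            "scikit-image %s differs from the pinned %s"
            % (skimage_version, PINNED_SKIMAGE)
        )
    return warnings


def check_environment():
    """Import both packages and report any version mismatch."""
    # Imported lazily so version_warnings() works without them installed.
    import tensorflow as tf
    import skimage

    return version_warnings(tf.__version__, skimage.__version__)
```

Calling `check_environment()` returns an empty list when both packages match the versions above, and one message per mismatch otherwise.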

- Store test images in /test_code/test_images

- Run /test_code/cartoonize.py

- Results will be saved in /test_code/cartoonized_images
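The three inference steps above can be wrapped in a small driver script. The sketch below is an illustration under stated assumptions: it assumes `cartoonize.py` takes no arguments and resolves `test_images` and `cartoonized_images` relative to the working directory, so it is launched from inside `test_code`. The helper names `list_images` and `run_inference` and the `IMAGE_EXTENSIONS` tuple are hypothetical and do not come from the repo.

```python
import os
import subprocess
import sys

# The extensions treated as images here are an assumption, not taken from the repo.
IMAGE_EXTENSIONS = (".jpg", ".jpeg", ".png")


def list_images(folder):
    """Return the sorted image file names found directly inside `folder`."""
    return sorted(
        name for name in os.listdir(folder)
        if name.lower().endswith(IMAGE_EXTENSIONS)
    )


def run_inference(repo_root="."):
    """Run cartoonize.py from inside test_code and return the output names."""
    test_code = os.path.join(repo_root, "test_code")
    inputs = list_images(os.path.join(test_code, "test_images"))
    if not inputs:
        raise RuntimeError("No images found in test_code/test_images")

    # Assumes the script needs no arguments and uses paths relative
    # to its own folder; check=True raises if the script fails.
    subprocess.run([sys.executable, "cartoonize.py"], cwd=test_code, check=True)

    return list_images(os.path.join(test_code, "cartoonized_images"))
```

Checking for input images first avoids starting TensorFlow only to find an empty folder.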

- Place your training data in corresponding folders in /dataset

- Run pretrain.py, results will be saved in /pretrain folder

- Run train.py, results will be saved in /train_cartoon folder

- The code was cleaned up from a production environment and has not been tested

- There may be minor problems, but they should be easy to resolve

- Due to copyright issues, we cannot provide the cartoon images used for training

- However, these training datasets are easy to prepare

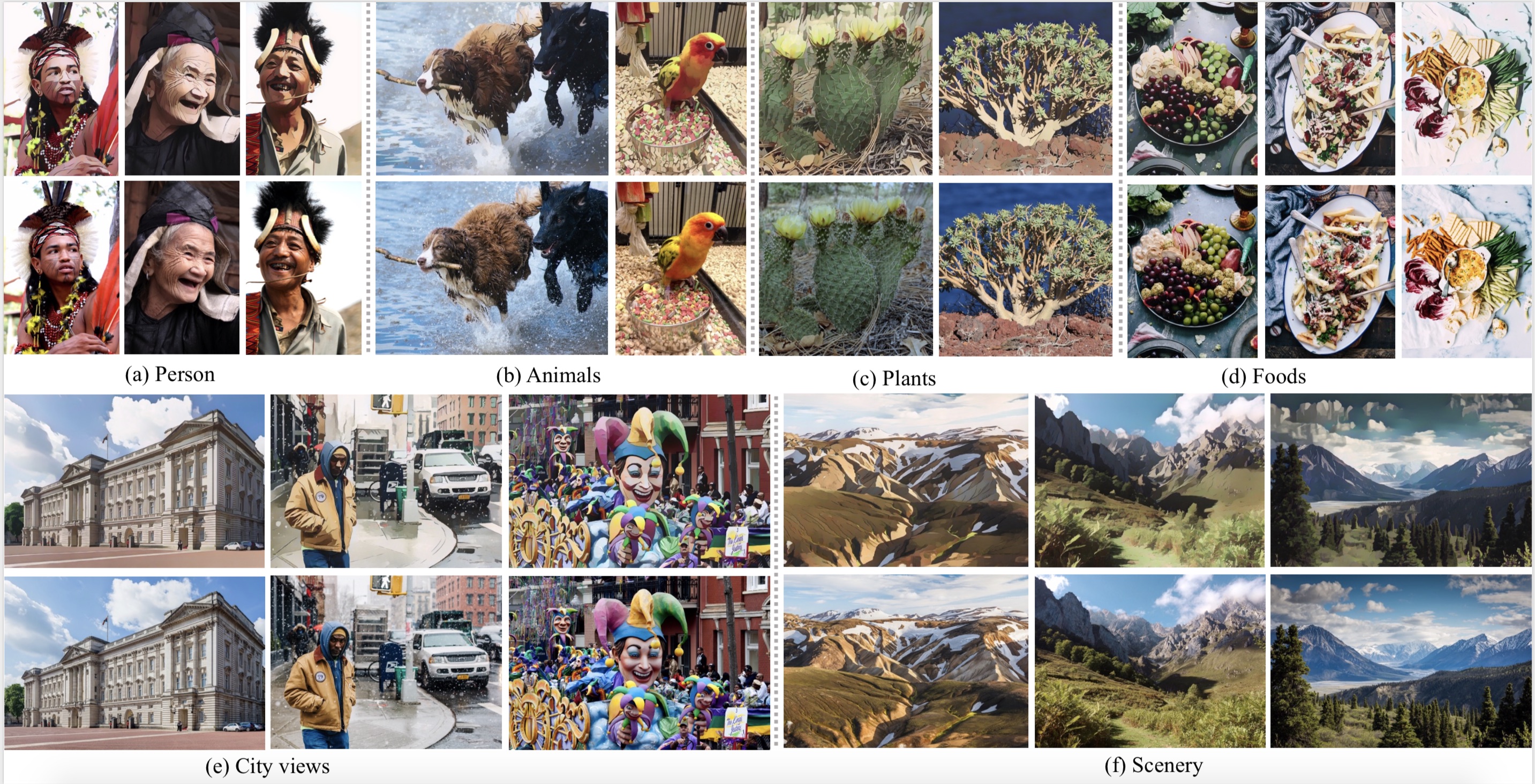

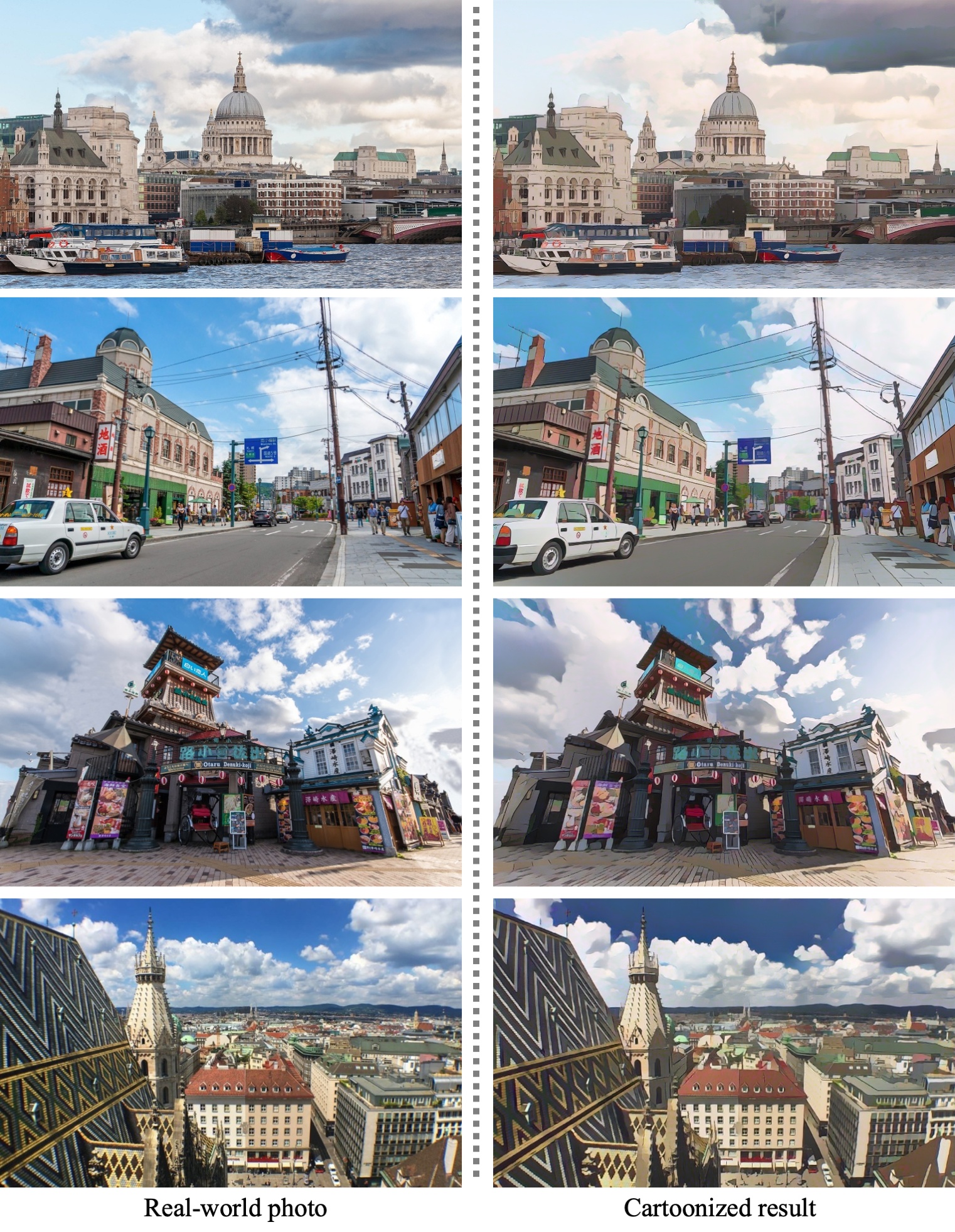

- Scenery images are collected from films by Shinkai Makoto, Miyazaki Hayao and Hosoda Mamoru

- Split the films into frames, then randomly crop and resize them to 256x256
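The crop-and-resize step could look roughly like the sketch below. It assumes the frames have already been extracted to image files, and it uses Pillow, which is not listed as a dependency of this repo. The function names are hypothetical, and the choice of a square crop whose side equals the shorter frame edge is an assumption, since the crop size is not stated here.

```python
import random


def random_square_crop_box(width, height, rng=random):
    """Pick a random square crop box (left, top, right, bottom) inside a frame.

    The side length equals the shorter frame edge; this is an assumption,
    not a setting taken from the repo.
    """
    side = min(width, height)
    left = rng.randint(0, width - side)
    top = rng.randint(0, height - side)
    return left, top, left + side, top + side


def crop_and_resize(frame_path, out_path, size=256):
    """Randomly crop one extracted frame and resize it to size x size."""
    # Pillow is imported lazily so the box helper works without it.
    from PIL import Image

    with Image.open(frame_path) as frame:
        box = random_square_crop_box(*frame.size)
        frame.crop(box).resize((size, size), Image.BICUBIC).save(out_path)
```

For a 1920x1080 frame this picks a 1080x1080 window at a random horizontal offset and scales it down to 256x256.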

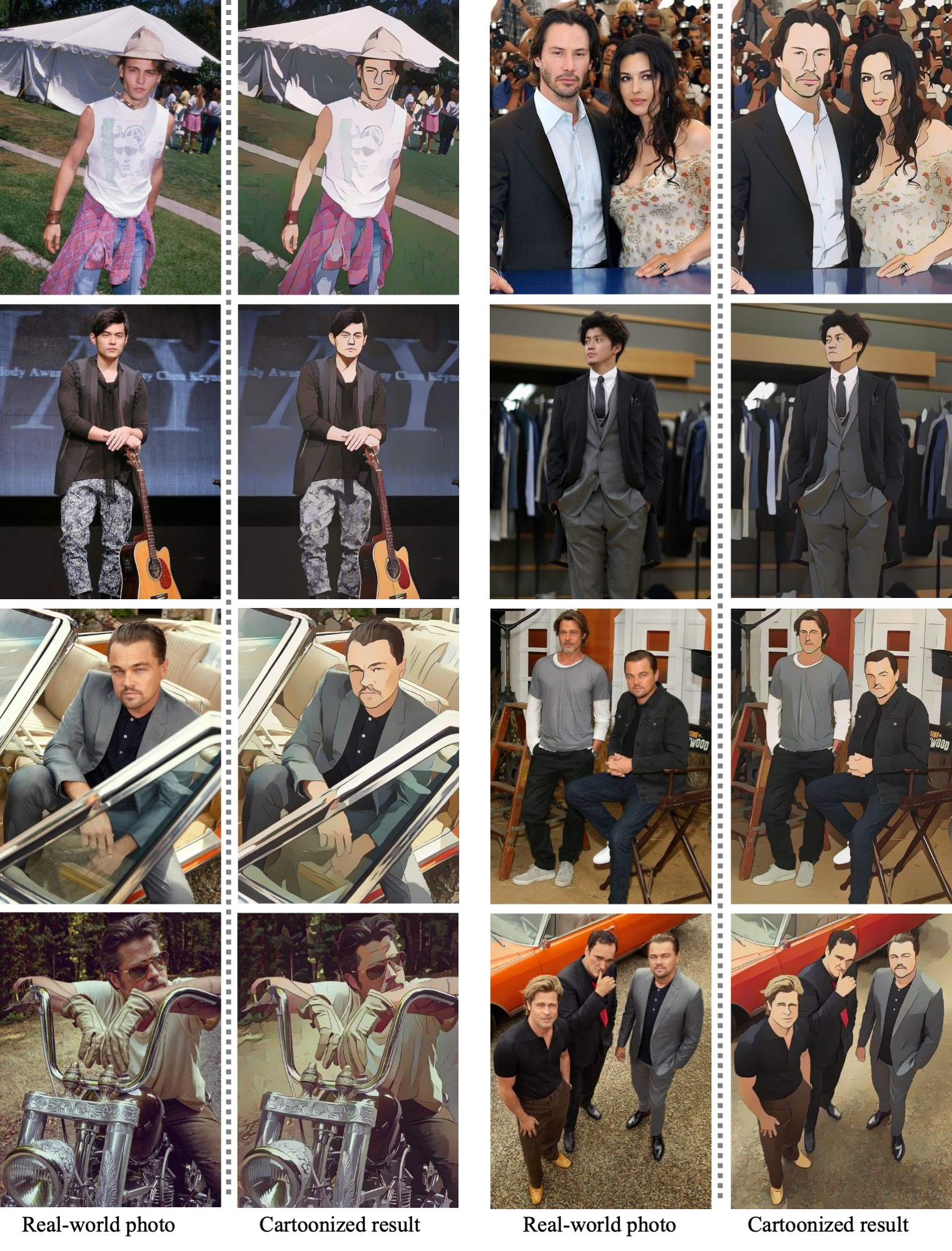

- Portrait images are from Kyoto Animation and P.A. Works

- We use [lbpcascade_animeface](https://github.com/nagadomi/lbpcascade_animeface) to detect facial areas

- Manual data cleaning will greatly increase the quality of both datasets
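Face detection with that cascade could be sketched as follows. The detector options mirror the usage example in the lbpcascade_animeface README. Everything else is an assumption for illustration: the function names, the `margin` used to grow each box, and the location of the `lbpcascade_animeface.xml` file, which must be downloaded from that repo.

```python
def expand_face_box(x, y, w, h, img_w, img_h, margin=0.5):
    """Grow a detected face box by `margin` on each side, clipped to the image.

    The margin value is an illustrative assumption, not a repo setting.
    """
    pad_x, pad_y = int(w * margin), int(h * margin)
    left, top = max(0, x - pad_x), max(0, y - pad_y)
    right, bottom = min(img_w, x + w + pad_x), min(img_h, y + h + pad_y)
    return left, top, right, bottom


def crop_faces(image_path, cascade_file="lbpcascade_animeface.xml"):
    """Detect anime faces in one frame and return the cropped regions."""
    # OpenCV is imported lazily so the box helper works without it.
    import cv2

    cascade = cv2.CascadeClassifier(cascade_file)
    image = cv2.imread(image_path, cv2.IMREAD_COLOR)
    if image is None:
        raise RuntimeError("%s: could not be read" % image_path)
    gray = cv2.equalizeHist(cv2.cvtColor(image, cv2.COLOR_BGR2GRAY))

    # Detector options follow the example in the lbpcascade_animeface README.
    faces = cascade.detectMultiScale(
        gray, scaleFactor=1.1, minNeighbors=5, minSize=(24, 24)
    )

    img_h, img_w = image.shape[:2]
    crops = []
    for (x, y, w, h) in faces:
        left, top, right, bottom = expand_face_box(x, y, w, h, img_w, img_h)
        crops.append(image[top:bottom, left:right])
    return crops
```

The returned crops still benefit from the manual cleaning mentioned above, since a cascade detector can produce false positives.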

If you use this code for your research, please cite our paper: