Paper | Supplementary | Demo

Tao Yang¹, Peiran Ren¹, Xuansong Xie¹, Lei Zhang¹,²

¹DAMO Academy, Alibaba Group, Hangzhou, China

²Department of Computing, The Hong Kong Polytechnic University, Hong Kong, China

(2021-12-29) Add online demos

(2021-12-16) More models, including one-to-many FSRs, will be released. Stay tuned.

(2021-12-16) Release a simplified version of the GPEN training code. It differs from the implementation described in our paper but can achieve comparable performance. We strongly recommend changing the degradation model.

(2021-12-09) Add face parsing to better paste restored faces back.

(2021-12-09) GPEN can now run on CPU: simply omit --use_cuda.

(2021-12-01) GPEN can now run on Windows machines without compiling CUDA code. Please check it out. Thanks to Animadversio. Alternatively, you can try GPEN-Windows. Many thanks to Cioscos.

(2021-10-22) GPEN can now work with SR methods. An SR model that I trained is provided; replace it with your own model if necessary.

(2021-10-11) The Colab demo for GPEN is now available.
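The 2021-12-09 entry notes that CPU inference only requires leaving out --use_cuda. A minimal sketch of how such an opt-in flag can map to a device name, assuming an argparse parser that mirrors a subset of the flags from the face_enhancement.py example in the usage steps. The parser and the select_device helper are hypothetical illustrations, not GPEN's actual argument handling.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    # Hypothetical parser mirroring a few flags from the face_enhancement.py example;
    # GPEN's real parser may define more options and different defaults.
    parser = argparse.ArgumentParser(description="GPEN-style face enhancement (sketch)")
    parser.add_argument("--model", type=str, default="GPEN-BFR-512")
    parser.add_argument("--size", type=int, default=512)
    parser.add_argument("--use_sr", action="store_true", help="also run the SR model")
    parser.add_argument("--use_cuda", action="store_true", help="run on GPU; omit for CPU")
    parser.add_argument("--indir", type=str, default="examples/imgs")
    parser.add_argument("--outdir", type=str, default="examples/outs-BFR")
    return parser


def select_device(use_cuda: bool) -> str:
    # store_true flags default to False, so leaving out --use_cuda selects the CPU.
    return "cuda" if use_cuda else "cpu"


# Parse an explicit argument list (no --use_cuda) instead of sys.argv for the demo.
args = build_parser().parse_args(["--model", "GPEN-BFR-512", "--size", "512"])
print(select_device(args.use_cuda))  # cpu
```

A real PyTorch script would typically also check torch.cuda.is_available() before choosing "cuda", so that a GPU flag on a machine without a GPU fails gracefully.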

- Clone this repository:

git clone https://github.com/yangxy/GPEN.git

cd GPEN

- Download the RetinaFace model and our pre-trained models (not our best models, due to commercial issues) and put them into weights/.

RetinaFace-R50 | ParseNet-latest | model_ir_se50 | GPEN-BFR-512 | GPEN-BFR-512-D | GPEN-BFR-256 | GPEN-BFR-256-D | GPEN-Colorization-1024 | GPEN-Inpainting-1024 | GPEN-Seg2face-512 | rrdb_realesrnet_psnr
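Before running a script, it can help to confirm that the downloaded checkpoints actually landed in weights/. A minimal sketch, assuming each model is stored as weights/<name>.pth. The REQUIRED_FOR_BFR list is our guess at what the face_enhancement.py example loads (face detector, face parser, BFR model, and the SR model because of --use_sr), and the missing_weights helper is hypothetical; adjust both to your setup.

```python
from pathlib import Path

# Assumption: each checkpoint is saved as weights/<name>.pth.
# Hypothetical list for the BFR example; edit it for other scripts.
REQUIRED_FOR_BFR = ["RetinaFace-R50", "ParseNet-latest", "GPEN-BFR-512", "rrdb_realesrnet_psnr"]


def missing_weights(names, weights_dir="weights", suffix=".pth"):
    """Return the model names whose checkpoint file is not present in weights_dir."""
    root = Path(weights_dir)
    return [name for name in names if not (root / f"{name}{suffix}").is_file()]


if __name__ == "__main__":
    missing = missing_weights(REQUIRED_FOR_BFR)
    if missing:
        print("Missing checkpoints:", ", ".join(missing))
    else:
        print("All checkpoints found.")
```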

- Restore face images:

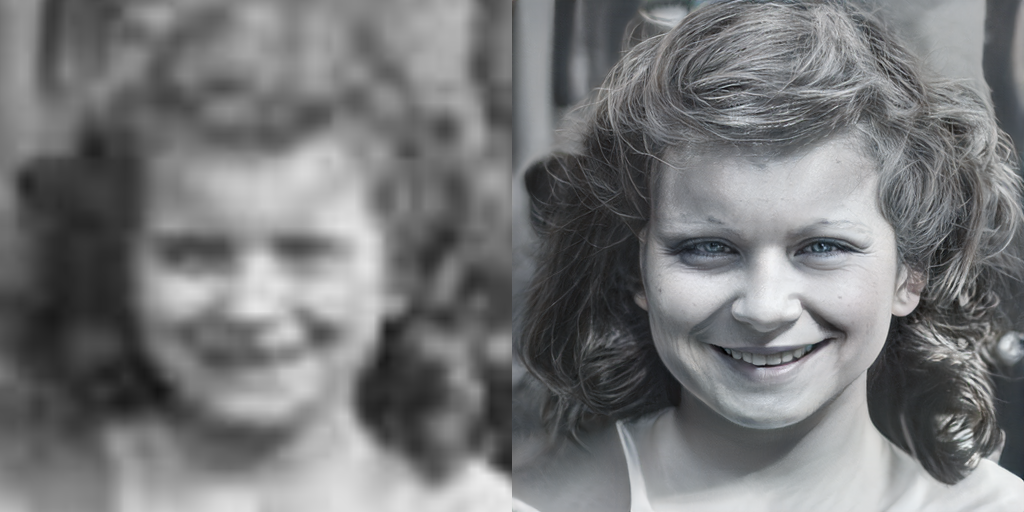

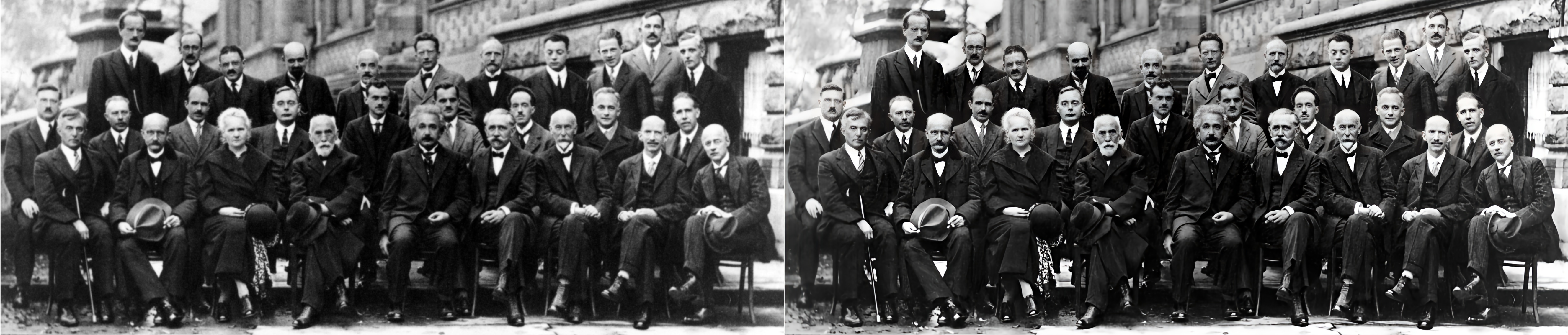

python face_enhancement.py --model GPEN-BFR-512 --size 512 --channel_multiplier 2 --narrow 1 --use_sr --use_cuda --indir examples/imgs --outdir examples/outs-BFR

- Colorize faces:

python face_colorization.py

- Complete faces:

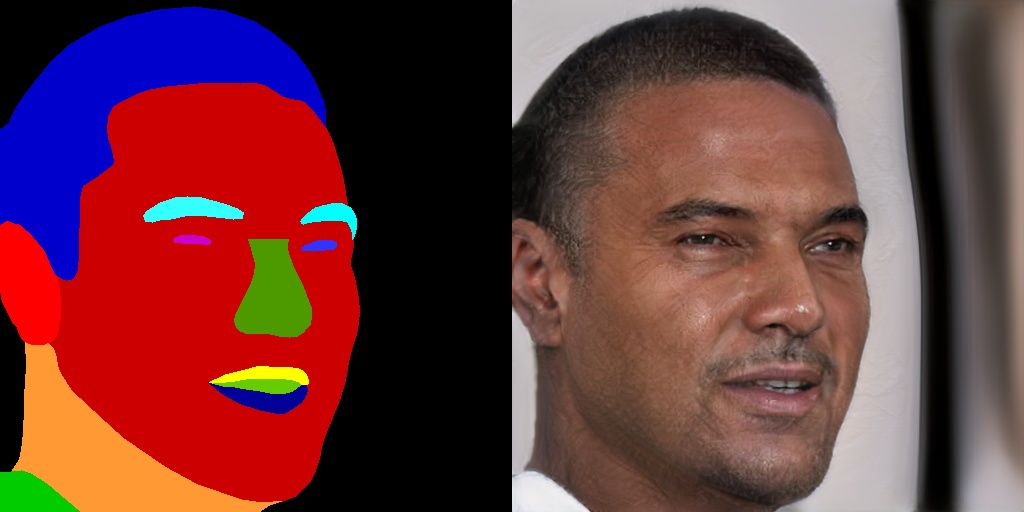

python face_inpainting.py

- Synthesize faces:

python segmentation2face.py

- Train GPEN for BFR with 4 GPUs:

CUDA_VISIBLE_DEVICES='0,1,2,3' python -m torch.distributed.launch --nproc_per_node=4 --master_port=4321 train_simple.py --size 1024 --channel_multiplier 2 --narrow 1 --ckpt weights --sample results --batch 2 --path your_path_of_cropped+aligned_hq_faces

Here, --path should point to a folder of cropped and aligned high-quality face images (e.g., FFHQ).
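torch.distributed.launch starts one training process per GPU (--nproc_per_node=4 above). Assuming that --batch is the per-GPU batch size, as in stylegan2-pytorch from which GPEN borrows code, each optimization step sees 2 × 4 = 8 images. The sketch below shows, using only the standard library, how a launched process can recover its local rank and the world size: the launcher passes a local-rank argument to each process and sets the WORLD_SIZE environment variable. The parse_launch_context helper is hypothetical and is not part of train_simple.py.

```python
import argparse
import os


def parse_launch_context(argv=None, env=None):
    """Return (local_rank, world_size, per_gpu_batch, effective_batch).

    Hypothetical helper: the --batch name mirrors the training command,
    but this is not GPEN's train_simple.py.
    """
    env = os.environ if env is None else env
    parser = argparse.ArgumentParser()
    # Older launchers pass --local_rank, PyTorch 2.x passes --local-rank; accept both.
    parser.add_argument("--local-rank", "--local_rank", dest="local_rank", type=int, default=0)
    # Assumed to be the per-GPU batch size, as in stylegan2-pytorch.
    parser.add_argument("--batch", type=int, default=2)
    # Ignore the many other training flags this sketch does not model.
    args, _ = parser.parse_known_args(argv)
    world_size = int(env.get("WORLD_SIZE", "1"))
    return args.local_rank, world_size, args.batch, args.batch * world_size


# Simulate the 4th of 4 processes started by the command above.
print(parse_launch_context(["--local_rank=3", "--batch", "2"], env={"WORLD_SIZE": "4"}))  # (3, 4, 2, 8)
```

If you change the number of GPUs, adjusting --batch keeps the effective batch size (and thus the training dynamics) comparable, under the per-GPU assumption above.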

When testing your own model, set --key g_ema.
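Checkpoints written during stylegan2-pytorch-style training (from which GPEN borrows code) bundle several state dicts in one dictionary, such as the generator, the discriminator, and g_ema, an exponential moving average of the generator weights that is usually preferred for inference. The --key flag picks one of these entries. A minimal pure-Python sketch of that selection, with a plain dict standing in for the object that torch.load would return; the select_weights helper and the dummy contents are hypothetical, and released inference models are assumed to be bare state dicts that need no key.

```python
from typing import Any, Mapping, Optional


def select_weights(checkpoint: Mapping[str, Any], key: Optional[str] = None) -> Any:
    """Return checkpoint[key] when a key is given, else the checkpoint itself."""
    if key is None:
        # Assumed layout of released models: the file already is the state dict.
        return checkpoint
    if key not in checkpoint:
        raise KeyError(f"checkpoint has no entry {key!r}; available: {sorted(checkpoint)}")
    return checkpoint[key]


# Dummy stand-in for a self-trained checkpoint loaded with torch.load(..., map_location="cpu").
ckpt = {"g": {"w": 0.1}, "d": {"w": 0.2}, "g_ema": {"w": 0.15}}
print(select_weights(ckpt, "g_ema"))  # {'w': 0.15}
```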

If our work is useful for your research, please consider citing:

@inproceedings{Yang2021GPEN,

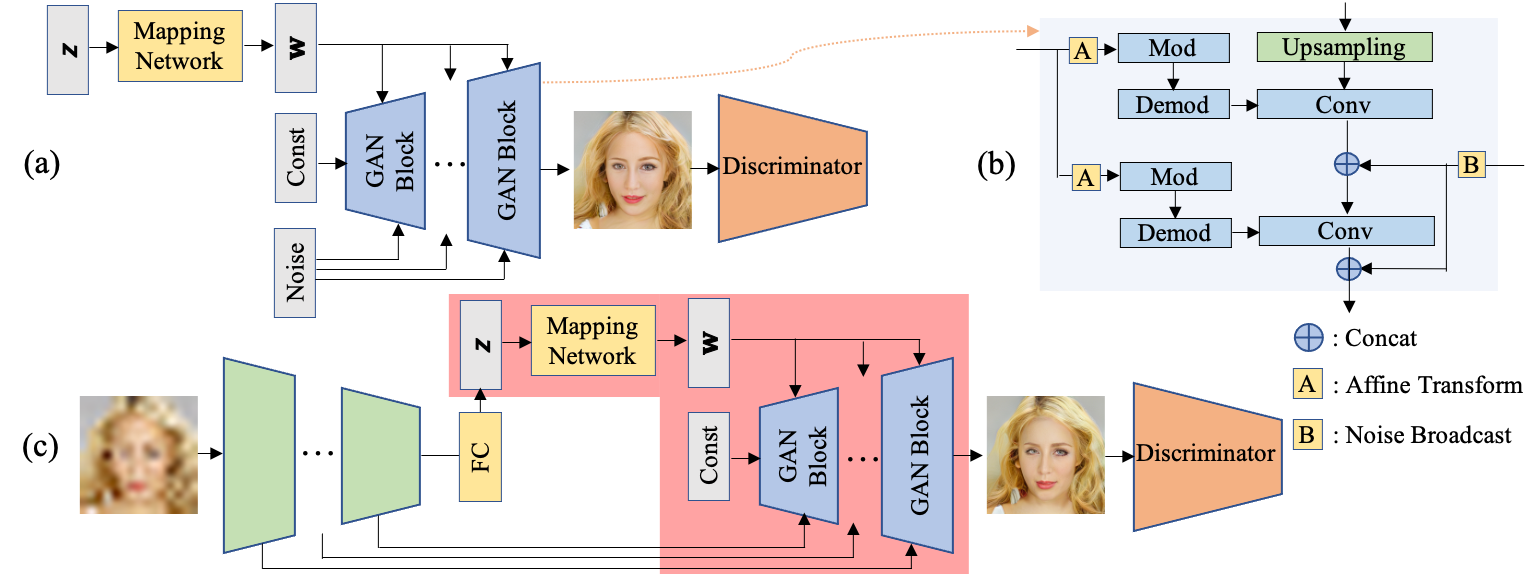

title={GAN Prior Embedded Network for Blind Face Restoration in the Wild},

author={Tao Yang, Peiran Ren, Xuansong Xie, and Lei Zhang},

booktitle={IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},

year={2021}

}

© Alibaba, 2021. For academic and non-commercial use only.

We borrow some code from Pytorch_Retinaface, stylegan2-pytorch, Real-ESRGAN, and GFPGAN.

If you have any questions or suggestions about this paper, feel free to reach me at yangtao9009@gmail.com.