A hands-on RAG (Retrieval-Augmented Generation) application that transforms PDF documents into queryable knowledge bases.

Perfect for learning RAG, LangChain, and LLM-powered document interactions.

This is a complete RAG application that demonstrates how to build an intelligent system that:

- Uploads and processes PDF documents

- Extracts and chunks text intelligently

- Creates semantic embeddings for document search

- Answers questions using retrieved context from PDFs

- Returns human-friendly answers based on document content

Perfect for students learning:

- 🤖 Retrieval-Augmented Generation (RAG)

- 🔗 LangChain framework

- 💬 LLM prompt engineering

- 📄 PDF processing and text extraction

- 🔍 Vector embeddings and similarity search

- 🌐 Building full-stack AI applications

| Feature | Description |
|---|---|
| 📄 PDF Processing | Automatic text extraction and intelligent chunking |
| 🧠 Semantic Search | Powered by OpenAI embeddings and FAISS vector store |
| 💬 Context-Aware Answers | Uses GPT models with retrieved context from documents |
| 🎨 Dual Interface | Both Streamlit web UI and FastAPI REST API |
| 🔄 RAG Pipeline | Complete RAG implementation with LangChain |
| ⚡ Real-time Processing | Upload and query documents instantly |
| 🚀 Production Ready | Modular architecture, error handling, and best practices |

┌─────────────────┐
│   PDF Upload    │  User uploads a PDF document
└────────┬────────┘
         │
         ▼
┌─────────────────────────────────────┐
│       PDF Processing Pipeline       │
│  ┌───────────────────────────────┐  │
│  │ 1. Extract text from PDF      │  │
│  └──────────────┬────────────────┘  │
│                 │                   │
│  ┌──────────────▼────────────────┐  │
│  │ 2. Split into chunks          │  │
│  └──────────────┬────────────────┘  │
│                 │                   │
│  ┌──────────────▼────────────────┐  │
│  │ 3. Create embeddings          │  │
│  │    and build vector store     │  │
│  └──────────────┬────────────────┘  │
└─────────────────┼───────────────────┘
                  │
                  ▼
         ┌────────────────┐
         │   User Query   │  "What is the main topic?"
         └────────┬───────┘
                  │
                  ▼
┌─────────────────────────────────────┐
│       LangChain RAG Pipeline        │
│  ┌───────────────────────────────┐  │
│  │ 1. Embed query and search     │  │
│  │    for relevant chunks        │  │
│  └──────────────┬────────────────┘  │
│                 │                   │
│  ┌──────────────▼────────────────┐  │
│  │ 2. Retrieve top-k chunks      │  │
│  └──────────────┬────────────────┘  │
│                 │                   │
│  ┌──────────────▼────────────────┐  │
│  │ 3. LLM generates answer       │  │
│  │    using retrieved context    │  │
│  └──────────────┬────────────────┘  │
└─────────────────┼───────────────────┘
                  │
                  ▼
         ┌────────────────┐
         │ User-friendly  │
         │     Answer     │
         └────────────────┘
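
The "embed query and search" and "retrieve top-k chunks" steps in the diagram can be illustrated without any vector library. The following is a minimal pure-Python sketch of cosine-similarity ranking; it is a stand-in for what a FAISS index does far more efficiently, not the FAISS API. The names `cosine_similarity` and `top_k` and the toy 3-dimensional vectors are assumptions made for illustration.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_k(query_vec: list[float], chunk_vecs: list[list[float]], k: int = 5):
    """Return (index, score) pairs for the k chunks most similar to the query."""
    scored = [(i, cosine_similarity(query_vec, v)) for i, v in enumerate(chunk_vecs)]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy 3-dimensional "embeddings"; real embedding vectors have hundreds of dimensions.
chunk_vectors = [
    [1.0, 0.0, 0.0],
    [0.9, 0.1, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 1.0],
]
query_vector = [1.0, 0.05, 0.0]

results = top_k(query_vector, chunk_vectors, k=2)
print([index for index, _ in results])  # prints [0, 1]
```

The indices returned by the search are what map back to chunk texts, which are then handed to the LLM as context.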

| Category | Technology | Purpose |
|---|---|---|
| 🤖 AI/ML | LangChain | RAG pipeline orchestration |
| | OpenAI GPT-4o-mini | LLM for answer generation |
| | Sentence Transformers | Local embeddings (all-MiniLM-L6-v2) |
| 🌐 Backend | FastAPI | REST API server |
| 💻 Frontend | Streamlit | Interactive web interface |
| 🗄️ Vector Store | FAISS | Efficient similarity search |
| 📄 PDF Processing | PyPDF | PDF text extraction |
| ⚙️ Tools | uv | Fast Python package manager |
| | Python 3.10+ | Programming language |

rag-pdf-python/
├── backend/
│   ├── __init__.py
│   └── main.py              # 🚀 FastAPI REST endpoints
│
├── frontend/
│   ├── __init__.py
│   └── app.py               # 🎨 Streamlit web interface
│
├── shared/
│   ├── __init__.py
│   ├── config.py            # ⚙️ Configuration & environment variables
│   ├── pdf_processor.py     # 📄 PDF extraction & chunking
│   ├── vector_store.py      # 🔍 FAISS vector store management
│   └── rag.py               # 🧠 RAG query engine
│
├── uploads/                 # 📁 Uploaded PDFs directory (auto-created)
├── pyproject.toml           # 📋 Dependencies & project config
├── uv.lock                  # 🔒 Dependency lock file
└── README.md

- Python 3.10+ installed

- OpenAI API key

- uv package manager (we'll install it if needed)

curl -LsSf https://astral.sh/uv/install.sh | sh

git clone https://github.com/JaimeLucena/rag-pdf-python.git
cd rag-pdf-python

uv sync

This will create a virtual environment and install all required packages.

Create a .env file in the root directory:

OPENAI_API_KEY=sk-your-api-key-here

OPENAI_MODEL=gpt-4-turbo-preview

EMBEDDING_MODEL=text-embedding-3-small

CHUNK_SIZE=1000

CHUNK_OVERLAP=200

💡 Tip: Never commit your .env file! It's already in .gitignore.
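
As an illustration of how these values might be consumed, here is a minimal standard-library sketch that reads them from the environment and validates them. `Settings` and `load_settings` are hypothetical names and not necessarily what `shared/config.py` contains; the sketch also assumes the `.env` file has already been loaded into the process environment by some other means (for example a dotenv loader).

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class Settings:
    openai_api_key: str
    openai_model: str
    embedding_model: str
    chunk_size: int
    chunk_overlap: int


def load_settings(env=None) -> Settings:
    """Read settings from a mapping (os.environ by default), using the documented defaults."""
    env = os.environ if env is None else env
    api_key = env.get("OPENAI_API_KEY", "")
    if not api_key:
        raise RuntimeError("OPENAI_API_KEY is required")
    settings = Settings(
        openai_api_key=api_key,
        openai_model=env.get("OPENAI_MODEL", "gpt-4-turbo-preview"),
        embedding_model=env.get("EMBEDDING_MODEL", "text-embedding-3-small"),
        chunk_size=int(env.get("CHUNK_SIZE", "1000")),
        chunk_overlap=int(env.get("CHUNK_OVERLAP", "200")),
    )
    # An overlap as large as the chunk itself would never advance through the text.
    if settings.chunk_overlap >= settings.chunk_size:
        raise ValueError("CHUNK_OVERLAP must be smaller than CHUNK_SIZE")
    return settings


# Passing a plain dict keeps the example independent of the real environment.
settings = load_settings({"OPENAI_API_KEY": "sk-your-api-key-here", "CHUNK_SIZE": "500"})
print(settings.openai_model, settings.chunk_size, settings.chunk_overlap)
```

Failing fast on a missing key or an inconsistent chunk configuration surfaces mistakes at startup rather than on the first upload.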

Launch the FastAPI backend server:

uv run uvicorn backend.main:app --host localhost --port 8000 --reload

The API will be available at http://localhost:8000

- 📚 Interactive docs: http://localhost:8000/docs

- 📖 ReDoc: http://localhost:8000/redoc

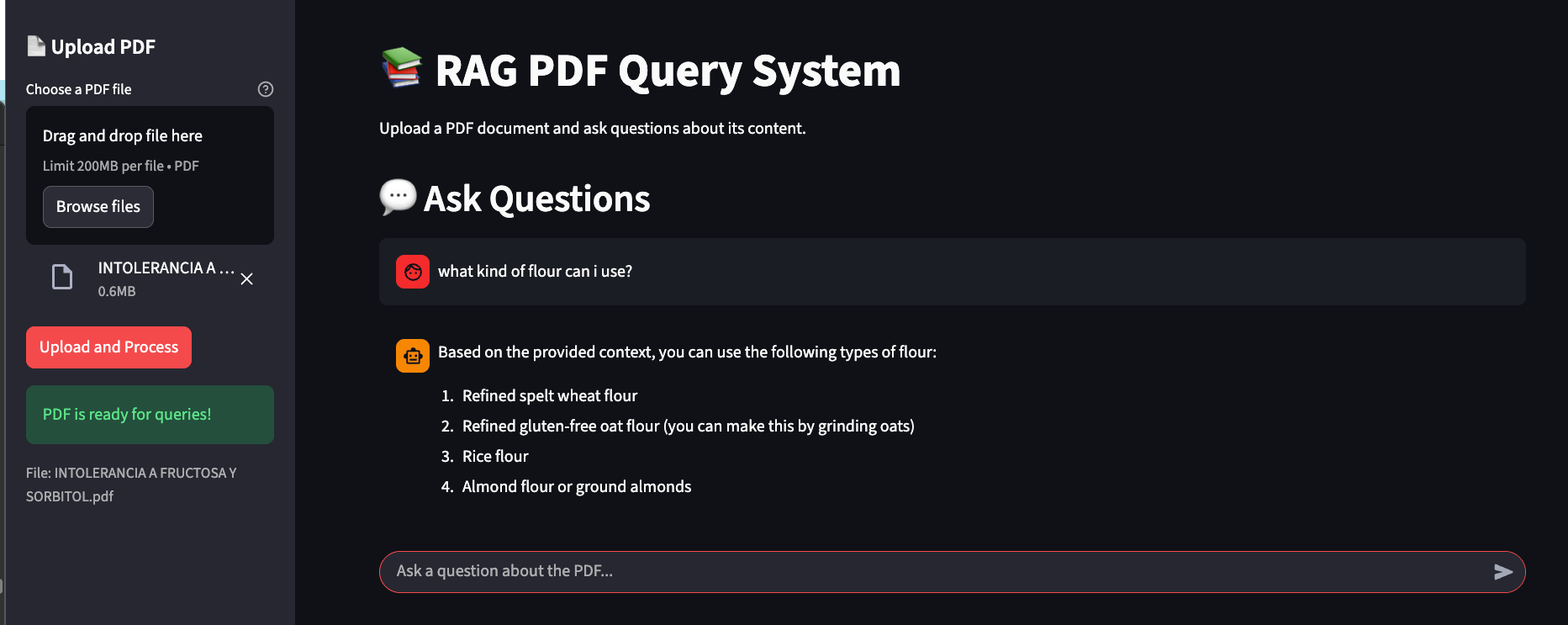

In a new terminal, launch the Streamlit web interface:

uv run streamlit run frontend/app.py

The web UI will automatically open in your browser at http://localhost:8501

Features:

- 💬 Chat interface for natural language queries

- 📄 Drag & drop PDF upload

- 🎨 Clean, modern UI

- 📝 Chat history

- ✅ Real-time processing status

You can also interact with the API directly using HTTP requests:

curl -X POST "http://localhost:8000/upload" \
  -H "Content-Type: multipart/form-data" \
  -F "file=@document.pdf"

Response:

{
  "message": "PDF uploaded and processed successfully",
  "chunks": 42,
  "filename": "document.pdf"
}

curl -X POST "http://localhost:8000/query" \
  -H "Content-Type: application/json" \
  -d '{"question": "What is the main topic of this document?"}'

Response:

{
  "answer": "The main topic of this document is..."
}

curl http://localhost:8000/health

Response:

{
  "status": "healthy"
}

Try asking these questions after uploading a PDF:

- "What is this document about?"
- "Summarize the main points"
- "What are the key findings?"
- "List the main topics"
- "What is mentioned about [topic]?"
- "Who are the authors?"
- "What are the conclusions?"
- "What methodology was used?"
- "Explain the process described in the document"
- "What are the recommendations?"
- "What data or statistics are mentioned?"
- "What are the limitations discussed?"

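Questions like these can also be sent to the /query endpoint from Python. The sketch below uses only the standard library and mirrors the curl call shown earlier (same URL, JSON body, and `answer` field in the response); `build_query_request` and `ask` are hypothetical helper names, and a running backend with an uploaded PDF is assumed for `ask` to succeed.

```python
import json
import urllib.request

API_URL = "http://localhost:8000"  # address used in the curl examples


def build_query_request(question: str, base_url: str = API_URL) -> urllib.request.Request:
    """Build a POST /query request carrying the question as a JSON body."""
    body = json.dumps({"question": question}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/query",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(question: str) -> str:
    """Send the question to a running backend and return the "answer" field."""
    with urllib.request.urlopen(build_query_request(question), timeout=60) as resp:
        return json.loads(resp.read().decode("utf-8"))["answer"]


# Usage, once the backend is running and a PDF has been uploaded:
#   print(ask("What is this document about?"))
```

Separating request construction from sending keeps the payload easy to inspect or unit-test without a live server.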
1. PDF Upload → User uploads a PDF document
   - document.pdf uploaded
2. Text Extraction → Extract all text from PDF pages
   - Extracted 15,000 characters from 10 pages
3. Chunking → Split text into overlapping chunks
   - Created 42 chunks of ~1000 characters each
4. Embedding → Create vector embeddings for each chunk
   - Generated 42 embeddings using sentence transformers
5. Vector Store → Build FAISS index for similarity search
   - FAISS index created with 42 vectors
6. Query Processing → User asks a question
   - "What is the main topic?"
7. Retrieval → Find most relevant chunks
   - Retrieved top 5 chunks with highest similarity
8. Generation → LLM generates answer using context
   - "Based on the document, the main topic is..."

- shared/pdf_processor.py: PDF text extraction and chunking
  - Extracts text from PDF pages
  - Intelligent chunking with sentence boundaries
  - Configurable chunk size and overlap
- shared/vector_store.py: Vector embeddings and search
  - FAISS index for fast similarity search
  - Sentence transformer embeddings
  - Top-k retrieval
- shared/rag.py: RAG query engine
  - Combines retrieval and generation
  - Context-aware prompt engineering
  - OpenAI GPT integration
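
To make "combines retrieval and generation" concrete, the sketch below shows one common way to assemble retrieved chunks and the user's question into a single prompt for the LLM. It is an illustrative assumption, not the prompt used in `shared/rag.py`; `build_prompt` and the instruction wording are hypothetical.

```python
def build_prompt(question: str, chunks: list[str]) -> str:
    """Join retrieved chunks into a numbered context block followed by the question."""
    context = "\n\n".join(
        f"[{i}] {chunk.strip()}" for i, chunk in enumerate(chunks, start=1)
    )
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say so.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\n"
        "Answer:"
    )


# Chunks as they might come back from the vector store (made-up text).
retrieved = [
    "FAISS builds an index over chunk embeddings.",
    "The LLM answers using retrieved context.",
]
prompt = build_prompt("How are answers generated?", retrieved)
print(prompt)
```

Numbering the chunks makes it easier to trace which passage an answer relied on, and the explicit "say so" instruction is a simple guard against answers that go beyond the document.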

You can customize the application behavior through environment variables:

| Variable | Description | Default |
|---|---|---|
| OPENAI_API_KEY | Your OpenAI API key | Required |
| OPENAI_MODEL | GPT model for answers | gpt-4-turbo-preview |
| EMBEDDING_MODEL | Model for embeddings | text-embedding-3-small |
| CHUNK_SIZE | Text chunk size | 1000 |
| CHUNK_OVERLAP | Overlap between chunks | 200 |
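
CHUNK_SIZE and CHUNK_OVERLAP interact: each new chunk starts CHUNK_SIZE minus CHUNK_OVERLAP characters after the previous one, so neighbouring chunks share some text. The following is a minimal character-window sketch of that idea; it deliberately ignores sentence boundaries, which the project's chunker is described as respecting, and `chunk_text` is a hypothetical name.

```python
def chunk_text(text: str, chunk_size: int = 1000, overlap: int = 200) -> list[str]:
    """Split text into windows of chunk_size characters that share `overlap` characters."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if not 0 <= overlap < chunk_size:
        raise ValueError("overlap must satisfy 0 <= overlap < chunk_size")
    step = chunk_size - overlap  # distance between the starts of consecutive chunks
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):
            break  # this window already reached the end of the text
    return chunks


sample = "abcdefghijklmnopqrstuvwxyz"
pieces = chunk_text(sample, chunk_size=10, overlap=3)
for piece in pieces:
    print(piece)
# prints:
# abcdefghij
# hijklmnopq
# opqrstuvwx
# vwxyz
```

A larger overlap reduces the chance that a sentence relevant to a query is split across two chunks, at the cost of more chunks to embed and store.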

By exploring this project, you'll learn:

✅ RAG Fundamentals

- How to combine retrieval (vector search) with generation (LLM)

- Building end-to-end RAG pipelines

- Context-aware answer generation

✅ LangChain Patterns

- Creating custom chains

- Prompt engineering

- LLM integration

✅ Vector Embeddings

- Creating document embeddings

- Similarity search with FAISS

- Retrieval strategies

✅ PDF Processing

- Text extraction from PDFs

- Intelligent text chunking

- Handling different document formats

✅ Full-Stack AI Apps

- Building APIs for AI services

- Creating interactive UIs

- Managing state and sessions

✅ Best Practices

- Modular code organization

- Environment configuration

- Error handling

- Type hints and documentation

uv run pytest

uv run ruff format .
uv run ruff check .

Q: Why FAISS instead of other vector databases?

A: FAISS is perfect for learning - it's fast, in-memory, and requires no setup. For production with persistence, consider Pinecone, Weaviate, or Qdrant.

Q: Can I use a different LLM?

A: Yes! LangChain supports many providers. Just change the LLM initialization in shared/rag.py and update your API key.

Q: How do I persist the vector store?

A: The VectorStore class has save() and load() methods. You can modify the backend to persist embeddings between sessions.
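
The signatures of the project's save() and load() are not shown here, so the following is only a sketch of the general idea using a hypothetical `TinyVectorStore` that writes chunk texts and vectors to a JSON file. A FAISS-backed store would persist its index in its own format instead.

```python
import json
import os
import tempfile


class TinyVectorStore:
    """Hypothetical stand-in: holds chunk texts alongside their embedding vectors."""

    def __init__(self):
        self.texts = []
        self.vectors = []

    def add(self, text, vector):
        self.texts.append(text)
        self.vectors.append(list(vector))

    def save(self, path):
        """Write texts and vectors to a JSON file."""
        with open(path, "w", encoding="utf-8") as fh:
            json.dump({"texts": self.texts, "vectors": self.vectors}, fh)

    @classmethod
    def load(cls, path):
        """Rebuild a store from a file written by save()."""
        with open(path, encoding="utf-8") as fh:
            data = json.load(fh)
        store = cls()
        for text, vector in zip(data["texts"], data["vectors"]):
            store.add(text, vector)
        return store


store = TinyVectorStore()
store.add("first chunk", [0.1, 0.2])
store.add("second chunk", [0.3, 0.4])

# Save to a temporary directory, then restore as a later session would.
path = os.path.join(tempfile.mkdtemp(), "store.json")
store.save(path)
restored = TinyVectorStore.load(path)
print(restored.texts)  # prints ['first chunk', 'second chunk']
```

In a backend, the save step would typically run after a PDF is processed and the load step at startup, so uploads survive a restart.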

Q: Is this production-ready?

A: This is a learning project. For production, add authentication, rate limiting, logging, monitoring, and persistent vector storage.

Q: What PDF formats are supported?

A: Currently supports standard PDFs with extractable text. Scanned PDFs (images) would require OCR preprocessing.

- LangChain Documentation

- FastAPI Documentation

- Streamlit Documentation

- OpenAI API Reference

- FAISS Documentation

- RAG Paper (Original)

MIT License - see LICENSE file for details

Built with ❤️ for students learning AI and generative models.

Happy Learning! 🚀
