Towards Highly Realistic Artistic Style Transfer via Stable Diffusion with Step-aware and Layer-aware Prompt Inversion (IJCAI2024)

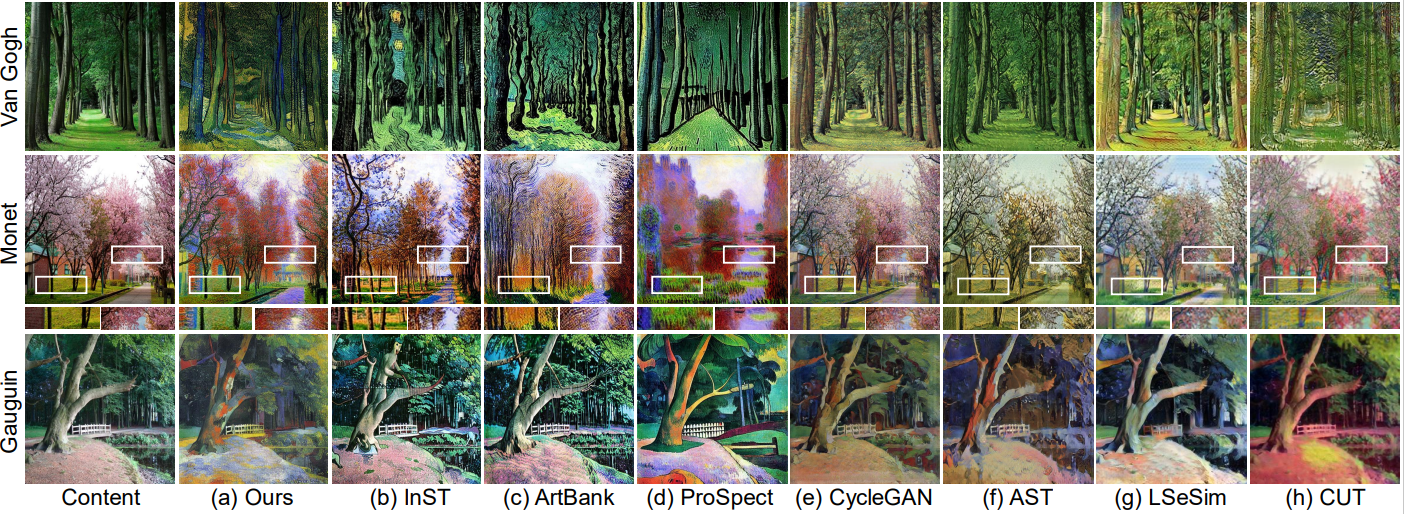

Artistic style transfer aims to transfer a learned artistic style onto an arbitrary content image, generating artistic stylized images. Existing generative adversarial network-based methods fail to generate highly realistic stylized images and always introduce obvious artifacts and disharmonious patterns. Recently, large-scale pre-trained diffusion models have opened up a new way of generating highly realistic artistic stylized images. However, diffusion model-based methods generally fail to preserve the content structure of input content images well, introducing undesired content structure and style patterns. To address these problems, we propose a novel pre-trained diffusion-based artistic style transfer method, called LSAST, which can generate highly realistic artistic stylized images while preserving the content structure of input content images well, without introducing obvious artifacts or disharmonious style patterns. Specifically, we introduce a Step-aware and Layer-aware Prompt Space, a set of learnable prompts that can learn the style information from a collection of artworks and dynamically adjust the input images' content structure and style pattern. To train our prompt space, we propose a novel inversion method, called Step-aware and Layer-aware Prompt Inversion, which allows the prompt space to learn the style information of the artwork collection. In addition, we inject a pre-trained conditional branch of ControlNet into LSAST, which further improves our framework's ability to maintain content structure. Extensive experiments demonstrate that our proposed method can generate more realistic artistic stylized images than state-of-the-art artistic style transfer methods.

For details, see the paper.

For packages, see environment.yaml.

conda env create -f environment.yaml

conda activate ldm

Clone the repo

git clone https://github.com/Jamie-Cheung/LSAST

Train LSAST:

First, download the pretrained v1-5-pruned.ckpt (Stable Diffusion 1.5) from https://huggingface.co/runwayml/stable-diffusion-v1-5/tree/main/ and save it as ./models/sd1.5/v1-5-pruned.ckpt.

You can download the Artworks collection from https://drive.google.com/drive/folders/1_2jykbjVCF6SqJisvIt5-4fAFzVAj-F0?usp=drive_link

python main.py --base configs/stable-diffusion/v1-finetune.yaml -t --actual_resume ./models/sd1.5/v1-5-pruned.ckpt -n <run_name> --gpus 0, --data_root /path/to/directory/with/images

For example:

python main.py --base configs/stable-diffusion/v1-finetune.yaml -t --actual_resume ./models/sd1.5/v1-5-pruned.ckpt -n test --gpus 0, --data_root ./Artworks/paul-gauguin

See configs/stable-diffusion/v1-finetune.yaml for more options.
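The training invocation above can also be assembled programmatically, for instance when launching one run per artist folder. The following is a minimal sketch and not part of the repository: `build_train_command` is a hypothetical helper, and it uses only the flags and default paths shown above.

```python
import shlex


def build_train_command(run_name, data_root,
                        config="configs/stable-diffusion/v1-finetune.yaml",
                        checkpoint="./models/sd1.5/v1-5-pruned.ckpt",
                        gpus="0,"):
    """Return the main.py training command as an argument list.

    Hypothetical helper: it mirrors the flags of the command above
    and adds no options of its own.
    """
    return [
        "python", "main.py",
        "--base", config,
        "-t",
        "--actual_resume", checkpoint,
        "-n", run_name,
        "--gpus", gpus,
        "--data_root", data_root,
    ]


if __name__ == "__main__":
    cmd = build_train_command("test", "./Artworks/paul-gauguin")
    # Print the command so it can be inspected or pasted into a shell.
    print(shlex.join(cmd))
    # To launch it from the repository root (assumes the ldm env is active):
    # import subprocess; subprocess.run(cmd, check=True)
```

Keeping the command as a list rather than a single string avoids quoting problems if a data directory contains spaces.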

Download the pretrained ControlNet from https://huggingface.co/lllyasviel/ControlNet-v1-1/tree/main and save control_v11p_sd15_canny.pth as ./models/controlnet/control_v11p_sd15_canny.pth.
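Before going further, it can help to confirm that the two checkpoints named so far sit at the paths given above. The following is a minimal sketch under that assumption. `missing_checkpoints` is a hypothetical helper and not part of the repository, and it checks only for file presence, not file integrity.

```python
from pathlib import Path

# Paths taken from the download instructions above.
EXPECTED_CHECKPOINTS = [
    "models/sd1.5/v1-5-pruned.ckpt",
    "models/controlnet/control_v11p_sd15_canny.pth",
]


def missing_checkpoints(repo_root=".", expected=EXPECTED_CHECKPOINTS):
    """Return the expected checkpoint paths that are not files under repo_root."""
    root = Path(repo_root)
    return [rel for rel in expected if not (root / rel).is_file()]


if __name__ == "__main__":
    missing = missing_checkpoints()
    if missing:
        print("Missing checkpoints:")
        for rel in missing:
            print("  " + rel)
    else:
        print("All expected checkpoints found.")
```

Run it from the repository root so that the relative paths resolve as in the instructions.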

Download the pretrained [LSAST](https://drive.google.com/drive/folders/1_2jykbjVCF6SqJisvIt5-4fAFzVAj-F0?usp=drive_link) model.

You only need to change line 184 to point to the pretrained model. Then, to generate new images, run test.py:

python test.py

@article{zhang2024towards,
  title={Towards Highly Realistic Artistic Style Transfer via Stable Diffusion with Step-aware and Layer-aware Prompt},
  author={Zhang, Zhanjie and Zhang, Quanwei and Lin, Huaizhong and Xing, Wei and Mo, Juncheng and Huang, Shuaicheng and Xie, Jinheng and Li, Guangyuan and Luan, Junsheng and Zhao, Lei and others},
  journal={arXiv preprint arXiv:2404.11474},
  year={2024}
}

Please feel free to open an issue or contact us personally if you have questions, need help, or need explanations. Write to one of the following email addresses:

cszzj@zju.edu.cn (preferred) or my co-contributing author, cszqw@zju.edu.cn