This project is implemented with the Gymnasium framework, using the Atari Freeway environment: https://gymnasium.farama.org/environments/atari/freeway/

The final selected model is v_20.2.


Clone the Repository:

    git clone https://github.com/JanMuehlnikel/Atari-Freeway-Reinforcement-Learning
    cd Atari-Freeway-Reinforcement-Learning

Create and Activate a New Conda Environment:

    conda create --name FreewayEnv python=3.10.14
    conda activate FreewayEnv


Install the requirements with pip in the new Conda environment:

    pip install -r requirements.txt
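To check that the setup works, the sketch below loads Freeway and plays one episode with the always-move-up baseline. It assumes that `requirements.txt` installs Gymnasium with Atari support (`ale-py` plus the ROMs) and that the environment ID is `ALE/Freeway-v5`, as on the Gymnasium page linked at the top. The function names are illustrative and not taken from the project.

```python
UP = 1  # Freeway's action set: 0 = NOOP, 1 = UP, 2 = DOWN


def baseline_policy(observation) -> int:
    """Baseline: ignore the observation and always move up."""
    return UP


def run_baseline_episode(seed: int = 0) -> float:
    """Play one Freeway episode with the baseline and return the total reward."""
    # Imported lazily so this module can be loaded without the Atari extras.
    import gymnasium as gym

    try:
        import ale_py

        gym.register_envs(ale_py)  # required on Gymnasium >= 1.0
    except (ImportError, AttributeError):
        pass  # older Gymnasium versions find ALE environments without this call

    env = gym.make("ALE/Freeway-v5")
    try:
        # Sanity check: action index 1 should mean "UP" in this environment.
        assert env.unwrapped.get_action_meanings()[UP] == "UP"
        observation, info = env.reset(seed=seed)
        total_reward = 0.0
        terminated = truncated = False
        while not (terminated or truncated):
            action = baseline_policy(observation)
            observation, reward, terminated, truncated, info = env.step(action)
            total_reward += float(reward)
    finally:
        env.close()
    return total_reward
```

Calling `run_baseline_episode(seed=0)` returns the score of a single baseline episode, which is a useful reference point for any trained agent.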

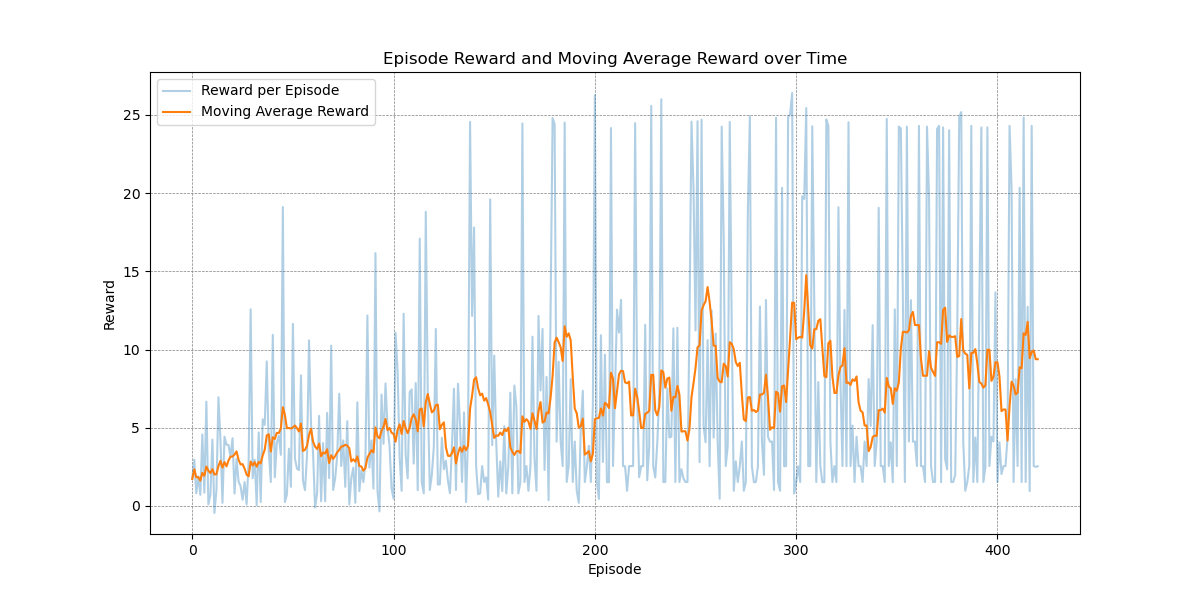

This project implements a reinforcement learning agent based on a Deep Q-Network (DQN). Despite our efforts, the agent only learned the baseline strategy of always moving up.
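DQN trains Q(s, a) by regressing it toward a bootstrapped target: y = r + γ · max over a' of Q_target(s', a') for non-terminal transitions, and y = r when the episode has ended. The README does not show the project's training code, so the following is a minimal, framework-free sketch of that standard target; the function name, argument layout, and `gamma` default are assumptions.

```python
from typing import Sequence


def dqn_targets(
    rewards: Sequence[float],
    next_q_values: Sequence[Sequence[float]],
    dones: Sequence[bool],
    gamma: float = 0.99,
) -> list[float]:
    """Compute DQN regression targets for a batch of transitions.

    next_q_values[i] holds the (target-network) Q-values of every action
    in the next state s'_i; dones[i] marks whether s'_i ended the episode.
    """
    if not (len(rewards) == len(next_q_values) == len(dones)):
        raise ValueError("batch fields must have the same length")
    targets = []
    for reward, q_next, done in zip(rewards, next_q_values, dones):
        # Bootstrap from the best next action, except at the end of an episode.
        bootstrap = 0.0 if done else gamma * max(q_next)
        targets.append(reward + bootstrap)
    return targets
```

For example, `dqn_targets([1.0, 0.0], [[0.5, 2.0, 1.0], [3.0, 0.0, 0.0]], [False, True], gamma=0.5)` returns `[2.0, 0.0]`: the first transition adds half of its best next Q-value, while the terminal one keeps only its reward.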


- Algorithm: Deep Q-Network (DQN)

- Baseline Method: Move up

- Reward Structure: The reward for moving may have been too high and the penalty for crashing too low, which could make ignoring traffic and always moving up the most rewarding strategy.

- Network Complexity: The neural network used might have been too simple to accurately approximate the Q-values, limiting the agent's ability to learn more complex behaviors.

- Training Environment: Training ran on a CPU for a limited period, which may have constrained how much the agent could learn.
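One way to make the reward trade-off explicit is a shaped reward with tunable weights. The README does not state the values that were used, so the weights below are hypothetical placeholders, and the `collided` flag assumes some collision signal is available (how to extract it from Freeway is outside this sketch).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RewardWeights:
    """Hypothetical shaping weights; the project's actual values are not documented."""

    crossing: float = 1.0    # scales Freeway's native reward (+1 per crossing)
    step_up: float = 0.01    # small bonus for each UP action
    collision: float = -0.5  # penalty when the chicken is hit by a car


def shaped_reward(
    env_reward: float,
    moved_up: bool,
    collided: bool,
    weights: RewardWeights = RewardWeights(),
) -> float:
    """Combine the environment reward with movement and collision terms."""
    reward = weights.crossing * env_reward
    if moved_up:
        reward += weights.step_up
    if collided:
        reward += weights.collision
    return reward
```

Keeping the weights in one frozen object makes it easy to compare configurations, for instance one where the collision penalty clearly dominates the per-step bonus, which should give the agent a reason to wait for gaps in traffic.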

The reward structure, the network complexity, and the training environment may each have contributed to the agent's limited learning. Possible improvements include:

- Adjusting Rewards: Modifying the reward structure to balance rewards for moving and penalties for crashing.

- Network Architecture: Using a more complex neural network to better approximate Q-values.

- Training Resources: Utilizing a GPU for training and extending the training period to improve learning efficiency.
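For a sense of scale when choosing a larger network, the sketch below computes the feature size and parameter count of the convolutional Q-network from Mnih et al. (2015), a common reference architecture for Atari DQN: 4 stacked 84×84 frames, three convolutions (32 filters 8×8 stride 4, 64 filters 4×4 stride 2, 64 filters 3×3 stride 1), a 512-unit hidden layer, and one output per action (3 for Freeway). It is not the network used in this project, which the README does not describe.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConvLayer:
    out_channels: int
    kernel: int
    stride: int


# Convolution stack of the Nature DQN (Mnih et al., 2015); no padding.
NATURE_DQN_CONVS = (
    ConvLayer(32, kernel=8, stride=4),
    ConvLayer(64, kernel=4, stride=2),
    ConvLayer(64, kernel=3, stride=1),
)


def conv_output_size(size: int, kernel: int, stride: int) -> int:
    """Spatial size after a square, unpadded ("valid") convolution."""
    return (size - kernel) // stride + 1


def q_network_size(
    in_size: int = 84,
    in_channels: int = 4,
    convs: tuple[ConvLayer, ...] = NATURE_DQN_CONVS,
    hidden: int = 512,
    n_actions: int = 3,
) -> tuple[int, int]:
    """Return (flattened conv features, total trainable parameters)."""
    size, channels, params = in_size, in_channels, 0
    for layer in convs:
        # Weights (k * k * C_in * C_out) plus one bias per output channel.
        params += layer.kernel ** 2 * channels * layer.out_channels + layer.out_channels
        size = conv_output_size(size, layer.kernel, layer.stride)
        channels = layer.out_channels
    flat = size * size * channels
    params += flat * hidden + hidden          # fully connected hidden layer
    params += hidden * n_actions + n_actions  # one Q-value per action
    return flat, params
```

`q_network_size()` returns `(3136, 1685667)`: the spatial size shrinks 84 → 20 → 9 → 7, giving 7 × 7 × 64 = 3136 features, and most of the roughly 1.7 million parameters sit in the first fully connected layer. Training a network of this size for millions of frames is where a GPU pays off.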

These adjustments could potentially enhance the agent's ability to learn beyond the baseline method.