This repository is the official implementation of the paper "PyEPO: A PyTorch-based End-to-End Predict-then-Optimize Library for Linear and Integer Programming".

Citation:

```bibtex
@article{tang2022pyepo,
  title={PyEPO: A PyTorch-based End-to-End Predict-then-Optimize Library for Linear and Integer Programming},
  author={Tang, Bo and Khalil, Elias B},
  journal={arXiv preprint arXiv:2206.14234},
  year={2022}
}
```

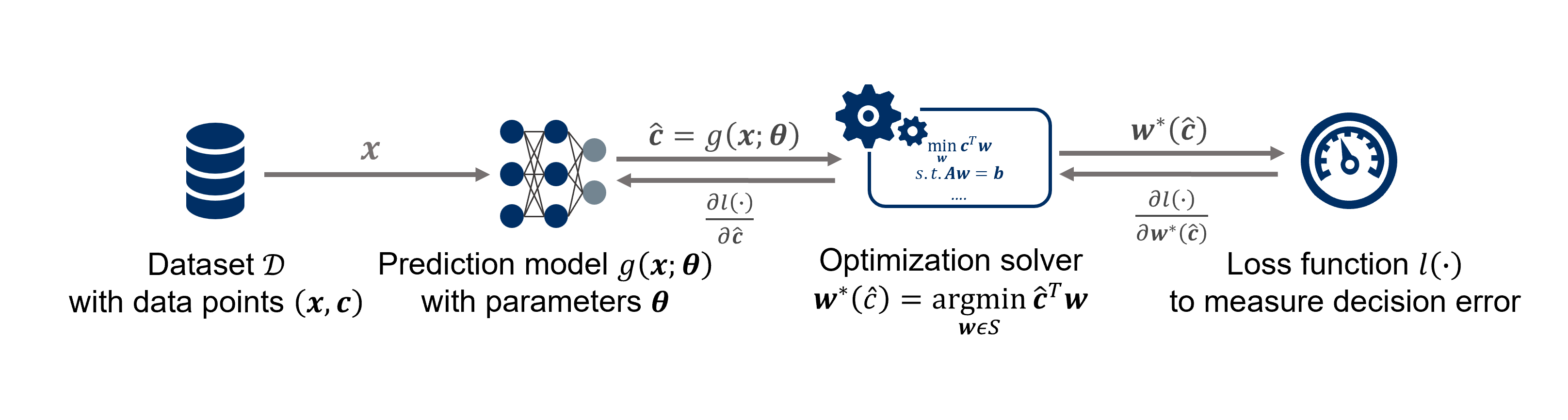

PyEPO (PyTorch-based End-to-End Predict-then-Optimize Tool) is Python-based, open-source software for modeling and solving predict-then-optimize problems with linear objective functions. Its core capability is to build optimization models with GurobiPy, Pyomo, or any other solver or algorithm, and then embed the optimization model into an artificial neural network for end-to-end training. For this purpose, PyEPO implements the following as PyTorch autograd modules: the smart predict-then-optimize+ (SPO+) loss [1], the differentiable black-box (DBB) optimizer [3], the differentiable perturbed optimizer (DPO) [4], the perturbed Fenchel-Young loss (PFYL) [4], noise-contrastive estimation (NCE) [5], and learning-to-rank (LTR) losses [6].
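The following sketch illustrates what the SPO+ loss [1] computes. It works by brute-force enumeration over a tiny explicit feasible set for a minimization problem min_w c^T w. This is not PyEPO's implementation; PyEPO calls a real solver and wraps the computation in an autograd module. The helpers `solve` and `spo_plus_loss` are hypothetical names. The sketch assumes the formula loss(ĉ, c) = max_w (c − 2ĉ)^T w + 2ĉ^T w*(c) − z*(c), with subgradient 2(w*(c) − w*(2ĉ − c)) with respect to the predicted cost ĉ.

```python
import itertools

import numpy as np


def solve(cost, feasible):
    """Brute-force oracle: minimizer and optimal value of cost @ w over the rows of `feasible`."""
    values = feasible @ cost
    idx = int(np.argmin(values))
    return feasible[idx], float(values[idx])


def spo_plus_loss(c_hat, c, feasible):
    """SPO+ loss and a subgradient with respect to c_hat (minimization problem)."""
    w_true, z_true = solve(c, feasible)  # w*(c) and z*(c)
    w_tilde, z_tilde = solve(2 * c_hat - c, feasible)  # w*(2 c_hat - c)
    # max_w (c - 2 c_hat)^T w  ==  -min_w (2 c_hat - c)^T w
    loss = -z_tilde + 2 * c_hat @ w_true - z_true
    grad = 2 * (w_true - w_tilde)
    return float(loss), grad


# toy feasible set: choose exactly one of three items
feasible = np.array(
    [w for w in itertools.product([0, 1], repeat=3) if sum(w) == 1], dtype=float
)
c = np.array([1.0, 2.0, 3.0])      # true costs
c_hat = np.array([3.0, 2.0, 1.0])  # predicted costs
loss, grad = spo_plus_loss(c_hat, c, feasible)
print(loss, grad)
```

For these vectors the SPO+ loss is 6. The actual decision error c^T w*(ĉ) − z*(c) is 2, since ĉ picks the third item while the first is optimal. This shows SPO+ acting as an upper-bounding surrogate, and it is zero when ĉ = c.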

The official PyEPO docs can be found at https://khalil-research.github.io/PyEPO.

- 01 Optimization Model: Build an optimization model

- 02 Optimization Dataset: Generate synthetic data and use optDataset

- 03 Training and Testing: Train and test different end-to-end predict-then-optimize approaches

- 04 2D Knapsack Solution Visualization: Visualize solutions to the knapsack problem

- 05 Warcraft Shortest Path: Use the Warcraft terrain dataset to train a shortest path model

To reproduce the experiments in the original paper, please use the code and follow the instructions in this branch.

- Implement SPO+ [1], DBB [3], DPO [4], PFYL [4], NCE [5] and LTR [6].

- Support the Gurobi and Pyomo APIs

- Support parallel computing for optimization solvers

- Support solution caching [5] to speed up training
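Solution caching [5] replaces some solver calls with a cheap lookup. Instead of solving the problem again, training uses the best solution from a pool of previously computed solutions. Below is a minimal sketch of the idea. It assumes a minimization problem and a solver given as a plain Python function. The `SolutionCache` class, the `pick_cheapest` toy solver, and the `use_solver` flag are hypothetical illustrations, not PyEPO's API; see the PyEPO docs for how caching is actually enabled.

```python
import numpy as np


class SolutionCache:
    """Hypothetical pool of feasible solutions for a minimization problem."""

    def __init__(self, solver):
        self.solver = solver  # exact solver: cost vector -> solution vector
        self.pool = []  # distinct solutions seen so far

    def add(self, w):
        w = np.asarray(w, dtype=float)
        if not any(np.array_equal(w, p) for p in self.pool):
            self.pool.append(w)

    def solve(self, cost, use_solver=False):
        """Call the exact solver if asked (or if the pool is empty), else look up the pool."""
        if use_solver or not self.pool:
            w = np.asarray(self.solver(cost), dtype=float)
            self.add(w)
            return w
        pool = np.stack(self.pool)
        # best cached solution under the given cost vector
        return pool[int(np.argmin(pool @ cost))]


def pick_cheapest(cost):
    """Toy exact solver: select exactly one item, the cheapest one."""
    w = np.zeros_like(cost)
    w[np.argmin(cost)] = 1.0
    return w


cache = SolutionCache(pick_cheapest)
cache.solve(np.array([3.0, 1.0, 2.0]), use_solver=True)  # caches [0, 1, 0]
cache.solve(np.array([1.0, 3.0, 2.0]), use_solver=True)  # caches [1, 0, 0]
w = cache.solve(np.array([2.0, 3.0, 0.5]))  # lookup only, no solver call
print(w)
```

In training one would typically call the exact solver for only a random fraction of instances and use the cache otherwise. The last call shows the trade-off. It returns the cached solution that selects the first item, although the true optimum for that cost vector selects the third.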

You can download PyEPO from our GitHub repository.

```bash
git clone https://github.com/khalil-research/PyEPO.git
```

And install it:

```bash
pip install PyEPO/pkg/.
```

On Windows, multiprocessing cannot be started directly from `__main__` without `freeze_support`. When `processes` is not 1, put your code inside an `if __name__ == "__main__":` block in a `.py` file rather than running it directly in a Jupyter notebook.
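The guard pattern can be illustrated with the standard library alone. On Windows, worker processes are started with the "spawn" method, which re-imports the main script, so any code that starts processes must sit behind the guard. In this minimal sketch, `square` and `main` are hypothetical placeholders for the work PyEPO would dispatch to worker processes; they are not part of PyEPO.

```python
import multiprocessing as mp


def square(v):
    # stands in for one solver call; defined at module level so that
    # worker processes started with "spawn" can import it
    return v * v


def main():
    # everything that starts worker processes lives in a function...
    with mp.Pool(processes=2) as pool:
        return pool.map(square, range(5))


# ...and is only invoked behind the main guard
if __name__ == "__main__":
    mp.freeze_support()  # only matters for frozen Windows executables; a no-op otherwise
    print(main())
```

With PyEPO, the model construction and training loop go inside the guard in the same way, as in the sample training script.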

```python
#!/usr/bin/env python
# coding: utf-8

import gurobipy as gp
from gurobipy import GRB
import numpy as np
import pyepo
from pyepo.model.grb import optGrbModel
import torch
from torch import nn
from torch.utils.data import DataLoader


# optimization model
class myModel(optGrbModel):

    def __init__(self, weights):
        self.weights = np.array(weights)
        self.num_item = len(weights[0])
        super().__init__()

    def _getModel(self):
        # create a model
        m = gp.Model()
        # variables
        x = m.addVars(self.num_item, name="x", vtype=GRB.BINARY)
        # model sense
        m.modelSense = GRB.MAXIMIZE
        # constraints
        m.addConstr(gp.quicksum([self.weights[0,i] * x[i] for i in range(self.num_item)]) <= 7)
        m.addConstr(gp.quicksum([self.weights[1,i] * x[i] for i in range(self.num_item)]) <= 8)
        m.addConstr(gp.quicksum([self.weights[2,i] * x[i] for i in range(self.num_item)]) <= 9)
        return m, x


# prediction model
class LinearRegression(nn.Module):

    def __init__(self):
        super(LinearRegression, self).__init__()
        self.linear = nn.Linear(num_feat, num_item)

    def forward(self, x):
        out = self.linear(x)
        return out


if __name__ == "__main__":

    # generate data
    num_data = 1000 # number of data
    num_feat = 5 # size of feature
    num_item = 10 # number of items
    weights, x, c = pyepo.data.knapsack.genData(num_data, num_feat, num_item,
                                                dim=3, deg=4, noise_width=0.5, seed=135)

    # init optimization model
    optmodel = myModel(weights)

    # init prediction model
    predmodel = LinearRegression()
    # set optimizer
    optimizer = torch.optim.Adam(predmodel.parameters(), lr=1e-2)
    # init SPO+ loss
    spop = pyepo.func.SPOPlus(optmodel, processes=1)

    # build dataset
    dataset = pyepo.data.dataset.optDataset(optmodel, x, c)
    # get data loader
    dataloader = DataLoader(dataset, batch_size=32, shuffle=True)

    # training
    num_epochs = 10
    for epoch in range(num_epochs):
        for data in dataloader:
            x, c, w, z = data
            # forward pass
            cp = predmodel(x)
            loss = spop(cp, c, w, z).mean()
            # backward pass
            optimizer.zero_grad()
            loss.backward()
            optimizer.step()

    # eval
    regret = pyepo.metric.regret(predmodel, optmodel, dataloader)
    print("Regret on Training Set: {:.4f}".format(regret))
```

- [1] Elmachtoub, A. N., & Grigas, P. (2021). Smart “predict, then optimize”. Management Science.

- [2] Mandi, J., Stuckey, P. J., & Guns, T. (2020). Smart predict-and-optimize for hard combinatorial optimization problems. In Proceedings of the AAAI Conference on Artificial Intelligence.

- [3] Vlastelica, M., Paulus, A., Musil, V., Martius, G., & Rolínek, M. (2019). Differentiation of blackbox combinatorial solvers. arXiv preprint arXiv:1912.02175.

- [4] Berthet, Q., Blondel, M., Teboul, O., Cuturi, M., Vert, J. P., & Bach, F. (2020). Learning with differentiable perturbed optimizers. Advances in Neural Information Processing Systems, 33, 9508-9519.

- [5] Mulamba, M., Mandi, J., Diligenti, M., Lombardi, M., Bucarey, V., & Guns, T. (2021). Contrastive losses and solution caching for predict-and-optimize. Proceedings of the Thirtieth International Joint Conference on Artificial Intelligence.

- [6] Mandi, J., Bucarey, V., Mulamba, M., & Guns, T. (2022). Decision-focused learning: through the lens of learning to rank. Proceedings of the 39th International Conference on Machine Learning.