JRM Launcher is a tool designed to manage and launch Job Resource Manager (JRM) instances across various computing environments, with a focus on facilitating complex network connections in distributed computing setups.

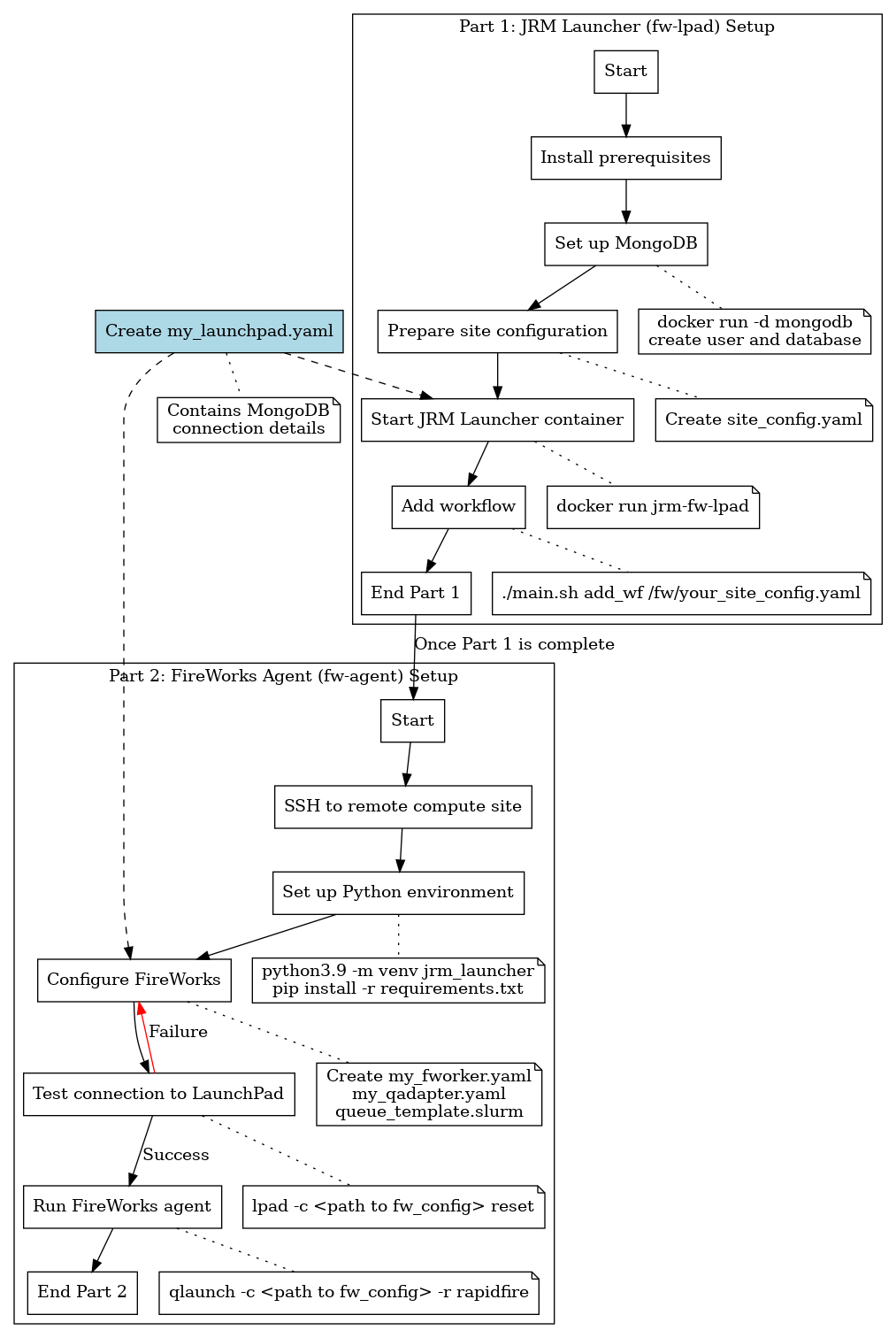

The flow chart above provides an overview of the JRM Launcher deployment process.

This diagram illustrates the key steps involved in setting up and deploying the JRM Launcher, including the setup of both the fw-lpad and fw-agent components, as well as the workflow management process.

For more detailed instructions on setting up the JRM Launcher, refer to `fw-lpad/readme.md`.

- Install prerequisites:

  - A valid NERSC account, ORNL user account, or other remote computing site account
  - MongoDB (for storing the workflows of JRM launches)
  - A Kubernetes control plane installed
  - A valid kubeconfig file for the Kubernetes cluster
  - Docker
  - Python 3.9 (for developers)

- Set up MongoDB for storing FireWorks workflows:

      # Create and start a MongoDB container
      docker run -d -p 27017:27017 --name mongodb-container \
        -v $HOME/JIRIAF/mongodb/data:/data/db mongo:latest

      # Wait for MongoDB to start (about 10 seconds), then create a new database and user
      docker exec -it mongodb-container mongosh --eval '
        db.getSiblingDB("jiriaf").createUser({
          user: "jiriaf",
          pwd: "jiriaf",
          roles: [{role: "readWrite", db: "jiriaf"}]
        })
      '
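The fixed wait of about 10 seconds can be replaced by polling the port. The following is a minimal sketch, not part of JRM Launcher; the helper name `wait_for_port` and its defaults are assumptions made for illustration. An open port only shows that something is listening, so MongoDB may still need a moment before it accepts commands.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 30.0, interval: float = 0.5) -> bool:
    """Return True once a TCP connection to host:port succeeds, False on timeout.

    Hypothetical helper for illustration; it is not shipped with JRM Launcher.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            # A successful connect means something is listening on the port.
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            # Connection refused or timed out: retry until the deadline passes.
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)
```

For example, calling `wait_for_port("localhost", 27017)` before the `docker exec` step would block until the published MongoDB port accepts connections or 30 seconds have passed.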

- Prepare the site configuration file:

  - Use the template in `fw-lpad/FireWorks/jrm_launcher/site_config_template.yaml`
  - Create a configuration file for your specific site (e.g., `perlmutter_config.yaml` or `ornl_config.yaml`)

  Example configurations:

  Perlmutter:

      slurm:
        nodes: 1
        constraint: cpu
        walltime: 00:10:00
        qos: debug
        account: m3792
        reservation: # 100G
      jrm:
        nodename: jrm-perlmutter
        site: perlmutter
        control_plane_ip: jiriaf2302
        apiserver_port: 38687
        kubeconfig: /global/homes/j/jlabtsai/run-vk/kubeconfig/jiriaf2302
        image: docker:jlabtsai/vk-cmd:main
        vkubelet_pod_ips:
          - 172.17.0.1
        custom_metrics_ports: [2221, 1776, 8088, 2222]
        config_class:
      ssh:
        remote_proxy: jlabtsai@perlmutter.nersc.gov
        remote: jlabtsai@128.55.64.13
        ssh_key: /root/.ssh/nersc
        password:
        build_script:

  ORNL:

      slurm:
        nodes: 1
        constraint: ejfat
        walltime: 00:10:00
        qos: normal
        account: csc266
        reservation: #ejfat_demo
      jrm:
        nodename: jrm-ornl
        site: ornl
        control_plane_ip: jiriaf2302
        apiserver_port: 38687
        kubeconfig: /ccsopen/home/jlabtsai/run-vk/kubeconfig/jiriaf2302
        image: docker:jlabtsai/vk-cmd:main
        vkubelet_pod_ips:
          - 172.17.0.1
        custom_metrics_ports: [2221, 1776, 8088, 2222]
        config_class:
      ssh:
        remote_proxy:
        remote: 172.30.161.5
        ssh_key:
        password: < user password in base64 >
        build_script: /root/build-ssh-ornl.sh
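A quick sanity check on a site configuration can catch missing fields before a workflow is added. The sketch below assumes the YAML has already been loaded into a dictionary (for instance with PyYAML's `yaml.safe_load`) and that it has top-level `slurm`, `jrm`, and `ssh` sections as in the examples above. Which fields count as required is an assumption made here for illustration; the template in the repository is the authority.

```python
# Fields treated as required in this sketch. This list is an assumption
# derived from the example configurations, not from JRM Launcher itself.
REQUIRED_KEYS = {
    "slurm": ["nodes", "constraint", "walltime", "qos", "account"],
    "jrm": ["nodename", "site", "control_plane_ip", "apiserver_port", "kubeconfig", "image"],
    "ssh": ["remote"],
}


def find_missing_fields(config: dict) -> list:
    """Return dotted names of sections or fields that are absent or empty."""
    missing = []
    for section, keys in REQUIRED_KEYS.items():
        body = config.get(section)
        if not isinstance(body, dict):
            # The whole section is missing or is not a mapping.
            missing.append(section)
            continue
        for key in keys:
            # An empty YAML value loads as None, so treat None and "" as missing.
            if body.get(key) in (None, ""):
                missing.append(f"{section}.{key}")
    return missing
```

An empty result means every field in `REQUIRED_KEYS` is present; optional fields such as `reservation` or `remote_proxy` are deliberately not checked.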

- Prepare necessary files and directories:

  - Create a directory for logs
  - Create a `port_table.yaml` file
  - Ensure you have the necessary SSH key (e.g., for NERSC access)
  - Create a `my_launchpad.yaml` file with the MongoDB connection details:

        host: localhost
        logdir: <path to logs>
        mongoclient_kwargs: {}
        name: jiriaf
        password: jiriaf
        port: 27017
        strm_lvl: INFO
        uri_mode: false
        user_indices: []
        username: jiriaf
        wf_user_indices: []
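Because `my_launchpad.yaml` is needed in more than one place with only the log directory changing, it can be generated rather than copied by hand. This is a minimal sketch using plain string formatting; the function name `render_launchpad` is hypothetical, and the default values simply mirror the MongoDB setup shown earlier.

```python
LAUNCHPAD_TEMPLATE = """\
host: {host}
logdir: {logdir}
mongoclient_kwargs: {{}}
name: {name}
password: {password}
port: {port}
strm_lvl: INFO
uri_mode: false
user_indices: []
username: {username}
wf_user_indices: []
"""


def render_launchpad(logdir, host="localhost", port=27017,
                     name="jiriaf", username="jiriaf", password="jiriaf"):
    """Return the text of a my_launchpad.yaml file for the given log directory."""
    return LAUNCHPAD_TEMPLATE.format(
        host=host, logdir=logdir, name=name,
        password=password, port=port, username=username,
    )
```

The returned text can be written out with `pathlib.Path("my_launchpad.yaml").write_text(...)`. Replace the default credentials if your MongoDB user differs from the one created above.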

- Copy the kubeconfig file to the remote site:

      scp /path/to/local/kubeconfig user@remote:/path/to/remote/kubeconfig

- Start the JRM Launcher container:

      export logs=/path/to/your/logs/directory

      docker run --name=jrm-fw-lpad -itd --rm --net=host \
        -v ./your_site_config.yaml:/fw/your_site_config.yaml \
        -v $logs:/fw/logs \
        -v `pwd`/port_table.yaml:/fw/port_table.yaml \
        -v $HOME/.ssh/nersc:/root/.ssh/nersc \
        -v `pwd`/my_launchpad.yaml:/fw/util/my_launchpad.yaml \
        jlabtsai/jrm-fw-lpad:main
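The same `docker run` invocation can be assembled programmatically, which makes it easier to pass absolute host paths for every bind mount. The sketch below only builds the argument list and does not start anything; the function name `build_docker_run` is hypothetical, while the container paths and image name are taken from the command above.

```python
from pathlib import Path


def build_docker_run(site_config, logs_dir, port_table, ssh_key, launchpad,
                     image="jlabtsai/jrm-fw-lpad:main"):
    """Build the argv for the JRM Launcher container with absolute bind mounts."""

    def mount(host_path, container_path):
        # Resolve to an absolute path so the mount does not depend on the
        # current working directory at the time the command is run.
        return ["-v", f"{Path(host_path).expanduser().resolve()}:{container_path}"]

    argv = ["docker", "run", "--name=jrm-fw-lpad", "-itd", "--rm", "--net=host"]
    argv += mount(site_config, f"/fw/{Path(site_config).name}")
    argv += mount(logs_dir, "/fw/logs")
    argv += mount(port_table, "/fw/port_table.yaml")
    argv += mount(ssh_key, "/root/.ssh/nersc")
    argv += mount(launchpad, "/fw/util/my_launchpad.yaml")
    argv.append(image)
    return argv
```

The result can be printed with `shlex.join(argv)` for inspection or handed to `subprocess.run(argv, check=True)`.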

- Verify the container is running:

      docker ps

- Log into the container:

      docker exec -it jrm-fw-lpad /bin/bash

- Initialize the launchpad. Inside the container, run:

      lpad -l /fw/util/my_launchpad.yaml reset

- Add a workflow:

      ./main.sh add_wf /fw/your_site_config.yaml

  - Note the workflow ID provided for future reference

For more detailed instructions on setting up the FireWorks Agent, refer to `fw-agent/readme.md`.

- SSH into the remote compute site

- Create a new directory for your FireWorks agent:

      mkdir fw-agent
      cd fw-agent

- Copy the `requirements.txt` file to this directory (you may need to transfer it from your local machine)

- Create a Python virtual environment and activate it:

      python3.9 -m venv jrm_launcher
      source jrm_launcher/bin/activate

- Install the required packages:

      pip install -r requirements.txt

- Create the `fw_config` directory and necessary configuration files:

      mkdir fw_config
      cd fw_config

Create and configure the following files in the

fw_configdirectory:my_fworker.yaml:# For Perlmutter: category: perlmutter name: perlmutter query: '{}' # For ORNL: # category: ornl # name: ornl # query: '{}'

my_qadapter.yaml:_fw_name: CommonAdapter _fw_q_type: SLURM _fw_template_file: <path to queue_template.yaml> rocket_launch: rlaunch -c <path to fw_config> singleshot nodes: walltime: constraint: account: job_name: logdir: <path to logs> pre_rocket: post_rocket:

my_launchpad.yaml:host: localhost logdir: <path to logs> mongoclient_kwargs: {} name: jiriaf password: jiriaf port: 27017 strm_lvl: INFO uri_mode: false user_indices: [] username: jiriaf wf_user_indices: []

queue_template.yaml:#!/bin/bash -l #SBATCH --nodes=$${nodes} #SBATCH --ntasks=$${ntasks} #SBATCH --ntasks-per-node=$${ntasks_per_node} #SBATCH --cpus-per-task=$${cpus_per_task} #SBATCH --mem=$${mem} #SBATCH --gres=$${gres} #SBATCH --qos=$${qos} #SBATCH --time=$${walltime} #SBATCH --partition=$${queue} #SBATCH --account=$${account} #SBATCH --job-name=$${job_name} #SBATCH --license=$${license} #SBATCH --output=$${job_name}-%j.out #SBATCH --error=$${job_name}-%j.error #SBATCH --constraint=$${constraint} #SBATCH --reservation=$${reservation} $${pre_rocket} cd $${launch_dir} $${rocket_launch} $${post_rocket}
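To see how the `$${name}` placeholders in `queue_template.yaml` turn into a SLURM script, the substitution can be imitated with Python's `string.Template` and a `$$` delimiter. This is only an illustration of the idea: the class and function names are hypothetical, and dropping lines whose placeholder has no value is an assumption about the intended behaviour, so FireWorks' own handling may differ in detail.

```python
import string


class QueueScriptTemplate(string.Template):
    # Placeholders look like $${name}, so the delimiter is "$$" rather than "$".
    delimiter = "$$"


def render_queue_script(template_text, params):
    """Fill $${name} placeholders and drop lines that remain unfilled."""
    # safe_substitute leaves unknown placeholders in place instead of raising.
    filled = QueueScriptTemplate(template_text).safe_substitute(params)
    # Assumption: a line still containing a placeholder is omitted, so
    # optional #SBATCH directives disappear when no value is supplied.
    kept = [line for line in filled.splitlines() if "$${" not in line]
    return "\n".join(kept)
```

With `params = {"nodes": 1, "walltime": "00:10:00"}`, only the `--nodes` and `--time` directives would survive among the `#SBATCH` lines, which is why `my_qadapter.yaml` can leave most keys empty.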

- Test the connection to the LaunchPad database:

      lpad -c <path to fw_config> reset

  If prompted "Are you sure? This will RESET your LaunchPad. (Y/N)", type 'N' to cancel.

- Run the FireWorks agent:

      qlaunch -c <path to fw_config> -r rapidfire

Use the following commands on the fw-lpad machine to manage workflows and connections:

- Delete a workflow:

      ./main.sh delete_wf <workflow_id>

- Delete ports:

      ./main.sh delete_ports <start_port> <end_port>

- Connect to database:

      ./main.sh connect db /fw/your_site_config.yaml

- Connect to API server:

      ./main.sh connect apiserver 35679 /fw/your_site_config.yaml

- Connect to metrics server:

      ./main.sh connect metrics 10001 vk-node-1 /fw/your_site_config.yaml

- Connect to custom metrics:

      ./main.sh connect custom_metrics 20001 8080 vk-node-1 /fw/your_site_config.yaml

If something goes wrong, start with these checks:

- Check logs in the `LOG_PATH` directory for SSH connection issues

- Ensure all configuration files are correctly formatted and contain required fields

- Verify that necessary ports are available and not blocked by firewalls

- For fw-agent issues:

  - Ensure the FireWorks LaunchPad is accessible from the remote compute site
  - Verify that the Python environment has all necessary dependencies installed
  - Consult the FireWorks documentation for more detailed configuration and usage information
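The port availability check mentioned above can be scripted. The sketch below tries to bind each port locally and reports the ones that are free; the helper names are hypothetical. It only detects ports already in use on the machine where it runs and cannot tell whether a firewall between sites blocks traffic.

```python
import socket


def is_port_free(port, host="127.0.0.1"):
    """Return True if a TCP socket can be bound to host:port right now."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))
        except OSError:
            # Typically "address already in use" or "permission denied".
            return False
        return True


def free_ports_in_range(start, end, host="127.0.0.1"):
    """Return the ports in [start, end] that are currently free on host."""
    return [port for port in range(start, end + 1) if is_port_free(port, host)]
```

For instance, `free_ports_in_range(10001, 10010)` lists which of those ten ports could currently be used for a new connection.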

For more detailed troubleshooting information, refer to `fw-lpad/readme.md` and `fw-agent/readme.md`.

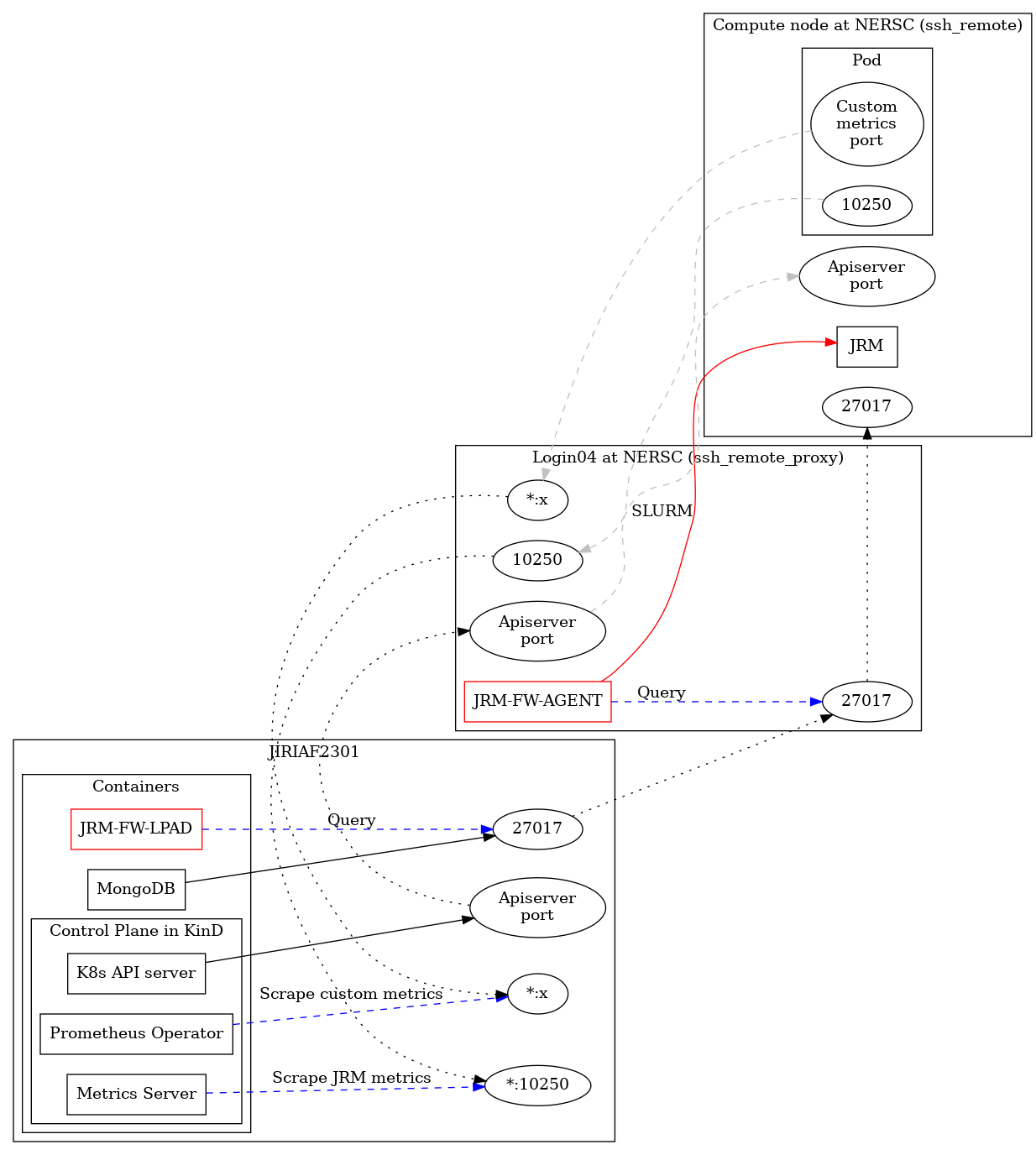

The core functionality of JRM Launcher revolves around managing network connections between different components of a distributed computing environment. The network architecture is visually represented in the jrm-network-flowchart.png file included in this repository.

This diagram illustrates the key components and connections managed by JRM Launcher (JRM-FW), including:

- SSH connections to remote servers

- Port forwarding for various services

- Connections to databases, API servers, and metrics servers

- Workflow management across different computing nodes

JRM Launcher acts as a central management tool, orchestrating these connections to ensure smooth operation of distributed workflows and efficient resource utilization.
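To make the SSH and port-forwarding idea concrete, the sketch below assembles an OpenSSH command for a single forwarded port, optionally going through a jump host such as the `remote_proxy` entry in the site configuration. It is an illustration only and not JRM Launcher's own code: the function name and parameters are hypothetical, and the forwarding direction (`-L` or `-R`) used by each `connect` subcommand is not specified here.

```python
def build_ssh_forward(local_port, remote_port, remote, remote_proxy=None,
                      ssh_key=None, target_host="localhost", reverse=False):
    """Build an ssh argv that forwards one TCP port.

    reverse=False uses -L (local port forwarded to the remote side);
    reverse=True uses -R (remote port forwarded back to the local side).
    """
    flag = "-R" if reverse else "-L"
    # -N: do not run a remote command, only keep the tunnel open.
    argv = ["ssh", "-N", flag, f"{local_port}:{target_host}:{remote_port}"]
    if ssh_key:
        argv += ["-i", ssh_key]       # identity file, e.g. the ssh_key setting
    if remote_proxy:
        argv += ["-J", remote_proxy]  # jump host, e.g. a site login node
    argv.append(remote)
    return argv
```

Calling `build_ssh_forward(20001, 8080, "172.30.161.5")` yields `ssh -N -L 20001:localhost:8080 172.30.161.5`, a tunnel that exposes remote port 8080 on local port 20001.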

Key features of JRM Launcher:

- Workflow management

- Flexible connectivity to various services

- Site-specific configurations

- SSH integration and port forwarding

- Port management for workflows

- Extensibility to support new computing environments

JRM Launcher is designed to be easily extensible to support various computing environments. For information on how to add support for new environments, refer to the "Customization" section in the fw-lpad readme file.

By leveraging JRM Launcher, you can simplify the management of complex network connections in distributed computing environments, allowing you to focus on your workflows rather than infrastructure management.