Spleeter Web is a web application for isolating or removing the vocal, accompaniment, bass, and/or drum components of any song. For example, you can use it to isolate the vocals of a track, or you can use it to remove the vocals to get an instrumental version of a song.

It supports a number of different source separation models: Spleeter (4stems-model and 5stems-model), Demucs, CrossNet-Open-Unmix, and D3Net.

The app uses Django for the backend API and React for the frontend. Celery is used for the task queue. Docker images are available, including ones with GPU support.

- Features

- Demo site

- Getting started with Docker

- Getting started without Docker

- Configuration

- Using cloud storage

- Deployment

- Common issues & FAQs

- Credits

- License

- Supports Spleeter, Demucs, CrossNet-Open-Unmix (X-UMX), and D3Net source separation models

- Each model supports a different set of user-configurable parameters in the UI

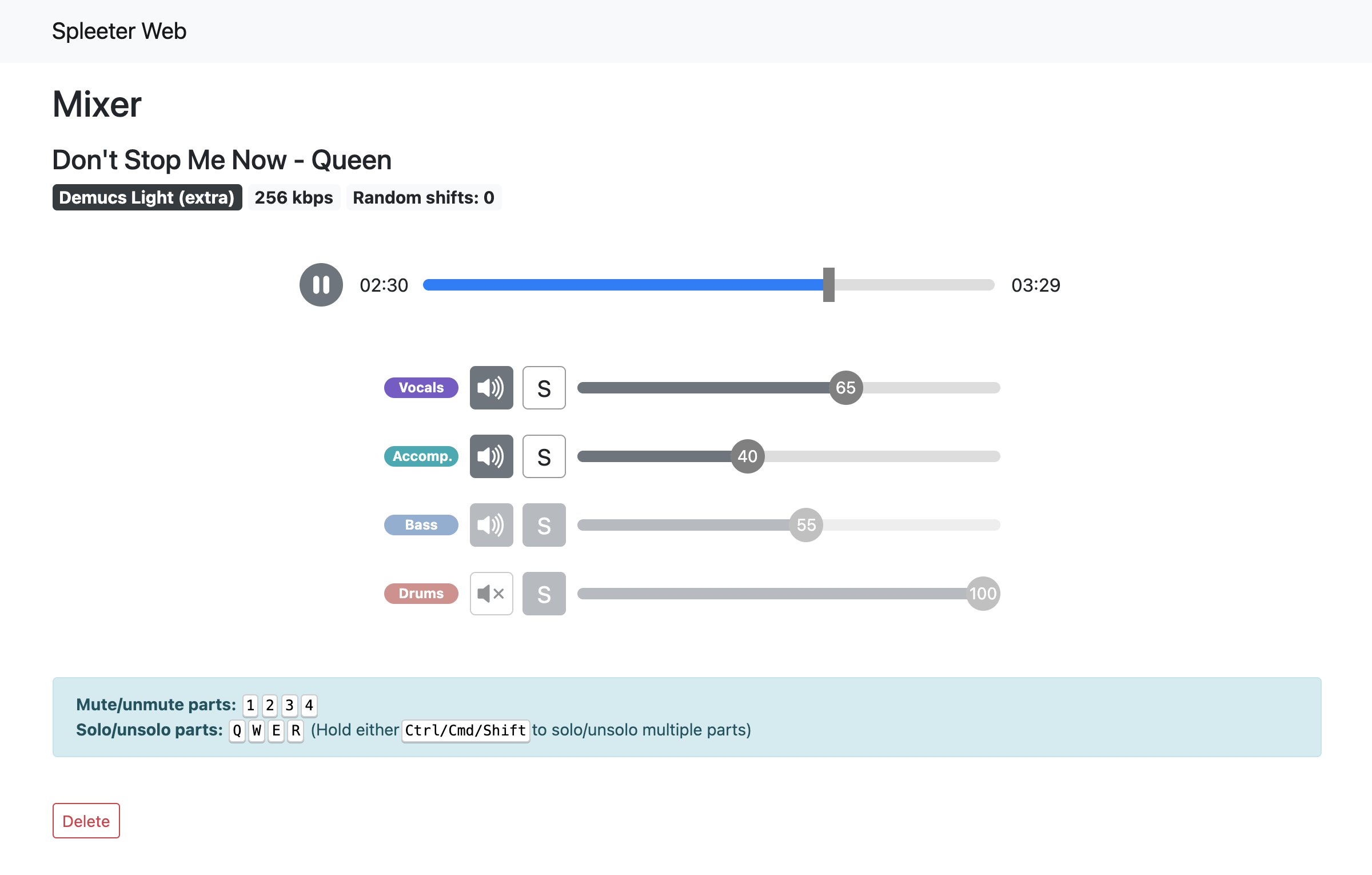

- Dynamic Mixes let you create your own custom mix of the different components, play it back in real time, and export it

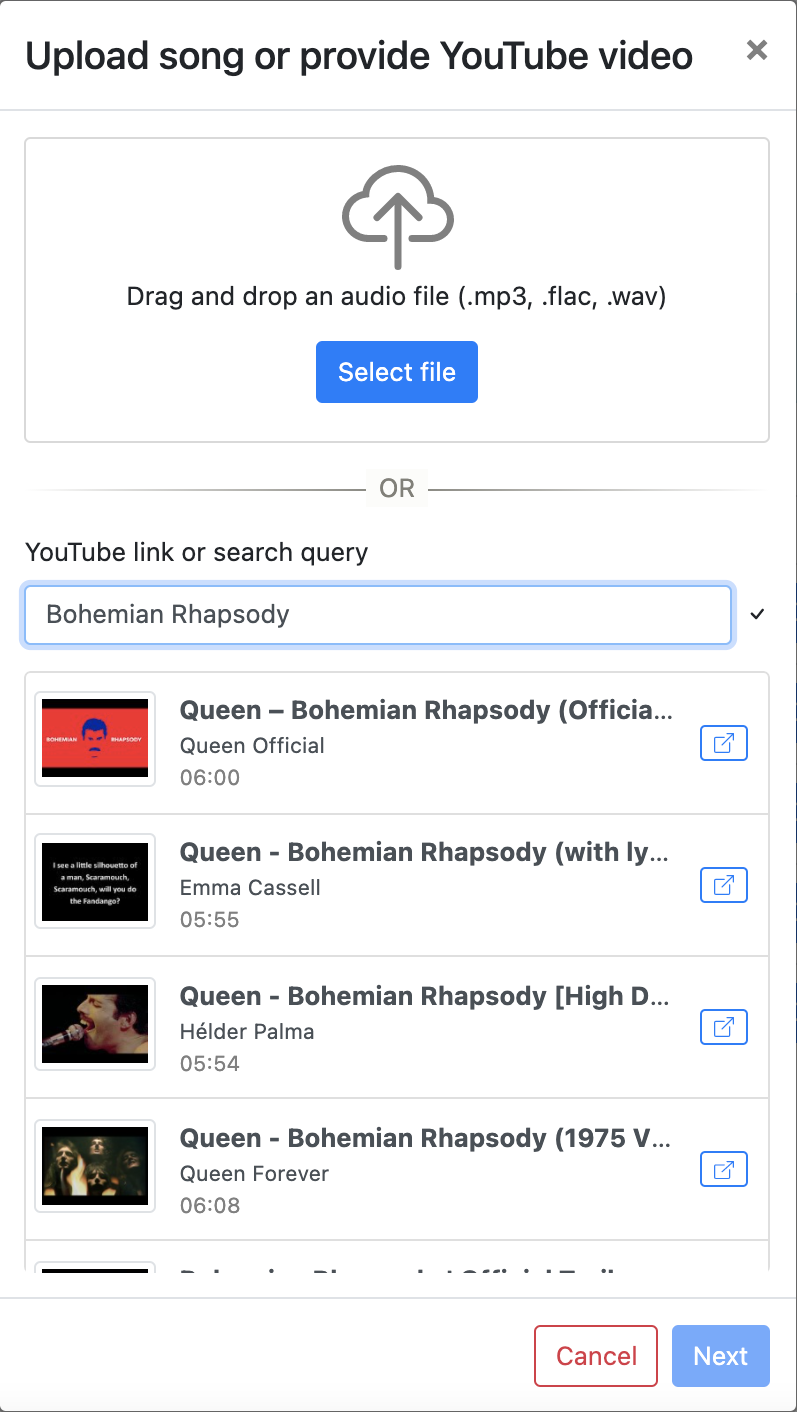

- Import tracks by uploading an audio file or from a YouTube link

- Built-in YouTube search functionality (YouTube Data API key required)

- Supports lossy (MP3) and lossless (FLAC, WAV) output formats

- Persistent audio library with ability to stream and download your source tracks and mixes

- Customize the number of background workers that handle audio separation and YouTube imports

- Supports third-party storage backends like S3 and Azure Blob Storage

- Clean and responsive UI

- Support for GPU separation

- Fully Dockerized

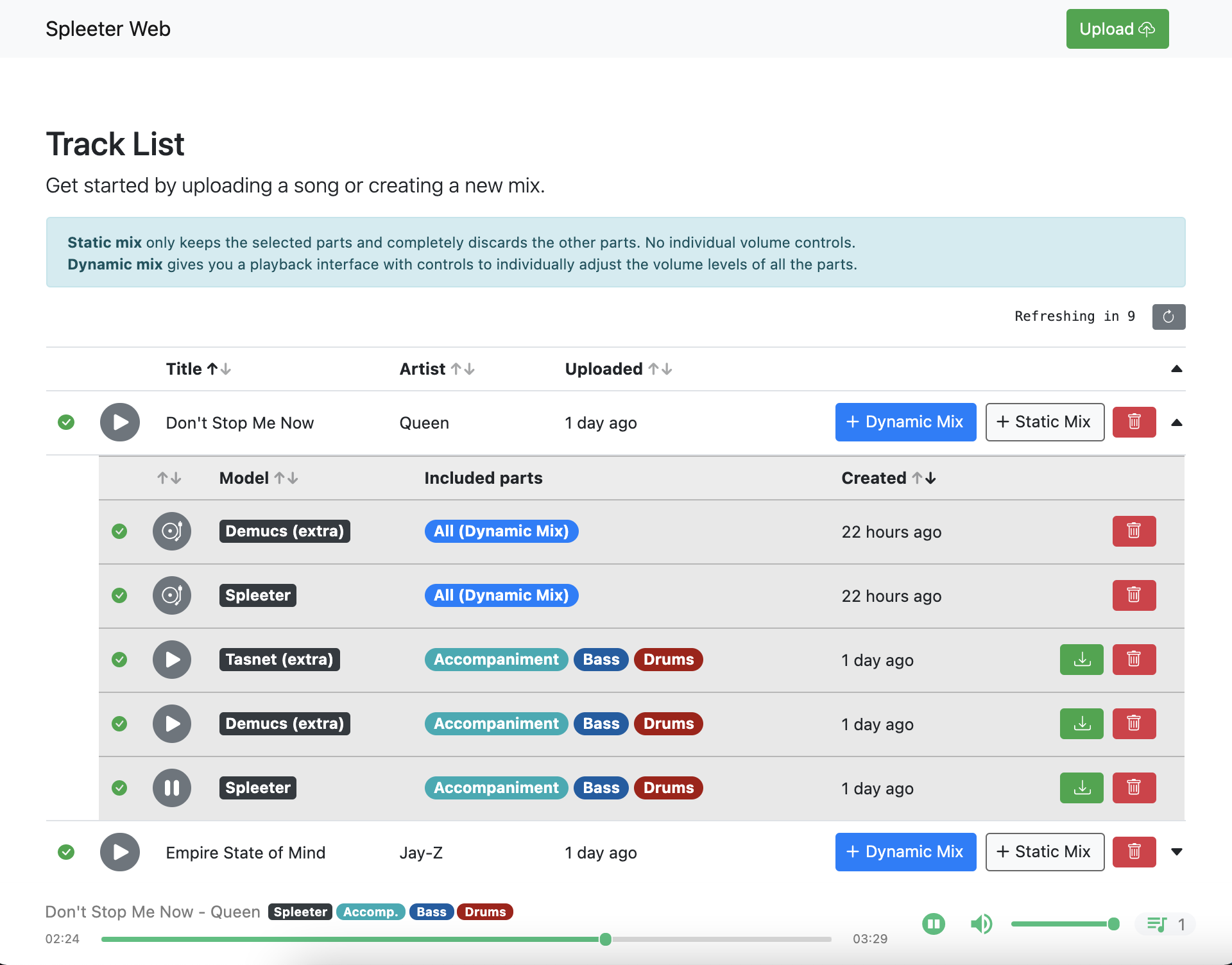
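Conceptually, a custom mix can be modelled as a weighted sum of the separated stems, with one volume (gain) per stem. The plain-Python sketch below illustrates that idea. It is not Spleeter Web's mixer. The `dynamic_mix` helper, the stem names, the sample values, and the hard clipping to [-1, 1] are all assumptions made for illustration.

```python
# Conceptual sketch only: NOT Spleeter Web's implementation.
# Assumes each stem is a list of float samples in [-1.0, 1.0], all of equal length.
from typing import Dict, List


def dynamic_mix(stems: Dict[str, List[float]], gains: Dict[str, float]) -> List[float]:
    """Sum the stems sample by sample, scaling each by its gain, then clip to [-1, 1]."""
    lengths = {len(samples) for samples in stems.values()}
    if len(lengths) != 1:
        raise ValueError("all stems must have the same number of samples")
    (length,) = lengths
    mixed = [0.0] * length
    for name, samples in stems.items():
        gain = gains.get(name, 1.0)  # stems without an explicit gain play at full volume
        for i, sample in enumerate(samples):
            mixed[i] += gain * sample
    # Hard-clip (an assumption) so the summed signal stays in the valid sample range.
    return [max(-1.0, min(1.0, s)) for s in mixed]


# Hypothetical 3-sample stems.
stems = {
    "vocals": [0.5, -0.5, 0.25],
    "other": [0.25, 0.25, 0.5],
    "bass": [0.5, 0.5, 0.5],
    "drums": [0.0, 0.125, -0.25],
}
# Muting the vocals yields an instrumental mix.
print(dynamic_mix(stems, {"vocals": 0.0}))
```

Setting a gain to `0.0` removes a component entirely. Leaving every gain at `1.0` recombines all the stems.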


- 4 GB+ of memory (source separation is memory-intensive)

- Docker and Docker Compose

Clone repo:

```sh
$ git clone https://github.com/JeffreyCA/spleeter-web.git
$ cd spleeter-web
```

(Optional) Set the YouTube Data API key (for YouTube search functionality):

You can skip this step, but you will not be able to import songs by searching with a query. You will still be able to import songs via YouTube links.

Create a `.env` file at the project root with the following contents:

```
YOUTUBE_API_KEY=<YouTube Data API key>
```

(Optional) Set up GPU support. Source separation can be accelerated with a GPU (only NVIDIA GPUs are supported):

- Install NVIDIA drivers for your GPU.
- Install the NVIDIA Container Toolkit. If on Windows, refer to this.
- Verify that Docker works with your GPU by running:

  ```sh
  sudo docker run --rm --gpus all nvidia/cuda:11.8.0-base-ubuntu20.04 nvidia-smi
  ```

Download and run prebuilt Docker images:

Note: On Apple Silicon and other AArch64 systems, the Docker images need to be built from source.

```sh
# CPU separation
spleeter-web$ docker-compose -f docker-compose.yml -f docker-compose.prod.yml -f docker-compose.prod.selfhost.yml up
# GPU separation
spleeter-web$ docker-compose -f docker-compose.gpu.yml -f docker-compose.prod.yml -f docker-compose.prod.selfhost.yml up
```

Alternatively, you can build the Docker images from source:

```sh
# CPU separation
spleeter-web$ docker-compose -f docker-compose.yml -f docker-compose.build.yml -f docker-compose.prod.yml -f docker-compose.prod.selfhost.yml up --build
# GPU separation
spleeter-web$ docker-compose -f docker-compose.gpu.yml -f docker-compose.build.gpu.yml -f docker-compose.prod.yml -f docker-compose.prod.selfhost.yml up --build
```

Launch Spleeter Web:

Navigate to http://127.0.0.1:80 in your browser. Uploaded tracks and generated mixes will appear in `media/uploads` and `media/separate`, respectively, on your host machine.

If you are on Windows, it's recommended to follow the Docker instructions above. Celery is not well-supported on Windows.

- x86-64 arch (For AArch64 systems, use Docker)

- 4 GB+ of memory (source separation is memory-intensive)

- Python 3.8+

- Node.js 16+

- Redis

- ffmpeg and ffprobe

  - On macOS, you can install them using Homebrew or MacPorts

  - On Windows, you can follow this guide

Set environment variables:

Make sure these variables are set in every terminal session before running the commands below.

```sh
# Unix/macOS:
(env) spleeter-web$ export YOUTUBE_API_KEY=<api key>
# Windows:
(env) spleeter-web$ set YOUTUBE_API_KEY=<api key>
```

Create a Python virtual environment:

```sh
spleeter-web$ python -m venv env
# Unix/macOS:
spleeter-web$ source env/bin/activate
# Windows:
spleeter-web$ .\env\Scripts\activate
```

Install Python dependencies:

```sh
(env) spleeter-web$ pip install -r requirements.txt
(env) spleeter-web$ pip install -r requirements-spleeter.txt --no-dependencies
```

Install Node dependencies:

```sh
spleeter-web$ cd frontend
spleeter-web/frontend$ npm install
```

Ensure the Redis server is running on `localhost:6379` (needed for Celery). You can run it on a different host or port, but make sure to update `CELERY_BROKER_URL` and `CELERY_RESULT_BACKEND` in `settings.py`. They must follow the format `redis://host:port/db`.
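As a quick sanity check for the `redis://host:port/db` format, the hypothetical helpers below (not part of Spleeter Web) build such a URL and reject URLs that lack a port or a numeric database index. They rely only on `urllib.parse.urlsplit` from the Python standard library.

```python
# Hypothetical helpers, not part of Spleeter Web: build and check a Celery
# broker/result-backend URL of the form redis://host:port/db.
from urllib.parse import urlsplit


def redis_url(host: str = "localhost", port: int = 6379, db: int = 0) -> str:
    """Build a redis://host:port/db URL (defaults match localhost:6379, db 0)."""
    return f"redis://{host}:{port}/{db}"


def is_valid_redis_url(url: str) -> bool:
    """Return True only if url has the redis://host:port/db shape."""
    parts = urlsplit(url)
    try:
        port = parts.port  # raises ValueError for a non-numeric or out-of-range port
    except ValueError:
        return False
    db = parts.path.lstrip("/")  # "/0" -> "0"
    return (
        parts.scheme == "redis"
        and bool(parts.hostname)
        and port is not None
        and db.isdigit()
    )


print(redis_url("redis.internal", 6380, 1))
print(is_valid_redis_url("redis://localhost:6379"))  # missing the /db part
```

For example, `redis_url("redis.internal", 6380, 1)` returns `redis://redis.internal:6380/1`. You could use that value for both `CELERY_BROKER_URL` and `CELERY_RESULT_BACKEND`.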

Apply migrations:

```sh
(env) spleeter-web$ python manage.py migrate
```

Build frontend:

```sh
spleeter-web$ npm run build --prefix frontend
```

Start the backend in a separate terminal:

```sh
(env) spleeter-web$ python manage.py collectstatic && python manage.py runserver 127.0.0.1:8000
```

Start Celery workers in a separate terminal:

Unix/macOS:

```sh
# Start fast worker
(env) spleeter-web$ celery -A api worker -l INFO -Q fast_queue -c 3
# Start slow worker
(env) spleeter-web$ celery -A api worker -l INFO -Q slow_queue -c 1
```

This launches two Celery workers: one processes fast tasks like YouTube imports, and the other processes slow tasks like source separation. The fast worker can work on 3 tasks concurrently, while the slow worker handles only a single task at a time (since source separation is memory-intensive). Feel free to adjust these values to suit your needs.
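The two worker commands differ only in the queue name and the `-c` concurrency value. The hypothetical helper below rebuilds the same argument lists from those two values, which can be handy if you script worker startup. `worker_command` is an illustrative name, not part of Spleeter Web. Its `windows` flag appends the `--pool=gevent` option used in the Windows commands.

```python
# Hypothetical helper: rebuilds the Celery worker commands from a queue name and
# a concurrency value. Defaults used below (3 and 1) mirror the text.
import shlex
from typing import List


def worker_command(queue: str, concurrency: int, windows: bool = False) -> List[str]:
    """Return the argv for one Celery worker that consumes a single queue."""
    if concurrency < 1:
        raise ValueError("concurrency must be at least 1")
    argv = ["celery", "-A", "api", "worker", "-l", "INFO", "-Q", queue, "-c", str(concurrency)]
    if windows:
        argv.append("--pool=gevent")  # the Windows commands use the gevent pool
    return argv


fast = worker_command("fast_queue", 3)  # fast tasks, e.g. YouTube imports
slow = worker_command("slow_queue", 1)  # memory-intensive source separation
print(shlex.join(fast))
print(shlex.join(slow))
```

Passing one of these lists to `subprocess.run` would start the corresponding worker. Keeping the slow queue at a concurrency of 1 limits how many memory-intensive separations run at once.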

Windows:

You'll first need to install `gevent`. Note, however, that you will not be able to abort in-progress tasks if using Celery on Windows.

```sh
(env) spleeter-web$ pip install gevent
```

```sh
# Start fast worker
(env) spleeter-web$ celery -A api worker -l INFO -Q fast_queue -c 3 --pool=gevent
# Start slow worker
(env) spleeter-web$ celery -A api worker -l INFO -Q slow_queue -c 1 --pool=gevent
```

Launch Spleeter Web:

Navigate to http://127.0.0.1:8000 in your browser. Uploaded and mixed tracks will appear in `media/uploads` and `media/separate`, respectively.

| Settings file | Description |
|---|---|
| `django_react/settings.py` | The base Django settings used when launched in a non-Docker context. |
| `django_react/settings_dev.py` | Contains the override settings used when run in development mode (i.e. `DJANGO_DEVELOPMENT` is set). |
| `django_react/settings_docker.py` | The base Django settings used when launched using Docker. |
| `django_react/settings_docker_dev.py` | Contains the override settings used when run in development mode using Docker (i.e. `docker-compose.dev.yml`). |

Here is a list of all the environment variables you can use to further customize Spleeter Web:

| Name | Description |
|---|---|
| `CPU_SEPARATION` | No need to set this if using Docker. Otherwise, set to `1` if you want CPU separation and `0` if you want GPU separation. |
| `DJANGO_DEVELOPMENT` | Set to `true` if you want to run a development build, which uses `settings_dev.py`/`settings_docker_dev.py` and runs Webpack in dev mode. |
| `ALLOW_ALL_HOSTS` | Set to `1` if you want Django to allow all hosts, overriding any `APP_HOST` value. This effectively sets the Django setting `ALLOWED_HOSTS` to `['*']`. There are security risks associated with doing this. Default: `0` |
| `APP_HOST` | Domain name(s) or public IP(s) of the server. To specify multiple hosts, separate them with a comma (`,`). |
| `API_HOST` | Hostname of the API server (for Nginx). |
| `DEFAULT_FILE_STORAGE` | Whether to use the local filesystem or cloud-based storage for storing uploads and separated files: `FILE`, `AWS`, or `AZURE`. |
| `AWS_ACCESS_KEY_ID` | AWS access key. Used when `DEFAULT_FILE_STORAGE` is set to `AWS`. |
| `AWS_SECRET_ACCESS_KEY` | AWS secret access key. Used when `DEFAULT_FILE_STORAGE` is set to `AWS`. |
| `AWS_STORAGE_BUCKET_NAME` | AWS S3 storage bucket name. Used when `DEFAULT_FILE_STORAGE` is set to `AWS`. |
| `AWS_S3_CUSTOM_DOMAIN` | Custom domain, such as for a CDN. Used when `DEFAULT_FILE_STORAGE` is set to `AWS`. |
| `AWS_S3_REGION_NAME` | S3 region (e.g. `us-east-1`). Used when `DEFAULT_FILE_STORAGE` is set to `AWS`. |
| `AWS_S3_SIGNATURE_VERSION` | Default signature version used for generating presigned URLs. To be able to access your S3 objects in all regions through presigned URLs, set this to `s3v4`. Used when `DEFAULT_FILE_STORAGE` is set to `AWS`. |
| `AZURE_ACCOUNT_KEY` | Azure Blob account key. Used when `DEFAULT_FILE_STORAGE` is set to `AZURE`. |
| `AZURE_ACCOUNT_NAME` | Azure Blob account name. Used when `DEFAULT_FILE_STORAGE` is set to `AZURE`. |
| `AZURE_CONTAINER` | Azure Blob container name. Used when `DEFAULT_FILE_STORAGE` is set to `AZURE`. |
| `AZURE_CUSTOM_DOMAIN` | Custom domain, such as for a CDN. Used when `DEFAULT_FILE_STORAGE` is set to `AZURE`. |
| `CELERY_BROKER_URL` | Broker URL for Celery (e.g. `redis://localhost:6379/0`). |
| `CELERY_RESULT_BACKEND` | Result backend for Celery (e.g. `redis://localhost:6379/0`). |
| `CELERY_FAST_QUEUE_CONCURRENCY` | Number of concurrent YouTube import tasks Celery can process. Docker only. |
| `CELERY_SLOW_QUEUE_CONCURRENCY` | Number of concurrent source separation tasks Celery can process. Docker only. |
| `CERTBOT_DOMAIN` | Domain for creating HTTPS certs using Let's Encrypt's Certbot. Docker only. |
| `CERTBOT_EMAIL` | Email address for creating HTTPS certs using Let's Encrypt's Certbot. Docker only. |
| `D3NET_OPENVINO` | Set to `1` to use OpenVINO for D3Net CPU separation. Requires an Intel CPU. |
| `DEMUCS_SEGMENT_SIZE` | Length of each split for GPU separation. Default is `40`, which requires around 7 GB of GPU memory. For GPUs with 2-4 GB of memory, experiment with lower values (minimum is `10`). It is also recommended to set `PYTORCH_NO_CUDA_MEMORY_CACHING=1`. |
| `D3NET_OPENVINO_THREADS` | Number of CPU threads for D3Net OpenVINO separation. Default: the number of CPUs on the machine. Requires an Intel CPU. |
| `DEV_WEBSERVER_PORT` | Port that the development webserver is mapped to on the host machine. Docker only. |
| `ENABLE_CROSS_ORIGIN_HEADERS` | Set to `1` to set the `Cross-Origin-Embedder-Policy` and `Cross-Origin-Opener-Policy` headers, which are required for exporting Dynamic Mixes in-browser. |
| `NGINX_PORT` | Port that Nginx is mapped to on the host machine for HTTP. Docker only. |
| `NGINX_PORT_SSL` | Port that Nginx is mapped to on the host machine for HTTPS. Docker only. |
| `PYTORCH_NO_CUDA_MEMORY_CACHING` | Set to `1` to disable PyTorch caching for GPU separation. May help with Demucs separation on GPUs with less memory. Also see `DEMUCS_SEGMENT_SIZE`. |
| `UPLOAD_FILE_SIZE_LIMIT` | Maximum allowed upload file size (in megabytes). Default is `100`. |
| `YOUTUBE_API_KEY` | YouTube Data API key. |
| `YOUTUBE_LENGTH_LIMIT` | Maximum allowed YouTube track length (in minutes). Default is `30`. |
| `YOUTUBEDL_SOURCE_ADDR` | Client-side IP address for yt-dlp to bind to. If you are facing 403 Forbidden errors, try setting this to `0.0.0.0` to force all connections through IPv4. |
| `YOUTUBEDL_VERBOSE` | Set to `1` to enable verbose logging for yt-dlp. |
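Several variables in the table interact with each other or carry defaults. The sketch below shows one plausible way to interpret a few of them from a plain mapping of environment variables. It is not Spleeter Web's actual settings code. The helper names, the returned keys, treating a megabyte as 1024 * 1024 bytes, and rejecting a `DEMUCS_SEGMENT_SIZE` below 10 are all assumptions.

```python
# Hypothetical sketch, NOT Spleeter Web's settings.py: interprets a few variables
# from the table. Helper names, output keys, and unit conversions are assumptions.
import os
from typing import Dict, List, Mapping


def allowed_hosts(env: Mapping[str, str]) -> List[str]:
    """ALLOW_ALL_HOSTS=1 overrides APP_HOST; otherwise split APP_HOST on commas."""
    if env.get("ALLOW_ALL_HOSTS", "0") == "1":
        return ["*"]
    return [host.strip() for host in env.get("APP_HOST", "").split(",") if host.strip()]


def demucs_segment_size(env: Mapping[str, str]) -> int:
    """DEMUCS_SEGMENT_SIZE defaults to 40; values below the minimum of 10 are rejected."""
    value = int(env.get("DEMUCS_SEGMENT_SIZE", "40"))
    if value < 10:
        raise ValueError("DEMUCS_SEGMENT_SIZE must be at least 10")
    return value


def load_settings(env: Mapping[str, str] = os.environ) -> Dict[str, object]:
    return {
        "ALLOWED_HOSTS": allowed_hosts(env),
        # The table gives limits in megabytes and minutes; convert to bytes and seconds.
        "UPLOAD_FILE_SIZE_LIMIT_BYTES": int(env.get("UPLOAD_FILE_SIZE_LIMIT", "100")) * 1024 * 1024,
        "YOUTUBE_LENGTH_LIMIT_SECONDS": int(env.get("YOUTUBE_LENGTH_LIMIT", "30")) * 60,
        "DEMUCS_SEGMENT_SIZE": demucs_segment_size(env),
    }


print(load_settings({"APP_HOST": "example.com, 203.0.113.5", "UPLOAD_FILE_SIZE_LIMIT": "200"}))
```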

By default, Spleeter Web uses the local filesystem to store uploaded files and mixes. It uses django-storages, so you can also configure it to use other storage backends like Azure Storage or AWS S3.

Set the environment variable `DEFAULT_FILE_STORAGE` (in `.env` if using Docker) to `FILE` (local storage), `AWS` (S3 storage), or `AZURE` (Azure Storage).

Then, depending on which backend you're using, set these additional variables:

AWS S3:

- `AWS_ACCESS_KEY_ID`
- `AWS_SECRET_ACCESS_KEY`
- `AWS_STORAGE_BUCKET_NAME`

Azure Storage:

- `AZURE_ACCOUNT_KEY`
- `AZURE_ACCOUNT_NAME`
- `AZURE_CONTAINER`
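Before starting the app, it can help to confirm that the variables for the chosen backend are actually set. The check below is a hypothetical helper, not part of Spleeter Web. It encodes only the variable lists above and assumes `FILE` when `DEFAULT_FILE_STORAGE` is unset.

```python
# Hypothetical pre-flight check, not part of Spleeter Web: reports which of the
# required variables are missing for the selected DEFAULT_FILE_STORAGE backend.
from typing import Dict, List, Mapping

REQUIRED_VARS: Dict[str, List[str]] = {
    "FILE": [],
    "AWS": ["AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY", "AWS_STORAGE_BUCKET_NAME"],
    "AZURE": ["AZURE_ACCOUNT_KEY", "AZURE_ACCOUNT_NAME", "AZURE_CONTAINER"],
}


def missing_storage_vars(env: Mapping[str, str]) -> List[str]:
    backend = env.get("DEFAULT_FILE_STORAGE", "FILE")  # local storage is the default
    if backend not in REQUIRED_VARS:
        raise ValueError(f"unknown DEFAULT_FILE_STORAGE: {backend!r}")
    # Treat empty values as missing, too.
    return [name for name in REQUIRED_VARS[backend] if not env.get(name)]


print(missing_storage_vars({"DEFAULT_FILE_STORAGE": "AWS", "AWS_ACCESS_KEY_ID": "example-key-id"}))
```

With Docker, variables defined in `.env` are read by Docker Compose and are not necessarily present in your shell's `os.environ`. In that case, pass the `.env` values in as a dictionary.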

To play back a dynamic mix, you may need to configure your storage service's CORS settings to allow the Access-Control-Allow-Origin header.

If you have `ENABLE_CROSS_ORIGIN_HEADERS` set, you'll also need to set the `Cross-Origin-Resource-Policy` response header of audio files to `cross-origin`. See this for more details.
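For S3, one way to allow cross-origin reads of the audio files is a bucket CORS rule. The sketch below assumes boto3 and valid AWS credentials, and the origin, methods, and `MaxAgeSeconds` value are placeholders to adapt. It uses boto3's `put_bucket_cors` call. It does not set the `Cross-Origin-Resource-Policy` header, which is outside the scope of a CORS rule.

```python
# Sketch, assuming an S3 bucket and boto3; origins and values are placeholders.
from typing import Dict, List


def cors_configuration(origins: List[str]) -> Dict[str, object]:
    """Build an S3 CORS configuration that lets the given origins fetch audio files."""
    return {
        "CORSRules": [
            {
                "AllowedOrigins": origins,
                "AllowedMethods": ["GET", "HEAD"],
                "AllowedHeaders": ["*"],
                "MaxAgeSeconds": 3000,
            }
        ]
    }


def apply_cors(bucket: str, origins: List[str]) -> None:
    """Apply the configuration to a bucket (requires boto3 and AWS credentials)."""
    import boto3  # imported lazily so cors_configuration works without boto3 installed

    s3 = boto3.client("s3")
    s3.put_bucket_cors(Bucket=bucket, CORSConfiguration=cors_configuration(origins))


if __name__ == "__main__":
    print(cors_configuration(["https://spleeter.example.com"]))
```

The origin should be the address you use to reach Spleeter Web, i.e. what you set `APP_HOST` to, including the scheme.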

Spleeter Web can be deployed on a VPS or a cloud server such as Azure VMs, AWS EC2, DigitalOcean, etc. Deploying to cloud container services like ECS is not yet supported out of the box.

Clone this git repo:

```sh
$ git clone https://github.com/JeffreyCA/spleeter-web.git
$ cd spleeter-web
```

(Optional) If self-hosting, update `docker-compose.prod.selfhost.yml` and replace `./media` with the path where media files should be stored on the server.

In `spleeter-web`, create a `.env` file with the production environment variables.

Example `.env` file:

```
APP_HOST=<domain name(s) or public IP(s) of server>
DEFAULT_FILE_STORAGE=<FILE or AWS or AZURE>    # Optional (default = FILE)
CELERY_FAST_QUEUE_CONCURRENCY=<concurrency count>    # Optional (default = 3)
CELERY_SLOW_QUEUE_CONCURRENCY=<concurrency count>    # Optional (default = 1)
YOUTUBE_API_KEY=<youtube api key>    # Optional
```

See Environment Variables for all the available variables. You can also set these directly in the `docker-compose.*.yml` files.
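Docker Compose reads this `.env` file itself. The simplified, hypothetical parser below shows how the optional entries fall back to their documented defaults. It handles only `KEY=VALUE` lines, full-line comments, and inline comments introduced by ` #`. It does not reproduce Docker Compose's full `.env` parsing rules.

```python
# Hypothetical sketch: parse a .env file like the example and apply the documented
# defaults for the optional entries. Not Docker Compose's actual parser.
from typing import Dict

DEFAULTS = {
    "DEFAULT_FILE_STORAGE": "FILE",
    "CELERY_FAST_QUEUE_CONCURRENCY": "3",
    "CELERY_SLOW_QUEUE_CONCURRENCY": "1",
}


def parse_env(text: str) -> Dict[str, str]:
    values = dict(DEFAULTS)  # start from the defaults, then override
    for raw in text.splitlines():
        line = raw.split(" #", 1)[0].strip()  # drop inline comments after " #"
        if not line or line.startswith("#") or "=" not in line:
            continue  # skip blank lines, full-line comments, and malformed lines
        key, value = line.split("=", 1)
        values[key.strip()] = value.strip()
    return values


example = """\
# Production settings
APP_HOST=spleeter.example.com
CELERY_SLOW_QUEUE_CONCURRENCY=2    # Optional (default = 1)
"""
print(parse_env(example))
```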

Build and start production containers

For GPU separation, replace `docker-compose.yml` and `docker-compose.build.yml` below with `docker-compose.gpu.yml` and `docker-compose.build.gpu.yml`, respectively.

If you are self-hosting media files:

```sh
# Use prebuilt images
spleeter-web$ sudo docker-compose -f docker-compose.yml -f docker-compose.prod.yml -f docker-compose.prod.selfhost.yml up -d
# Or build from source
spleeter-web$ sudo docker-compose -f docker-compose.yml -f docker-compose.build.yml -f docker-compose.prod.yml -f docker-compose.prod.selfhost.yml up --build -d
```

Otherwise if using a storage provider:

```sh
# Use prebuilt images
spleeter-web$ sudo docker-compose -f docker-compose.yml -f docker-compose.prod.yml up -d
# Or build from source
spleeter-web$ sudo docker-compose -f docker-compose.yml -f docker-compose.build.yml -f docker-compose.prod.yml up --build -d
```

Access Spleeter Web at whatever you set `APP_HOST` to. Note that it will be running on port 80, not 8000. You can change this by setting `NGINX_PORT` and `NGINX_PORT_SSL`.

Enabling HTTPS allows you to export Dynamic Mixes from your browser. To enable HTTPS, set `CERTBOT_DOMAIN` to your domain name and `CERTBOT_EMAIL` to your email address in `.env`, and include `-f docker-compose.https.yml` in your `docker-compose up` command.

Special thanks to my Sponsors:

And especially to all the researchers and devs behind all the source separation models:

And additional thanks to these wonderful projects:

Turntable icon made from Icon Fonts is licensed by CC BY 3.0.