A PyTorch implementation of DGCNN based on the AAAI 2018 paper *An End-to-End Deep Learning Architecture for Graph Classification*.

- PyTorch

  `conda install pytorch torchvision -c pytorch`

- PyTorchNet

  `pip install git+https://github.com/pytorch/tnt.git@master`

- PyTorch Geometric and its companion packages

  `pip install torch-scatter`

  `pip install torch-sparse`

  `pip install torch-cluster`

  `pip install torch-spline-conv` (optional)

  `pip install torch-geometric`
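A quick way to confirm the installation is to look up each package by its import name, which differs from the pip name (tnt installs as `torchnet`, `torch-scatter` as `torch_scatter`, and so on). The sketch below is a hypothetical helper, not part of this repository; it uses `importlib.util.find_spec`, so nothing is actually imported. `visdom` is included because the training command below starts a Visdom server.

```python
import importlib.util

# Import names of the packages installed above (pip names use hyphens).
REQUIRED = ["torch", "torchvision", "torchnet", "torch_scatter",
            "torch_sparse", "torch_cluster", "torch_geometric", "visdom"]
OPTIONAL = ["torch_spline_conv"]


def check_dependencies(names):
    """Hypothetical helper: map each import name to True if it can be found."""
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == "__main__":
    for name, found in check_dependencies(REQUIRED + OPTIONAL).items():
        tag = "optional" if name in OPTIONAL else "required"
        print(f"{name:20s} {tag:9s} {'ok' if found else 'MISSING'}")
```

A missing optional package (`torch_spline_conv`) can be ignored; a missing required one should be installed before running `train.py`.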

The datasets are taken from the graph kernel datasets.

The code downloads and extracts them into the `data` directory automatically. The `10fold_idx` files are taken from pytorch_DGCNN.
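The `10fold_idx` files define the train/test split for each of the 10 cross-validation folds. The sketch below reads one fold, assuming (this is not stated in this README) the pytorch_DGCNN layout of `train_idx-<fold>.txt` and `test_idx-<fold>.txt` with one graph index per whitespace-separated token. `load_fold` is a hypothetical helper, not this repository's loader.

```python
from pathlib import Path


def load_fold(idx_dir, fold):
    """Hypothetical helper: read one fold's train/test graph indices.

    Assumed file names: idx_dir/train_idx-<fold>.txt and
    idx_dir/test_idx-<fold>.txt, one integer index per line.
    """
    def read(name):
        text = (Path(idx_dir) / name).read_text()
        return [int(tok) for tok in text.split()]

    train = read(f"train_idx-{fold}.txt")
    test = read(f"test_idx-{fold}.txt")
    # A valid split never places the same graph in both sets.
    overlap = set(train) & set(test)
    if overlap:
        raise ValueError(f"fold {fold}: {len(overlap)} graph(s) in both train and test")
    return train, test
```

Checking for overlap up front is cheap and catches a mismatched or corrupted index file before it silently inflates test accuracy.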

`python -m visdom.server -logging_level WARNING & python train.py --data_type PTC_MR --num_epochs 200`

Optional arguments:

- `--data_type`: dataset type (default `'DD'`; choices: `'DD'`, `'PTC_MR'`, `'NCI1'`, `'PROTEINS'`, `'IMDB-BINARY'`, `'IMDB-MULTI'`, `'MUTAG'`, `'COLLAB'`)
- `--batch_size`: training batch size (default `50`)
- `--num_epochs`: number of training epochs (default `100`)
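The documented options map naturally onto `argparse`. The parser below is a sketch that reproduces only the names, defaults and choices listed above; `build_parser` is a hypothetical name, and the real `train.py` may define its arguments differently.

```python
import argparse

DATASETS = ["DD", "PTC_MR", "NCI1", "PROTEINS",
            "IMDB-BINARY", "IMDB-MULTI", "MUTAG", "COLLAB"]


def build_parser():
    """Hypothetical parser mirroring the documented command-line options."""
    parser = argparse.ArgumentParser(description="Train DGCNN")
    parser.add_argument("--data_type", default="DD", type=str, choices=DATASETS,
                        help="dataset type")
    parser.add_argument("--batch_size", default=50, type=int,
                        help="train batch size")
    parser.add_argument("--num_epochs", default=100, type=int,
                        help="train epochs number")
    return parser
```

For example, `build_parser().parse_args(["--data_type", "PTC_MR", "--num_epochs", "200"])` yields the same settings as the command above, with `batch_size` left at 50. Using `choices` makes a misspelled dataset name fail immediately instead of at download time.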

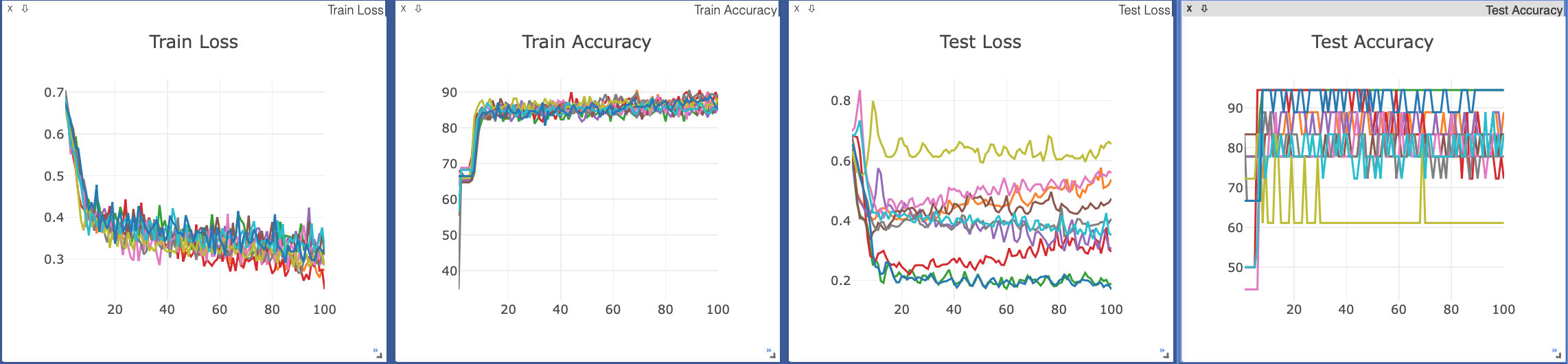

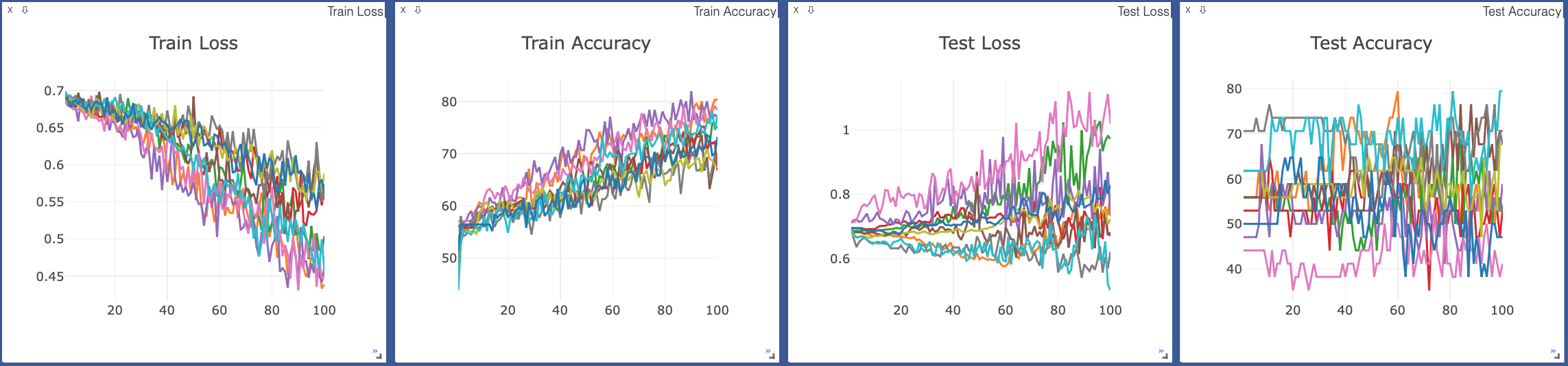

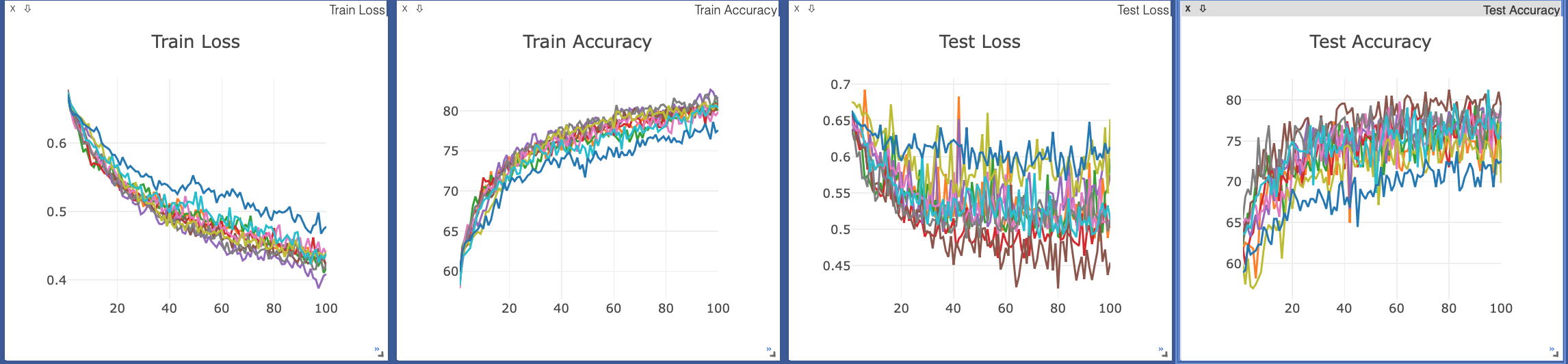

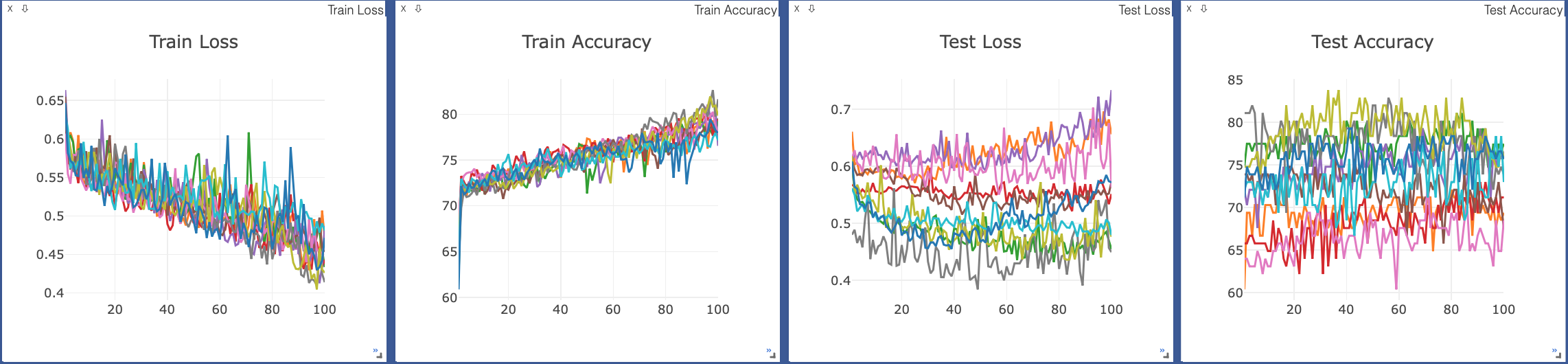

Visdom can then be accessed at `127.0.0.1:8097/env/$data_type` in your browser, where `$data_type` is the dataset you are training on.

The default PyTorch Adam optimizer hyper-parameters were used, without learning-rate scheduling. The model was trained for 100 epochs with a batch size of 50 on an NVIDIA GTX 1070 GPU.
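For reference, `torch.optim.Adam` defaults to `lr=1e-3`, `betas=(0.9, 0.999)`, `eps=1e-8` and `weight_decay=0`. The number of optimizer steps per run follows from the dataset size, the 10-fold split and the batch size. The sketch below estimates it under two assumptions not stated in this README: the test fold holds `ceil(N / 10)` graphs, and the last partial batch is kept rather than dropped. Both function names are hypothetical.

```python
import math


def steps_per_epoch(num_graphs, batch_size=50, num_folds=10):
    # Assumption: test fold = ceil(N / folds) graphs, the rest are for training,
    # and the final partial batch still counts as one step.
    test_size = math.ceil(num_graphs / num_folds)
    train_size = num_graphs - test_size
    return math.ceil(train_size / batch_size)


def total_steps(num_graphs, num_epochs=100, batch_size=50, num_folds=10):
    # One full training run per fold, num_epochs epochs each.
    return num_folds * num_epochs * steps_per_epoch(num_graphs, batch_size, num_folds)
```

For D&D (1,178 graphs) this gives 1,060 training graphs, 22 steps per epoch and 22,000 steps across all 10 folds. Actual fold sizes may differ by one graph, so treat these as estimates.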

There is one small difference between this code and the official paper: X is defined as the concatenation of vertex labels, vertex attributes and normalized node degrees.
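The node feature matrix X described above can be sketched in plain Python. The README does not say how labels are encoded or how degrees are normalized, so this sketch assumes one-hot vertex labels and degrees divided by the graph's maximum degree. `node_degrees` and `build_node_features` are hypothetical helpers, not this repository's code.

```python
def node_degrees(num_nodes, edges):
    """Degree of each node in an undirected graph given as (u, v) pairs."""
    deg = [0] * num_nodes
    for u, v in edges:
        deg[u] += 1
        deg[v] += 1
    return deg


def build_node_features(labels, attributes, degrees, num_labels):
    """Rows of X: [one-hot label | vertex attributes | normalized degree].

    Assumptions: labels are integer ids in [0, num_labels), and degrees
    are normalized by the graph's maximum degree (1 if the graph has no edges).
    """
    max_deg = max(degrees, default=0) or 1
    features = []
    for label, attrs, deg in zip(labels, attributes, degrees):
        one_hot = [0.0] * num_labels
        one_hot[label] = 1.0
        features.append(one_hot + [float(a) for a in attrs] + [deg / max_deg])
    return features
```

For a 3-node path 0-1-2 with labels `[0, 1, 0]`, one attribute per node `[[0.5], [1.0], [0.0]]` and two label types, each row has 2 + 1 + 1 = 4 columns. For example, the middle node becomes `[0.0, 1.0, 1.0, 1.0]`.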

| Dataset | MUTAG | PTC | NCI1 | PROTEINS | D&D | COLLAB | IMDB-B | IMDB-M |
|---|---|---|---|---|---|---|---|---|
| Num. of Graphs | 188 | 344 | 4,110 | 1,113 | 1,178 | 5,000 | 1,000 | 1,500 |
| Num. of Classes | 2 | 2 | 2 | 2 | 2 | 3 | 2 | 3 |
| Node Attr. (Dim.) | 8 | 19 | 38 | 5 | 90 | 1 | 1 | 1 |
| Num. of Parameters | 52,035 | 52,387 | 52,995 | 51,939 | 54,659 | 51,940 | 51,811 | 51,940 |
| DGCNN (official) | 85.83±1.66 | 58.59±2.47 | 74.44±0.47 | 75.54±0.94 | 79.37±0.94 | 73.76±0.49 | 70.03±0.86 | 47.83±0.85 |
| DGCNN (ours) | 81.67±9.64 | 59.12±11.27 | 75.72±3.13 | 72.88±3.38 | 68.80±5.37 | 70.52±2.00 | 71.50±4.48 | 46.47±5.22 |
| Training Time | 4.48s | 6.77s | 61.04s | 21.15s | 64.71s | 202.65s | 15.55s | 21.90s |
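The parameter counts in the table vary only with the node feature dimension and the number of classes, and they fit a simple linear rule. The check below derives the coefficients from two pairs of rows and then verifies every column. The rule is inferred from the table alone. Reading the 32 per input dimension as the output width of the first graph convolution, and the 129 per class as a 128-unit hidden layer plus bias feeding the output layer, is an assumption. `fit` is a hypothetical helper.

```python
# (dataset, node feature dim, num classes, reported parameter count) from the table
TABLE = [
    ("MUTAG", 8, 2, 52_035),
    ("PTC", 19, 2, 52_387),
    ("NCI1", 38, 2, 52_995),
    ("PROTEINS", 5, 2, 51_939),
    ("D&D", 90, 2, 54_659),
    ("COLLAB", 1, 3, 51_940),
    ("IMDB-B", 1, 2, 51_811),
    ("IMDB-M", 1, 3, 51_940),
]


def fit(table):
    """Solve params = base + per_dim * dim + per_class * classes from two row pairs."""
    rows = {name: (dim, cls, n) for name, dim, cls, n in table}
    d1, c1, n1 = rows["MUTAG"]
    d2, _, n2 = rows["PROTEINS"]   # same class count, different feature dim
    per_dim = (n1 - n2) // (d1 - d2)
    _, c3, n3 = rows["IMDB-M"]
    _, c4, n4 = rows["IMDB-B"]     # same feature dim, different class count
    per_class = (n3 - n4) // (c3 - c4)
    base = n1 - per_dim * d1 - per_class * c1
    return per_dim, per_class, base


per_dim, per_class, base = fit(TABLE)
for name, dim, cls, reported in TABLE:
    predicted = base + per_dim * dim + per_class * cls
    print(f"{name:9s} reported={reported:,} predicted={predicted:,}")
```

All eight columns match `51,521 + 32 * dim + 129 * classes`, which suggests that the rest of the network has the same size for every dataset.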

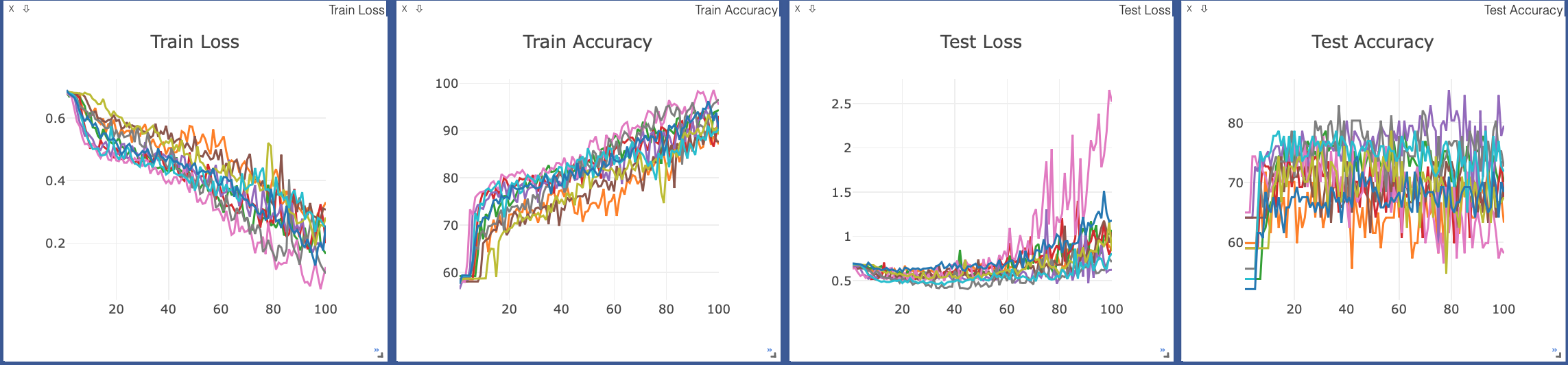

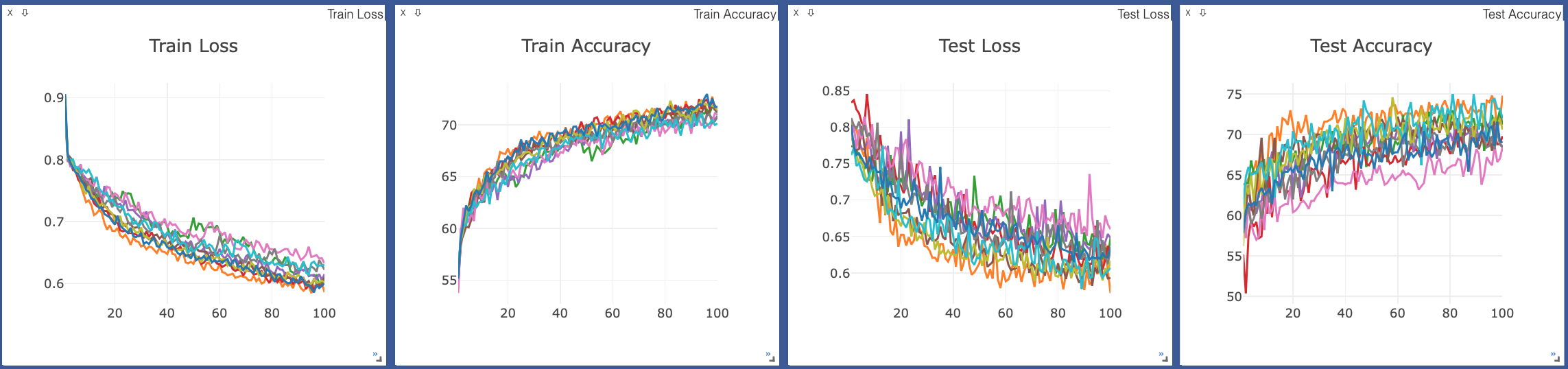

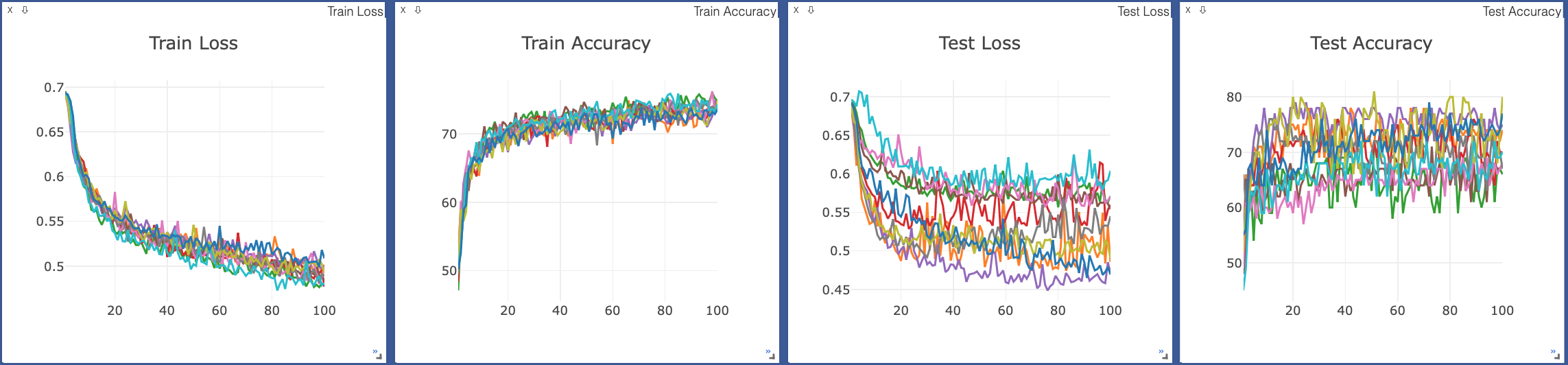

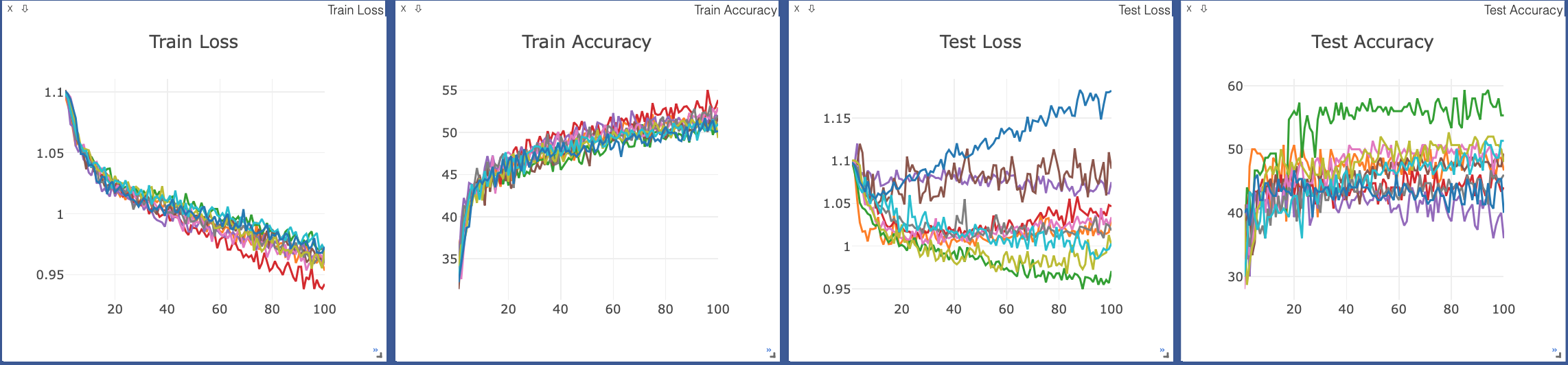

The training loss and accuracy, and the test loss and accuracy, are shown in Visdom.