This repository contains an implementation of GPT-2 built from scratch. It also runs a backend service with a single endpoint that accepts a prefix sentence from the user and returns a completed sentence generated by the trained GPT-2 model.

Requirements:

- Python 3.10 or higher
- PyTorch
- Other dependencies as listed in `requirements.txt`

- Clone the repository:

  ```bash
  git clone https://github.com/your-username/your-repo-name.git
  cd your-repo-name
  ```

- Install the dependencies:

  ```bash
  pip install -r requirements.txt
  ```

- Run the project:

  - To train a GPT-2 model:

    ```bash
    python main.py
    ```

  - To start the backend server on your local machine:

    ```bash
    python backend_server.py
    ```

You can also run the project in a Docker container:

- Pull the Docker base image:

  ```bash
  docker pull robd003/python3.10:latest
  ```

- Build the Docker image:

  ```bash
  sudo docker build -t gpt-2 -f Dockerfile .
  ```

- Run a Docker container:

  - For training:

    ```bash
    sudo docker run -p 5001:5001 -it --name gpt-2 -v $(pwd)/log:/app/log gpt-2 training /bin/bash
    ```

  - For the service:

    ```bash
    sudo docker run -p 5001:5001 -it --name gpt-2 -v $(pwd)/log:/app/log gpt-2 service /bin/bash
    ```

Each command creates a container named `gpt-2` from the `gpt-2` image you built, maps the container's port 5001 to port 5001 on your local host, mounts your local `log` directory to the container's `/app/log` directory, and opens a bash shell in the container. Because both commands use the same container name, remove an existing container with `sudo docker rm gpt-2` before starting the other one.

The model is trained on the Tiny Shakespeare dataset, which is stored in `input.txt`.
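Like other GPT-style language models, GPT-2 is trained to predict the next token, so each training target is its input shifted by one position. The sketch below shows that pairing on a flat list of token IDs. It assumes the text of `input.txt` has already been encoded into integers by a tokenizer; the function name `next_token_batches` and its non-overlapping chunking are illustrative and not necessarily how this repository's data loader works.

```python
from typing import Iterator, List, Tuple


def next_token_batches(
    tokens: List[int], batch_size: int, block_size: int
) -> Iterator[Tuple[List[List[int]], List[List[int]]]]:
    """Yield (inputs, targets) batches; each target row is its input row shifted left by one token.

    Hypothetical helper for illustration; not taken from this repository.
    """
    chunk = batch_size * block_size
    # Walk the token stream in non-overlapping chunks. Each chunk needs one
    # extra token so that the last input position still has a target.
    for start in range(0, len(tokens) - chunk, chunk):
        buf = tokens[start : start + chunk + 1]
        inputs = [buf[i * block_size : (i + 1) * block_size] for i in range(batch_size)]
        targets = [buf[i * block_size + 1 : (i + 1) * block_size + 1] for i in range(batch_size)]
        yield inputs, targets
```

In a PyTorch training loop, each `inputs`/`targets` pair would typically be converted to tensors, fed to the model, and scored with cross-entropy between the predicted logits and `targets`.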

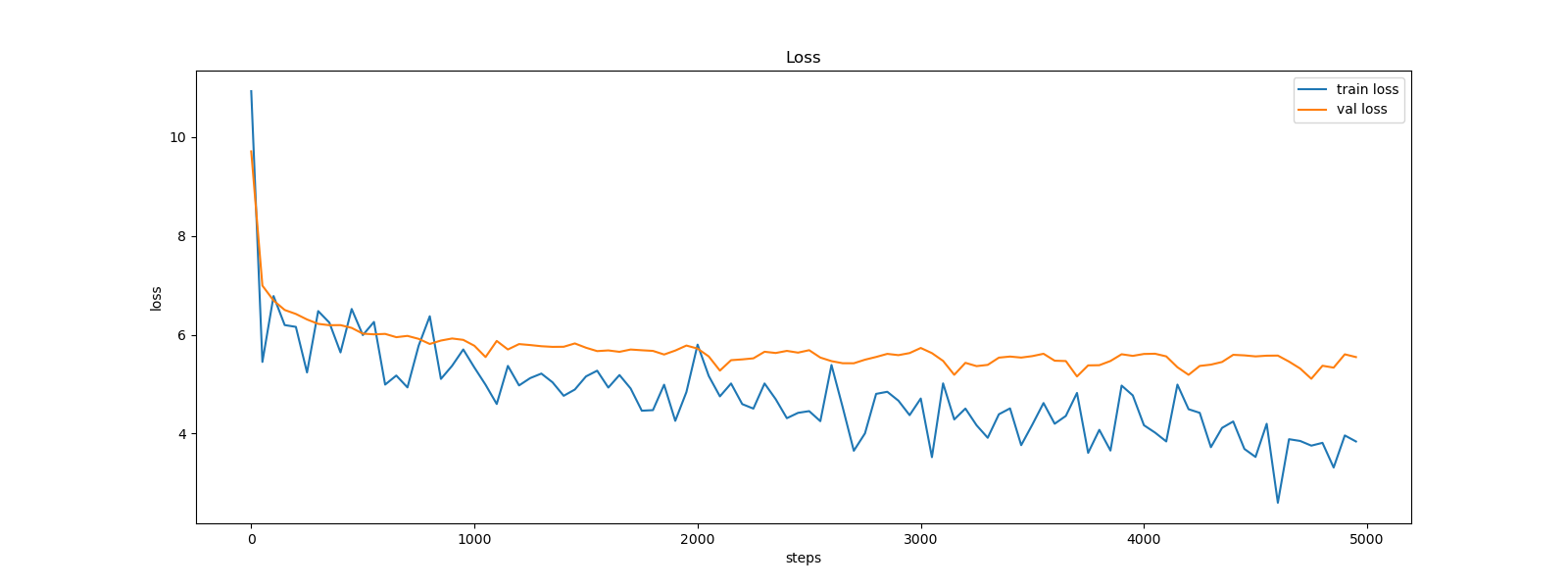

Training logs can be found in the log.txt file under the log folder. The following figure shows the training and validation loss changes with the number of steps:

Test loss: 5.865208148956299
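To re-plot these curves from your own run, the log can be parsed into one series per split. This is a sketch under an assumed log format: each line is taken to look like `<step> <split> <loss>` (a hypothetical example would be `250 val 6.2`). Check `log/log.txt` and adjust the parsing if your lines differ; the function name `parse_loss_log` is also hypothetical.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def parse_loss_log(lines: List[str]) -> Dict[str, List[Tuple[int, float]]]:
    """Group (step, loss) pairs by split name.

    Assumes each relevant line has the form '<step> <split> <loss>';
    this format is an assumption, not confirmed from the repository.
    """
    series: Dict[str, List[Tuple[int, float]]] = defaultdict(list)
    for line in lines:
        parts = line.split()
        if len(parts) != 3:
            continue  # skip blank or unrecognized lines
        step, split, loss = parts
        try:
            point = (int(step), float(loss))
        except ValueError:
            continue  # skip lines whose step or loss is not numeric
        series[split].append(point)
    return dict(series)
```

For example, `parse_loss_log(open("log/log.txt").read().splitlines())` returns a dictionary such as `{"train": [...], "val": [...]}`, whose step and loss lists can be passed to a plotting library like matplotlib.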

The backend service exposes an HTTP API that listens on port 5001 of your localhost. It provides a single endpoint, `/complete-sentence`, that generates text completions using the trained GPT-2 model.
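The endpoint can also be called from Python. This is a minimal sketch using only the standard library. It assumes the JSON request body `{"text": ...}` and the `completed_sentence` response field used in the curl example that follows; the helper names `build_request`, `parse_response`, and `complete_sentence` are hypothetical.

```python
import json
import urllib.request

# Endpoint from this README; adjust host or port if the server runs elsewhere.
URL = "http://127.0.0.1:5001/complete-sentence"


def build_request(prefix: str, url: str = URL) -> urllib.request.Request:
    """Build a POST request whose JSON body is {"text": prefix}."""
    body = json.dumps({"text": prefix}).encode("utf-8")
    return urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}, method="POST"
    )


def parse_response(raw: bytes) -> str:
    """Extract the generated text from a {"completed_sentence": ...} JSON response."""
    return json.loads(raw.decode("utf-8"))["completed_sentence"]


def complete_sentence(prefix: str, url: str = URL, timeout: float = 60.0) -> str:
    """Send the prefix to the running service and return the completed sentence."""
    with urllib.request.urlopen(build_request(prefix, url), timeout=timeout) as resp:
        return parse_response(resp.read())
```

With the server running, `complete_sentence("The quick brown fox")` sends the same request as the curl command and returns the generated text. The 60-second timeout is an arbitrary, generous choice because generation can be slow on a CPU.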

To interact with the service, you can use the following curl command to send a POST request:

```bash
curl -X POST "http://127.0.0.1:5001/complete-sentence" \
  -H "Content-Type: application/json" \
  -d '{"text": "The quick brown fox"}'
```

In this request, `"text"` is the prefix, and the service generates a complete sentence from it using the trained GPT-2 model. An example response might look like this:

```json
{
  "completed_sentence": "The quick brown foxil!\nIn that hath this one little thousand time,\nI call'd new rest of much need a word,\nTo take that you love it made great two world will be love for a enemy.\nAnd to the"
}
```

The following are sample outputs generated by the model for the input "The course of true love never did run smooth." with a maximum of 50 tokens:

```text
The course of true love never did run smooth.
So love I should come to speak, your lord!
KING RICHARD II: Nay, my name and love me with me crown, I come, or heart, and

The course of true love never did run smooth.
KING RICHARD II: What that my sovereign-desets, The time shall fly on him that be your love the state upon yourlook'er tears I love thou

The course of true love never did run smooth.
BENVOLIO: My Lord of England's heart and thine eyes: So far I Lord'd good hands so graces onbrokely so love'er, I

The course of true love never did run smooth.
RICHARD: He would, he's a king, which did come; The king was a men I say that heart will be with youeech' woe, I

The course of true love never did run smooth.
KING RICHARD II: What say I see the king?
RATCLIFF: Your news, my lord: HowEN: go should lie: My
```
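Samples like these come from autoregressive decoding: the model scores every vocabulary token as the possible next token, one token is sampled and appended, and the extended sequence is fed back in until the token budget is used up. The sketch below assumes top-k sampling with k = 50, a common choice for GPT-2 that is not confirmed for this repository. `next_token_logits` is a hypothetical stand-in for a forward pass of the trained model that returns one score per vocabulary token for the last position, and the 50-token budget is assumed here to count only newly generated tokens.

```python
import math
import random
from typing import Callable, List, Optional


def sample_tokens(
    next_token_logits: Callable[[List[int]], List[float]],
    prompt: List[int],
    max_new_tokens: int = 50,
    top_k: int = 50,
    rng: Optional[random.Random] = None,
) -> List[int]:
    """Extend `prompt` by `max_new_tokens` tokens using top-k sampling.

    `next_token_logits` is a hypothetical model wrapper, not this repo's API.
    """
    rng = rng or random.Random()
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        logits = next_token_logits(tokens)
        # Keep only the k highest-scoring token IDs.
        top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:top_k]
        # Softmax over the kept logits; subtracting the max avoids overflow.
        m = max(logits[i] for i in top)
        weights = [math.exp(logits[i] - m) for i in top]
        # Draw one token in proportion to its probability and feed it back in.
        tokens.append(rng.choices(top, weights=weights, k=1)[0])
    return tokens
```

Because each step samples rather than always taking the most likely token, the same prompt produces different continuations, as in the samples above. With a PyTorch model returning logits of shape `(batch, time, vocab)`, `next_token_logits` could run the model on `torch.tensor([tokens])` and return the last position's logits as a list; the prompt and output would be encoded and decoded with the same tokenizer used for training.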

This project is inspired by Andrej Karpathy's tutorial. You can watch the detailed explanation in his YouTube video.