MVM3Det: A Novel Framework for Multi-view Monocular 3D Object Detection [arXiv]

We propose a novel multi-view framework for occlusion-free 3D detection, together with the MVM3D dataset for multi-view detection in occlusion scenarios.

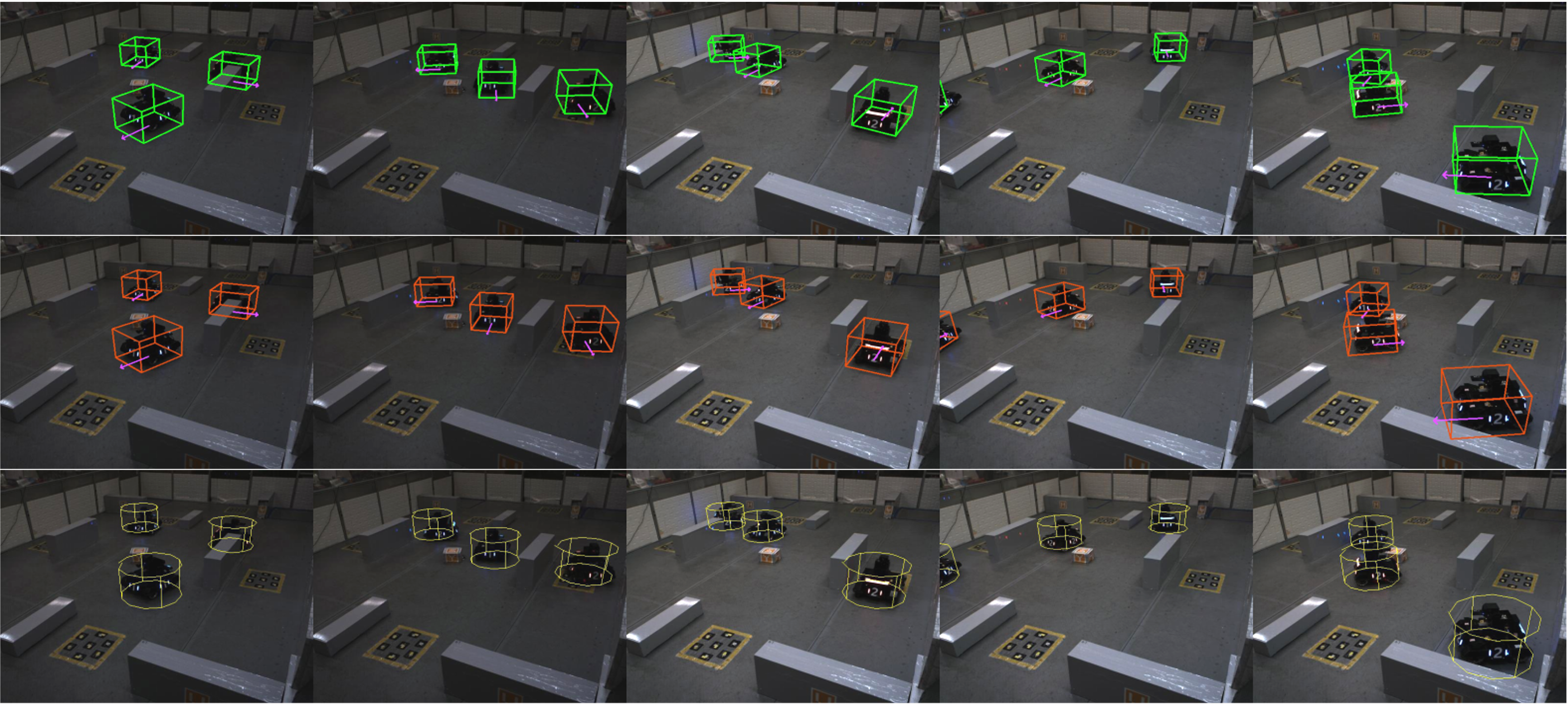

Row 1 shows our results, row 2 shows the ground truth, and row 3 shows the MVDet results.

- MVM3Det code
  - Code Preparations
  - Training (In progress...)
  - Inference
  - Credit
- MVM3D dataset
  - Label Information
  - Downloads
  - Toolkits (In progress...)
  - Evaluation Metrics

- Clone this repository into your local folder.

- Prepare the MVM3D dataset; see Downloads for detailed instructions.

- Open `/code/EX_CONST.py` and set the variable `data_root` to your dataset path: `data_root = '/your_dataset_path/MVM3D'`.
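Before going further, it can help to confirm that the configured path actually resolves. The snippet below is a minimal sketch and not part of the repository: `check_data_root` is a hypothetical helper, and it only checks that the directory exists, since the internal layout of MVM3D is not listed here.

```python
from pathlib import Path


def check_data_root(data_root: str) -> Path:
    """Return the dataset path, or raise if the directory is missing.

    Hypothetical helper for illustration; not part of the MVM3Det code.
    """
    root = Path(data_root).expanduser()
    if not root.is_dir():
        raise FileNotFoundError(f"MVM3D dataset folder not found: {root}")
    return root


if __name__ == "__main__":
    # Same placeholder path as in EX_CONST.py; replace it with your own.
    data_root = "/your_dataset_path/MVM3D"
    try:
        print("Dataset found at", check_data_root(data_root))
    except FileNotFoundError as err:
        print(err)
```

Running the check once after editing `data_root` catches a mistyped path before training or inference starts.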

Training code is in progress.

Please make sure you have finished Code Preparations.

The best MVM3Det model for the MVM3D dataset can be downloaded from BaiduNetDisk (pwd: 09t1). Download the `ppn.pth` and `mbon.pth` models into the folder `/codes/pretrained_models`.

cd codes

python infer.py

The inference program should automatically return results similar to the 95.9% MODA, 49.0% AP (IoU = 0.5), and 45.5% AOS (IoU = 0.5) reported in the paper.

Our code mainly builds on three repos: MVDet, simple-faster-rcnn-pytorch, and 3D-BoundingBox. Some of the original code still exists in our implementation.

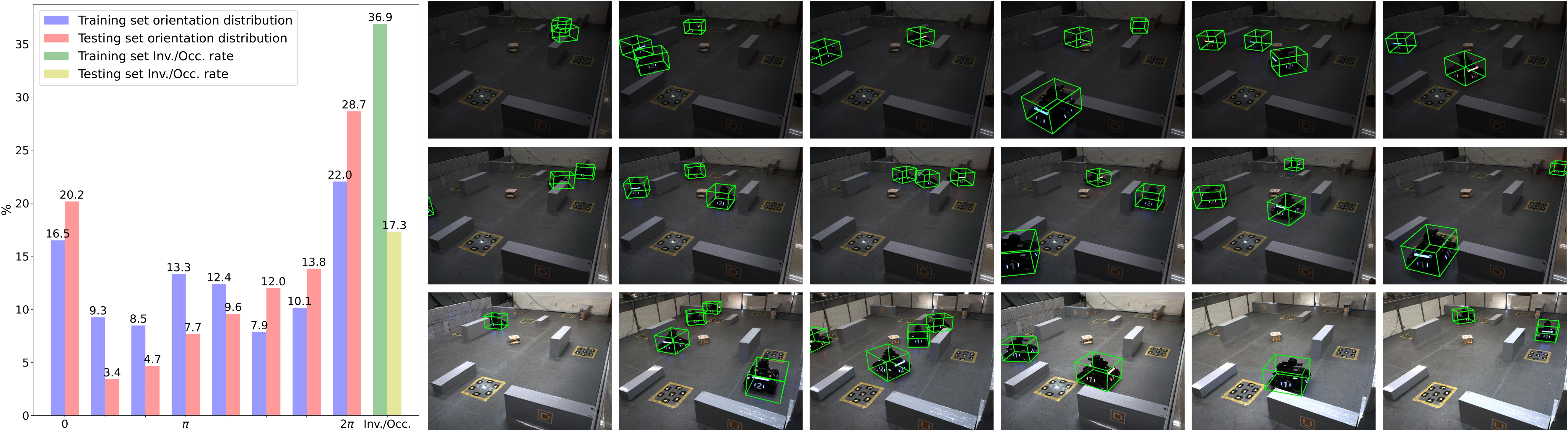

The MVM3D dataset is designed for multiview 3D detection in occlusion scenarios. Monocular 3D detection datasets and multiview monocular detection datasets have flourished in recent years. However, few algorithms and datasets focus on 3D detection under occlusion. Inspired by WildTrack, MultiviewX, and the RoboMaster University AI Challenge, we developed a multiview monocular sentry detection dataset, the MVM3D dataset.

The dataset is based on the IEEE ICRA 2021 RoboMaster AI Challenge, with battle robot cars as detection targets and block obstacles as occluders. The battleground is a flat 4.49 m by 8 m ground plane containing 9 fixed blocks as obstacles. The images are captured by 2 synchronized cameras at a resolution of 640 by 480, with exposure times varying from 15000 to 30000 microseconds. The frame rate is 10 frames per second, and each frame contains 1 to 4 robot cars as targets.

In progress...

- Images from left and right cameras.

- Ground truth labels for:

  - Robot car world coordinates, denoted as [x, y].

  - Robot car orientations, in radians.

  - Robot car classification labels, denoted as [0, 1, 2, 3].

  - 2D/3D/BEV bounding boxes.

  - Robot car 3D measurements.

- Camera calibration matrices, including intrinsics and extrinsics.
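To illustrate how the world coordinates and the calibration relate, the sketch below projects a ground-plane point [x, y] into an image with a standard pinhole model. It rests on several assumptions that the label description does not confirm: the ground plane is z = 0, the intrinsics are a 3x3 matrix `K`, and the extrinsics are a rotation `R` and translation `t` mapping world to camera coordinates. The function name and the calibration values are made up for illustration and are not the MVM3D values.

```python
from typing import Sequence, Tuple

Matrix = Sequence[Sequence[float]]


def _matvec(m: Matrix, v: Sequence[float]) -> list:
    """Multiply a 3x3 matrix by a 3-vector."""
    return [sum(m[i][j] * v[j] for j in range(3)) for i in range(3)]


def project_ground_point(
    xy: Tuple[float, float],
    K: Matrix,
    R: Matrix,
    t: Sequence[float],
) -> Tuple[float, float]:
    """Project a ground-plane point [x, y] (assumed z = 0) to pixel (u, v)."""
    world = [xy[0], xy[1], 0.0]
    # World frame -> camera frame: X_cam = R * X_world + t
    cam = [c + ti for c, ti in zip(_matvec(R, world), t)]
    if cam[2] <= 0:
        raise ValueError("point is behind the camera")
    # Camera frame -> homogeneous pixel coordinates, then normalize.
    u, v, w = _matvec(K, cam)
    return u / w, v / w


# Made-up calibration for illustration only (not the MVM3D values).
K = [[500.0, 0.0, 320.0], [0.0, 500.0, 240.0], [0.0, 0.0, 1.0]]
R = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
t = [0.0, 0.0, 5.0]

print(project_ground_point((1.0, 0.5), K, R, t))  # (420.0, 290.0)
```

With the real calibration files, the same projection can be used to check that a labeled robot position lands inside the 640 by 480 image of each camera.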

Part of the data can be downloaded from Baidu Netdisk (Pwd: 0wp5) and OneDrive.

Extract the downloaded zip file called MVM3D.zip into /your_dataset_path/.

The dataset folder structure should be like this:

your_dataset_path

└─ MVM3D

Note that this is NOT the final version of the dataset; more images and annotations are in progress.

Some sample images and annotations are provided in the sample_datasets folder. To inspect them, run the following commands:

cd sample_dataset

python visualize.py

The program is supposed to visualize the ground-truth per-view 2D/3D boxes and the bird's-eye-view robot locations and orientations.

For localization performance, we use the same evaluation metrics as the MultiviewX and WildTrack multiview pedestrian detection datasets: MODA, MODP, Precision, and Recall. The evaluation toolkit is available here.
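As a rough illustration of how three of these metrics relate, the sketch below computes MODA, Precision, and Recall from counts of true positives, false positives, and misses, using the standard definition MODA = 1 - (misses + false positives) / ground truths. It is not the referenced toolkit: the function name is hypothetical, and the counts are assumed to come from whatever detection-to-ground-truth matching the toolkit performs. MODP is omitted because it needs the per-match localization error rather than counts.

```python
def detection_scores(tp: int, fp: int, fn: int) -> dict:
    """Compute MODA, precision and recall from matched detection counts.

    tp: detections matched to a ground-truth target
    fp: detections with no matching target (false positives)
    fn: ground-truth targets with no matching detection (misses)
    """
    num_gt = tp + fn
    if num_gt == 0:
        raise ValueError("no ground-truth targets")
    moda = 1.0 - (fn + fp) / num_gt
    precision = tp / (tp + fp) if (tp + fp) > 0 else 0.0
    recall = tp / num_gt
    return {"moda": moda, "precision": precision, "recall": recall}


# Illustrative counts only, not results from the paper.
scores = detection_scores(tp=90, fp=5, fn=10)
print({name: round(value, 3) for name, value in scores.items()})
```

Because false positives are subtracted directly, MODA can drop below recall and can even become negative when a detector produces many spurious boxes.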

For 3D detection metrics, we use AP, AOS, and OS as introduced in the KITTI benchmark. The evaluation toolkit is available here.
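The orientation term behind AOS can be sketched in a few lines. In the KITTI definition, each matched detection contributes (1 + cos Δθ) / 2, where Δθ is the difference between the predicted and ground-truth orientation in radians. The function below averages that term over already-matched pairs. It is a simplified sketch with a hypothetical name: it assumes every detection is matched, and it does not perform the averaging over recall levels that the full AOS score uses.

```python
import math
from typing import Sequence


def orientation_similarity(
    pred_angles: Sequence[float], gt_angles: Sequence[float]
) -> float:
    """Mean of (1 + cos(delta)) / 2 over matched prediction/ground-truth pairs.

    Angles are in radians. Returns 1.0 for perfect agreement and 0.0 when
    every prediction points in the opposite direction.
    """
    if len(pred_angles) != len(gt_angles):
        raise ValueError("angle lists must have the same length")
    if not pred_angles:
        return 0.0
    total = sum(
        (1.0 + math.cos(p - g)) / 2.0 for p, g in zip(pred_angles, gt_angles)
    )
    return total / len(pred_angles)


# Illustrative angles only: one exact match and one 90-degree error.
print(round(orientation_similarity([0.0, math.pi / 2], [0.0, 0.0]), 3))  # 0.75
```

Since the cosine is periodic, the term does not need the angle difference to be wrapped into a fixed range first.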