[News]: We just fixed the ontology problem; the code should now run.

Code for the EMNLP 2018 paper "XL-NBT: A Cross-lingual Neural Belief Tracking Framework".

In this paper, we propose the cross-lingual state tracking problem and design a simple yet efficient algorithm to tackle it. This repository contains all the experiment code for both the En->De and En->It transfer scenarios under two parallel-resource settings: a parallel corpus (XL-NBT-C) and a bilingual dictionary (XL-NBT-D).

For more details, please check the latest version of the paper: https://128.84.21.199/abs/1808.06244.

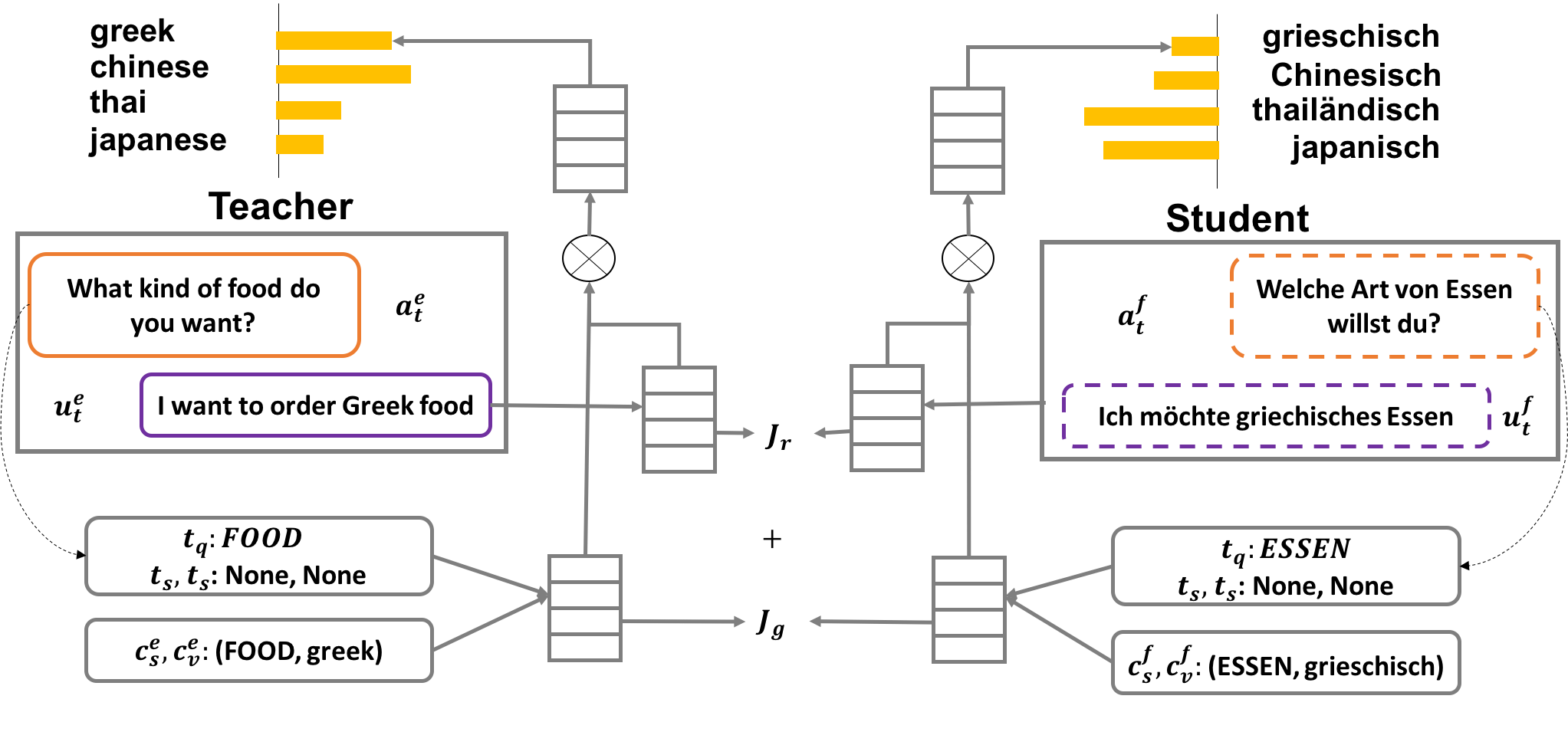

The general architecture of the model is displayed as below:

We decompose the transfer into two components: language-understanding-level transfer and ontology transfer.

The code requires:

- Python 2.7
- TensorFlow 1.4
- CUDA and cuDNN

The repository is organized as follows:

- code/ contains all the Python modules that implement the algorithm
- config/ contains the parameter settings
- data/ contains the dialog data for the different languages, as well as the parallel translation data for XL-NBT-C
- word-vectors/ contains the pre-trained multilingual word embeddings and two bilingual dictionaries for XL-NBT-D
- models/ stores the models saved during training
- train.sh launches the supervised (teacher) training and the transfer-learning runs

An example configuration file, set up here for dictionary-based transfer:

    [train]
    batch_size=256
    batches_per_epoch=64
    max_iteration=100000
    restore=True
    alpha=1.0

    [model]
    id=student-dict-bilingual
    restore_id=teacher-bilingual
    dataset_name=woz
    language=english
    foreign_language=german
    value_specific_decoder=False
    learn_belief_state_update=True
    tau=0.1
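Each training step in this README edits `id`, `restore` and `restore_id` before calling `train.sh`. As a rough sketch (not the repository's own loader, which lives in code/), the file can be read and edited with Python 3's `configparser`; under Python 2.7 the module is named `ConfigParser`. The sample is read from a string because the exact file name under config/ is not given here, and `SAMPLE`, `MODE_OVERRIDES`, `load`, `apply_mode` and `typed_settings` are hypothetical names.

```python
import configparser

# Hypothetical sketch: mirrors the example config above; the repository's own
# loader in code/ may parse and type these values differently.
SAMPLE = """
[train]
batch_size=256
batches_per_epoch=64
max_iteration=100000
restore=True
alpha=1.0

[model]
id=student-dict-bilingual
restore_id=teacher-bilingual
dataset_name=woz
language=english
foreign_language=german
value_specific_decoder=False
learn_belief_state_update=True
tau=0.1
"""

# (section, key, value) edits this README asks for before each train.sh mode.
MODE_OVERRIDES = {
    "train": [("model", "id", "teacher-bilingual"),
              ("train", "restore", "False")],
    "corpus_transfer": [("model", "id", "student-corpus-bilingual"),
                        ("model", "restore_id", "teacher-bilingual"),
                        ("train", "restore", "True")],
    "dict_transfer": [("model", "id", "student-dict-bilingual"),
                      ("model", "restore_id", "teacher-bilingual"),
                      ("train", "restore", "True")],
}


def load(text):
    """Parse INI text into a ConfigParser."""
    cfg = configparser.ConfigParser()
    cfg.read_string(text)
    return cfg


def apply_mode(cfg, mode):
    """Apply the overrides for one train.sh mode (ConfigParser needs string values)."""
    for section, key, value in MODE_OVERRIDES[mode]:
        cfg.set(section, key, value)
    return cfg


def typed_settings(cfg):
    """Read a subset of the keys with the types they appear to have."""
    return {
        "batch_size": cfg.getint("train", "batch_size"),
        "max_iteration": cfg.getint("train", "max_iteration"),
        "restore": cfg.getboolean("train", "restore"),
        "alpha": cfg.getfloat("train", "alpha"),
        "id": cfg.get("model", "id"),
        "restore_id": cfg.get("model", "restore_id"),
        "tau": cfg.getfloat("model", "tau"),
    }


settings = typed_settings(apply_mode(load(SAMPLE), "train"))
print(settings["id"], settings["restore"])  # prints: teacher-bilingual False
```

Keeping the per-mode edits in one table makes it harder to forget, for example, to flip `restore` back to True after training the teacher.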

Clone this GitHub repository recursively:

    git clone https://github.com/wenhuchen/Cross-Lingual-NBT.git ./

Download the bilingual embeddings and put them into the word-vectors/ folder.

We train the teacher model with cross-entropy loss. Set "id=teacher-bilingual" and "restore=False" in the config file, then run:

    ./train.sh train
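As a generic illustration of the teacher's objective, assuming the tracker scores a set of candidate values for each slot and exactly one of them is the gold value, a softmax cross-entropy can be written as below. The actual TensorFlow implementation in code/ may use a different output layer (for example, independent binary decisions per value); `cross_entropy` and `batch_loss` are hypothetical helpers.

```python
import math


def cross_entropy(logits, gold_index):
    """-log softmax(logits)[gold_index], computed stably via log-sum-exp."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_z - logits[gold_index]


def batch_loss(batch):
    """Mean cross-entropy over (logits, gold_index) pairs, e.g. one per slot."""
    return sum(cross_entropy(logits, gold) for logits, gold in batch) / len(batch)


# Toy example: scores for three hypothetical candidate values of one slot.
print(cross_entropy([2.0, 0.5, -1.0], gold_index=0))
```

Subtracting the maximum logit before exponentiating avoids overflow without changing the result.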

We have already uploaded the pre-trained teacher models to the models/ folder, so you can skip this step and go directly to corpus-based (XL-NBT-C) or dictionary-based (XL-NBT-D) transfer, depending on the transfer scenario.

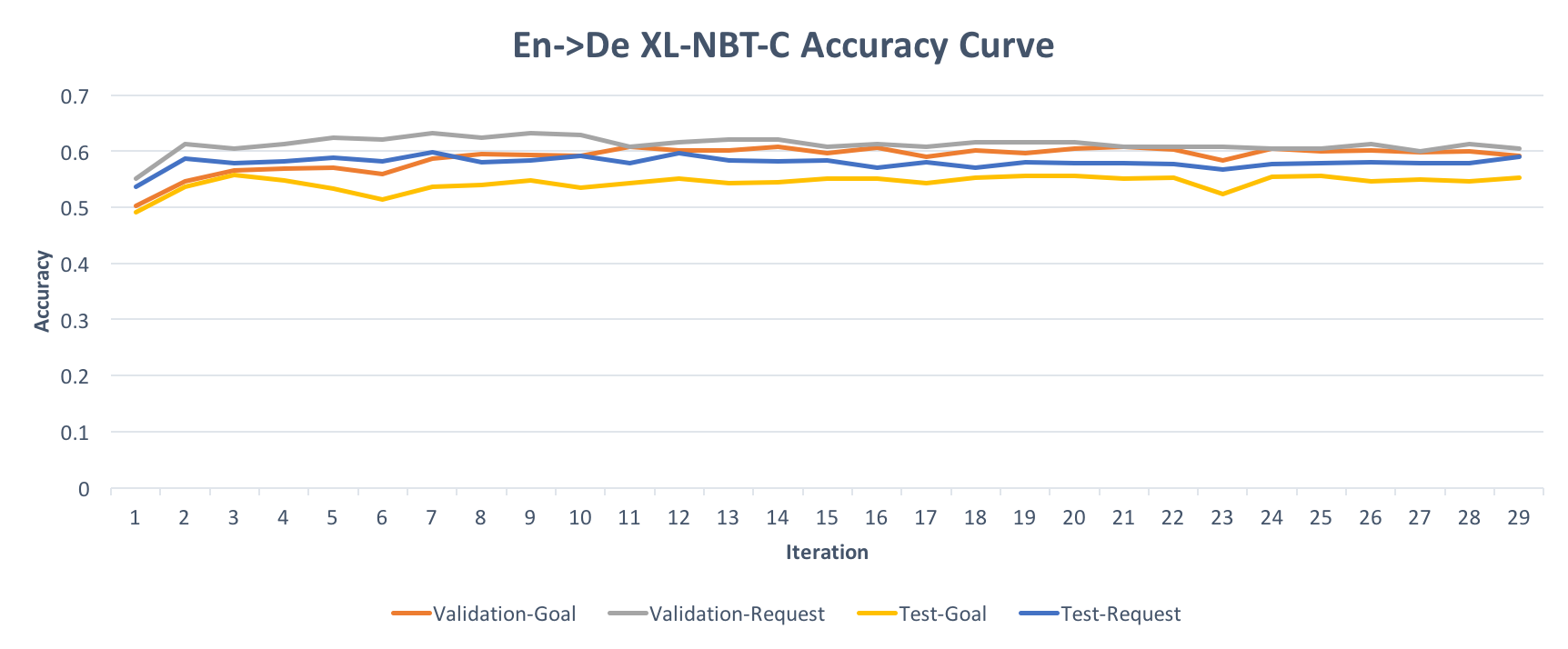

For corpus-based transfer (XL-NBT-C), we use a teacher-student framework to transfer knowledge across the language boundary. Set "restore_id=teacher-bilingual", "restore=True" and "id=student-corpus-bilingual" in the config file, then run:

    ./train.sh corpus_transfer
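A minimal sketch of the teacher-student idea, assuming a generic knowledge-distillation loss: the frozen teacher sees the English side of a parallel pair, the student sees the foreign-language side, and the student is pushed to match the teacher's distribution over slot values via a KL divergence. The objective that corpus_transfer actually optimizes (and the roles of alpha and tau in the config) is defined in the paper and in code/ and may differ; all function names here are hypothetical.

```python
import math


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two distributions over the same candidate values."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q) if pi > 0)


def distillation_loss(teacher_logits, student_logits):
    """Hypothetical per-pair loss.

    teacher_logits: fixed teacher scores on the English utterance.
    student_logits: student scores on its foreign-language translation.
    In real training only the student would receive gradients.
    """
    return kl_divergence(softmax(teacher_logits), softmax(student_logits))


def corpus_loss(pairs):
    """Mean distillation loss over (teacher_logits, student_logits) pairs."""
    return sum(distillation_loss(t, s) for t, s in pairs) / len(pairs)


print(corpus_loss([([2.0, 0.1, -1.0], [1.5, 0.3, -0.8])]))
```

The loss is zero when the student reproduces the teacher's distribution exactly, so no foreign-language labels are needed, only parallel sentences.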

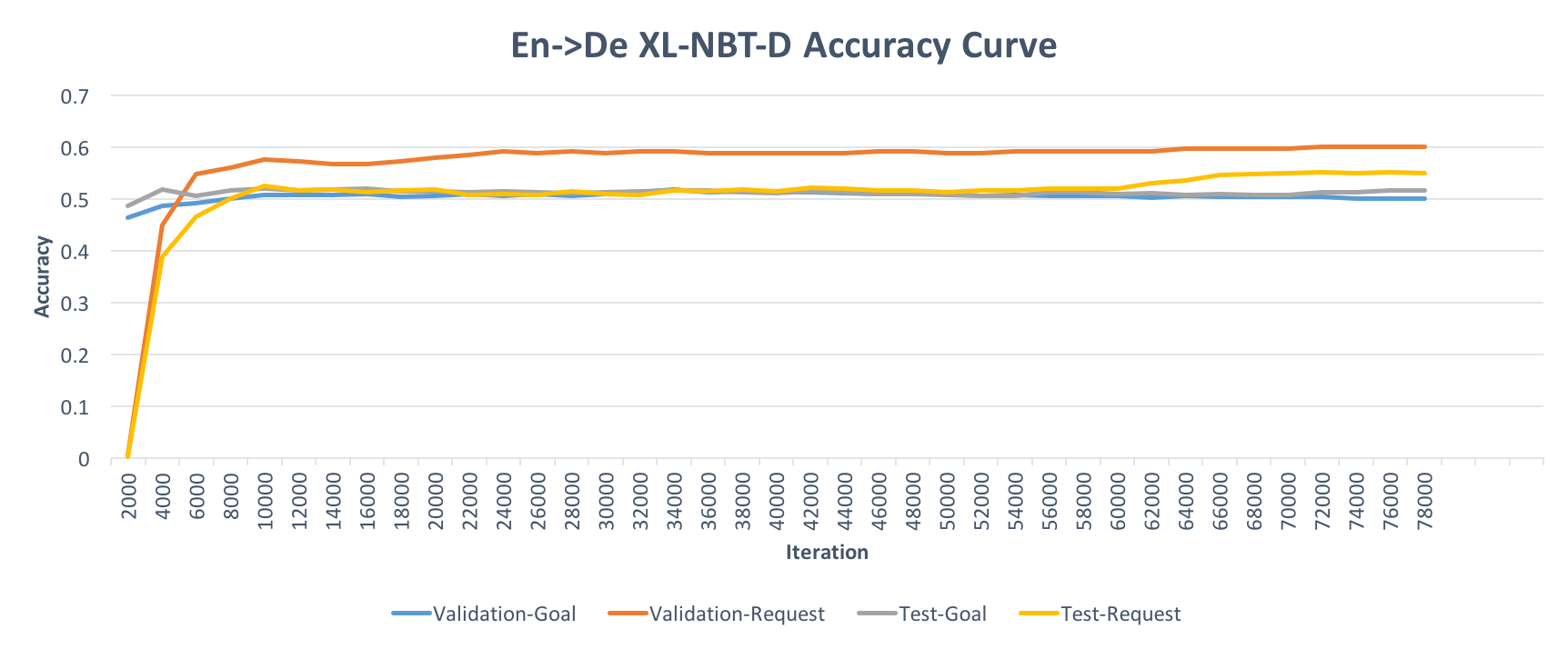

For dictionary-based transfer (XL-NBT-D), we also use the teacher-student framework to transfer knowledge across the language boundary. Set "restore_id=teacher-bilingual", "restore=True" and "id=student-dict-bilingual" in the config file, then run:

    ./train.sh dict_transfer
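As a purely hypothetical illustration of how a bilingual dictionary can stand in for a parallel corpus, word-by-word substitution turns an English utterance into a noisy foreign-language version, giving a pseudo-parallel pair in which the teacher reads the English side and the student reads the substituted side. The dictionary file format (one "source target" pair per line), the toy entries and the function names are assumptions, and dict_transfer may use the dictionary differently.

```python
def load_dictionary(lines):
    """Parse hypothetical 'source target' lines; the first translation wins."""
    mapping = {}
    for line in lines:
        parts = line.split()
        if len(parts) >= 2 and parts[0] not in mapping:
            mapping[parts[0]] = parts[1]
    return mapping


def word_by_word(utterance, mapping):
    """Translate token by token; tokens missing from the dictionary are kept."""
    return " ".join(mapping.get(tok, tok) for tok in utterance.lower().split())


# Toy English->German entries, made up for illustration.
toy = load_dictionary(["i ich", "want will", "a ein", "cheap billiges", "cheap preiswertes"])
teacher_input = "I want a cheap restaurant"
student_input = word_by_word(teacher_input, toy)
print(student_input)  # ich will ein billiges restaurant
```

Such substitutions ignore word order and inflection, so the resulting pairs are noisy; the teacher-student setup only needs them to carry roughly the same slot information.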

If you find this paper useful, please cite it with the following BibTeX:

    @inproceedings{chen2018xl,
      title={XL-NBT: A Cross-lingual Neural Belief Tracking Framework},
      author={Chen, Wenhu and Chen, Jianshu and Su, Yu and Wang, Xin and Yu, Dong and Yan, Xifeng and Wang, William Yang},
      booktitle={Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing},
      pages={414--424},
      year={2018}
    }