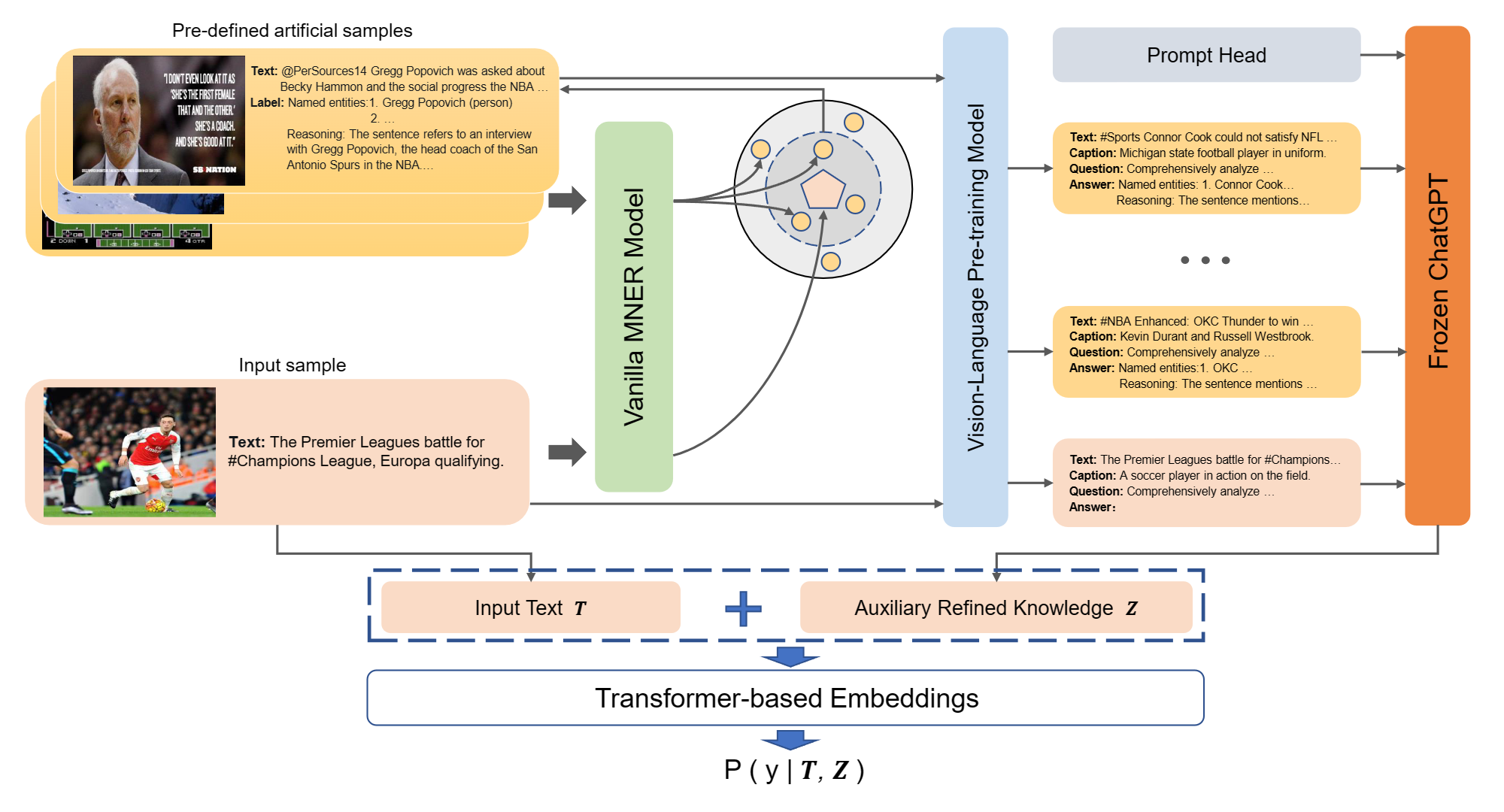

This repository contains the code and dataset for our Findings of EMNLP 2023 paper: Prompting ChatGPT in MNER: Enhanced Multimodal Named Entity Recognition with Auxiliary Refined Knowledge.

- 📆 [Aug. 2024] The Twitter-SMNER dataset has been released.

- 📆 [Jun. 2024] A new study has been released: we propose a new Segmented Multimodal Named Entity Recognition (SMNER) task and construct the corresponding Twitter-SMNER dataset. The code and the Twitter-SMNER dataset are coming soon.

- 📆 [May 2024] RiVEG (the sequel to PGIM, addressing GMNER) has been accepted to Findings of ACL 2024.

- 📆 [Oct. 2023] PGIM has been accepted to Findings of EMNLP 2023.

To make the code easier to run, you can find our pre-processed datasets here, and the predefined artificial samples here.

Requirements:

- `python == 3.7`
- `torch == 1.13.1`
- `transformers == 4.30.2`
- `modelscope == 1.7.1`
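The pinned versions above can be checked programmatically. The following is a minimal sketch, not part of PGIM or AdaSeq: the helper names are hypothetical, and on Python 3.7 it assumes the `importlib_metadata` backport is installed, because `importlib.metadata` only entered the standard library in Python 3.8. Local suffixes such as `+cu117`, which PyTorch wheels often carry, are ignored when comparing.

```python
# Hypothetical helper (not part of PGIM or AdaSeq): compare installed package
# versions with the versions pinned in this README.
import sys

try:  # Python >= 3.8 ships importlib.metadata
    from importlib.metadata import PackageNotFoundError, version
except ImportError:  # Python 3.7: assumes the importlib_metadata backport is installed
    from importlib_metadata import PackageNotFoundError, version

PINNED_PYTHON = (3, 7)
PINNED = {"torch": "1.13.1", "transformers": "4.30.2", "modelscope": "1.7.1"}


def installed_version(package):
    """Return the installed version string of `package`, or None if it is absent."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


def base_version(text):
    """Drop a local suffix such as '+cu117'; keep None as None."""
    return None if text is None else text.split("+", 1)[0]


def find_mismatches(pinned, installed):
    """Map each package whose installed version differs to (expected, found)."""
    return {
        name: (expected, installed.get(name))
        for name, expected in pinned.items()
        if base_version(installed.get(name)) != expected
    }


if __name__ == "__main__":
    if sys.version_info[:2] != PINNED_PYTHON:
        print("Python %d.%d found, README pins %d.%d" % (sys.version_info[:2] + PINNED_PYTHON))
    found = {name: installed_version(name) for name in PINNED}
    for name, (expected, actual) in find_mismatches(PINNED, found).items():
        print("%s: expected %s, found %s" % (name, expected, actual or "not installed"))
```

Run it inside the environment you intend to train in; it only reports differences and changes nothing.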

PGIM is built on AdaSeq, which requires Python >= 3.7 and PyTorch >= 1.8. To install AdaSeq:

    git clone https://github.com/modelscope/adaseq.git
    cd adaseq
    pip install -r requirements.txt -f https://modelscope.oss-cn-beijing.aliyuncs.com/releases/repo.html
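AdaSeq's stated minimums (Python >= 3.7, PyTorch >= 1.8) can be checked after installation. In this hypothetical sketch, which does not ship with AdaSeq, version strings are compared as integer tuples, since a plain string comparison would wrongly rank PyTorch 1.13 below 1.8; anything after the leading dotted integers (for example `+cu117`) is ignored.

```python
# Hypothetical helper (not part of AdaSeq): check the minimum versions the
# README states for AdaSeq, comparing versions numerically rather than as text.
import re
import sys

MINIMUMS = {"python": "3.7", "torch": "1.8"}


def parse_version(text):
    """Turn '1.13.1+cu117' into (1, 13, 1) by keeping the leading dotted integers."""
    match = re.match(r"\d+(?:\.\d+)*", text)
    if match is None:
        raise ValueError("cannot parse version %r" % text)
    return tuple(int(part) for part in match.group(0).split("."))


def meets_minimum(found, minimum):
    """True if version string `found` is at least `minimum` (tuple comparison)."""
    return parse_version(found) >= parse_version(minimum)


if __name__ == "__main__":
    py = "%d.%d.%d" % sys.version_info[:3]
    print("python", py, "OK" if meets_minimum(py, MINIMUMS["python"]) else "too old")
    try:
        import torch
    except ImportError:
        print("torch not installed")
    else:
        ok = meets_minimum(torch.__version__, MINIMUMS["torch"])
        print("torch", torch.__version__, "OK" if ok else "too old")
```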

Place the PGIM configuration files under `examples/PGIM`:

    -adaseq
    ---|examples
    -----|PGIM
    -------|twitter-15-txt.yaml
    -------|twitter-17-txt.yaml
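Putting the configuration files in place can be scripted. The sketch below is hypothetical: `install_configs` and the source directory holding the PGIM YAML files are assumptions about your local setup, not part of the release. It copies every `*.yaml` file from that directory into `examples/PGIM` of the AdaSeq checkout, creating the folder if needed.

```python
# Hypothetical helper (not part of PGIM): copy the PGIM YAML configs into
# <adaseq checkout>/examples/PGIM, matching the layout shown above.
import shutil
from pathlib import Path


def install_configs(src_dir, repo_root):
    """Copy every *.yaml file in src_dir to <repo_root>/examples/PGIM.

    Returns the sorted file names that were copied.
    """
    dest = Path(repo_root) / "examples" / "PGIM"
    dest.mkdir(parents=True, exist_ok=True)
    copied = []
    for cfg in sorted(Path(src_dir).glob("*.yaml")):
        shutil.copy2(str(cfg), str(dest / cfg.name))  # copy2 also keeps timestamps
        copied.append(cfg.name)
    return copied
```

For example, `install_configs("path/to/pgim/configs", "adaseq")` (both paths hypothetical) returns the names of the copied files so you can confirm nothing was missed.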

Then replace the `adaseq` package inside the cloned repository with our version:

    -adaseq
    ---|.git
    ---|.github
    ---|adaseq <-- (replace this folder with our adaseq)
    ---|docs
    ---|examples
    ---|scripts
    ---|tests
    ---|tools
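Swapping in the modified `adaseq` package can also be scripted. This is a hypothetical sketch: `replace_package` and both paths are assumptions about where you cloned things. Before touching anything it checks for the `scripts` folder from the layout above, and by default it keeps the original package as `adaseq.orig` so the change is easy to undo.

```python
# Hypothetical helper (not part of PGIM or AdaSeq): replace the inner `adaseq`
# package of an AdaSeq checkout with a copy of the PGIM version.
import shutil
from pathlib import Path


def replace_package(repo_root, replacement_pkg, backup=True):
    """Replace <repo_root>/adaseq with a copy of `replacement_pkg`.

    With backup=True the original package is renamed to adaseq.orig first;
    otherwise it is deleted. Returns the path of the new package directory.
    """
    repo_root = Path(repo_root)
    if not (repo_root / "scripts").is_dir():
        raise FileNotFoundError("%s does not look like an AdaSeq checkout" % repo_root)
    target = repo_root / "adaseq"
    if target.exists():
        if backup:
            backup_dir = repo_root / "adaseq.orig"
            if backup_dir.exists():
                shutil.rmtree(str(backup_dir))  # keep only the latest backup
            target.rename(backup_dir)
        else:
            shutil.rmtree(str(target))
    shutil.copytree(str(replacement_pkg), str(target))  # target no longer exists here
    return target
```

Keeping a backup instead of deleting outright is a design choice; pass `backup=False` to remove the original package.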

For the baseline:

    python -m scripts.train -c examples/PGIM/twitter-15.yaml
    python -m scripts.train -c examples/PGIM/twitter-17.yaml

For PGIM:

    python -m scripts.train -c examples/PGIM/twitter-15-PGIM.yaml
    python -m scripts.train -c examples/PGIM/twitter-17-PGIM.yaml
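To run both datasets for one variant back to back, a small wrapper may help. It is a hypothetical sketch rather than part of the released code: `build_commands` and `run_all` are illustrative names, it only replays the `python -m scripts.train -c <config>` commands with the config paths exactly as listed, and it assumes you launch it from the root of the AdaSeq checkout.

```python
# Hypothetical wrapper (not part of PGIM): replay the training commands for
# both Twitter datasets of one variant, stopping at the first failure.
import subprocess
import sys

# Config paths exactly as they appear in the training commands.
CONFIGS = {
    "baseline": ["examples/PGIM/twitter-15.yaml", "examples/PGIM/twitter-17.yaml"],
    "pgim": ["examples/PGIM/twitter-15-PGIM.yaml", "examples/PGIM/twitter-17-PGIM.yaml"],
}


def build_commands(variant, python=sys.executable):
    """Return one argv list per config for 'baseline' or 'pgim'."""
    if variant not in CONFIGS:
        raise ValueError("unknown variant %r; choose from %s" % (variant, sorted(CONFIGS)))
    return [[python, "-m", "scripts.train", "-c", cfg] for cfg in CONFIGS[variant]]


def run_all(variant, dry_run=False):
    """Print each command and, unless dry_run, run it; check=True stops on failure."""
    for argv in build_commands(variant):
        print(" ".join(argv))
        if not dry_run:
            subprocess.run(argv, check=True)
```

For example, `run_all("baseline", dry_run=True)` prints the two baseline commands without running them. Using `sys.executable` instead of a bare `python` keeps training on the same interpreter as the wrapper, which matters when several Python environments are installed.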

If you find PGIM useful in your research, please consider citing:

    @inproceedings{li2023prompting,
      title={Prompting ChatGPT in MNER: Enhanced Multimodal Named Entity Recognition with Auxiliary Refined Knowledge},
      author={Li, Jinyuan and Li, Han and Pan, Zhuo and Sun, Di and Wang, Jiahao and Zhang, Wenkun and Pan, Gang},
      booktitle={Findings of the Association for Computational Linguistics (EMNLP), 2023},
      year={2023}
    }

    @inproceedings{li2024llms,
      title={LLMs as Bridges: Reformulating Grounded Multimodal Named Entity Recognition},
      author={Li, Jinyuan and Li, Han and Sun, Di and Wang, Jiahao and Zhang, Wenkun and Wang, Zan and Pan, Gang},
      booktitle={Findings of the Association for Computational Linguistics (ACL), 2024},
      year={2024}
    }

    @article{li2024advancing,
      title={Advancing Grounded Multimodal Named Entity Recognition via LLM-Based Reformulation and Box-Based Segmentation},
      author={Li, Jinyuan and Li, Ziyan and Li, Han and Yu, Jianfei and Xia, Rui and Sun, Di and Pan, Gang},
      journal={arXiv preprint arXiv:2406.07268},
      year={2024}
    }

Our code is built on the open-source AdaSeq and MoRe projects. Thanks for their great work!