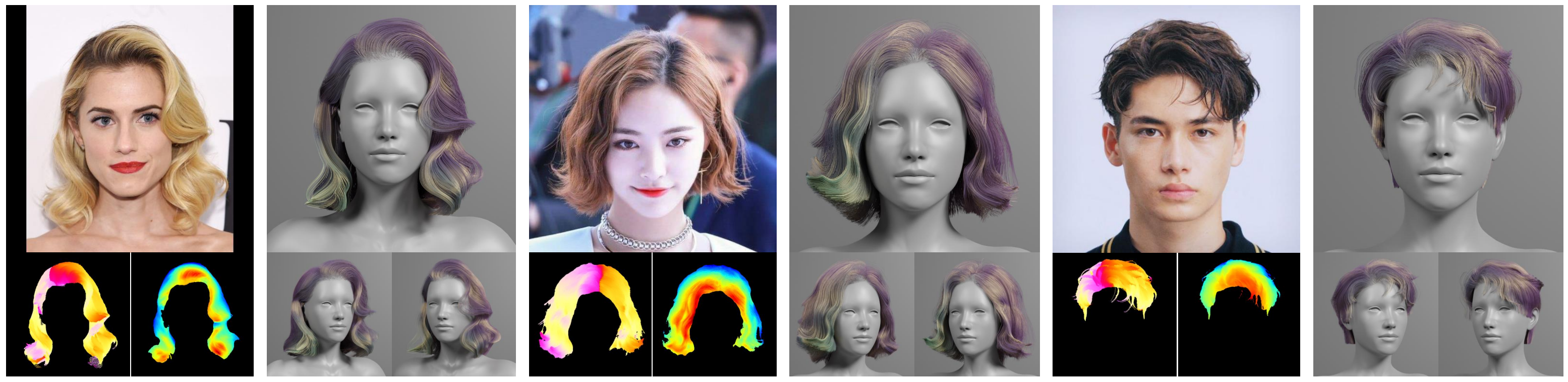

HairStep: Transfer Synthetic to Real Using Strand and Depth Maps for Single-View 3D Hair Modeling (CVPR 2023 Highlight) [Project page]

All data of HiSa & HiDa is hosted on Google Drive:

| Path | Files | Format | Description |
|---|---|---|---|
| HiSa_HiDa | 12,503 | Folder | Main folder |
| ├ img | 1,250 | PNG | Hair-centric real images at 512×512 |
| ├ seg | 1,250 | PNG | Hair masks |
| ├ body_img | 1,250 | PNG | Whole-body masks |
| ├ stroke | 1,250 | SVG | Hair strokes (vector curves) manually labeled by artists |
| ├ strand_map | 1,250 | PNG | Strand maps with body mask |
| ├ camera_param | 1,250 | NPY | Estimated camera parameters for orthogonal projection |
| ├ relative_depth | 2,500 | Folder | Annotations of ordinal relative depth |
| │ ├ pairs | 1,250 | NPY | Pixel pairs randomly selected from adjacent superpixels |
| │ └ labels | 1,250 | NPY | Ordinal depth labels indicating which pixel of each pair is closer |
| ├ dense_depth_pred | 2,500 | Folder | Dense depth maps generated by our domain-adaptive depth estimation method |
| │ ├ depth_map | 1,250 | NPY | Normalized depth maps (values in [0, 1]; closer points have larger values) |
| │ └ depth_vis | 1,250 | PNG | Visualizations of the depth maps |
| ├ split_train.json | 1 | JSON | Split file for training |
| └ split_test.json | 1 | JSON | Split file for testing |
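The ordinal depth annotations can be checked against a dense depth map with a few lines of NumPy. The sketch below is hypothetical and rests on layouts this README does not document: it assumes each `pairs` array has shape (N, 4) laid out as (row1, col1, row2, col2), each `labels` entry is 1 when the first pixel of the pair is closer and 0 otherwise, and each `depth_map` is an H×W float array in [0, 1] where larger means closer. It is not the official HairRida implementation; inspect `arr.shape` and `arr.dtype` of the real files before relying on it.

```python
import numpy as np


def ordinal_agreement(depth, pairs, labels):
    """Fraction of annotated pixel pairs whose order agrees with a depth map.

    Hypothetical layouts (verify against the real files):
      depth:  (H, W) floats in [0, 1], larger values are closer to the camera
      pairs:  (N, 4) ints as (row1, col1, row2, col2)
      labels: (N,) values, 1 if the first pixel is closer, 0 if the second is
    """
    depth = np.asarray(depth)
    pairs = np.asarray(pairs, dtype=np.int64)
    labels = np.asarray(labels).reshape(-1)
    if pairs.ndim != 2 or pairs.shape[1] != 4:
        raise ValueError("expected pairs with shape (N, 4)")
    if len(pairs) == 0 or len(pairs) != len(labels):
        raise ValueError("expected a non-empty pairs array matching labels in length")
    d1 = depth[pairs[:, 0], pairs[:, 1]]
    d2 = depth[pairs[:, 2], pairs[:, 3]]
    # "Closer is bigger": the first pixel is predicted closer when d1 > d2.
    # Ties are counted as "first pixel not closer".
    predicted_first_closer = d1 > d2
    return float(np.mean(predicted_first_closer == (labels == 1)))


if __name__ == "__main__":
    # Tiny synthetic example instead of real dataset files.
    depth = np.array([[0.9, 0.1], [0.5, 0.3]])
    pairs = np.array([[0, 0, 0, 1], [1, 1, 1, 0], [0, 1, 1, 0]])
    labels = np.array([1, 0, 0])
    print(ordinal_agreement(depth, pairs, labels))  # prints 1.0
```

For the real data, load each file with `np.load`; if the stored layout differs from the assumed one, only the two indexing lines need to change.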

The HiSa & HiDa dataset is available for non-commercial research purposes only. All real images were collected from the Internet. Please contact Yujian Zheng and Xiaoguang Han with questions about the dataset.

git clone https://github.com/GAP-LAB-CUHK-SZ/HairStep.git

cd HairStep

conda create -n hairstep python=3.8

conda activate hairstep

pip install torch==1.9.0+cu111 torchvision==0.10.0+cu111 -f https://download.pytorch.org/whl/torch_stable.html

Or, for CUDA 11.3:

pip install torch==1.12.1+cu113 torchvision==0.13.1+cu113 --extra-index-url https://download.pytorch.org/whl/cu113

pip install -r requirements.txt

The code has been tested with torch 1.9.0/1.12.1, CUDA 11.1/11.3, and Ubuntu 20.04 LTS.
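A quick way to confirm that the environment matches one of the tested setups is to compare `torch.__version__` and `torch.version.cuda` against them. This is a minimal sketch; pairing torch 1.9.0 with CUDA 11.1 and torch 1.12.1 with CUDA 11.3 is inferred from the two pip commands above, and `is_tested` is a hypothetical helper, not part of the repository.

```python
from typing import Optional

# (torch, CUDA) pairs inferred from the install commands in this README.
TESTED = {("1.9.0", "11.1"), ("1.12.1", "11.3")}


def is_tested(torch_version: str, cuda_version: Optional[str]) -> bool:
    """Return True if the (torch, CUDA) combination is one of the tested ones.

    torch.__version__ may carry a local suffix such as "+cu111", which is stripped.
    """
    base = str(torch_version).split("+", 1)[0]
    return (base, cuda_version) in TESTED


if __name__ == "__main__":
    try:
        import torch  # installed by the pip commands above
    except ImportError:
        print("torch is not installed in this environment")
    else:
        print("torch", torch.__version__, "CUDA", torch.version.cuda)
        ok = is_tested(torch.__version__, torch.version.cuda)
        print("tested combination" if ok else "untested combination")
        print("GPU available:", torch.cuda.is_available())
```

An untested combination may still work; the check only flags setups outside the ones listed above.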

Put your collected and cropped portrait images into ./results/real_imgs/img/. Download the SAM checkpoint and put it in ./checkpoints/SAM-models/.
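Before running `python -m scripts.img2hairstep`, the expected folder layout can be verified from the repository root. This is a minimal sketch using only the standard library; the accepted image extensions and the rule that any file in ./checkpoints/SAM-models/ counts as a checkpoint are assumptions, since the README does not state which formats or SAM variant the script expects. `check_inputs` is a hypothetical helper.

```python
from pathlib import Path
from typing import List

# Assumption: the accepted input formats are not documented in the README.
IMAGE_SUFFIXES = {".png", ".jpg", ".jpeg"}


def check_inputs(repo_root: str = ".") -> List[str]:
    """Return a list of problems with the expected input layout (empty if ready)."""
    root = Path(repo_root)
    img_dir = root / "results" / "real_imgs" / "img"
    sam_dir = root / "checkpoints" / "SAM-models"
    problems = []
    if not img_dir.is_dir():
        problems.append(f"missing image folder: {img_dir}")
    elif not any(p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES for p in img_dir.iterdir()):
        problems.append(f"no images found in {img_dir}")
    # Any regular file counts as a checkpoint here; the expected file name is not specified.
    if not sam_dir.is_dir() or not any(p.is_file() for p in sam_dir.iterdir()):
        problems.append(f"no SAM checkpoint found in {sam_dir}")
    return problems


if __name__ == "__main__":
    issues = check_inputs()
    for issue in issues:
        print("-", issue)
    if not issues:
        print("Inputs look ready: python -m scripts.img2hairstep")
```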

python -m scripts.img2hairstep

Results will be saved in ./results/real_imgs/.

- Share the HiSa & HiDa datasets

- Release the code for converting images to HairStep

- Release the code for reconstructing 3D strands from HairStep (before 2023.8.31)

- Release the code for computing the HairSale & HairRida metrics (before 2023.8.31)

- Release the code for training and data pre-processing (later)

Note: We will release a more compact and efficient sub-module for 3D hair reconstruction, with performance comparable to NeuralHDHair* as reported in the paper.