This is the official implementation of Generative Neurosymbolic Machines by Jindong Jiang & Sungjin Ahn, accepted to Neural Information Processing Systems (NeurIPS) 2020 as a 🌟Spotlight🌟 paper.

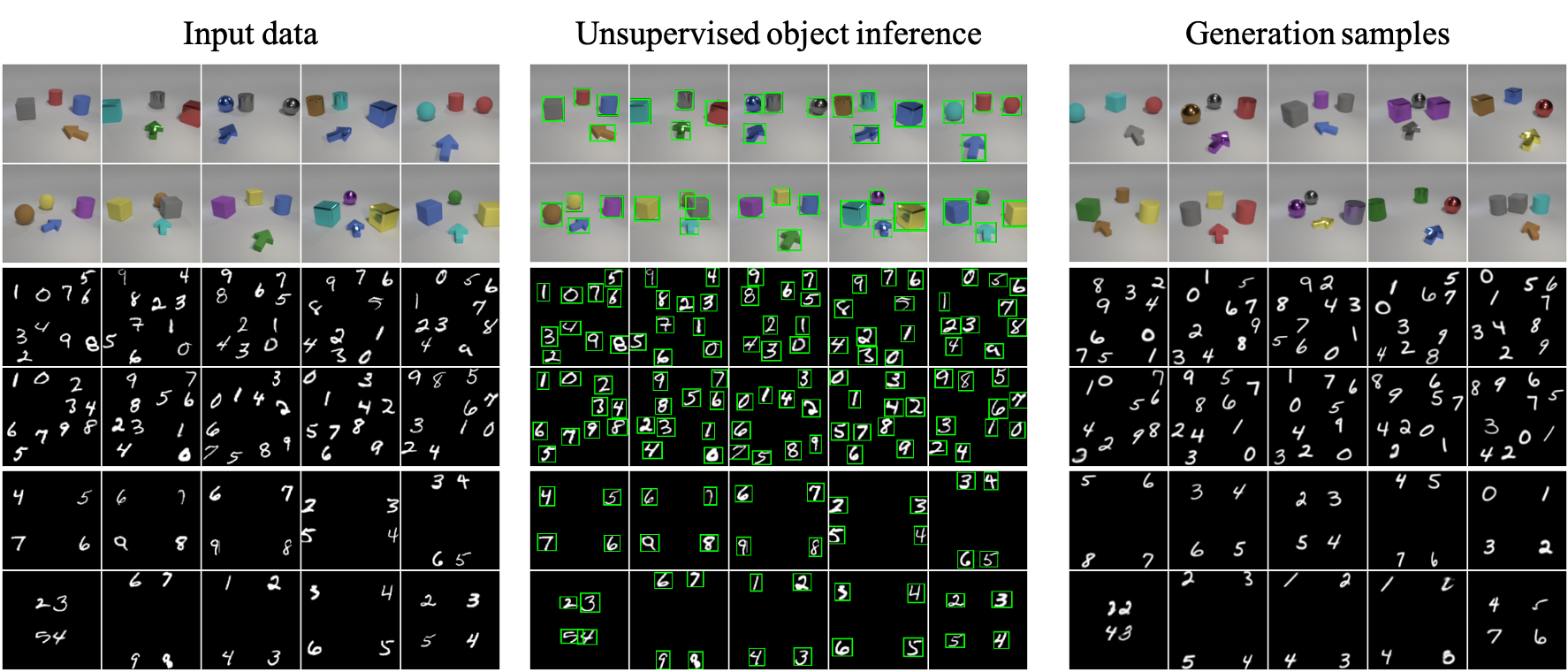

The generations directory contains the generated samples we used to evaluate scene structure accuracy in the paper.

This project uses Python 3.7 and PyTorch 1.4.0, and it has also been tested with Python 3.8 and PyTorch 1.7.0.

To install the requirements, first install Miniconda (or Anaconda), then run:

conda env create -f environment.yml

Note that environment.yml installs PyTorch with cudatoolkit=10.1. If you have a different CUDA version installed, you may want to reinstall PyTorch with the build that matches your CUDA version. See here.

The datasets used in the paper can be downloaded from the following link:

Once you download the datasets, unzip the files into the data directory.

To help you quickly get a feel for how the code works, we provide pretrained models and a Jupyter notebook that generates images for all three environments. The models can be downloaded from the following link.

Please put the downloaded checkpoints (.pth files) in the pretrained directory and use generation.ipynb to play with them.
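Before opening the notebook, it can help to confirm that the checkpoints landed in the right place. The snippet below is a minimal sketch that uses only the standard library. The helper name `list_checkpoints` is hypothetical and not part of this repository; only the pretrained directory name and the .pth extension come from this README.

```python
from pathlib import Path


def list_checkpoints(directory="pretrained"):
    """Return the sorted .pth files found directly inside `directory`.

    Illustrative helper (not part of this repo). Returns an empty list
    if the directory does not exist yet.
    """
    root = Path(directory)
    if not root.is_dir():
        return []
    # Only regular files with the .pth suffix count as checkpoints here.
    return sorted(p for p in root.iterdir() if p.is_file() and p.suffix == ".pth")


# Usage: print the names of the checkpoints the notebook should be able to see.
for ckpt in list_checkpoints():
    print(ckpt.name)
```

An empty output suggests the files were unzipped somewhere else or still sit inside a nested folder.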

To train the model, use the following command:

python train.py --config-file config/[configuration].yaml

The configuration files for all three datasets are provided in the config folder. You may also want to specify which GPU to use for training by setting the CUDA_VISIBLE_DEVICES environment variable.
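As a rough sketch of the GPU selection described above, the following Python helper builds the training command together with an environment in which CUDA_VISIBLE_DEVICES is set. The helper name `build_train_command` and the config path in the usage comment are hypothetical. Only train.py, --config-file, and CUDA_VISIBLE_DEVICES come from this README.

```python
import os
import sys


def build_train_command(config_file, gpu_ids, base_env=None):
    """Return (argv, env) for launching train.py on the given GPUs.

    Illustrative helper, not part of this repo. `gpu_ids` is a list of
    integer device indices, for example [0] or [0, 1].
    """
    env = dict(os.environ if base_env is None else base_env)
    # CUDA exposes only the listed devices to the process that sees this variable.
    env["CUDA_VISIBLE_DEVICES"] = ",".join(str(g) for g in gpu_ids)
    argv = [sys.executable, "train.py", "--config-file", config_file]
    return argv, env


# Usage (the config path is a placeholder; pick a real file from the config folder):
#   import subprocess
#   argv, env = build_train_command("config/<configuration>.yaml", gpu_ids=[0])
#   subprocess.run(argv, env=env, check=True)
```

Passing the variable through the child environment leaves the parent shell untouched, which is convenient when launching several runs on different GPUs.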

The training process includes visualization and log-likelihood computation. Note that the log-likelihood computed during training is only for logging. The final LL value should be computed using more particles in importance sampling; we used 100 particles to compute the results in the paper.
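For readers unfamiliar with importance-sampled log-likelihoods, here is a minimal sketch of the standard estimator, written in plain Python. It assumes you already have one log importance weight per particle, log p(x, z_k) - log q(z_k | x); how this repository computes those weights is not shown here. The function name `importance_weighted_ll` is hypothetical, and this is not necessarily how the code in this repository is written.

```python
import math


def importance_weighted_ll(log_weights):
    """Estimate log p(x) from K importance-sampling particles.

    log_weights[k] is assumed to be log p(x, z_k) - log q(z_k | x)
    for z_k sampled from q(z | x). The return value is
    log((1/K) * sum_k exp(log_weights[k])), computed stably.
    """
    if not log_weights:
        raise ValueError("need at least one particle")
    m = max(log_weights)
    # Subtract the max before exponentiating to avoid overflow and underflow.
    total = sum(math.exp(w - m) for w in log_weights)
    return m + math.log(total) - math.log(len(log_weights))
```

With a single particle this reduces to the usual ELBO-style estimate. In expectation the bound gets tighter as more particles are used, which is why a larger number such as 100 is preferred for final reporting.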

If you find our work useful to your research, please feel free to cite our paper:

@inproceedings{jiang2020generative,
  title = {Generative Neurosymbolic Machines},
  author = {Jiang, Jindong and Ahn, Sungjin},
  booktitle = {Advances in Neural Information Processing Systems},
  volume = {33},
  pages = {12572--12582},
  url = {https://proceedings.neurips.cc/paper_files/paper/2020/file/94c28dcfc97557df0df6d1f7222fc384-Paper.pdf},
  year = {2020}
}

- We didn't fix the random seed when generating the samples, so it is very difficult to reproduce the exact images provided in the generations directory. Feel free to generate more using the pretrained models and the Jupyter notebook.

- This project uses YACS (Yet Another Configuration System), a very nice configuration library for argument management. Please check their repo for more information.

- The README.md template is partially taken from G-SWM and SCALOR.