Baseline for IEEE ICME 2024 Grand Challenge.

This Challenge aims to push the boundaries of computational audition by tackling one of its most compelling problems: effectively classifying acoustic scenes under significant domain shifts.

ICME2024 GC

Challenge website

Google groups

Development dataset

Evaluation dataset

2024-FEB-05 The challenge has started and links to the development dataset and registration are available.

2024-MAR-10 Updated the train.py script to fix the macro-average accuracy calculation.

2024-MAR-15 The link to the evaluation dataset is available.
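For readers unfamiliar with the metric mentioned in the 2024-MAR-10 update, here is a minimal sketch of macro-average accuracy: accuracy is computed per class and then averaged, so each scene class counts equally regardless of how many clips it has. This is an illustration only, not the code in train.py; the function name and the "bus"/"park" labels are hypothetical.

```python
from collections import defaultdict


def macro_average_accuracy(y_true, y_pred):
    """Mean of per-class accuracies (illustrative, not the baseline's code)."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must be non-empty and equal length")
    correct = defaultdict(int)
    total = defaultdict(int)
    for truth, pred in zip(y_true, y_pred):
        total[truth] += 1
        if truth == pred:
            correct[truth] += 1
    # One accuracy value per class that appears in the ground truth.
    per_class = [correct[c] / total[c] for c in total]
    return sum(per_class) / len(per_class)


# Hypothetical labels: four "bus" clips and two "park" clips.
y_true = ["bus", "bus", "bus", "bus", "park", "park"]
y_pred = ["bus", "bus", "bus", "bus", "bus", "park"]

macro = macro_average_accuracy(y_true, y_pred)
# Plain accuracy over all clips, for comparison.
overall = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
print(f"macro-average accuracy: {macro:.3f}, overall accuracy: {overall:.3f}")
```

In this toy case the two numbers differ because the smaller class is misclassified half the time, which the macro average weights as heavily as the larger class.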

conda create -n ASC python=3.10

conda activate ASC

git clone git@github.com:JishengBai/ICME2024ASC.git; cd ICME2024ASC

pip install -r requirement.txt

This step includes dataset download, unzip, and feature extraction.

# Takes about an hour

python3 setup_data.py

# Our development dataset is available on Zenodo: https://zenodo.org/records/10616533.

# Our evaluation dataset will be released on Mar 15, 2024.

# Model training, which includes the following three steps:

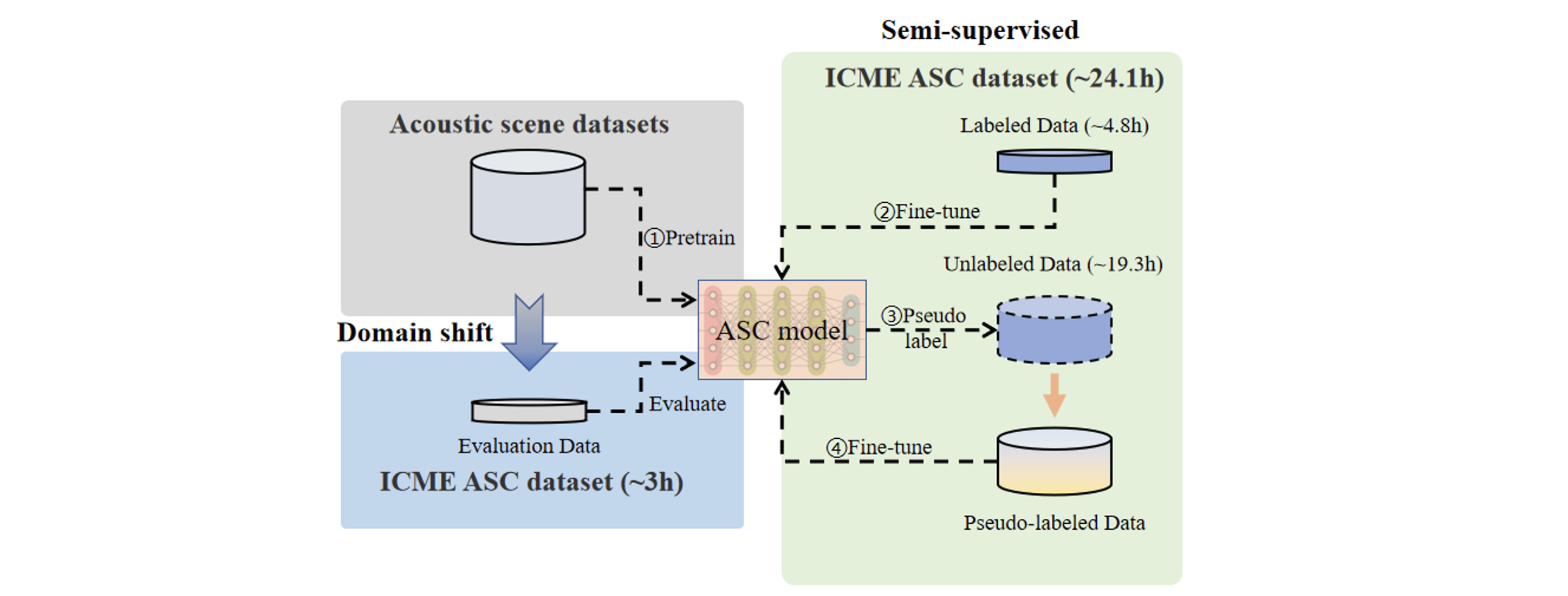

# (1) Training with limited labels; (2) Pseudo labeling; (3) Model training with pseudo labels.

# In total, training takes about 30 minutes on a single NVIDIA 2080 Ti.

python train.py

# Model testing.

python test.py

You can find an example training log here
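The three training steps listed above (training with limited labels, pseudo labeling, and training with pseudo labels) can be sketched as follows. This is a toy illustration under stated assumptions, not the baseline in train.py: a nearest-centroid classifier on 2-D points stands in for the real model and audio features, and the 0.8 confidence threshold, the function names, and the "park"/"street" labels are all hypothetical.

```python
import math
from collections import defaultdict


def fit_centroids(features, labels):
    """Stand-in 'model': one mean feature vector per class."""
    sums = {}
    counts = defaultdict(int)
    for x, y in zip(features, labels):
        if y not in sums:
            sums[y] = [0.0] * len(x)
        sums[y] = [s + v for s, v in zip(sums[y], x)]
        counts[y] += 1
    return {y: [s / counts[y] for s in sums[y]] for y in sums}


def predict_proba(centroids, x):
    """Softmax over negative Euclidean distances to each class centroid."""
    scores = {y: -math.dist(x, c) for y, c in centroids.items()}
    top = max(scores.values())
    exps = {y: math.exp(s - top) for y, s in scores.items()}
    norm = sum(exps.values())
    return {y: e / norm for y, e in exps.items()}


def pseudo_label(centroids, unlabeled, threshold=0.8):
    """Keep only unlabeled samples whose top class probability is confident."""
    kept_x, kept_y = [], []
    for x in unlabeled:
        probs = predict_proba(centroids, x)
        label = max(probs, key=probs.get)
        if probs[label] >= threshold:
            kept_x.append(x)
            kept_y.append(label)
    return kept_x, kept_y


# Hypothetical toy data standing in for extracted audio features.
labeled_x = [[0.0, 0.0], [0.2, 0.1], [4.0, 4.0], [4.1, 3.9]]
labeled_y = ["park", "park", "street", "street"]
unlabeled_x = [[0.1, 0.3], [3.8, 4.2], [2.0, 2.0]]

# (1) Train with the limited labeled set.
initial_model = fit_centroids(labeled_x, labeled_y)

# (2) Pseudo-label the unlabeled set, dropping low-confidence predictions.
pseudo_x, pseudo_y = pseudo_label(initial_model, unlabeled_x)

# (3) Retrain on the labeled data plus the confident pseudo-labeled data.
final_model = fit_centroids(labeled_x + pseudo_x, labeled_y + pseudo_y)

print("pseudo labels kept:", pseudo_y)
```

The ambiguous point halfway between the two clusters falls below the threshold and is left out of the retraining set, which is the usual motivation for filtering pseudo labels by confidence.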

@misc{bai2024description,

title={Description on IEEE ICME 2024 Grand Challenge: Semi-supervised Acoustic Scene Classification under Domain Shift},

author={Jisheng Bai and Mou Wang and Haohe Liu and Han Yin and Yafei Jia and Siwei Huang and Yutong Du and Dongzhe Zhang and Dongyuan Shi and Woon-Seng Gan and Mark D. Plumbley and Susanto Rahardja and Bin Xiang and Jianfeng Chen},

year={2024},

eprint={2402.02694},

archivePrefix={arXiv},

primaryClass={eess.AS}

}

@ARTICLE{9951400,

author={Bai, Jisheng and Chen, Jianfeng and Wang, Mou and Ayub, Muhammad Saad and Yan, Qingli},

journal={IEEE Transactions on Cognitive and Developmental Systems},

title={A Squeeze-and-Excitation and Transformer-Based Cross-Task Model for Environmental Sound Recognition},

year={2023},

volume={15},

number={3},

pages={1501-1513},

keywords={Task analysis;Acoustics;Computational modeling;Speech recognition;Transformers;Pattern recognition;Computer architecture;Attention mechanism;cross-task model;data augmentation;environmental sound recognition (ESR)},

doi={10.1109/TCDS.2022.3222350}}

- Northwestern Polytechnical University, China

- Xi'an Lianfeng Acoustic Technologies Co., Ltd., China

- Nanyang Technological University, Singapore

- Institute of Acoustics, Chinese Academy of Sciences, China

- University of Surrey, UK