✉ Corresponding author

- [10/2024] 🔥 We release the presentation video and demo.

- [10/2024] 🔥 We release the code. Enjoy it! 😄

- [09/2024] 🔥 Optimus-1 is accepted to NeurIPS 2024!

- [08/2024] 🔥 Project page released.

- [08/2024] 🔥 arXiv paper released.

# install uv

curl -LsSf https://astral.sh/uv/install.sh | sh

git clone https://github.com/JiuTian-VL/Optimus-1.git

cd Optimus-1

uv sync

source .venv/bin/activate

uv pip install -r requirements.txt

# install java, clang, xvfb

sudo apt install clang

sudo apt-get install openjdk-8-jdk

sudo apt-get install xvfb

# install mineclip dependencies

uv pip install setuptools==65.5.1 wheel==0.38.0 x_transformers==0.27.1 dm-tree

# install minerl

cd minerl

uv pip install -r requirements.txt

uv pip install -e . # this step may be slow

cd ..

# download our MCP-Reborn and compile

# url: https://drive.google.com/file/d/1GLy9IpFq5CQOubH7q60UhYCvD6nwU_YG/view?usp=drive_link

mv MCP-Reborn.tar.gz minerl/minerl

cd minerl/minerl

rm -rf MCP-Reborn

tar -xzvf MCP-Reborn.tar.gz

cd MCP-Reborn

./gradlew clean build shadowJar

# download steve1 checkpoint

# url: https://drive.google.com/file/d/1Mmwqv2juxMuP1xOZYWucnbKopMk0c0DV/view?usp=drive_link

unzip optimus1_steve1_ckpt.zip

Before running the code, open src/optimus1/models/gpt4_planning.py and replace the OpenAI API key with your own.
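If you would rather not keep the key in the source file, one common alternative is to read it from an environment variable. The snippet below is a generic sketch, not code from this repository: the helper name `load_api_key` and the variable name `OPENAI_API_KEY` are assumptions, and how gpt4_planning.py actually stores the key may differ.

```python
import os


def load_api_key(env_var: str = "OPENAI_API_KEY") -> str:
    """Return the API key stored in `env_var`, or raise if it is unset.

    Hypothetical helper: the variable name is an assumption, not
    something the repository is known to read.
    """
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(f"Set the {env_var} environment variable first.")
    return key
```

With this pattern, the key is exported in the shell before launching the scripts and the returned value is used wherever the key would otherwise be written as a string literal.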

- start the server, Optimus1 connect the MineRL via the server

bash scripts/server.sh- test minerl environment

bash scripts/test_minerl.sh- test diamond benchmark

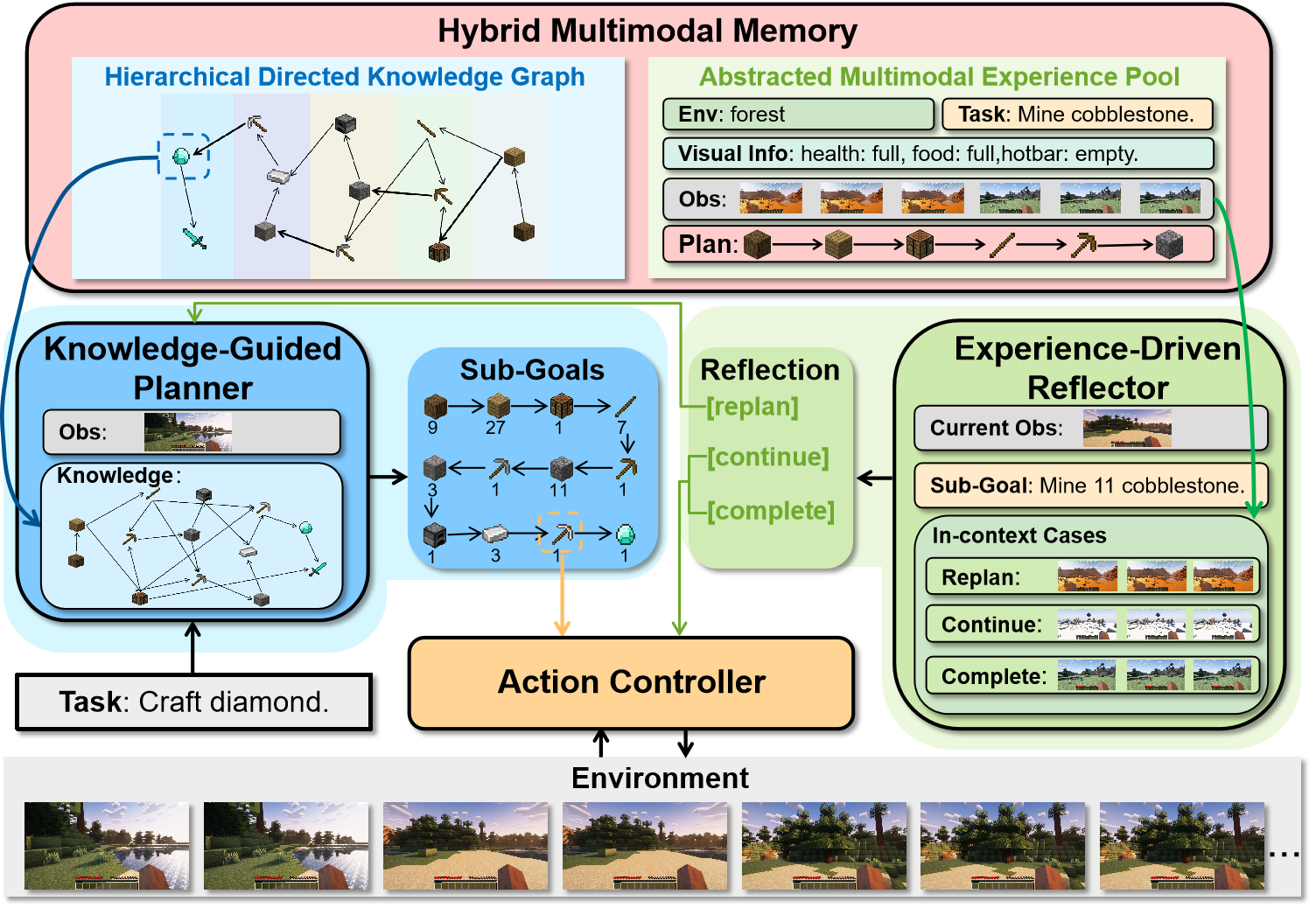

bash scripts/diamond.shWe divide the structure of Optimus-1 into Knowledge-Guided Planner, Experience-Driven Reflector, and Action Controller. In a given game environment with a long-horizon task, the Knowledge-Guided Planner senses the environment, retrieves knowledge from HDKG, and decomposes the task into executable sub-goals. The action controller then sequentially executes these sub-goals. During execution, the Experience-Driven Reflector is activated periodically, leveraging historical experience from AMEP to assess whether Optimus-1 can complete the current sub-goal. If not, it instructs the Knowledge-Guided Planner to revise its plan. Through iterative interaction with the environment,Optimus-1 ultimately completes the task.
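The control flow described above can be sketched as a small plan-act-reflect loop. This is an illustrative toy, not the repository's implementation: `run_task`, the three callables, and the `reflect_every` and `max_steps` parameters are all hypothetical names, and the real components work on game observations and learned models rather than plain strings.

```python
from typing import Callable, List, Tuple

# Hypothetical interfaces, for illustration only.
Planner = Callable[[str, List[str], List[str]], List[str]]  # (task, completed, failed) -> remaining sub-goals
Controller = Callable[[str], bool]   # take one step; True once the sub-goal is achieved
Reflector = Callable[[str], bool]    # True if the current sub-goal still looks achievable


def run_task(
    task: str,
    planner: Planner,
    controller: Controller,
    reflector: Reflector,
    reflect_every: int = 3,
    max_steps: int = 50,
) -> Tuple[bool, int]:
    """Run sub-goals in order, reflecting periodically and replanning on failure."""
    completed: List[str] = []
    failed: List[str] = []
    subgoals = planner(task, completed, failed)
    steps = 0
    while subgoals and steps < max_steps:
        goal = subgoals[0]
        achieved = controller(goal)
        steps += 1
        if achieved:
            completed.append(goal)
            subgoals.pop(0)
            continue
        # Periodic reflection: if the sub-goal looks infeasible, ask for a new plan.
        if steps % reflect_every == 0 and not reflector(goal):
            failed.append(goal)
            subgoals = planner(task, completed, failed)
    return (not subgoals, steps)
```

The function returns whether every sub-goal was completed and how many control steps were used, which lines up with the success and step counts reported per task.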

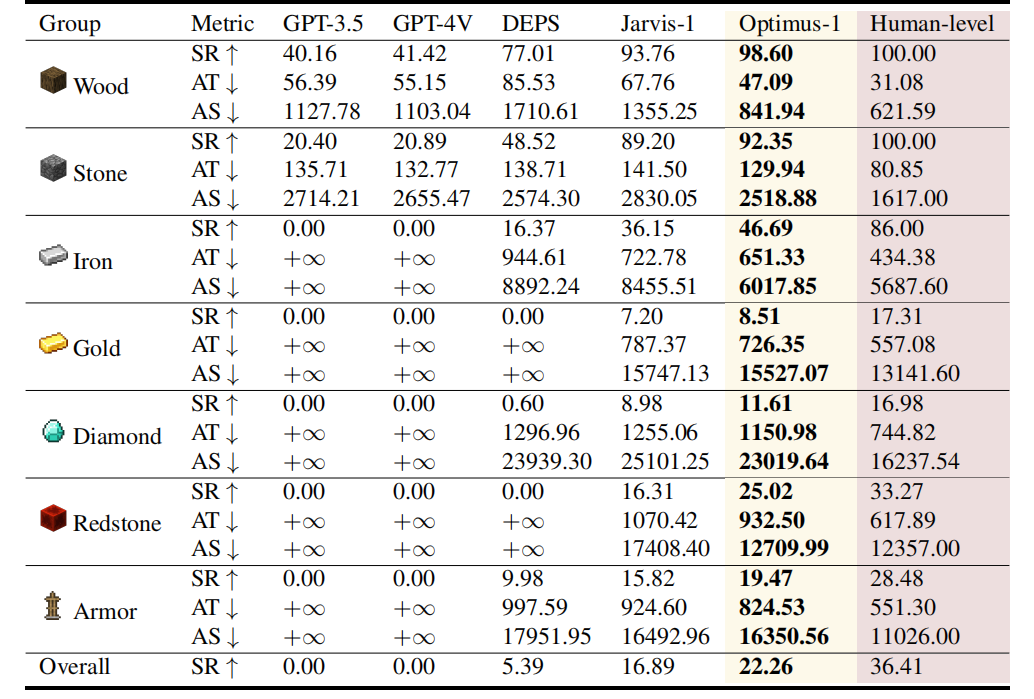

We report the average success rate (SR), average number of steps (AS), and average time (AT) for each task group; the results for each task can be found in the Appendix experiments. Lower AS and AT values mean that the agent completes the task more efficiently.
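As a rough illustration of how these three metrics relate to raw episode logs, the sketch below aggregates one task group. The `Episode` record and `summarize` function are hypothetical, and averaging AS and AT over successful episodes only is an assumption made here, not something this README specifies.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Dict, Iterable, Optional


@dataclass
class Episode:
    """One evaluation run (hypothetical record layout)."""
    success: bool
    steps: int
    seconds: float


def summarize(episodes: Iterable[Episode]) -> Dict[str, Optional[float]]:
    """Compute SR, AS, and AT for one task group.

    Assumption: AS and AT are averaged over successful episodes only,
    and are None when no episode succeeded.
    """
    runs = list(episodes)
    if not runs:
        raise ValueError("need at least one episode")
    solved = [e for e in runs if e.success]
    return {
        "SR": len(solved) / len(runs),
        "AS": mean(e.steps for e in solved) if solved else None,
        "AT": mean(e.seconds for e in solved) if solved else None,
    }
```

Returning None rather than a number for a group with no successes keeps failed groups from being mistaken for efficient ones when AS and AT are compared.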

If you find this work useful for your research, please kindly cite our paper:

@inproceedings{li2024optimus,

title={Optimus-1: Hybrid Multimodal Memory Empowered Agents Excel in Long-Horizon Tasks},

author={Li, Zaijing and Xie, Yuquan and Shao, Rui and Chen, Gongwei and Jiang, Dongmei and Nie, Liqiang},

booktitle={NeurIPS},

year={2024}

}