OneDiff is an out-of-the-box acceleration library for diffusion models. It provides:

- PyTorch module compilation tools and highly optimized GPU kernels for diffusion models

- Out-of-the-box acceleration for popular UIs/libs

OneDiff is short for "one line of code to accelerate diffusion models". Here is the latest news:

- 🚀Accelerating Stable Video Diffusion by 3x with OneDiff DeepCache + Int8

- 🚀Accelerating SDXL by 3x with DeepCache and OneDiff

- 🚀InstantID runs 1.8x faster with OneDiff

Connect with the OneDiff community:

- Create an issue

- Chat in Discord:

- Email for Enterprise Edition or other business inquiries: contact@siliconflow.com

Full introduction to OneDiff:

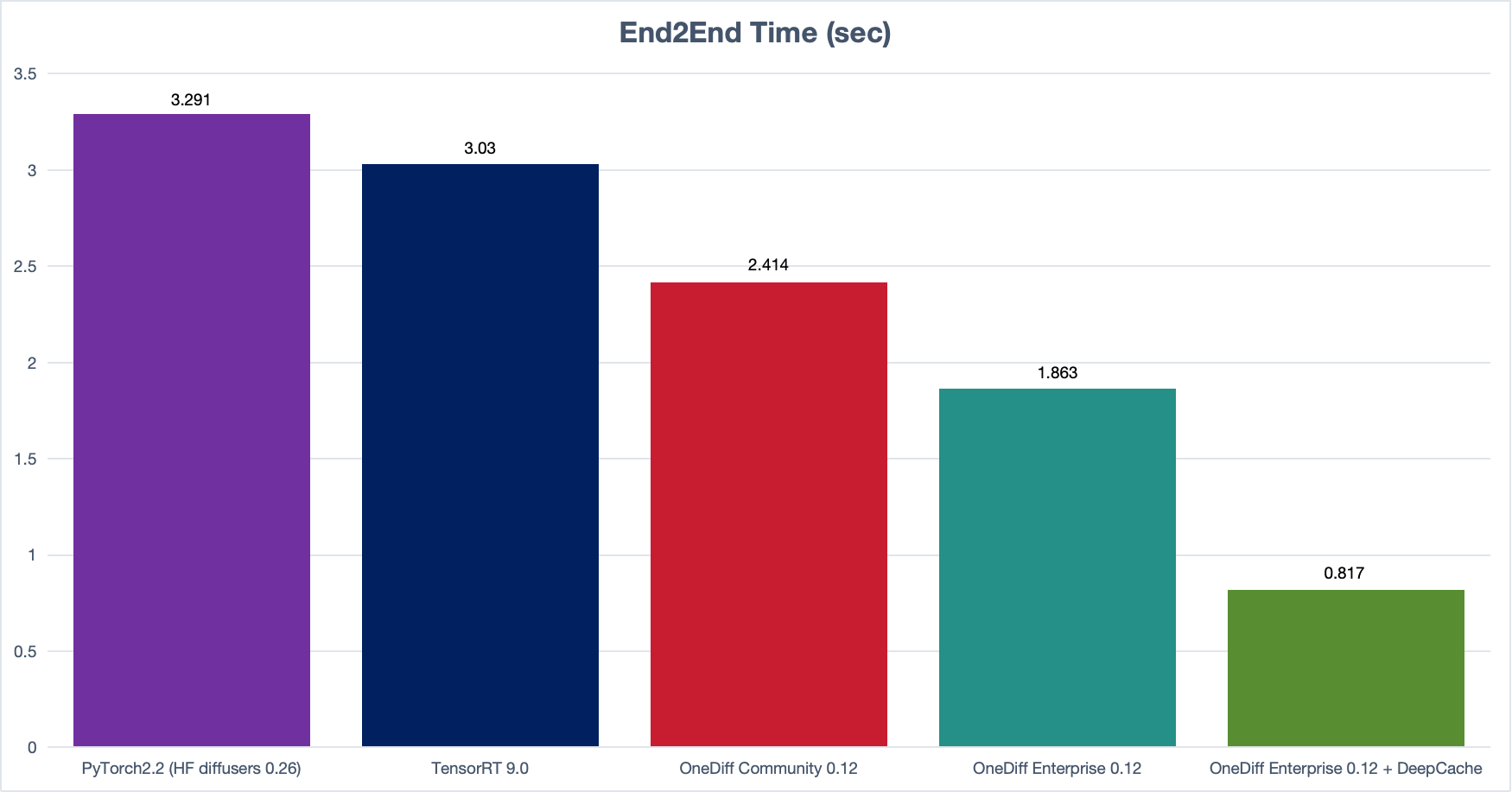

SDXL benchmark setup:

- Model: stabilityai/stable-diffusion-xl-base-1.0
- Image size 1024x1024, batch size 1, 30 steps
- NVIDIA A100 80G SXM4

SVD benchmark setup:

- Model: stabilityai/stable-video-diffusion-img2vid-xt
- Image size 576x1024, batch size 1, 25 steps, decoder chunk size 5
- NVIDIA A100 80G SXM4
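Speedups such as "3x faster" are ratios of wall-clock latency measured under fixed settings like the ones above. The sketch below shows one way to time such a comparison yourself; it is not OneDiff's benchmark script. `time_pipeline` and `speedup` are hypothetical helpers, the CPU workloads are stand-ins for real pipeline calls, and the untimed warm-up assumes compilation happens lazily on the first call(s).

```python
import statistics
import time
from typing import Callable


def time_pipeline(run: Callable[[], object], warmup: int = 2, iters: int = 5) -> float:
    """Median wall-clock seconds per call of `run`, after `warmup` untimed calls."""
    if iters < 1:
        raise ValueError("iters must be at least 1")
    # Untimed warm-up: if compilation happens lazily on the first call
    # (assumed here), it would otherwise dominate the measurement.
    for _ in range(warmup):
        run()
    samples = []
    for _ in range(iters):
        start = time.perf_counter()
        run()
        samples.append(time.perf_counter() - start)
    return statistics.median(samples)


def speedup(baseline_s: float, optimized_s: float) -> float:
    """Ratio of baseline to optimized latency; 3.0 corresponds to "3x faster"."""
    if optimized_s <= 0:
        raise ValueError("optimized_s must be positive")
    return baseline_s / optimized_s


if __name__ == "__main__":
    # Stand-in CPU workloads; swap in your baseline and accelerated pipeline calls.
    def baseline():
        return sum(i * i for i in range(300_000))

    def accelerated():
        return sum(i * i for i in range(100_000))

    t_base = time_pipeline(baseline)
    t_fast = time_pipeline(accelerated)
    print(f"baseline {t_base:.4f}s, accelerated {t_fast:.4f}s, "
          f"speedup {speedup(t_base, t_fast):.2f}x")
```

If the callable can return before its GPU work has finished (for example, it leaves results as device tensors), call `torch.cuda.synchronize()` at the end of it so the timer measures completed kernels rather than asynchronous launches.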

OneDiff supports acceleration for state-of-the-art (SOTA) models, at the following support levels:

- stable: released for public use, with long-term support;

- beta: released for professional use, with long-term support;

- alpha: early release for expert use, under active development.

| AIGC Type | Models | HF diffusers (Community) | HF diffusers (Enterprise) | ComfyUI (Community) | ComfyUI (Enterprise) | SD web UI (Community) | SD web UI (Enterprise) |
|---|---|---|---|---|---|---|---|
| Image | SD 1.5 | stable | stable | stable | stable | beta | beta |
| Image | SD 2.1 | stable | stable | stable | stable | beta | beta |
| Image | SDXL | stable | stable | stable | stable | beta | beta |
| Image | LoRA | stable | stable | beta | | | |
| Image | ControlNet | stable | stable | | | | |
| Image | SDXL Turbo | stable | stable | | | | |
| Image | LCM | stable | stable | | | | |
| Image | SDXL DeepCache | stable | beta | stable | beta | | |
| Image | InstantID | stable | stable | | | | |
| Video | SVD (Stable Video Diffusion) | stable | beta | stable | beta | | |
| Video | SVD DeepCache | stable | beta | stable | beta | | |

Note: The Enterprise Edition contains all the functionality of the Community Edition.

Compile and save the compiled result offline, then load it online for serving:

- Save and load the compiled graph.

- Change the device of the compiled graph for multi-process serving.
  - Compile on one device (such as device 0), then use the compiled result on other devices (such as devices 1 to 7).
  - This is for special scenarios and is available in the Enterprise Edition.
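The offline-compile, online-load workflow above can be pictured with plain Python. This is a minimal sketch of the pattern only: `CompiledGraph`, `compile_graph`, `save_artifact`, and `load_artifact_on` are hypothetical stand-ins built on `pickle`, not OneDiff's save/load API. Real compiled graphs are device-bound, and moving them across devices is the Enterprise feature described above.

```python
import pickle
import tempfile
from dataclasses import dataclass, replace
from pathlib import Path


@dataclass(frozen=True)
class CompiledGraph:
    """Hypothetical stand-in for a compiled graph (not a OneDiff/OneFlow type)."""
    model_name: str
    device: int
    payload: bytes  # placeholder for the expensive compilation output


def compile_graph(model_name: str, device: int) -> CompiledGraph:
    # Placeholder for the slow, one-off compilation done offline.
    return CompiledGraph(model_name, device, payload=f"graph:{model_name}".encode())


def save_artifact(graph: CompiledGraph, path: Path) -> None:
    path.write_bytes(pickle.dumps(graph))


def load_artifact_on(path: Path, device: int) -> CompiledGraph:
    # Reuse the saved result and rebind it to this worker's device
    # instead of compiling again on every device.
    # Only unpickle files you created yourself: pickle can run arbitrary code.
    return replace(pickle.loads(path.read_bytes()), device=device)


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        path = Path(tmp) / "unet.graph"
        save_artifact(compile_graph("unet", device=0), path)  # offline, on device 0
        workers = [load_artifact_on(path, device=d) for d in range(1, 8)]  # online, devices 1 to 7
        print([w.device for w in workers])  # [1, 2, 3, 4, 5, 6, 7]
```

The design point is that compilation cost is paid once, while each serving process pays only a load.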

If you need enterprise-level support for your system or business, you can:

- subscribe to the Enterprise Edition online and get full support once your order is placed: https://siliconflow.com/onediff.html

- or send an email to contact@siliconflow.com and tell us about your use case, deployment scale, and requirements.

The OneDiff Enterprise Edition can be subscribed to for a single month and a single GPU, at low cost.

| | OneDiff Enterprise Edition | OneDiff Community Edition |
|---|---|---|
| Multiple resolutions | Yes (no time cost in most cases) | Yes (no time cost in most cases) |
| More extreme, dedicated optimization for the most-used models (usually another 20% to 100% performance gain) | Yes | |
| Tools for specific (very large-scale) server-side deployment | Yes | |
| Technical support for deployment | High-priority support | Community |
| Access to experimental features | Yes | |

OS and hardware requirements:

- Linux
  - If you want to use OneDiff on Windows, please use it under WSL.
- NVIDIA GPUs

NOTE: We update OneFlow frequently for OneDiff, so please install OneFlow using the commands below.

- CUDA 11.8
  - For NA/EU users: `python3 -m pip install -U --pre oneflow -f https://github.com/siliconflow/oneflow_releases/releases/expanded_assets/community_cu118`
  - For CN users: `python3 -m pip install -U --pre oneflow -f https://oneflow-pro.oss-cn-beijing.aliyuncs.com/branch/community/cu118`

OneFlow packages for other CUDA versions:

- CUDA 12.1
  - For NA/EU users: `python3 -m pip install -U --pre oneflow -f https://github.com/siliconflow/oneflow_releases/releases/expanded_assets/community_cu121`
  - For CN users: `python3 -m pip install -U --pre oneflow -f https://oneflow-pro.oss-cn-beijing.aliyuncs.com/branch/community/cu121`

- CUDA 12.2
  - For NA/EU users: `python3 -m pip install -U --pre oneflow -f https://github.com/siliconflow/oneflow_releases/releases/expanded_assets/community_cu122`
  - For CN users: `python3 -m pip install -U --pre oneflow -f https://oneflow-pro.oss-cn-beijing.aliyuncs.com/branch/community/cu122`
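If you script environment setup, the OneFlow install command can be derived from the CUDA version and region. A minimal sketch, assuming only the three CUDA builds (11.8, 12.1, 12.2) and two mirrors listed above; `cuda_tag` and `oneflow_install_cmd` are hypothetical helpers, not part of OneFlow or OneDiff.

```python
import shlex
from typing import List

# Index URL templates copied from the install commands above.
_INDEX_URLS = {
    "na_eu": "https://github.com/siliconflow/oneflow_releases/releases/expanded_assets/community_{cuda}",
    "cn": "https://oneflow-pro.oss-cn-beijing.aliyuncs.com/branch/community/{cuda}",
}
# Only the CUDA builds listed above; others are assumed unavailable here.
_SUPPORTED_CUDA = {"cu118", "cu121", "cu122"}


def cuda_tag(version: str) -> str:
    """Turn a CUDA version such as "12.1" into the tag "cu121"."""
    major, minor = version.split(".")[:2]
    return f"cu{major}{minor}"


def oneflow_install_cmd(cuda_version: str, region: str = "na_eu") -> List[str]:
    """Build the pip command (as an argv list) for the given CUDA version and region."""
    if region not in _INDEX_URLS:
        raise ValueError(f"unknown region {region!r}; expected one of {sorted(_INDEX_URLS)}")
    tag = cuda_tag(cuda_version)
    if tag not in _SUPPORTED_CUDA:
        raise ValueError(f"no OneFlow index URL listed for CUDA {cuda_version}")
    url = _INDEX_URLS[region].format(cuda=tag)
    return ["python3", "-m", "pip", "install", "-U", "--pre", "oneflow", "-f", url]


if __name__ == "__main__":
    print(shlex.join(oneflow_install_cmd("12.1")))
```

Returning an argv list rather than a string lets you pass it straight to `subprocess.run` without shell quoting issues.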

Install PyTorch, transformers, and diffusers. Note: The command below pins specific versions of transformers and diffusers; you can choose the latest versions you want instead.

`python3 -m pip install "torch" "transformers==4.27.1" "diffusers[torch]==0.19.3"`

Install OneDiff:

- From PyPI: `python3 -m pip install --pre onediff`

- From source: `git clone https://github.com/siliconflow/onediff.git`, then `cd onediff && python3 -m pip install -e .`

NOTE: If you intend to use the plugins for ComfyUI or Stable Diffusion web UI, we highly recommend installing OneDiff from source rather than from PyPI, because you'll need to manually copy (or symlink) the relevant code into the extension folder of these UIs/libs.

Log in to Hugging Face:

`python3 -m pip install huggingface_hub`

`~/.local/bin/huggingface-cli login`
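Before running the examples, you can confirm that the packages installed above are present by querying their distribution metadata with the standard library. This sketch only checks installation, not that OneFlow can see your GPU; the `PACKAGES` list is an assumption based on the commands above, and `installed_version`/`report` are hypothetical helpers.

```python
from importlib import metadata
from typing import Dict, Iterable, Optional

# Distributions installed by the commands above (assumed list; adjust as needed).
PACKAGES = ("oneflow", "onediff", "torch", "transformers", "diffusers", "huggingface_hub")


def installed_version(dist_name: str) -> Optional[str]:
    """Installed version of a distribution, or None if it is not installed."""
    try:
        return metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        return None


def report(packages: Iterable[str] = PACKAGES) -> Dict[str, Optional[str]]:
    """Map each distribution name to its installed version (None if missing)."""
    return {name: installed_version(name) for name in packages}


if __name__ == "__main__":
    for name, version in report().items():
        print(f"{name:16} {version or 'NOT INSTALLED'}")
```

Comparing the printed transformers and diffusers versions with the pins above is a quick way to spot an unexpected upgrade.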

Release steps:

- Run the examples to check that everything works: `cd onediff_diffusers_extensions && python3 examples/text_to_image.py`

- Bump the version in these files: `.github/workflows/pub.yml` and `src/onediff/__init__.py`

- Install the build package: `python3 -m pip install build`

- Build the wheel: `rm -rf dist && python3 -m build`

- Upload to PyPI: `twine upload dist/*`
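The version-bump step is easy to get wrong by hand. A minimal sketch that rewrites the version in `src/onediff/__init__.py`, assuming the file declares it as `__version__ = "..."` (check the actual file before relying on this). `bump_version_text` and `bump_version_file` are hypothetical helpers, and `.github/workflows/pub.yml` still has to be edited separately because its format is not shown here.

```python
import re
import sys
from pathlib import Path

# Assumption: the version is declared as  __version__ = "X.Y.Z"  (single or double quotes).
_VERSION_RE = re.compile(r'^(__version__\s*=\s*)(["\'])([^"\']*)\2', re.MULTILINE)


def bump_version_text(text: str, new_version: str) -> str:
    """Return `text` with its single __version__ assignment set to `new_version`."""
    new_text, count = _VERSION_RE.subn(
        lambda m: f"{m.group(1)}{m.group(2)}{new_version}{m.group(2)}", text
    )
    if count != 1:
        raise ValueError(f"expected exactly one __version__ line, found {count}")
    return new_text


def bump_version_file(path: Path, new_version: str) -> None:
    """Rewrite the version in `path` in place."""
    path.write_text(bump_version_text(path.read_text(), new_version))


if __name__ == "__main__" and len(sys.argv) == 3:
    # Usage: python3 bump_version.py src/onediff/__init__.py <new-version>
    bump_version_file(Path(sys.argv[1]), sys.argv[2])
```

Failing loudly when zero or several `__version__` lines match avoids silently publishing a wheel with a stale version.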