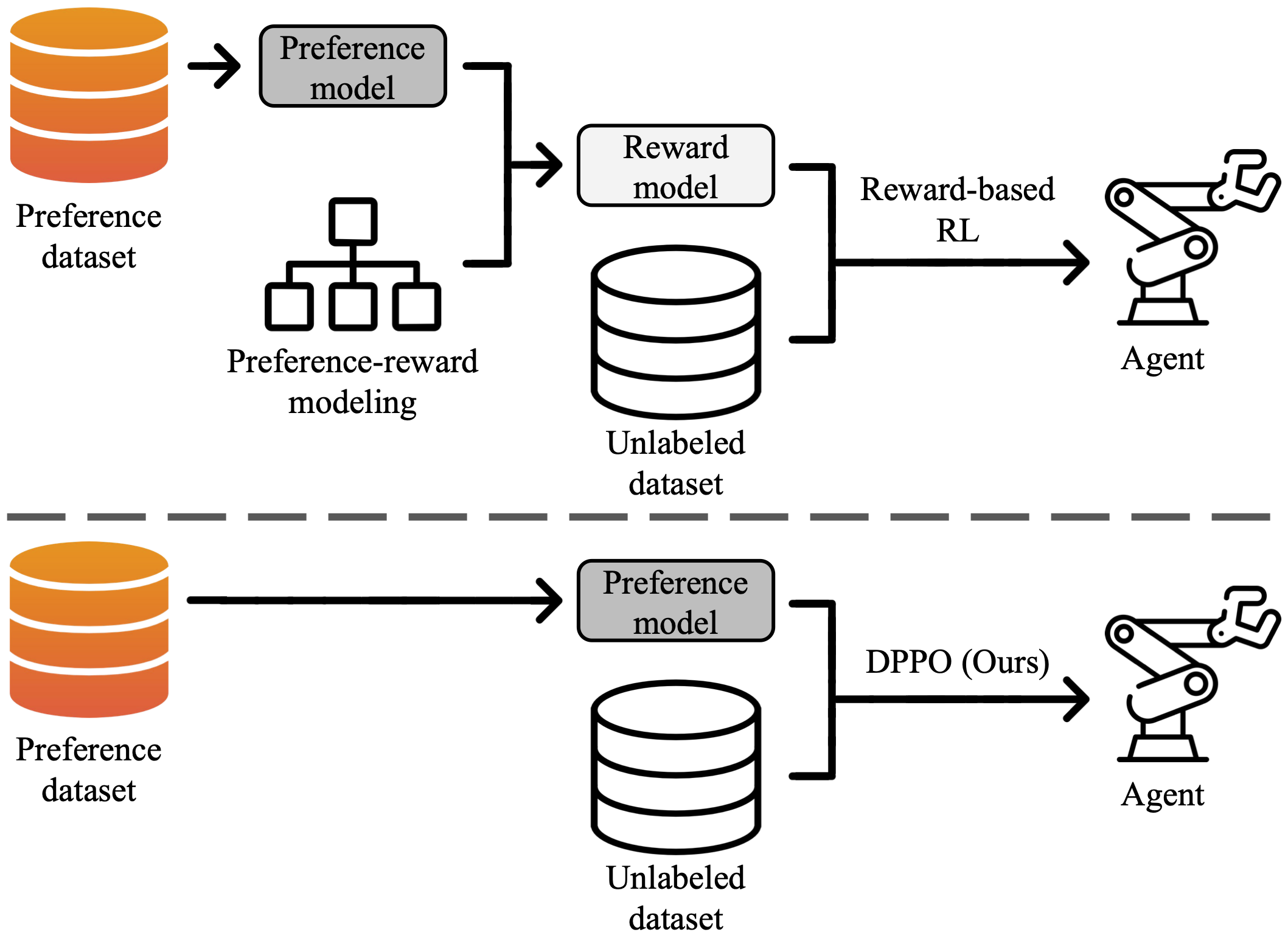

Official implementation of Direct Preference-based Policy Optimization without Reward Modeling, NeurIPS 2023.

Note: Our code was tested on Linux with CUDA 12. If your system differs (e.g., CUDA 11), you may need to modify requirements.txt and the installation commands below.

To install the required packages using Conda, follow the steps below:

conda create -n dppo python=3.8

conda activate dppo

conda install -c "nvidia/label/cuda-12.3.0" cuda-nvcc

pip install -r requirements.txt -f https://storage.googleapis.com/jax-releases/jax_cuda_releases.html
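After the installation steps above, it can help to confirm which backend JAX actually picked up. The snippet below is a minimal sketch and not part of this repository: the helper name `describe_jax_backend` is hypothetical, and it only assumes that `requirements.txt` installs `jax`. It reports the default backend and visible devices, or says that JAX is missing.

```python
def describe_jax_backend() -> str:
    """Return a one-line summary of the backend JAX would use.

    Hypothetical helper for illustration; it is not shipped with the repository.
    """
    try:
        import jax  # imported lazily so the check still runs if JAX is absent
    except ImportError:
        return "jax is not installed in this environment"

    # jax.default_backend() returns e.g. "cpu" or "gpu";
    # jax.devices() lists the devices of that backend.
    device_names = [str(device) for device in jax.devices()]
    return f"backend={jax.default_backend()} devices={device_names}"


if __name__ == "__main__":
    print(describe_jax_backend())
```

If the reported backend is `cpu` on a machine with a GPU, the CUDA-enabled JAX wheel was probably not installed, and the CUDA version note above is the first thing to check.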

To train the preference model, run:

python -m JaxPref.new_preference_reward_main --env_name [ENV_NAME] --seed [SEED] --transformer.smooth_w [NU] --smooth_sigma [M]

To train the policy, run:

python train.py --env_name [ENV_NAME] --seed [SEED] --transformer.smooth_w [NU] --smooth_sigma [M] --dropout [DROPOUT] --lambd [LAMBDA]
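When running several seeds, it can be convenient to generate the `train.py` command lines programmatically. The following is a sketch under stated assumptions: the flag names are copied from the command above, while `build_train_command`, the `ENV_NAME` string, and all numeric values are hypothetical placeholders, not recommended settings.

```python
import shlex


def build_train_command(env_name, seed, smooth_w, smooth_sigma, dropout, lambd):
    """Assemble the train.py invocation shown above as an argument list.

    Hypothetical helper; only the flag names come from the README.
    """
    return [
        "python", "train.py",
        "--env_name", str(env_name),
        "--seed", str(seed),
        "--transformer.smooth_w", str(smooth_w),
        "--smooth_sigma", str(smooth_sigma),
        "--dropout", str(dropout),
        "--lambd", str(lambd),
    ]


if __name__ == "__main__":
    # Placeholder values for illustration only; substitute your own settings.
    for seed in range(3):
        cmd = build_train_command("ENV_NAME", seed, 1.0, 1.0, 0.1, 0.5)
        # Dry run: print each command in a shell-safe form instead of executing it.
        print(shlex.join(cmd))
```

To launch the runs instead of printing them, each list can be passed to `subprocess.run(cmd, check=True)` from the repository root.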

@inproceedings{an2023dppo,
  title={Direct Preference-based Policy Optimization without Reward Modeling},
  author={Gaon An and Junhyeok Lee and Xingdong Zuo and Norio Kosaka and Kyung-Min Kim and Hyun Oh Song},
  booktitle={Neural Information Processing Systems},
  year={2023}
}

Our code is based on PreferenceTransformer, which is itself based on FlaxModels and IQL.