The starting point of this code was taken from https://github.com/gdmarmerola/interactive-intro-rl.

In main.py there should be everything to run the tests you could want.

You need to only change bandit_matrix and values in order to define a parametric bandit problem. The values represent the values for bandit_matrix is an np array of arrays. Each array represents one bandit, and its values the parameters of that banit. For instance:

bandit_matrix = np.array([[1, 0], [1, -1], [0, 1], [-0.4, 1.5]])

values = [0.6, 0.5]represents

Note that the length of each array in bandit_matrix should be equal to the length of bandit_probs, i.e. the dimension of the value space.

The other examples from the paper are

bandit_matrix = np.array(

[

[1.4, 0.2, -1, 0.6],

[0.2, -0.5, 1.2, 0.2],

[0.8, -0.1, 0, 1],

[0.9, 0, 0, -0.5],

]

)

values = [0.3, 0.2, 0.3, 0.4]for

bandit_matrix = np.array(

[

[1, 1, 1, 0, 0],

[1, 1, 0, 1, 0],

[1, 1, 0, 0, 1],

[1, 0, 1, 1, 0],

[1, 0, 1, 0, 1],

[1, 0, 0, 1, 1],

[0, 1, 1, 1, 0],

[0, 1, 1, 0, 1],

[0, 1, 0, 1, 1],

[0, 0, 1, 1, 1],

]

)

values = [0.3, 0.3, 0.15, 0.1, 0.05]for

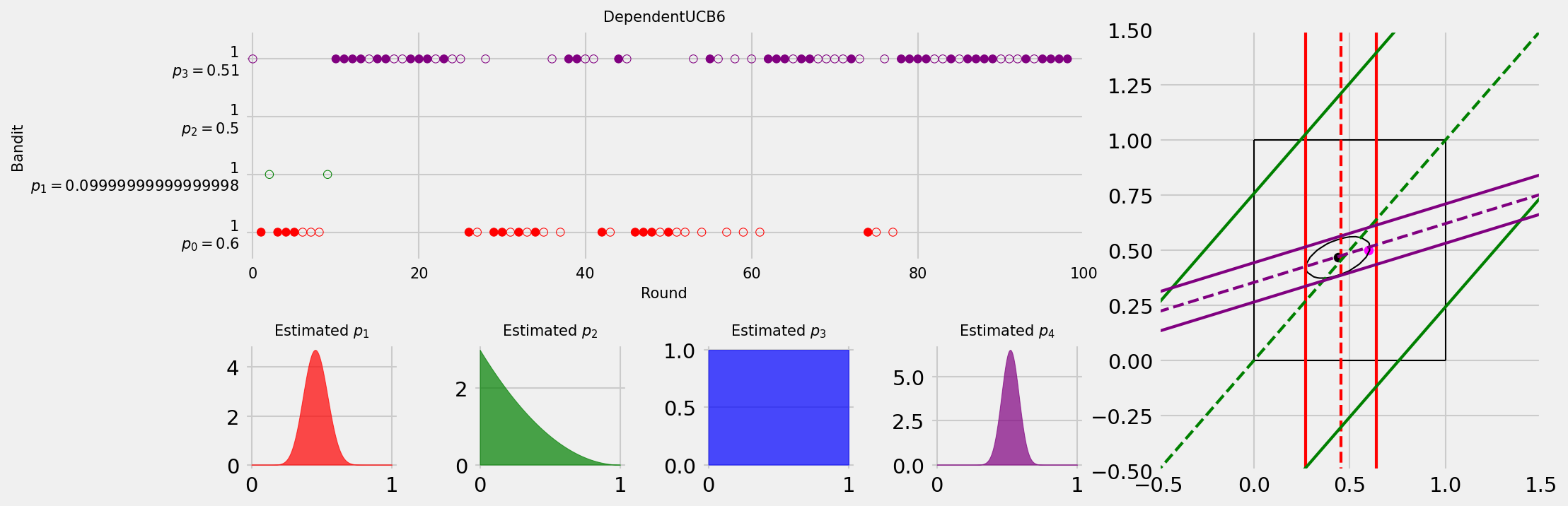

With plot.plot_MAB_experiment you can plot one run of a mab experiment as a video or frame like this:

def plot_MAB_experiment(

decision_policy,

mab,

N_DRAWS,

bandit_probs,

plot_title,

graph=True,

video=False,

column_vecs=None,

theta_values=None,

alpha=None,

)Arguments:

-

decision_policy(k_array, reward_array, n_bandits)wherek_arrayis a list of bandits where each bandit is a list of zeroes and ones whether that bandit was picked that round in the history, andreward_arrayis the same but the reward for that bandit in each round, andn_banditsis the number of bandits. It should return what bandit to play. -

mabthe multi-armed bandit problem likeclass MABinmain.py. -

N_DRAWSthe number of draws -

plot_titletitle -

graphwhether to show the graph shown on the right side of the image. -

videowhether to show a video where each round is a frame, or just output the last frame. -

column_vecscolumn vectors as forLinUCBin order to make the ellipsoid work for the graph -

theta_valuesthe actual value for$\theta$ to be used for the graph. -

alphathe$\alpha$ for the ellipsoid from LinUCB in the graph.

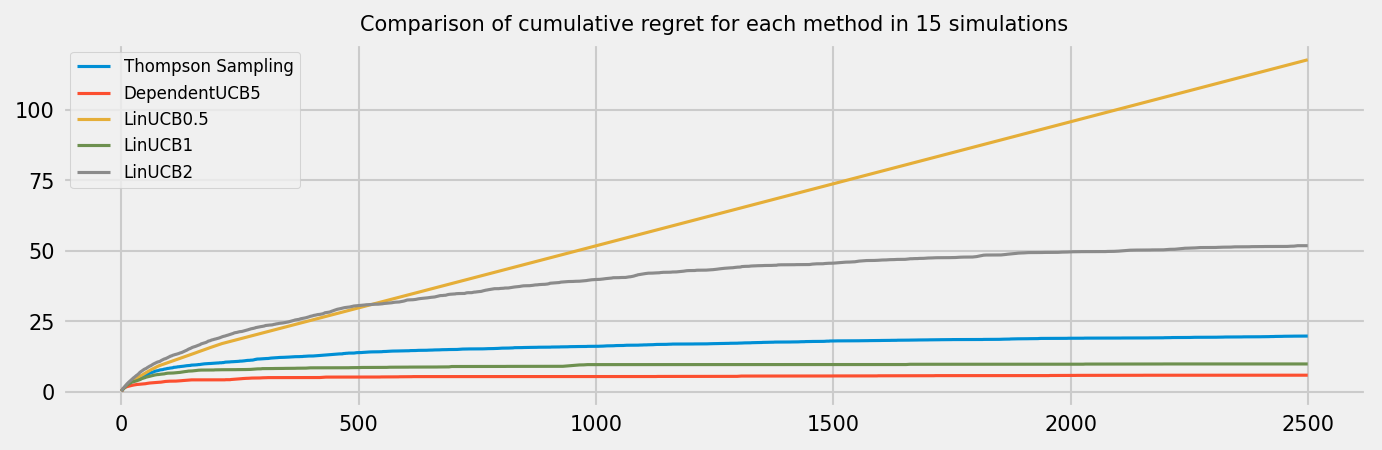

Simulates some number of runs of multiple policies on a certain MAB. This can then be input into plot_simulation to plot this:

def simulation(

mab,

policies,

n_rounds=1000,

n_simulations=1000,

uncertainty=None,

):Returns a dictionary which has for every policy:

k_arrayfor every bandit for every round number of times that bandit was selected that roundreward_arrayfor every bandit for every round number of times that bandit gave a reward in that roundregret_arrayfor every simulation for every round the regret

def plot_simulation(

results_dict,

n_bandits,

plot_reward=False

):Arguments

mabthe multi-armed bandit problem likeclass MABinmain.py.policiesa dictionary of policies to run on the bandit problem.n_roundsthe number of roundsn_simulationsthe number of simulations with eachn_roundsrounds.plot_rewardwhether to plot the cumulative reward as well as the cumulative regret.uncertaintyif this is given it adds some uncertainty 'noise'. It adds to each bandit probability a certain error which is drawn from a uniform distribution between -uncertainty and +uncertainty. These errors are added at the start and then all policies are run on the same probabilities for all simulations.

-

TSPolicy- Thompson Sampling -

eGreedyPolicy- epsilon greedy RandomPolicy-

UCBPolicy. UCB1 from Auer2002 "Finite-time Analysis of the Multiarmed Bandit Problem" -

UCBPolicy2. UCB2 from the same paper. -

UCBPolicyTuned. UCB1-Tuned from the same paper. You need to provide the argumentsvariablesandbandit_expressionsmade from the packagecvxpy,main.pyhandles this. This is the same for the other dependent policies. -

UCBPolicyB. Variant that rounds to 3 decimals and picks random on tie -

UCBPolicyC. Additionally to UCBPolicyB also initially max probability upper bounds at 1. -

UCBDependent. Dependent version of UCB1. -

UCBDependentB. Dependent version of UCBPolicyB. -

UCBPolicy2Dependent. Dependent version of UCB2. -

UCBPolicyTunedDependent. Dependent version of UCB1-Tuned. -

LinUCB. LinUCB from the paper. Arguments are$\alpha$ and feature vectors, which crucially need to be np column vectors. This is handled inmain.py. -

eGreedyPolicyDecaying. Epsilon greedy but instead of epsilon, you provide epsilon function, which for every round has to give a certain epsilon.lin_decaying(start,end,steps)inmain.pyprovides such a function that starts epsilon atstart, and moves towardsendinstepssteps. -

eGreedyPolicyDecayingUCBTunedDependent. The same as eGreedyPolicyDecaying except that if the policy does not explore but rather exploit, it uses the UCBTunedDependent poliicy for this. -

DerivativeExplore. Same as eGreedyPolicyDecayingUCBTunedDependent except that when the policy explores it uses the derivatives of the linear program to determine which arm would be most effective to draw in order to gain the most information about the system.