If you are interested in a step-by-step explanation and review, check out the post for this repo at www.startdataengineering.com.

- docker (also make sure you have docker-compose), which we will use to run Airflow locally

- pgcli, to connect to our databases (Postgres and Redshift)

- AWS account to set up our cloud components

- AWS Components to start the required services
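Before going further, you can confirm the command-line tools are installed with a small Python check. This is a sketch, not part of the repo. The executable names are assumptions: newer Docker releases, for example, ship Compose as the `docker compose` plugin rather than a separate `docker-compose` binary. `aws` is included because the AWS CLI is needed later in the setup.

```python
import shutil

# Executables this README relies on (assumed names; adjust for your install).
REQUIRED_TOOLS = ["docker", "docker-compose", "pgcli", "aws"]


def missing_tools(tools=REQUIRED_TOOLS):
    """Return the tools from `tools` that cannot be found on PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


if __name__ == "__main__":
    missing = missing_tools()
    if missing:
        print("Missing tools:", ", ".join(missing))
    else:
        print("All prerequisites found.")
```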

By the end of the setup you should have (or know how to get):

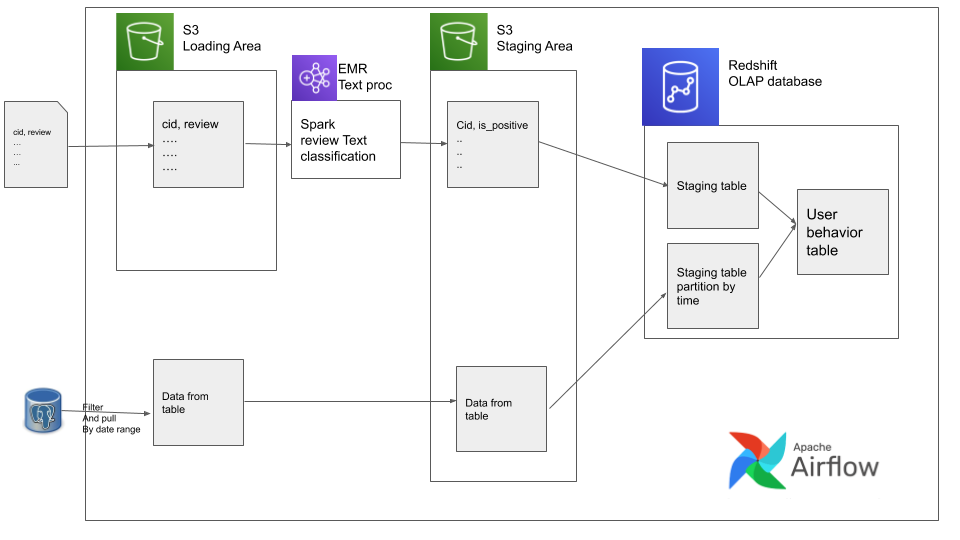

- aws cli configured with keys and region
- pem or ppk file saved locally with correct permissions
- ARN from your IAM role for Redshift
- S3 bucket
- EMR ID from the summary page
- Redshift host, port, database, username, password
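Several of these values get pasted into different places later, so it can help to keep them together. Here is a minimal sketch (not part of the repo) that flags any value still missing. Every key name is hypothetical. The AWS CLI configuration is not checked here because it lives in the CLI's own config files.

```python
# Hypothetical key names for the values collected during setup.
REQUIRED_SETUP_KEYS = [
    "pem_file_path",      # path to the pem/ppk key file
    "iam_role_arn",       # ARN of the IAM role used by Redshift
    "s3_bucket",          # S3 bucket name
    "emr_id",             # EMR cluster ID from the summary page
    "redshift_host",
    "redshift_port",
    "redshift_database",
    "redshift_user",
    "redshift_password",
]


def missing_setup_values(config):
    """Return the required keys that are absent or empty in `config`."""
    return [key for key in REQUIRED_SETUP_KEYS if not config.get(key)]


if __name__ == "__main__":
    example = {"s3_bucket": "example-bucket", "redshift_port": 5439}
    print("Still missing:", missing_setup_values(example))
```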

Data is available at data. Place this folder within the setup folder as `setup/raw_input_data/`.
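To confirm the data landed in the expected place, you can run a quick check from the project base directory. This is a sketch that assumes the data files sit directly inside `setup/raw_input_data/`. The function name is hypothetical, and it only lists top-level files, not subfolders.

```python
from pathlib import Path


def list_raw_input_files(project_root="."):
    """List files directly inside <project_root>/setup/raw_input_data/.

    Returns None if the folder does not exist, otherwise a sorted
    (possibly empty) list of file names.
    """
    data_dir = Path(project_root) / "setup" / "raw_input_data"
    if not data_dir.is_dir():
        return None
    return sorted(p.name for p in data_dir.iterdir() if p.is_file())


if __name__ == "__main__":
    files = list_raw_input_files()
    if files is None:
        print("setup/raw_input_data/ not found; place the data folder there first.")
    elif not files:
        print("setup/raw_input_data/ is empty.")
    else:
        print("Found:", ", ".join(files))
```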

In your local terminal, run the following from your project base directory:

`docker-compose -f docker-compose-LocalExecutor.yml up -d`

Then wait a couple of seconds and sign into the Airflow Postgres metadata database (since our data is small, we pretend that our metadata database is also our OLTP datastore):

`pgcli -h localhost -p 5432 -U airflow`

and run the script at `setup/postgres/create_user_purchase.sql`.
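Rather than waiting a fixed couple of seconds after `docker-compose up -d`, you could poll the Postgres port until it accepts connections. This is a minimal sketch, not part of the repo. A successful TCP connect only shows the server is listening. It does not prove Postgres has finished initialising, so pgcli may still need a retry.

```python
import socket
import time


def wait_for_port(host, port, timeout=30.0, interval=1.0):
    """Poll until a TCP connection to host:port succeeds or `timeout` seconds pass.

    Returns True once the port accepts a connection, False on timeout.
    """
    deadline = time.monotonic() + timeout
    while True:
        try:
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)


if __name__ == "__main__":
    # 5432 is the Postgres port that pgcli connects to in this step.
    print("postgres reachable:", wait_for_port("localhost", 5432))
```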

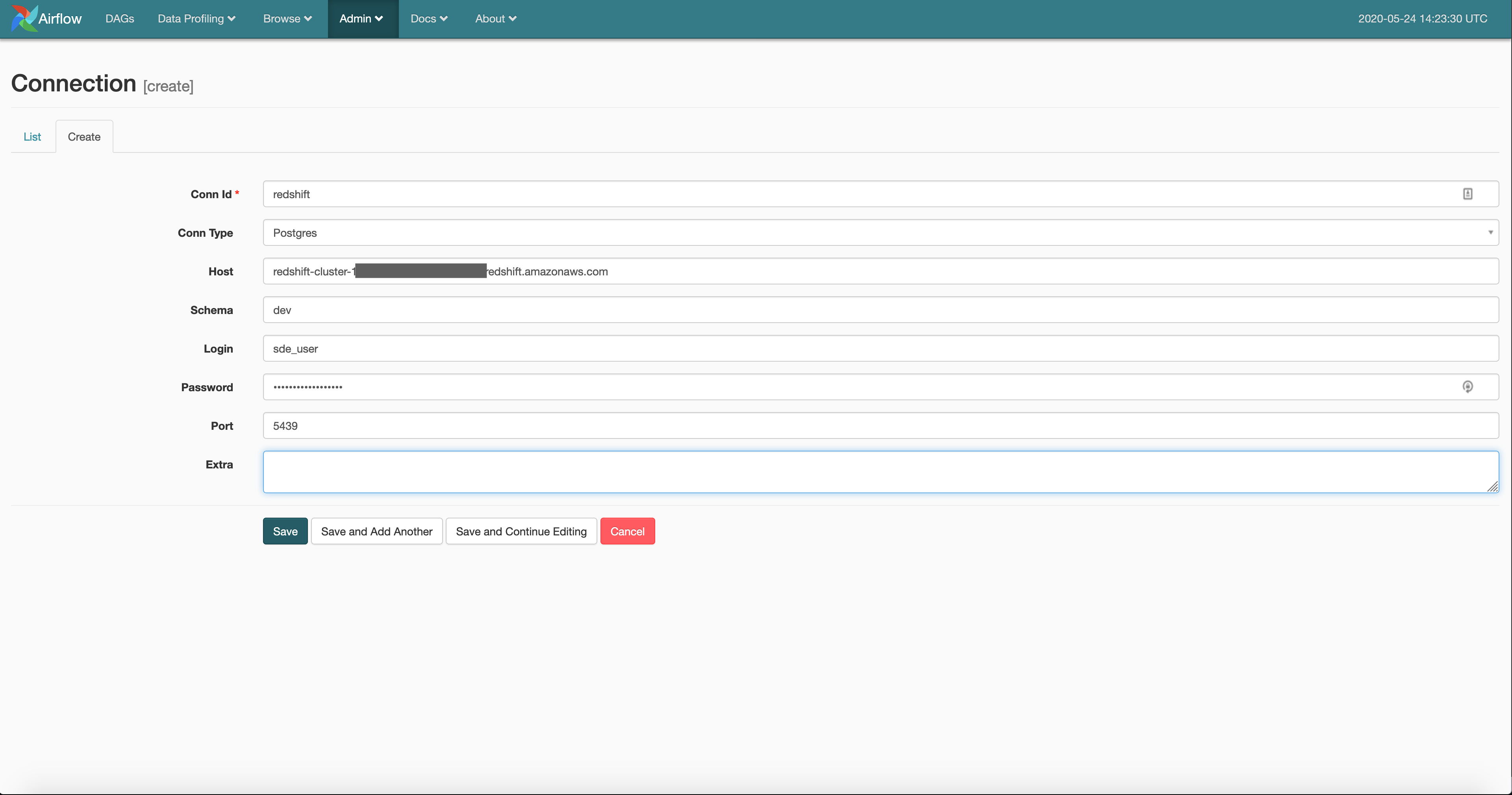

Get the Redshift cluster connection details and make sure you have a Spectrum IAM role associated with it (as shown in AWS Components).
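The Redshift console typically shows the cluster endpoint as one string combining host, port and database. This sketch assumes that `host:port/database` form and splits the string into the pieces the pgcli command needs. The function names and the example endpoint are made up for illustration.

```python
def parse_redshift_endpoint(endpoint):
    """Split an endpoint of the form 'host:port/database' into its parts."""
    host_port, _, database = endpoint.partition("/")
    host, _, port = host_port.rpartition(":")
    if not host or not port.isdigit() or not database:
        raise ValueError(f"Unexpected endpoint format: {endpoint!r}")
    return {"host": host, "port": int(port), "database": database}


def pgcli_command(endpoint, user):
    """Build the pgcli argument list for logging into Redshift."""
    parts = parse_redshift_endpoint(endpoint)
    return ["pgcli", "-h", parts["host"], "-p", str(parts["port"]),
            "-d", parts["database"], "-U", user]


if __name__ == "__main__":
    # Made-up endpoint; paste your own from the cluster's summary page.
    print(" ".join(pgcli_command(
        "examplecluster.abc123.us-east-1.redshift.amazonaws.com:5439/dev",
        "awsuser",
    )))
```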

Log into Redshift using pgcli (type the password when prompted):

`pgcli -h <your-redshift-host> -p 5439 -d <your-database> -U <your-redshift-user>`

In the Redshift connection, run the script at `setup/redshift/create_external_schema.sql` after replacing the IAM role ARN and S3 bucket in it with your own.
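To avoid pasting a malformed ARN or an `s3://` prefix into the SQL, you could fill the values in programmatically. This is a sketch only. The placeholder tokens and the example template are hypothetical and are not the contents of `create_external_schema.sql`; swap in whatever markers the real file uses. The template uses standard Redshift Spectrum `CREATE EXTERNAL SCHEMA` / `CREATE EXTERNAL TABLE` syntax with made-up schema, database, table and path names.

```python
import re

# Shape of an IAM role ARN: arn:aws:iam::<12-digit account id>:role/<optional path/><role name>
IAM_ROLE_ARN_RE = re.compile(r"^arn:aws:iam::\d{12}:role/[\w+=,.@/-]+$")

# Hypothetical placeholder tokens.
ARN_PLACEHOLDER = "<your-iam-role-arn>"
BUCKET_PLACEHOLDER = "<your-s3-bucket>"

# Illustrative template only; all names are made up.
EXAMPLE_TEMPLATE = """
CREATE EXTERNAL SCHEMA spectrum
FROM DATA CATALOG DATABASE 'spectrumdb'
IAM_ROLE '<your-iam-role-arn>'
CREATE EXTERNAL DATABASE IF NOT EXISTS;

CREATE EXTERNAL TABLE spectrum.example_table (id INT)
STORED AS PARQUET
LOCATION 's3://<your-s3-bucket>/example_table/';
"""


def fill_external_schema_sql(sql_text, iam_role_arn, s3_bucket):
    """Return `sql_text` with the ARN and bucket placeholders replaced, after basic checks."""
    if not IAM_ROLE_ARN_RE.match(iam_role_arn):
        raise ValueError(f"Not an IAM role ARN: {iam_role_arn!r}")
    if not s3_bucket or "/" in s3_bucket:
        # Expect the bare bucket name, e.g. "my-bucket", not "s3://my-bucket/".
        raise ValueError(f"Expected a bare bucket name, got {s3_bucket!r}")
    return (sql_text.replace(ARN_PLACEHOLDER, iam_role_arn)
                    .replace(BUCKET_PLACEHOLDER, s3_bucket))


if __name__ == "__main__":
    print(fill_external_schema_sql(
        EXAMPLE_TEMPLATE,
        "arn:aws:iam::123456789012:role/example-spectrum-role",
        "example-bucket",
    ))
```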

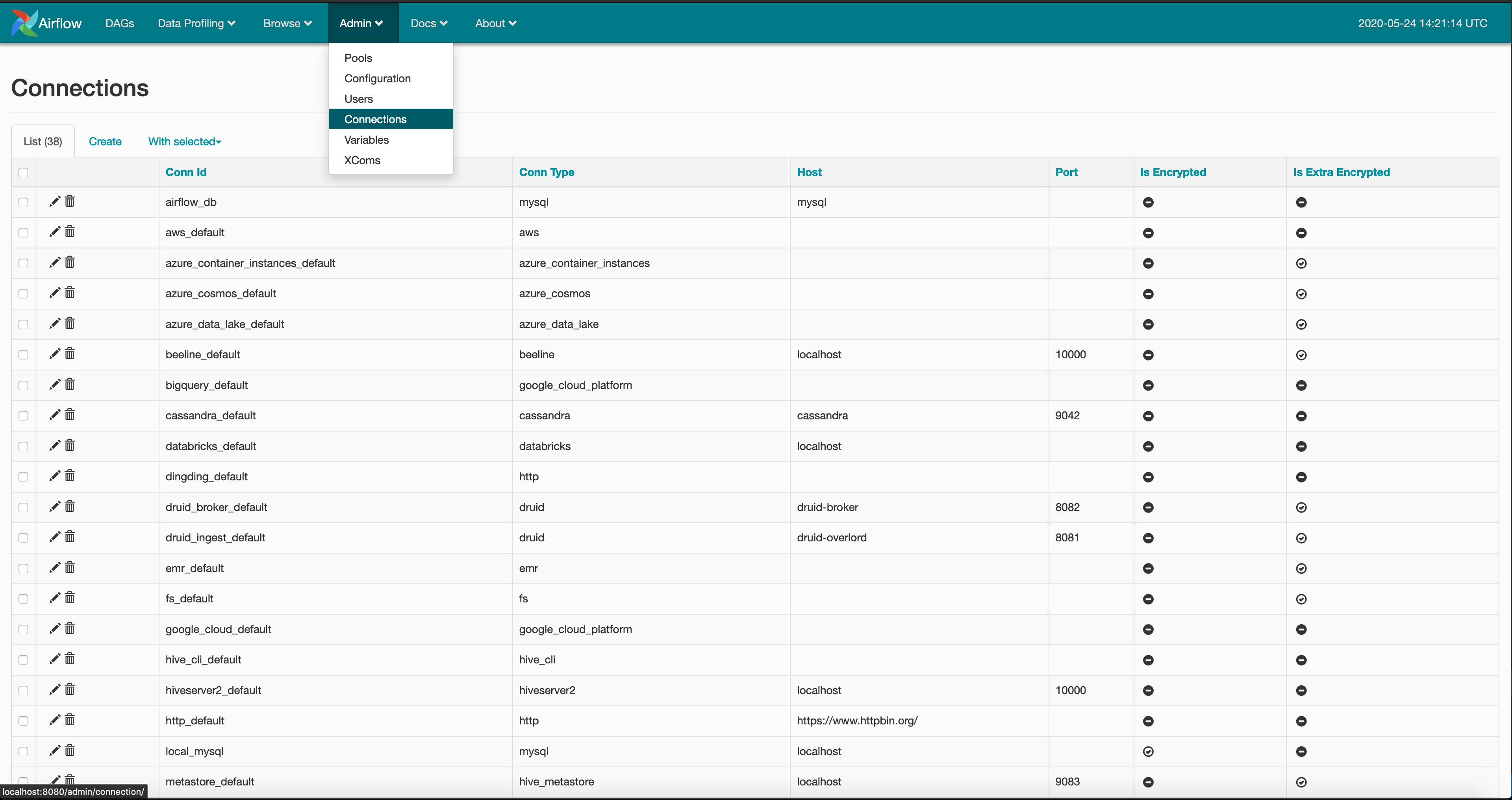

Log on to http://localhost:8080 to see the Airflow UI, then create a new connection named `redshift` for your Redshift cluster.
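As an alternative to the UI, Airflow can read a connection from an environment variable named `AIRFLOW_CONN_<CONN_ID>` whose value is a connection URI. The sketch below builds such a variable for the `redshift` connection. Two things are assumptions: the `postgres` URI scheme (Redshift speaks the Postgres wire protocol) and the example host and credentials. User, password and database are percent-encoded so characters like `@` or `/` do not break the URI.

```python
from urllib.parse import quote


def airflow_conn_env(conn_id, host, port, database, user, password, scheme="postgres"):
    """Return (env var name, URI) for defining an Airflow connection via environment variable."""
    uri = (f"{scheme}://{quote(user, safe='')}:{quote(password, safe='')}"
           f"@{host}:{port}/{quote(database, safe='')}")
    return f"AIRFLOW_CONN_{conn_id.upper()}", uri


if __name__ == "__main__":
    # Placeholder values; use your own Redshift details.
    name, uri = airflow_conn_env("redshift", "examplecluster.abc123.us-east-1.redshift.amazonaws.com",
                                 5439, "dev", "awsuser", "example-password")
    print(f"{name}={uri}")
```

If you go this route, the variable would need to be set on the Airflow containers. Keep in mind that this stores the password in plain text wherever you define it.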

Get your EMR ID from the EMR UI, then in dags/user_behaviour.py fill out your BUCKET_NAME and EMR_ID.
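The README has you hard-code `BUCKET_NAME` and `EMR_ID` in `dags/user_behaviour.py`. One hypothetical alternative is to read them from environment variables and sanity-check them, so a typo fails early instead of partway through a DAG run. This is a sketch, not the repo's code. The bucket check is a simplified version of the S3 naming rules, and the EMR check assumes cluster IDs of the form `j-` followed by uppercase letters and digits.

```python
import os
import re

# Simplified S3 bucket-name check: 3-63 chars of lowercase letters, digits,
# dots and hyphens, starting and ending with a letter or digit.
BUCKET_RE = re.compile(r"^[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]$")
# EMR cluster IDs look like "j-" followed by uppercase letters and digits.
EMR_ID_RE = re.compile(r"^j-[A-Z0-9]+$")


def load_dag_settings(env=None):
    """Read BUCKET_NAME and EMR_ID from `env` (default: os.environ) and validate them."""
    env = os.environ if env is None else env
    bucket = env.get("BUCKET_NAME", "")
    emr_id = env.get("EMR_ID", "")
    if not BUCKET_RE.match(bucket):
        raise ValueError(f"BUCKET_NAME looks invalid: {bucket!r}")
    if not EMR_ID_RE.match(emr_id):
        raise ValueError(f"EMR_ID looks invalid: {emr_id!r}")
    return bucket, emr_id
```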

Switch on your DAG. After it runs successfully, verify the presence of data in Redshift using:

`select * from public.user_behavior_metric limit 10;`

From your AWS console, stop (pause) the Redshift cluster and terminate the EMR cluster. Then, in your local terminal, run the following from your project base directory:

`docker-compose -f docker-compose-LocalExecutor.yml down`