MRTK is a Microsoft-driven open source project.

MRTK-Unity provides a set of foundational components and features to accelerate mixed reality (MR) app development in Unity. The latest release of MRTK (v2) supports HoloLens/HoloLens 2, Windows Mixed Reality, and OpenVR platforms.

- Provides the basic building blocks for Unity development on HoloLens, Windows Mixed Reality, and OpenVR.

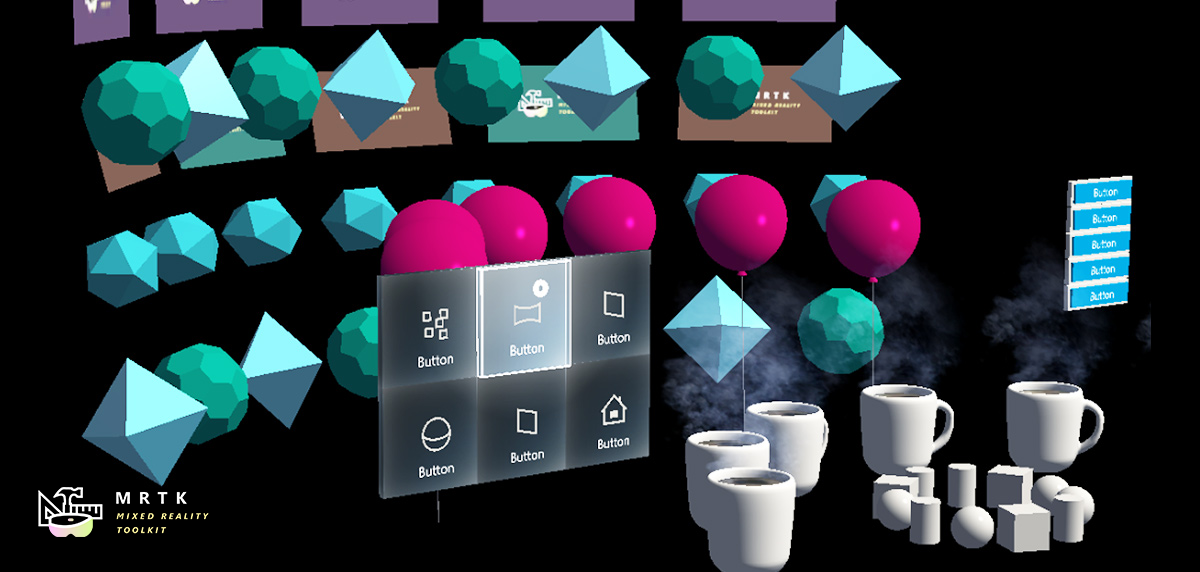

- Showcases UX best practices with UI controls that match Windows Mixed Reality and HoloLens Shell.

- Enables rapid prototyping via in-editor simulation that allows you to see changes immediately.

- Is extensible, giving developers the ability to swap out core components and extend the framework.

- Supports a wide range of platforms, including:
  - Microsoft HoloLens
  - Microsoft HoloLens 2
  - Windows Mixed Reality headsets
  - OpenVR headsets (HTC Vive / Oculus Rift)

| Branch | CI Status | Docs Status |
|---|---|---|
| `mrtk_development` | | |

| Windows SDK 18362+ | Unity 2018.4.x | Visual Studio 2017 | Simulator (optional) |
|---|---|---|---|
| To build apps with MRTK v2, you need the Windows 10 May 2019 Update SDK. To run apps for Windows Mixed Reality immersive headsets, you need the Windows 10 Fall Creators Update. | The Unity 3D engine provides support for building mixed reality projects in Windows 10. | Visual Studio is used for code editing, deploying, and building UWP app packages. | The emulators let you test your app without the device, in a simulated environment. |

Please check out the Getting Started Guide.

Find this README, other documentation articles, and the MRTK API reference on our MRTK Dev Portal on github.io.

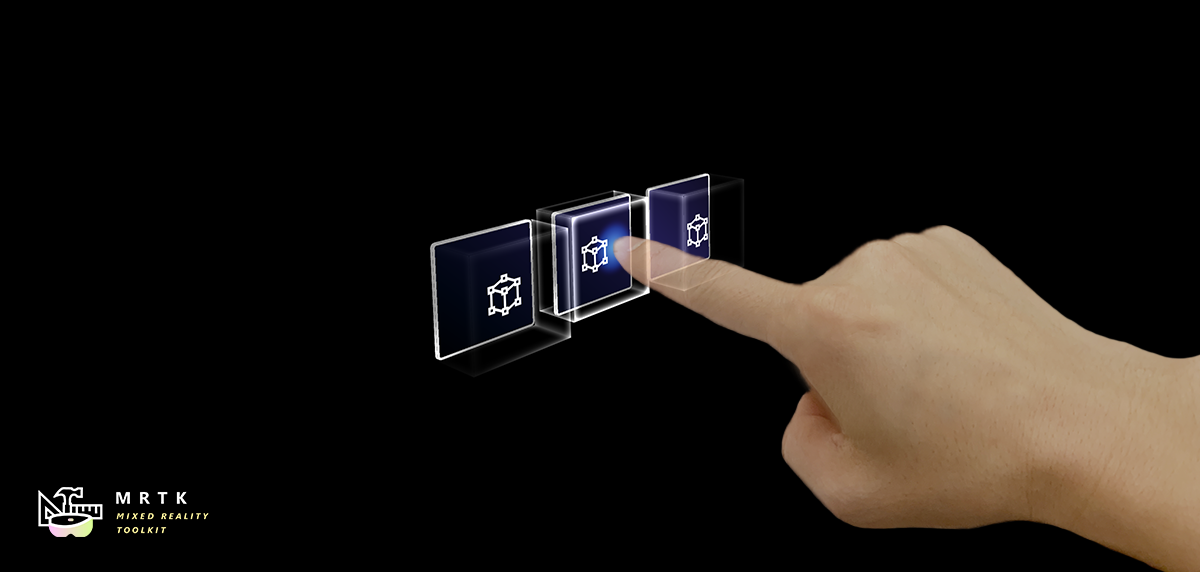

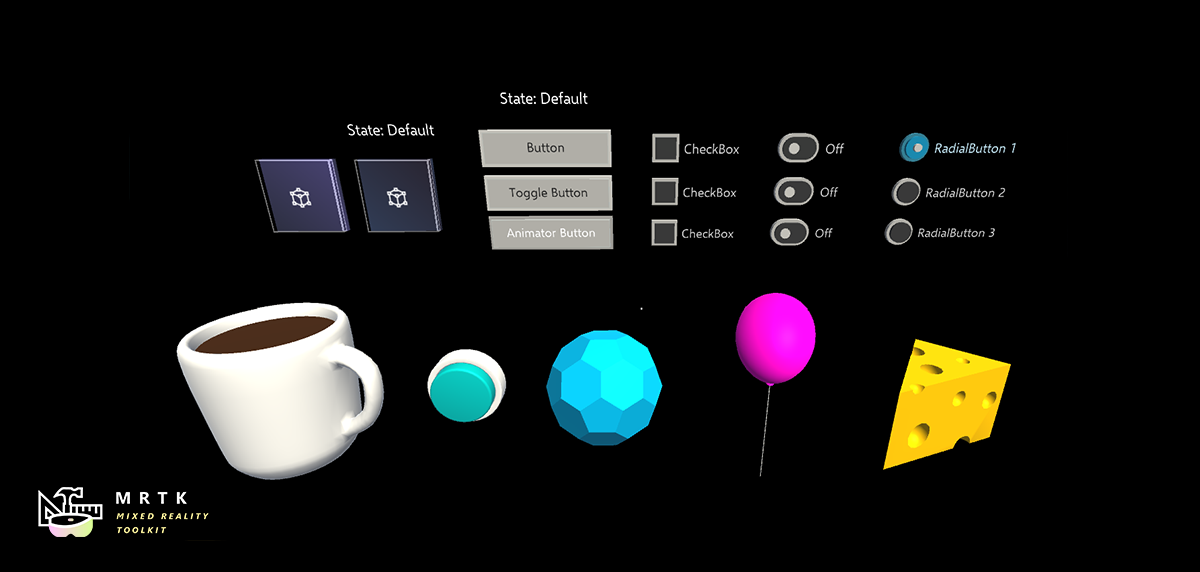

Button Button |

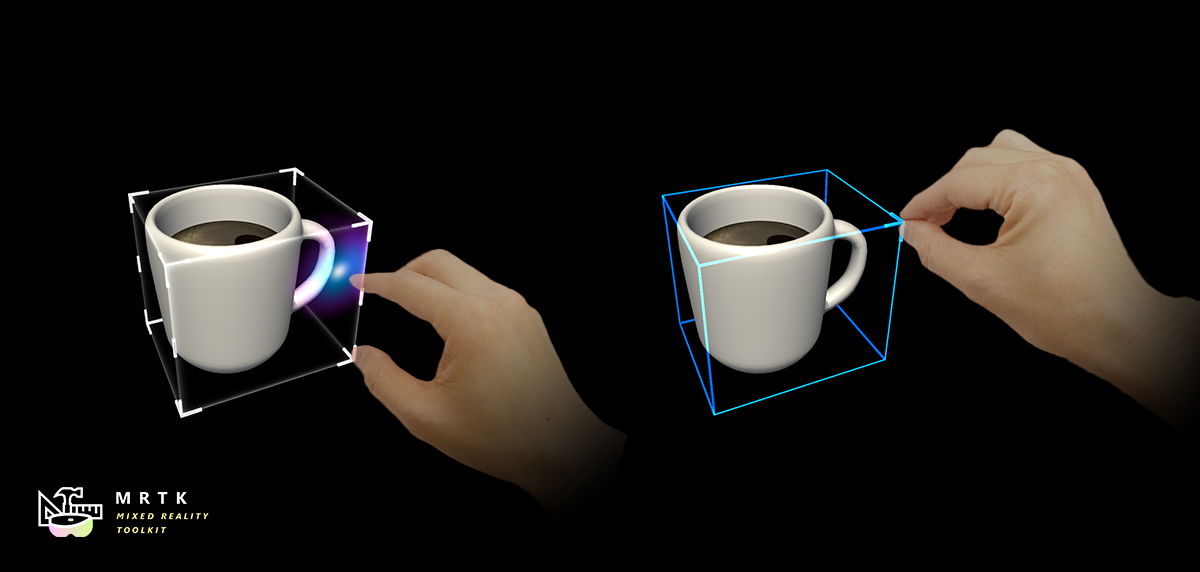

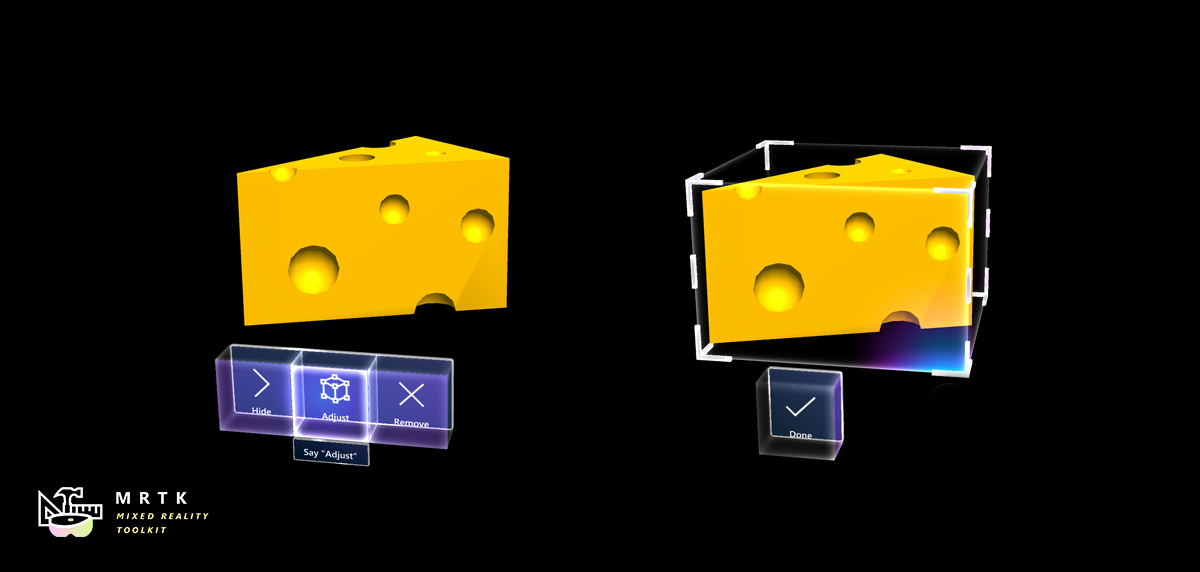

Bounding Box Bounding Box |

Manipulation Handler Manipulation Handler |

|---|---|---|

| A button control which supports various input methods including HoloLens 2's articulated hand | Standard UI for manipulating objects in 3D space | Script for manipulating objects with one or two hands |

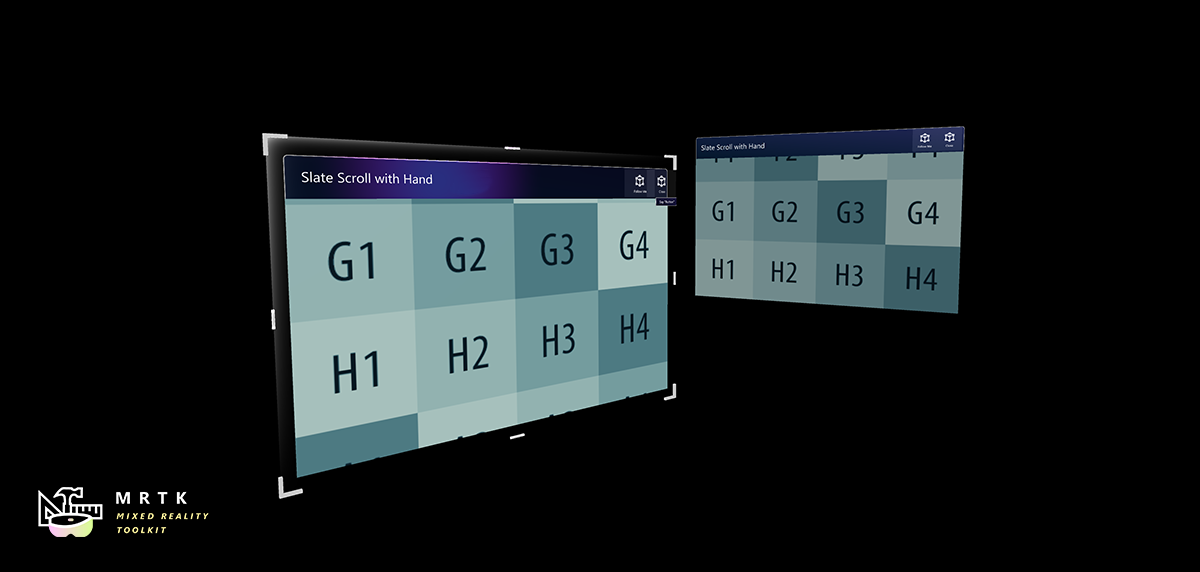

Slate Slate |

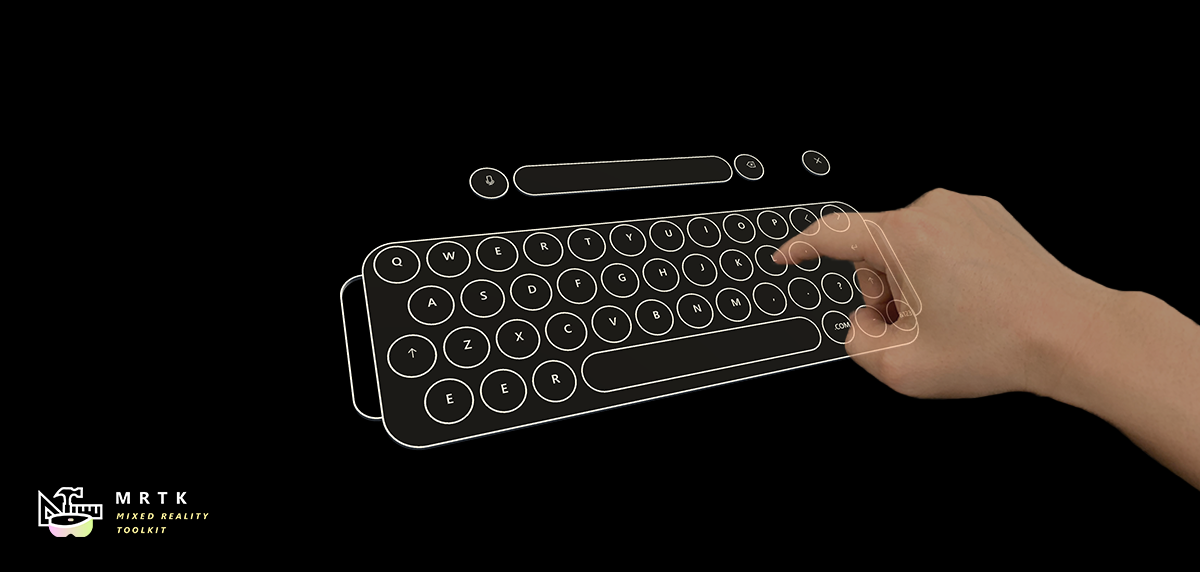

System Keyboard System Keyboard |

Interactable Interactable |

| 2D style plane which supports scrolling with articulated hand input | Example script of using the system keyboard in Unity | A script for making objects interactable with visual states and theme support |

Solver Solver |

Object Collection Object Collection |

Tooltip Tooltip |

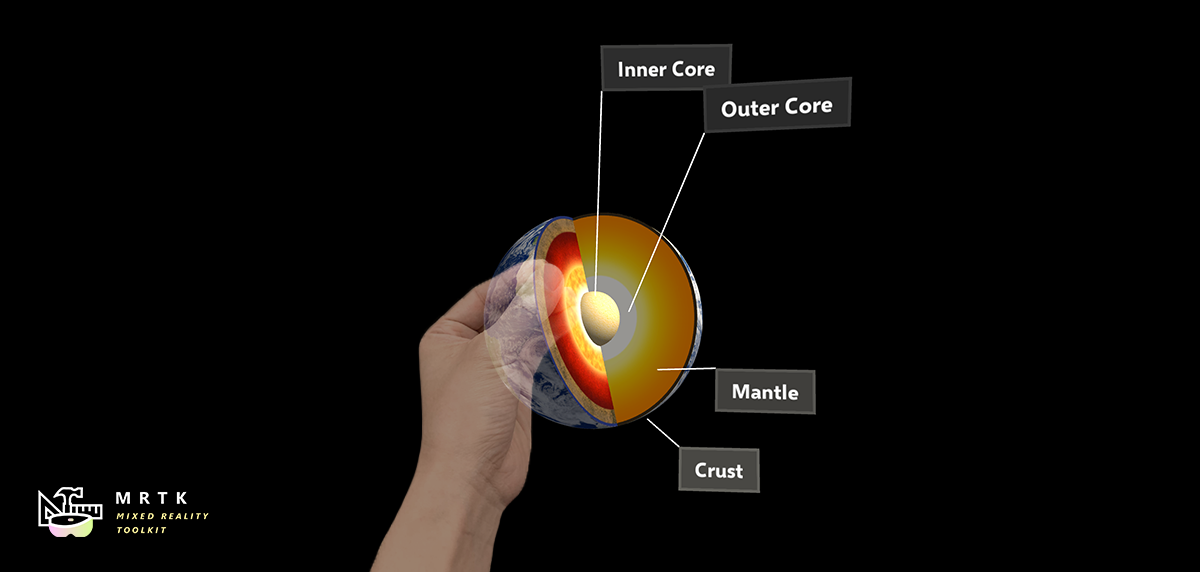

| Various object positioning behaviors such as tag-along, body-lock, constant view size and surface magnetism | Script for lay out an array of objects in a three-dimensional shape | Annotation UI with flexible anchor/pivot system which can be used for labeling motion controllers and object. |

App Bar App Bar |

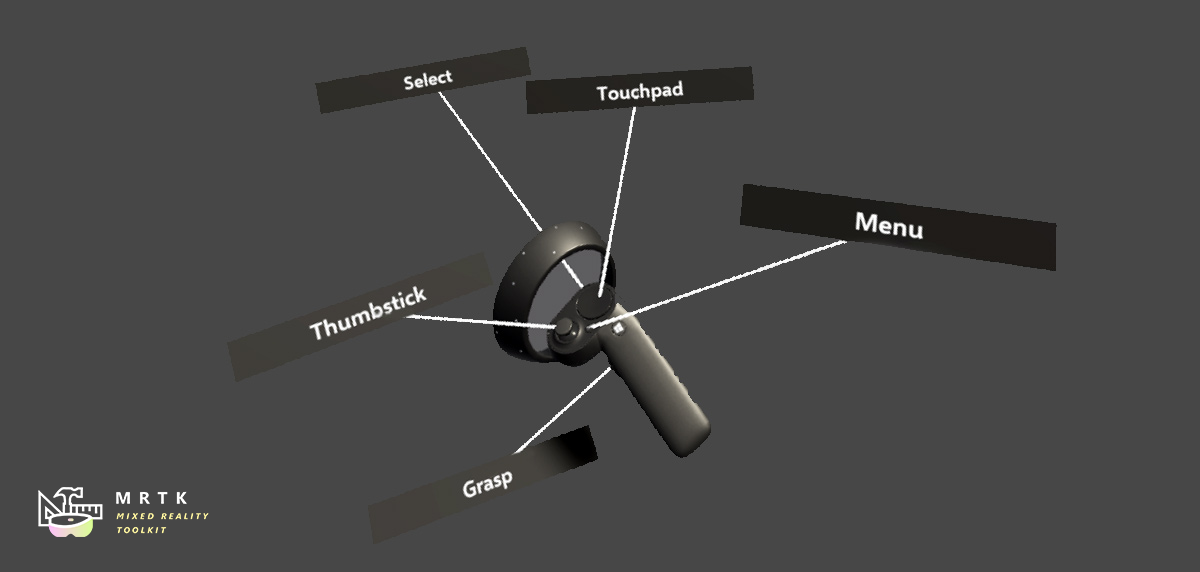

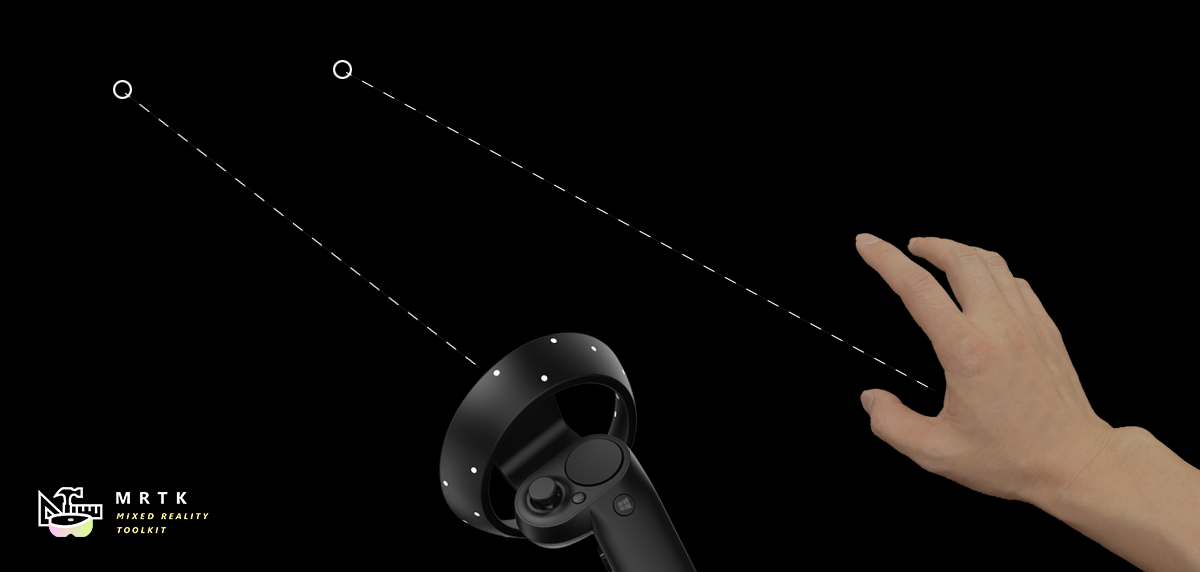

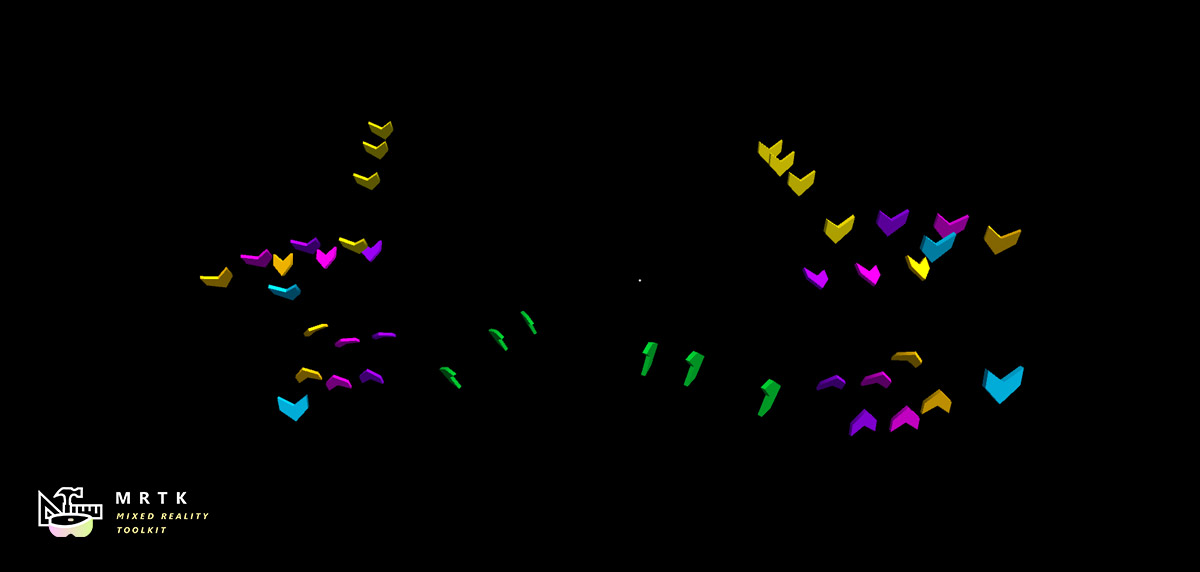

Pointers Pointers |

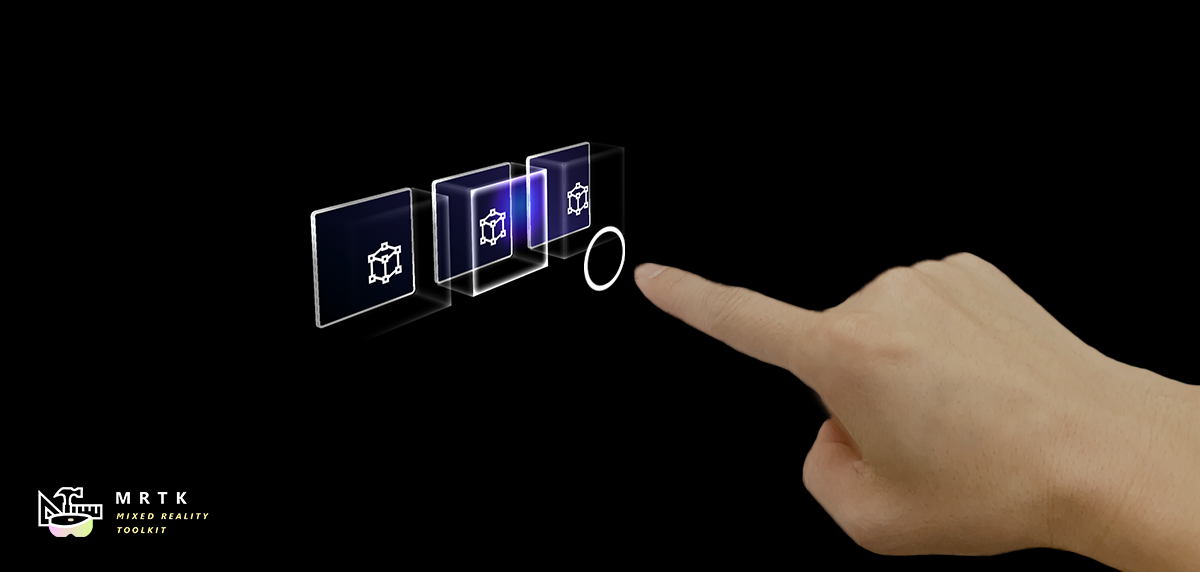

Fingertip Visualization Fingertip Visualization |

| UI for Bounding Box's manual activation | Learn about various types of pointers | Visual affordance on the fingertip which improves the confidence for the direct interaction |

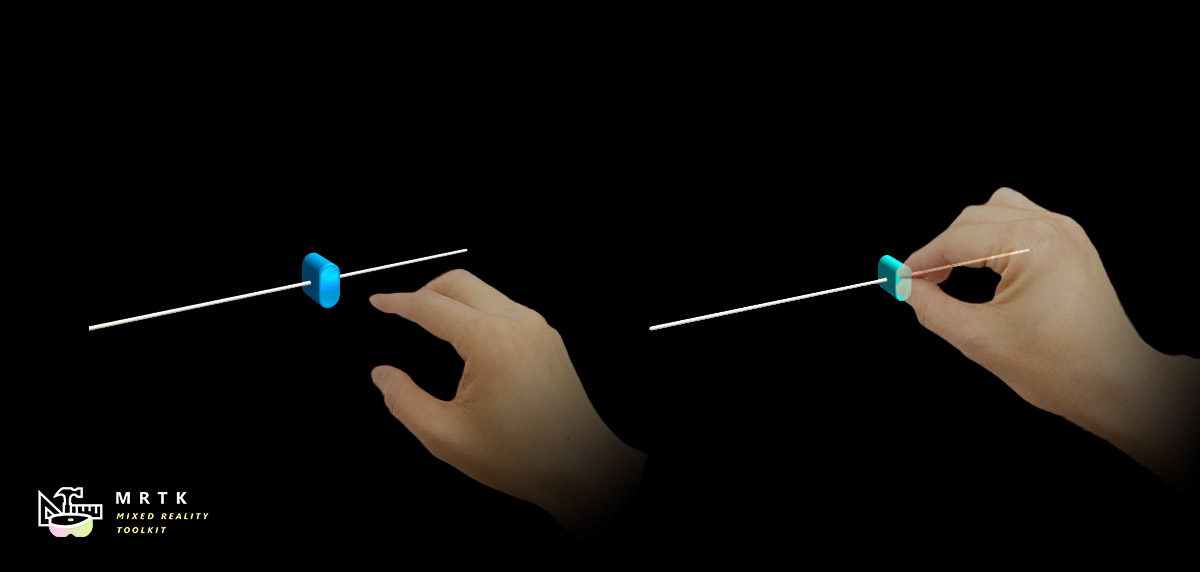

Slider Slider |

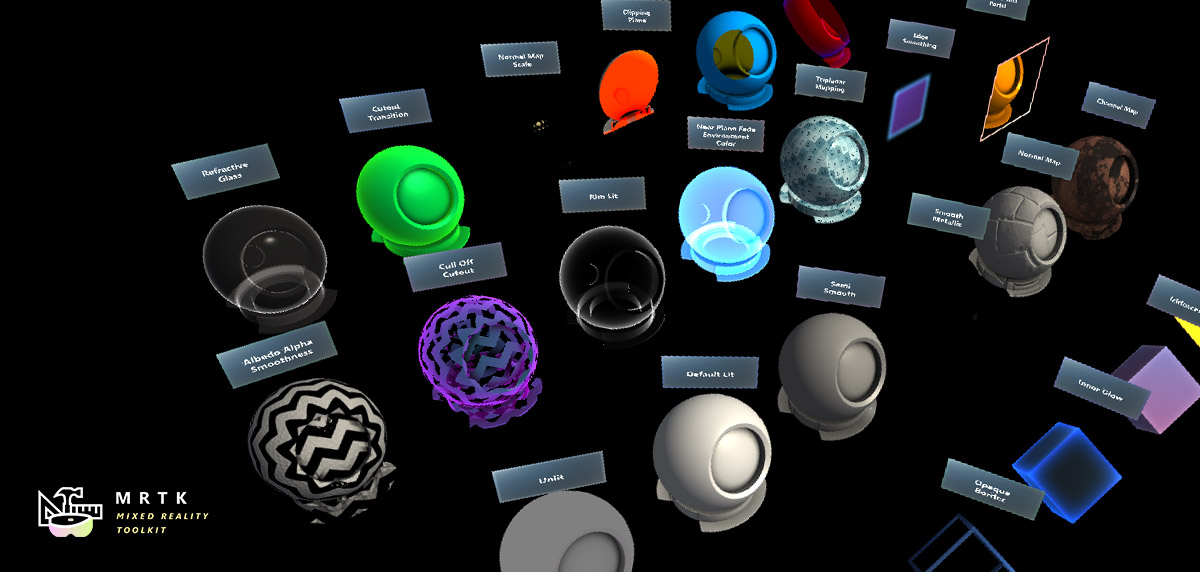

MRTK Standard Shader MRTK Standard Shader |

Hand Joint Chaser Hand Joint Chaser |

| Slider UI for adjusting values supporting direct hand tracking interaction | MRTK's Standard shader supports various Fluent design elements with performance | Demonstrates how to use Solver to attach objects to the hand joints |

| Combine eyes, voice and hand input to quickly and effortlessly select holograms across your scene | Learn how to auto scroll text or fluently zoom into focused content based on what you are looking at | Examples for logging, loading and visualizing what users have been looking at in your app |
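The Object Collection entry describes laying out an array of objects in a three-dimensional shape. As a rough illustration of the geometry such a layout involves, here is a minimal sketch that wraps a grid of objects around a cylinder centered on the viewer. It is written in Python rather than C#, and `cylinder_layout` and its parameters are hypothetical names chosen for this example; it is not MRTK's Object Collection component or API, which is a Unity script with its own settings.

```python
import math


def cylinder_layout(count, columns, radius, cell_width, cell_height):
    """Hypothetical helper (not part of MRTK): positions on a cylinder.

    Assumes the viewer stands on the cylinder's vertical (y) axis at the
    origin, looking down +z. Returns a list of (x, y, z) tuples.
    """
    if count < 0 or columns < 1 or radius <= 0:
        raise ValueError("need count >= 0, columns >= 1 and radius > 0")
    # Arc length divided by radius gives the angle (radians) one cell spans.
    angle_step = cell_width / radius
    rows = math.ceil(count / columns)
    positions = []
    for i in range(count):
        row, col = divmod(i, columns)  # row-major order
        # Center the full grid on the forward (+z) axis.
        theta = (col - (columns - 1) / 2) * angle_step
        y = ((rows - 1) / 2 - row) * cell_height
        positions.append((radius * math.sin(theta), y, radius * math.cos(theta)))
    return positions


if __name__ == "__main__":
    # Six objects in two rows of three, 1.5 units from the axis.
    for x, y, z in cylinder_layout(6, 3, radius=1.5, cell_width=0.4, cell_height=0.3):
        print(f"({x:.3f}, {y:.3f}, {z:.3f})")
```

Because every object ends up exactly `radius` away from the cylinder axis, items stay equidistant from a viewer standing on that axis; a flat or spherical layout would only change how a (row, column) pair is mapped to a position.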

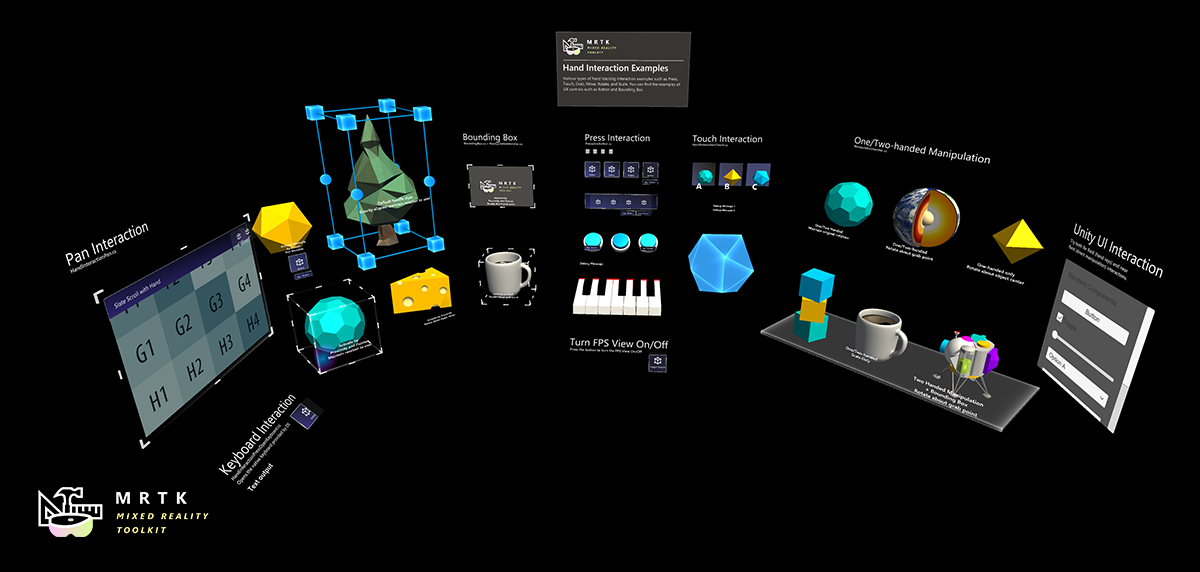

Explore MRTK's various types of interactions and UI controls in this example scene.

You can find other example scenes in the `Assets/MixedRealityToolkit.Examples/Demos` folder.

- Join the conversation around MRTK on Slack.

- Ask questions about using MRTK on Stack Overflow with the MRTK tag.

- Search for known issues or file a new issue if you find something broken in MRTK code.

- Join our weekly community shiproom to hear directly from the feature team. (link coming soon)

- Dive deep into the project plan and learn how you can contribute to MRTK in our wiki.

- For issues related to Windows Mixed Reality that aren't directly related to MRTK, check out the Windows Mixed Reality Developer Forum.

This project has adopted the Microsoft Open Source Code of Conduct. For more information, see the Code of Conduct FAQ or contact opencode@microsoft.com with any additional questions or comments.

| Discover | Design | Develop | Distribute |
|---|---|---|---|
| Learn to build mixed reality experiences for HoloLens and immersive headsets (VR). | Get design guides. Build user interface. Learn interactions and input. | Get development guides. Learn the technology. Understand the science. | Get your app ready for others and consider creating a 3D launcher. |

| Spatial Anchors | Speech Services | Vision Services |
|---|---|---|
| Spatial Anchors is a cross-platform service that allows you to create Mixed Reality experiences using objects that persist their location across devices over time. | Discover and integrate Azure-powered speech capabilities like speech to text, speaker recognition, or speech translation into your application. | Identify and analyze your image or video content using Vision Services like computer vision, face detection, emotion recognition, or video indexer. |

You can find our planning material on our wiki under the Project Management section. You can always see the items the team is actively working on in the Iteration Plan issue.

View the How To Contribute wiki page for the most up-to-date instructions on contributing to the Mixed Reality Toolkit!