Deep Exploration via Bootstrapped DQN, NIPS 2016

This is a PyTorch implementation of the Bootstrapped DQN paper.

Overview

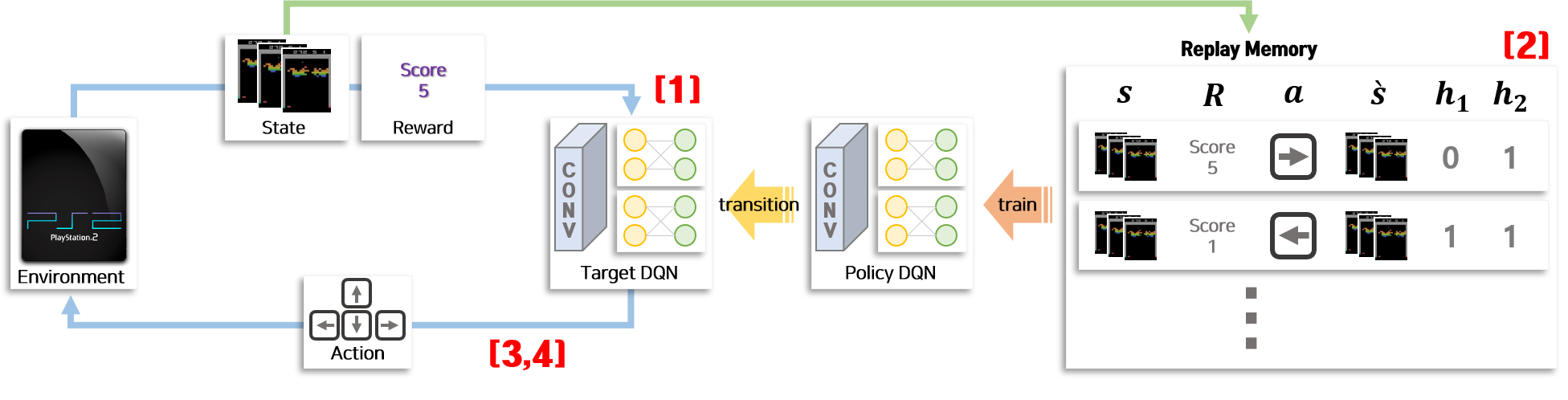

Bootstrapped DQN differs from DQN (Deep Q-Network) in four main ways:

[1] Add multiple heads to the DQN architecture so that it acts as an ensemble model.

[2] Add a bootstrapped replay memory whose masks are drawn with a Bernoulli function.

[3] During training, choose one head of the ensemble DQN for each episode and let it act for that episode.

[4] During evaluation, choose the action by voting over the best action of each head.
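The points [2] to [4] above can be sketched in a few lines of plain Python. This is only an illustrative sketch, not the code in this repository: the function names (`sample_mask`, `pick_episode_head`, `vote_action`) and the mask probability `BERNOULLI_P = 0.5` are assumptions made for the example, and the Q-values are toy numbers rather than network outputs.

```python
import random
from collections import Counter

N_ENSEMBLE = 9      # number of heads, matching --n_ensemble=9 in the training command
BERNOULLI_P = 0.5   # ASSUMED mask probability; not taken from this repository


def sample_mask(n_heads, p, rng):
    """Draw one Bernoulli(p) flag per head.

    Head k is trained on the stored transition only if mask[k] == 1.
    """
    return [1 if rng.random() < p else 0 for _ in range(n_heads)]


def pick_episode_head(n_heads, rng):
    """Training: pick one head uniformly at random to act for a whole episode."""
    return rng.randrange(n_heads)


def greedy_action(q_values):
    """Index of the largest Q-value of a single head."""
    return max(range(len(q_values)), key=lambda a: q_values[a])


def vote_action(q_values_per_head):
    """Evaluation: every head votes for its greedy action; the most common vote wins."""
    votes = [greedy_action(q) for q in q_values_per_head]
    return Counter(votes).most_common(1)[0][0]


rng = random.Random(0)
mask = sample_mask(N_ENSEMBLE, BERNOULLI_P, rng)   # stored with the transition
head = pick_episode_head(N_ENSEMBLE, rng)          # fixed until the episode ends

# Toy Q-values for 3 heads and 3 actions: the heads vote 1, 1 and 0.
toy_q = [[0.1, 0.9, 0.0], [0.2, 0.7, 0.1], [0.8, 0.1, 0.1]]
action = vote_action(toy_q)
```

In a real agent the mask would be saved in the replay memory next to each transition and used to zero out the loss of the heads that should not see it.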

A detailed description is available on my blog, which is written in Korean.

Training Code

    python breakout.py \
        --mode=train \
        --env=BreakoutDeterministic-v4 \
        --device=gpu \
        --memory_size=1e5 \
        --n_ensemble=9 \
        --out_dir=results

This is how you train the Atari game Breakout. There are many hyperparameters to set; the paper's settings are provided as defaults. Make sure you have enough memory to train this model, because the replay memory takes a lot of resources.
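To get a feeling for how much memory the replay memory needs, here is a rough back-of-the-envelope estimate. It is a sketch under stated assumptions, not a measurement of this repository: it assumes the usual DQN preprocessing of 84x84 grayscale `uint8` frames stacked 4 at a time, that state and next state are stored separately, and one byte per head for the bootstrap mask. Actions, rewards and done flags are ignored. The function name `replay_memory_bytes` is hypothetical.

```python
def replay_memory_bytes(memory_size, n_ensemble,
                        frame_hw=(84, 84), stack=4, store_next_state=True):
    """Rough size of the replay memory in bytes.

    ASSUMPTIONS (not read from this repository): uint8 frames of size
    frame_hw, `stack` frames per state, and one mask byte per head.
    """
    frame_bytes = frame_hw[0] * frame_hw[1]      # 1 byte per uint8 pixel
    states_per_transition = 2 if store_next_state else 1
    per_transition = states_per_transition * stack * frame_bytes + n_ensemble
    return memory_size * per_transition


# Values from the training command: --memory_size=1e5, --n_ensemble=9
total = replay_memory_bytes(int(1e5), 9)
print(f"{total / 2**30:.2f} GiB")
```

Under these assumptions the estimate comes to a little over 5 GiB, so a memory-efficient layout (for example, storing each frame once) or a smaller `--memory_size` may be needed on machines with limited RAM.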

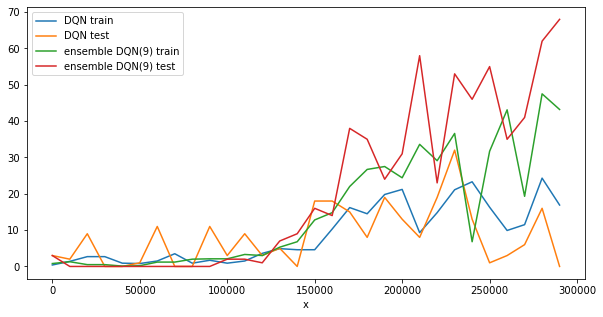

Result Visualization

    python breakout.py \
        --mode=test \
        --device=gpu \
        --pretrained_dir=my_dir \
        --out_dir=animation \
        --refer_img=resource

After training your own model, you can run some games with it.

I added some extra visualization code for you to enjoy.

To make an animation, set the mode option to test and the refer_img option to resource.

The refer_img option gives more enjoyable GIF animations.